AIhub.org

Can machines read our minds?

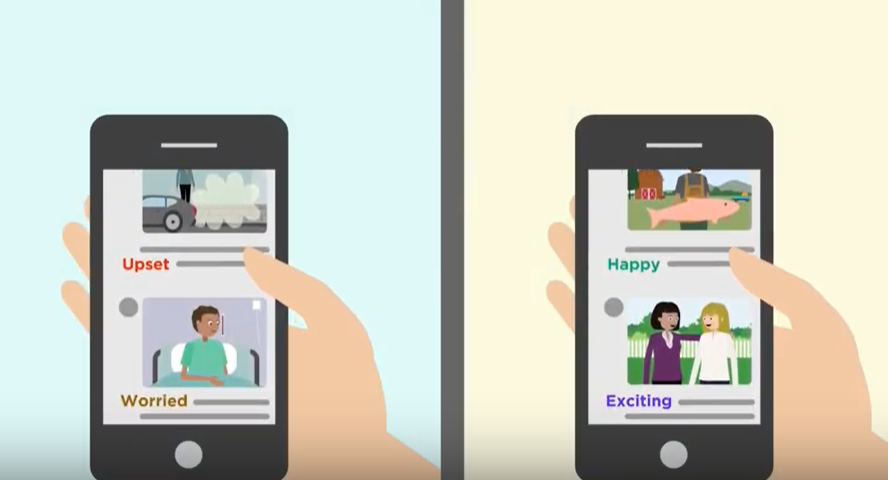

Many of us spend a significant portion of our day online and, through our interactions with social media and IoT devices, leave a trail of “digital footprints” in our wake. Could machine learning algorithms use this data to infer psychometric information about us, including our emotions, attitudes, aptitudes, beliefs and more? A team at the University of Bristol have been finding out.

In an article published in Minds and Machines, the Bristol team review 26 empirical studies that deploy algorithms to predict personal information from online data. One of the review's key aims is to better understand a research trend that has emerged across a wide range of research communities, and to explore the philosophical and ethical consequences of the techniques being developed. The team wanted to understand what kinds of psychological information can be inferred from our online activities, and whether an intelligent system could use this information to improve its ability to steer our behaviour towards its own goals.

The reviewed studies covered the inference, from digital footprints, of five kinds of information: affect and emotion, aptitudes and skills, attitudes and orientations, personality, and disorders and conditions. The team found that machine learning algorithms can infer such information about us from online samples of our behaviour. They also found that these algorithms can be used to segment users into groups that share a psychological trait or mental state.
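To make the idea of inference and segmentation concrete, here is a toy sketch. It is not the method of any study in the review, which span many different techniques. It assumes users are represented as binary vectors recording whether they interacted with three hypothetical pages, and that a hypothetical trait label is known for a few users. A logistic-regression model, a common and simple classifier chosen here only for illustration, is fitted by gradient descent, and new users are then split into "likely" and "unlikely" groups by their predicted probability of having the trait. All data and names (`FOOTPRINTS`, `LABELS`, `train_logistic`, `segment`) are invented for this example.

```python
import math

# Hypothetical toy data (not real): each row records whether a user
# interacted with three hypothetical pages; LABELS marks a hypothetical
# trait (1 = present, 0 = absent).
FOOTPRINTS = [
    [1, 1, 0],
    [1, 0, 0],
    [0, 1, 1],
    [0, 0, 1],
]
LABELS = [1, 1, 0, 0]


def sigmoid(z: float) -> float:
    """Map a real-valued score to a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def predict(w: list[float], b: float, x: list[int]) -> float:
    """Predicted probability that a user with footprint x has the trait."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def train_logistic(xs, ys, lr: float = 0.5, epochs: int = 500):
    """Fit logistic-regression weights and bias by batch gradient descent."""
    n_features = len(xs[0])
    w = [0.0] * n_features
    b = 0.0
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for x, y in zip(xs, ys):
            # Error for this user: predicted probability minus true label.
            err = predict(w, b, x) - y
            for j in range(n_features):
                grad_w[j] += err * x[j]
            grad_b += err
        # Average the gradient over users and take one descent step.
        w = [wi - lr * g / len(xs) for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / len(xs)
    return w, b


def segment(w, b, users: dict[str, list[int]], threshold: float = 0.5):
    """Split users into two groups by predicted probability of the trait."""
    groups: dict[str, list[str]] = {"likely": [], "unlikely": []}
    for user_id, x in users.items():
        key = "likely" if predict(w, b, x) >= threshold else "unlikely"
        groups[key].append(user_id)
    return groups


# Usage: fit on the toy data, then segment two new hypothetical users.
w, b = train_logistic(FOOTPRINTS, LABELS)
print(segment(w, b, {"u1": [1, 0, 0], "u2": [0, 0, 1]}))
# → {'likely': ['u1'], 'unlikely': ['u2']}
```

Real studies work with far richer signals and larger datasets, but the two steps are the same in spirit: learn a mapping from behavioural samples to a psychological variable, then use the predictions to group people.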

The review covers only a portion of the many studies in which an algorithm could use different types of behavioural samples to infer information about us. Many more methods are still being studied and developed across different communities for the same purpose.

As the types and amount of interaction between us and our online devices increase, and as new types of sensors for measuring behavioural signals are developed, it is expected that a machine learning algorithm combining these sources of information could form a very accurate picture of us. The likely convergence of these technologies and methods raises many ethical issues. These issues will not be solved by legislation alone, and individual research communities should not be expected to develop ethical guidelines on their own. Rather, it is imperative that policymakers and researchers understand the scope of these developments, so that they can better inform the ongoing discussion about the growing use and convergence of such technologies.

Read the published papers to find out more:

Burr, C. & Cristianini, N. (2019). Can Machines Read our Minds? Minds & Machines.

Burr, C., Cristianini, N. & Ladyman, J. (2018). An Analysis of the Interaction Between Intelligent Software Agents and Human Users. Minds & Machines, 28: 735.

This work is part of the ERC ThinkBIG project, led by Principal Investigator Nello Cristianini at the University of Bristol.