AIhub.org

D4RL: building better benchmarks for offline reinforcement learning

By Justin Fu

In the last decade, one of the biggest drivers of success in machine learning has arguably been the rise of high-capacity models such as neural networks, combined with large datasets such as ImageNet, to produce accurate models. While deep neural networks have been applied successfully to reinforcement learning (RL) in domains such as robotics, poker, board games, and team-based video games, a significant barrier to getting these methods working on real-world problems is the difficulty of large-scale online data collection. Not only is online data collection time-consuming and expensive, it can also be dangerous in safety-critical domains such as driving or healthcare. For example, it would be unreasonable to allow reinforcement learning agents to explore, make mistakes, and learn while controlling an autonomous vehicle or treating patients in a hospital. This makes learning from pre-collected experience enticing, and we are fortunate that in many of these domains, such as self-driving cars, healthcare, and robotics, large datasets already exist. Therefore, the ability of RL algorithms to learn offline from these datasets (a setting referred to as offline or batch RL) has enormous potential to shape the way we build machine learning systems in the future.

In off-policy RL, the algorithm learns from experience collected online from an exploration or behavior policy.

In offline RL, we assume all experience is collected offline and is fixed, and no additional data can be collected.

The predominant method for benchmarking offline deep RL has been limited to a single scenario: the dataset is generated from some random or previously trained policy, and the goal of the algorithm is to improve in performance over the original policy [e.g., 1,2,3,4,5,6]. The problem with this approach is that real-world datasets are unlikely to be generated by a single RL-trained policy, and many of the situations not covered by this evaluation method are unfortunately known to be problematic for RL algorithms. This makes it difficult to know how well our algorithms will perform when actually used outside of these benchmark tasks.

In order to develop effective algorithms for offline RL, we need widely available benchmarks that are easy to use and can accurately measure progress on this problem. Using real-world data, such as in autonomous driving, would make a great indicator for progress, but evaluation of the algorithm becomes a challenge. Most research labs do not have the resources to deploy their algorithm on a real vehicle in order to test if their method really works. To fill the gap between realistic but infeasible real-world tasks, and the somewhat lacking but easy-to-use simulated tasks, we recently introduced the D4RL benchmark (Datasets for Deep Data-Driven Reinforcement Learning) for offline RL. The goal of D4RL is simple: we propose tasks that are designed to exercise dimensions of the offline RL problem which may make real-world application difficult, while keeping the entire benchmark in simulated domains that allow any researcher around the world to efficiently evaluate their method. In total, the D4RL benchmark includes over 40 tasks across 7 qualitatively distinct domains that cover application areas such as robotic manipulation, navigation, and autonomous driving.

What properties make offline RL difficult?

In a previous blog post, we discussed that simply running an ordinary off-policy RL algorithm on the offline problem is typically insufficient, and in the worst case can cause the algorithm to diverge. There are in fact many factors which can cause poor performance for RL algorithms, which we use to guide the design of D4RL.

Narrow and biased data distributions are a common property in real-world datasets that can create problems for offline RL algorithms. By narrow data distributions, we mean that the dataset lacks significant coverage in the state-action space of the problem. A narrow data distribution does not by itself mean the task is unsolvable, but it can make learning difficult; expert demonstrations, for example, often produce narrow distributions. An intuitive reason why narrow data distributions are difficult to learn from is that they often lack the mistakes that are necessary for an algorithm to learn. For example, in healthcare, datasets are often biased towards serious cases. We may only see very sick patients get treated with medicine (with a small percentage of them living), and mildly sick patients sent home without treatment (with nearly all of them living). A naive algorithm could learn that treatment causes death, but this is simply because we never see very sick patients go untreated. If we did, we would learn that the survival rate with treatment is much higher.
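The healthcare example can be made concrete with a few lines of arithmetic. The sketch below uses invented patient counts (they are not from any real dataset) and hypothetical helper names; it only illustrates how pooling a biased log makes treatment look harmful, and how the picture changes once the missing "very sick, untreated" group is included.

```python
# Hypothetical counts, invented purely for illustration.
# Key: (severity, treated) -> value: (patients, survivors)
logged = {
    ("severe", True): (100, 20),   # very sick and treated: few survive
    ("mild", False): (100, 95),    # mildly sick and sent home: nearly all survive
}


def pooled_survival(records, treated):
    """Survival rate for a treatment flag, ignoring severity (the naive view)."""
    patients = sum(n for (_, t), (n, _) in records.items() if t == treated)
    survivors = sum(s for (_, t), (_, s) in records.items() if t == treated)
    return survivors / patients


def survival_given(records, severity, treated):
    """Survival rate within one severity group."""
    patients, survivors = records[(severity, treated)]
    return survivors / patients


naive_treated = pooled_survival(logged, treated=True)
naive_untreated = pooled_survival(logged, treated=False)

# The group the log never contains (again an assumed number).
complete = dict(logged)
complete[("severe", False)] = (100, 5)

severe_treated = survival_given(complete, "severe", True)
severe_untreated = survival_given(complete, "severe", False)

print(f"naive view:   treated={naive_treated:.2f}, untreated={naive_untreated:.2f}")
print(f"severe cases: treated={severe_treated:.2f}, untreated={severe_untreated:.2f}")
```

In the logged data alone, treatment appears to lower survival; within the severe group, under the assumed counterfactual, it raises it. An offline algorithm only ever sees the first table.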

Data generated from non-representable policies can arise from several realistic situations. For example, human demonstrators can use cues not observable to an RL agent, leading to partial observability issues. Some controllers can utilize state or memory to create data that cannot be represented by any Markovian policy. These situations can cause several issues for offline RL algorithms. First, being unable to represent the policy class has been shown to create bias in the Q-learning algorithm. Second, a crucial step in many offline RL algorithms, such as those based on importance weighting, is to estimate the action probabilities in the dataset. Being unable to represent the policy that generated the data can create an additional source of error.
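As a rough illustration of the second issue, the sketch below builds a hypothetical controller with one bit of memory and then estimates its action probabilities in two ways. The controller, the single-observation environment, and the function names are all assumptions made for this example. A Markovian estimate (conditioning on the observation only) reports 50/50 action probabilities, even though every action was fully determined by the controller's memory.

```python
from collections import Counter, defaultdict


def alternating_controller(num_steps):
    """Hypothetical controller with memory: it alternates between two actions."""
    last = "right"
    for _ in range(num_steps):
        last = "left" if last == "right" else "right"
        yield last


# The agent always receives the same observation "s0", so the
# controller's memory is invisible in the logged (state, action) pairs.
dataset = [("s0", action) for action in alternating_controller(1000)]


def normalize(counts):
    """Turn nested counts into conditional probabilities."""
    return {
        key: {a: c / sum(counter.values()) for a, c in counter.items()}
        for key, counter in counts.items()
    }


def fit_markov_policy(data):
    """Estimate P(action | state) by counting."""
    counts = defaultdict(Counter)
    for state, action in data:
        counts[state][action] += 1
    return normalize(counts)


def fit_history_policy(data):
    """Estimate P(action | state, previous action) by counting."""
    counts = defaultdict(Counter)
    for (_, prev_action), (state, action) in zip(data, data[1:]):
        counts[(state, prev_action)][action] += 1
    return normalize(counts)


markov_estimate = fit_markov_policy(dataset)
history_estimate = fit_history_policy(dataset)

print(markov_estimate["s0"])                # both actions at 0.5
print(history_estimate[("s0", "left")])     # "right" with probability 1.0
```

Any quantity that divides by the estimated action probability, such as an importance weight, would inherit this error at every step if only the Markovian estimate were available.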

Multitask and undirected data is a property we believe will be prevalent among large, cheaply collected datasets. Imagine you are an RL practitioner who wishes to procure a large dataset for training dialog agents and personal assistants. The simplest way to do so would be to log conversations between real humans, or to scrape real conversations from the internet. In these situations, the recorded conversations may not have anything to do with the particular task you are trying to accomplish, such as booking a flight. However, many pieces of the conversation flow could be useful and applicable to more than just the conversation they were observed in. One way to visualize this effect is with the following example. Imagine an agent trying to get from point A to C that only observes paths from A to B and from B to C. The agent can "stitch" together the corresponding halves of the observed paths to form the shortest path from A to C.
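The A-to-B, B-to-C example can be sketched as a small graph search over logged transitions. This is only an illustration under simplifying assumptions: states are discrete labels, the intermediate waypoints `a1` and `b1` are made up, and breadth-first search stands in for what an offline RL algorithm would do implicitly through value backups rather than explicit search.

```python
from collections import deque

# Two logged trajectories; neither one goes from A to C on its own.
# The waypoint names "a1" and "b1" are hypothetical.
observed_paths = [
    ["A", "a1", "B"],
    ["B", "b1", "C"],
]


def build_graph(paths):
    """Collect every observed transition s -> s_next into an adjacency map."""
    graph = {}
    for path in paths:
        for state, next_state in zip(path, path[1:]):
            graph.setdefault(state, set()).add(next_state)
    return graph


def stitch(paths, start, goal):
    """Breadth-first search restricted to transitions seen in the data."""
    graph = build_graph(paths)
    parents = {start: None}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            route = []
            while node is not None:
                route.append(node)
                node = parents[node]
            return route[::-1]
        for next_node in sorted(graph.get(node, ())):
            if next_node not in parents:
                parents[next_node] = node
                queue.append(next_node)
    return None  # the goal is not reachable from observed transitions


route = stitch(observed_paths, "A", "C")
print(route)
```

The returned route reuses the first trajectory up to B and the second from B onward, and it never leaves the set of transitions that appear in the data.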

Suboptimal data is another property which we expect to observe in realistic datasets, because it may not always be feasible to have expert demonstrations for every task we may wish to solve. In many domains, such as robotics, providing expert demonstrations is both tedious and time-consuming. A key advantage of using RL instead of a method such as imitation learning is that RL gives us a clear signal for how to improve a policy, meaning we can learn and improve from a much broader scope of datasets.
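A toy comparison may help show the difference between imitating suboptimal data and improving on it. The sketch below assumes a four-state chain with a goal at one end (not a D4RL task), a hand-built dataset in which the behavior policy moves away from the goal three times out of four, and plain tabular Q-iteration as a stand-in for an offline RL algorithm. Every state-action pair appears in the data, so the out-of-distribution problems discussed earlier do not arise here.

```python
from collections import Counter, defaultdict

GOAL, GAMMA = 3, 0.9
ACTIONS = (-1, +1)  # move left or right along the chain


def step(state, action):
    """Deterministic chain dynamics with reward 1 on reaching the goal."""
    next_state = min(max(state + action, 0), GOAL)
    done = next_state == GOAL
    return next_state, (1.0 if done else 0.0), done


# Suboptimal log: in each state, three "left" moves for every "right" move.
dataset = []
for state in range(GOAL):
    for action in (-1, -1, -1, +1):
        next_state, reward, done = step(state, action)
        dataset.append((state, action, reward, next_state, done))


def behavior_cloning(data):
    """Imitation: copy the most frequent action in each state."""
    counts = defaultdict(Counter)
    for state, action, *_ in data:
        counts[state][action] += 1
    return {s: c.most_common(1)[0][0] for s, c in counts.items()}


def offline_q_iteration(data, sweeps=50):
    """Tabular Q-iteration that only ever touches logged transitions."""
    q = defaultdict(float)
    for _ in range(sweeps):
        for state, action, reward, next_state, done in data:
            bootstrap = 0.0 if done else max(q[(next_state, b)] for b in ACTIONS)
            q[(state, action)] = reward + GAMMA * bootstrap
    return {s: max(ACTIONS, key=lambda b: q[(s, b)]) for s in range(GOAL)}


cloned_policy = behavior_cloning(dataset)
rl_policy = offline_q_iteration(dataset)

print("behavior cloning:", cloned_policy)
print("offline Q-iteration:", rl_policy)
```

Behavior cloning reproduces the majority "left" action in every state, while Q-iteration uses the reward signal to prefer "right" everywhere, even though that action is the minority in the log.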

D4RL Tasks

In order to capture the properties we outlined above, we introduce tasks spanning a wide variety of qualitatively different domains. All of the domains aside from Maze2D and AntMaze were originally proposed by other ML researchers, and we have adapted their work and generated datasets for use in the offline RL setting.


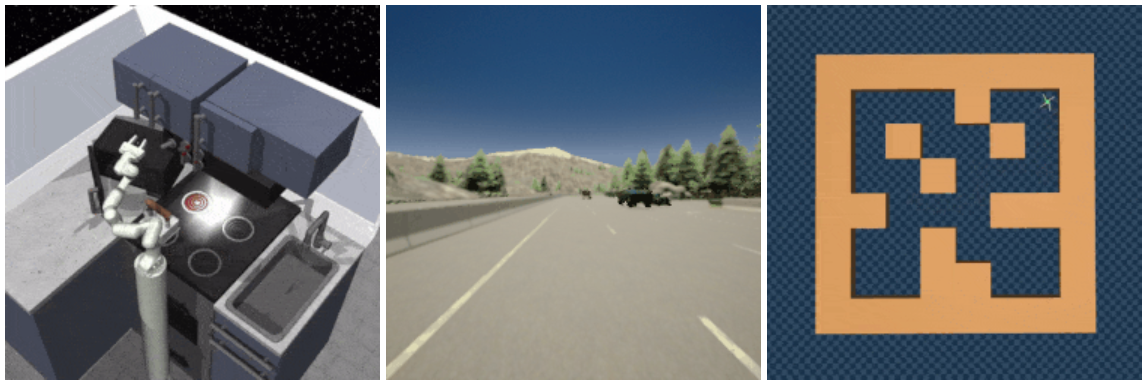

We begin with 3 navigation domains of increasing difficulty. The easiest of the domains is the Maze2D domain, in which the agent must navigate a ball along a 2D plane to a target goal location. There are 3 possible maze layouts (umaze, medium, and large). The AntMaze domain replaces the ball with the "Ant" quadruped robot, providing a more challenging low-level locomotion problem.

The navigation environments allow us to heavily test the "multitask and undirected" data property, since it is possible to "stitch" together existing trajectories to solve the task. The trajectories in the dataset of the large AntMaze task are shown below, with each trajectory plotted in a different color and the goal marked with a star. By learning how to repurpose existing trajectories, the agent can potentially solve the task without being forced to rely on extrapolating to unseen regions of the state space.

For a realistic, vision-based navigation task, we use the CARLA simulator. This task adds a layer of perceptual challenge on top of the two aforementioned Maze2D and AntMaze tasks.


We also include 2 realistic robotic manipulation tasks, using the Adroit (based on the Shadow Hand robot) and the Franka platforms. The Adroit domain contains 4 separate manipulation tasks, as well as human demonstrations recorded via motion capture. This provides a platform for studying the use of human-generated data within a simulated robotic platform.

The Franka kitchen environment places a robot in a realistic kitchen environment where objects can be freely interacted with. These include opening the microwave and various cabinets, moving the kettle, and turning on the lights and burners. The task is to reach a desired goal configuration of the objects in the scene.


We include two tasks from the Flow benchmark (which has been covered in a previous blog post). The Flow project proposes to use autonomous vehicles for reducing traffic congestion, which we believe is a compelling use case for offline RL. We include a ring layout and a highway merge layout.


Finally, we also include datasets for HalfCheetah, Hopper, and Walker2D from the OpenAI Gym MuJoCo benchmark. These tasks have been used extensively in prior work [1,2,3,4], and in order to ensure that evaluations are comparable across papers, we have standardized the datasets.

Future Directions

In the near future, we would be excited to see offline RL applications move from simulated domains to real-world domains where significant amounts of offline data are easily obtainable. There are many real-world problems in which offline RL could make a significant impact, including:

- Autonomous Driving

- Robotics

- Healthcare and treatment plans

- Education

- Recommender Systems

- Dialog agents

- And many more!

In many of these domains, there already exist large, pre-collected datasets of experience that are waiting to be used by a carefully designed offline RL algorithm. We believe that offline RL holds great promise as a potential paradigm to leverage vast amounts of existing sequential data within the flexible decision-making framework of reinforcement learning.

If you're interested in this benchmark, the code is available open-source on GitHub, and you can check out our website for more details.

This blog post is based on our recent paper:

- D4RL: Datasets for Deep Data-Driven Reinforcement Learning

Justin Fu, Aviral Kumar, Ofir Nachum, George Tucker, Sergey Levine

arXiv:2004.07219

This article was initially published on the BAIR blog, and appears here with the authors’ permission.