AIhub.org

Applying deep learning algorithms to the task of clearing space junk

By Tanya Petersen

EPFL researchers are at the forefront of developing some of the cutting-edge technology for the European Space Agency’s first mission to remove space debris from orbit.

How do you measure the pose – that is the 3D rotation and 3D translation – of a piece of space junk so that a grasping satellite can capture it in real time in order to successfully remove it from Earth’s orbit? What role will deep learning algorithms play? And, what is real time in space? These are some of the questions being tackled in a ground-breaking project, led by ClearSpace, a spin-off from the EPFL Space Center (eSpace), to develop technologies to capture and deorbit space debris.

With more than 34,000 pieces of junk orbiting the Earth, their removal is becoming a matter of safety. Earlier this month, an old Soviet Parus navigation satellite and a Chinese ChangZheng-4c rocket were involved in a near miss. In September, the International Space Station conducted a maneuver to avoid a possible collision with an unknown piece of space debris, whilst the crew of ISS Expedition 63 moved closer to their Soyuz MS-16 spacecraft to prepare for a potential evacuation. As more junk accumulates, satellite collisions could become commonplace, making access to space dangerous.

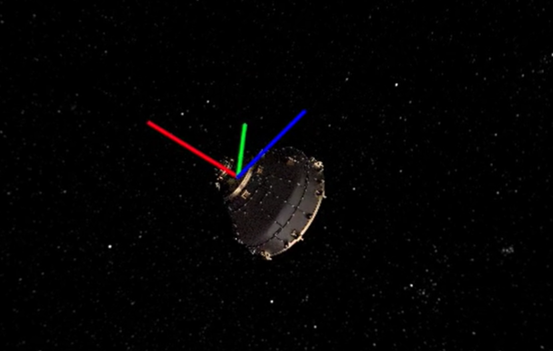

ClearSpace-1, the company’s first mission, is set for 2025. It will involve recovering the now-obsolete Vespa Upper Part, a payload adapter orbiting 660 kilometers above the Earth that was once part of the European Space Agency’s Vega rocket, to ensure that it re-enters the atmosphere and burns up in a controlled way.

One of the first challenges is to enable the robotic arms of the capture satellite to approach the Vespa from the correct angle. To this end, the satellite will use an attached camera, its ‘eyes’, to work out where the space junk is so that it can grasp the Vespa and then pull it back into the atmosphere. “A central focus is to develop deep learning algorithms to reliably estimate the 6D pose (3 rotations and 3 translations) of the target from video sequences, even though images taken in space are difficult. They can be over- or under-exposed, with many mirror-like surfaces,” says Mathieu Salzmann, a scientist spearheading the project within EPFL’s Computer Vision Laboratory, led by Professor Pascal Fua, in the School of Computer and Communication Sciences.
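To make the idea of a 6D pose concrete, here is a minimal Python sketch, assuming an ideal pinhole camera and one common Euler-angle convention; the article does not say which rotation parameterization or camera model the EPFL team uses. The three angles build a rotation matrix, the three translations move the object into the camera frame, and the projection gives the pixel where a point on the target would appear. The focal length, principal point and the 1 m rim point (half of the Vespa’s roughly 2 m diameter) are illustrative values, not mission parameters.

```python
import math

def rotation_from_euler(rx, ry, rz):
    """Rotation matrix R = Rz @ Ry @ Rx for angles (radians) about the x, y and z axes."""
    cx, sx = math.cos(rx), math.sin(rx)
    cy, sy = math.cos(ry), math.sin(ry)
    cz, sz = math.cos(rz), math.sin(rz)
    return [
        [cz * cy, cz * sy * sx - sz * cx, cz * sy * cx + sz * sx],
        [sz * cy, sz * sy * sx + cz * cx, sz * sy * cx - cz * sx],
        [-sy, cy * sx, cy * cx],
    ]

def to_camera_frame(R, t, p):
    """Apply the 6D pose: rotate the object-frame point p by R, then translate by t."""
    return [sum(R[i][j] * p[j] for j in range(3)) + t[i] for i in range(3)]

def project(p_cam, f, u0, v0):
    """Pinhole projection of a camera-frame point to pixel coordinates (u, v)."""
    x, y, z = p_cam
    if z <= 0:
        raise ValueError("point is behind the camera")
    return (f * x / z + u0, f * y / z + v0)

# Hypothetical example: a point on the rim of a ~2 m diameter target, 10 m ahead.
rim_point = [1.0, 0.0, 0.0]          # object frame, metres
f, u0, v0 = 1000.0, 640.0, 512.0     # illustrative camera intrinsics, pixels

R = rotation_from_euler(0.0, 0.0, 0.0)   # 3 rotations
t = [0.0, 0.0, 10.0]                     # 3 translations, metres
print(project(to_camera_frame(R, t, rim_point), f, u0, v0))  # (740.0, 512.0)

# Rotating the target 90 degrees about the camera's optical axis moves the
# same rim point to approximately (640, 612).
R90 = rotation_from_euler(0.0, 0.0, math.pi / 2)
print(project(to_camera_frame(R90, t, rim_point), f, u0, v0))
```

A pose estimator solves the inverse problem: given the image, it recovers the six numbers that place the known 3D model so that it matches what the camera sees.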

However, there’s a catch. Nobody has actually seen the Vespa in the seven years it has spent spinning in the vacuum of space. We know it is about 2 meters in diameter, with dark, slightly shiny carbon fiber, but does it still look like that?

EPFL’s Realistic Graphics Lab is simulating what this piece of space junk looks like, producing the ‘training material’ that will help Salzmann’s deep learning algorithms improve over time. “We are producing a database of synthetic images of the target object, including both the Earth backdrop reconstructed from hyperspectral satellite imagery, and a detailed 3D model of the Vespa upper stage. These synthetic images are based on measurements of real-world material samples of aluminium and carbon fiber panels, acquired using our lab’s goniophotometer. This is a large robotic device that spins around a test swatch to simultaneously illuminate and observe it from many different directions, providing us with a wealth of information about the material’s appearance,” says Assistant Professor Wenzel Jakob, head of the lab. Once the mission kicks off, researchers will be able to capture real-life pictures from beyond our atmosphere and fine-tune the algorithms to make sure that they work in situ.
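Each synthetic image in such a database needs a ground-truth pose label. The article does not describe how the Realistic Graphics Lab chooses viewpoints, so the Python sketch below shows one common approach under stated assumptions: rotations drawn uniformly over all orientations with Shoemake’s unit-quaternion method, and translations drawn from a hypothetical 5 to 50 m distance range with lateral offsets small enough to keep the target in view. The rendering step that would turn each label into an image with the Earth backdrop and measured materials is not shown; the function names, ranges and label fields are illustrative only.

```python
import math
import random

def random_unit_quaternion(rng):
    """Uniformly distributed 3D rotation as a unit quaternion (w, x, y, z), Shoemake's method."""
    u1, u2, u3 = rng.random(), rng.random(), rng.random()
    a, b = math.sqrt(1.0 - u1), math.sqrt(u1)
    return (b * math.cos(2 * math.pi * u3),
            a * math.sin(2 * math.pi * u2),
            a * math.cos(2 * math.pi * u2),
            b * math.sin(2 * math.pi * u3))

def sample_pose_labels(n, z_range=(5.0, 50.0), max_offset_ratio=0.2, seed=0):
    """Draw n hypothetical pose labels for synthetic training images.

    z is the distance along the camera's optical axis in metres; x and y are
    bounded by max_offset_ratio * z so the target stays near the image centre.
    """
    rng = random.Random(seed)  # fixed seed makes the dataset reproducible
    labels = []
    for i in range(n):
        z = rng.uniform(*z_range)
        x = rng.uniform(-max_offset_ratio, max_offset_ratio) * z
        y = rng.uniform(-max_offset_ratio, max_offset_ratio) * z
        labels.append({
            "image_id": i,
            "rotation_wxyz": random_unit_quaternion(rng),
            "translation_m": (x, y, z),
        })
    return labels

labels = sample_pose_labels(1000)
# Each label would then be handed to a renderer (not shown) that draws the
# 3D model with the measured materials over the Earth backdrop.
print(labels[0]["translation_m"])
```

Sampling rotations uniformly, rather than drawing three Euler angles uniformly, avoids over-representing some orientations, which matters for a tumbling target whose attitude is unknown in advance.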

A third challenge is that the algorithms must run in space, in real time, with the limited computing power available on board the ClearSpace capture satellite. Dr. Miguel Peón, a Senior Post-Doctoral Collaborator with EPFL’s Embedded Systems Lab (ESL), is leading the work of transferring the deep learning algorithms to a dedicated hardware platform. “Since motion in space is well behaved, the pose estimation algorithms can fill the gaps between recognitions spaced one second apart, alleviating the computational pressure. However, to ensure that they can autonomously cope with all the uncertainties in the mission, the algorithms are so complex that their implementation requires squeezing out all the performance from the platform resources,” says Professor David Atienza, head of ESL.
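The point about well-behaved motion can be illustrated with a simple motion model. The article does not describe the method ESL actually uses, so the Python sketch below assumes a generic constant-velocity model: between two recognitions one second apart, the rotation advances at a constant angular velocity and the translation at a constant linear velocity, so each intermediate frame costs a few arithmetic operations rather than a full network inference. The velocities, the 10 Hz intermediate rate and the function names are hypothetical.

```python
import math

def quat_multiply(q1, q2):
    """Hamilton product of quaternions given as (w, x, y, z)."""
    w1, x1, y1, z1 = q1
    w2, x2, y2, z2 = q2
    return (w1 * w2 - x1 * x2 - y1 * y2 - z1 * z2,
            w1 * x2 + x1 * w2 + y1 * z2 - z1 * y2,
            w1 * y2 - x1 * z2 + y1 * w2 + z1 * x2,
            w1 * z2 + x1 * y2 - y1 * x2 + z1 * w2)

def quat_from_rotation_vector(rv):
    """Unit quaternion for a rotation of |rv| radians about the axis rv / |rv|."""
    angle = math.sqrt(sum(c * c for c in rv))
    if angle < 1e-12:
        return (1.0, 0.0, 0.0, 0.0)
    s = math.sin(angle / 2) / angle
    return (math.cos(angle / 2), rv[0] * s, rv[1] * s, rv[2] * s)

def propagate_pose(q0, t0, omega, v, dt):
    """Predict the pose dt seconds after the last recognition.

    Assumes constant angular velocity omega (rad/s, camera frame) and constant
    linear velocity v (m/s, camera frame) since the last recognition.
    """
    dq = quat_from_rotation_vector([w * dt for w in omega])
    q = quat_multiply(dq, q0)  # left-multiply: incremental rotation in the camera frame
    t = tuple(t0[i] + v[i] * dt for i in range(3))
    return q, t

# Hypothetical last recognition: target 20 m ahead, tumbling at 10 deg/s about
# the optical axis and closing at 0.1 m/s.
q0, t0 = (1.0, 0.0, 0.0, 0.0), (0.0, 0.0, 20.0)
omega = (0.0, 0.0, math.radians(10.0))
v = (0.0, 0.0, -0.1)

# Fill the one-second gap with predictions at 10 Hz until the next recognition.
for k in range(1, 10):
    q, t = propagate_pose(q0, t0, omega, v, dt=k / 10)
    angle_deg = math.degrees(2 * math.acos(min(1.0, q[0])))
    print(f"t+{k / 10:.1f} s: rotated {angle_deg:.1f} deg, distance {t[2]:.2f} m")
```

In such a scheme, each new recognition would replace the predicted pose, and consecutive recognitions could be used to re-estimate the velocities so that prediction errors do not accumulate.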

Designing algorithms that are 100% reliable in such harsh and relatively unknown conditions, and that run in real time on limited computational resources, is clearly a tremendous challenge. For Salzmann, this is part of the project’s attraction: “We need to be absolutely reliable and robust. From a research perspective, you are typically happy with 90% success, but this is something that we cannot really afford in a real mission. But maybe the more exciting aspect of the project is that we are developing an algorithm that will eventually work in space. I find this absolutely amazing and that is what motivates me every day!”