AIhub.org

#NeurIPS2020 invited talks round-up: part one

There were seven interesting and varied invited talks at NeurIPS this year. Here, we summarise the first three, which were given by Charles Isbell (Georgia Tech), Jeff Shamma (King Abdullah University of Science and Technology) and Shafi Goldwasser (UC Berkeley, MIT and Weizmann Institute of Science).

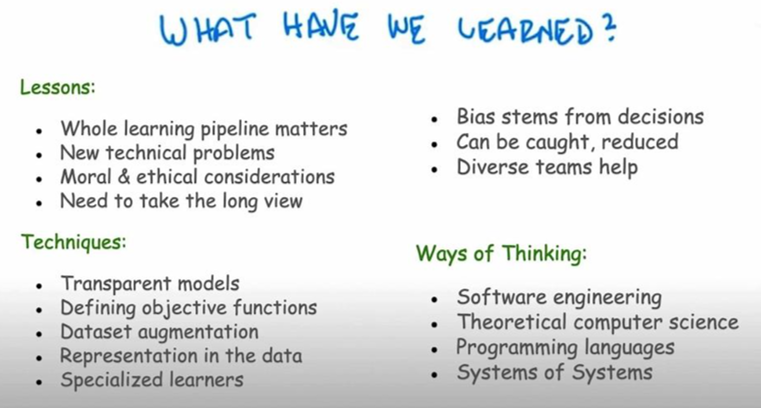

Charles Isbell: You can’t escape hyperparameters and latent variables: machine learning as a software engineering enterprise

The invited talks kicked off in style with a presentation from Charles Isbell. He had posted a teaser on Twitter indicating that he was trying something new with the format, and the talk certainly did not disappoint. It received rave reviews both in the live chat channel during the session and afterwards on social media.

Machine learning has reached the point where it is pervasive in our lives and, like other successful technological fields, must take responsibility for avoiding the harms associated with what it is producing.

Charles’ Christmas Carol-themed talk made full use of the virtual format. It saw him visit Michael Littman, who gave a consummate performance in the role of Scrooge (a blinkered machine learning theorist), to discuss algorithmic bias and the steps researchers can take to identify and combat it in their work. The ghosts of machine learning’s past, present and future came in the form of interviews with many researchers working across the discipline. Their insights included approaches that might help us to develop more robust machine-learning systems.

Watch the talk here.

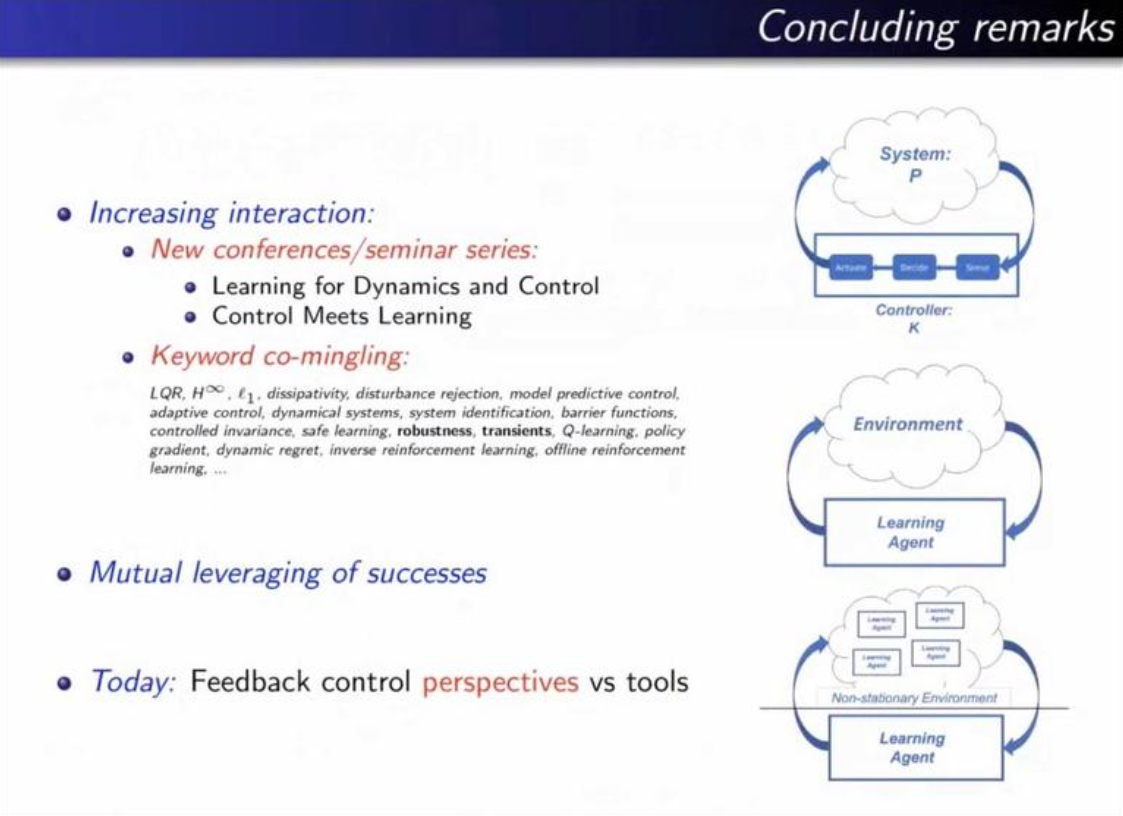

Jeff Shamma: Feedback control perspectives on learning

Jeff started with a definition of feedback control: real-time decision making in dynamic and uncertain environments. Feedback systems run as a never-ending loop of three steps: sensing what is happening, deciding what to do, and acting. Feedback control has been applied in areas including manufacturing, energy, biomedicine, transportation, logistics, and communication. Because it is an enabling technology, it perhaps does not receive the attention that such ubiquity warrants.

Feedback control is, of course, present in learning systems. For example, in reinforcement learning, an agent is in feedback with its environment.

During his talk, Jeff presented three benefits of feedback: 1) it can be used to stabilise and shape behaviour, 2) it can provide robustness to variation, 3) it enables tracking of command signals. These concepts were related to specific research problems in evolutionary game theory, no-regret learning, and multi-agent learning.
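The sense, decide, act loop and two of these benefits (robustness to variation and tracking of a command signal) can be illustrated with a toy simulation. The sketch below is not taken from the talk: the first-order system, the proportional-integral rule, and every name and number in it (`run_feedback_loop`, `run_open_loop`, `plant_gain`, `kp`, `ki`, the decay factor 0.9) are assumptions chosen purely for illustration.

```python
DECAY = 0.9  # hypothetical system: the state decays towards zero when left alone


def run_feedback_loop(target, plant_gain, steps=200, kp=0.5, ki=0.2):
    """Closed loop: repeatedly sense the state, decide an action, and act."""
    state = 0.0      # quantity being controlled
    integral = 0.0   # running sum of the tracking error
    for _ in range(steps):
        measurement = state                     # sense
        error = target - measurement
        integral += error
        action = kp * error + ki * integral     # decide (proportional-integral rule)
        state = DECAY * state + plant_gain * action  # act on the system
    return state


def run_open_loop(target, plant_gain, steps=200, assumed_gain=1.0):
    """No feedback: the action is fixed in advance from an assumed system gain."""
    state = 0.0
    # Action that would hold the state at `target` if the gain were `assumed_gain`.
    action = (1.0 - DECAY) * target / assumed_gain
    for _ in range(steps):
        state = DECAY * state + plant_gain * action
    return state


if __name__ == "__main__":
    for gain in (0.5, 1.0, 1.5):
        closed = run_feedback_loop(target=1.0, plant_gain=gain)
        opened = run_open_loop(target=1.0, plant_gain=gain)
        print(f"gain={gain}: closed loop={closed:.4f}, open loop={opened:.4f}")
```

In this toy setting, the closed loop settles at the commanded target even when the true gain differs from the one assumed, whereas the open-loop version settles at a value that scales with the mismatch.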

Watch the talk here.

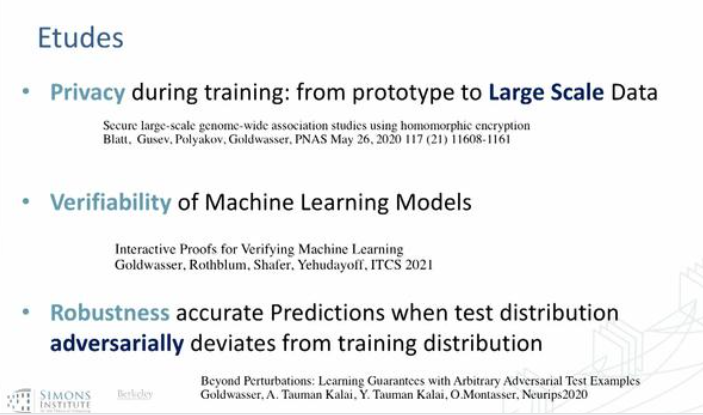

Shafi Goldwasser: Robustness, verification, privacy: addressing machine learning adversaries

To start her talk, Shafi noted that her background is in cryptography. This field has had a big impact on the world, from electronic commerce to cryptocurrencies, from cloud computing to quantum computing. Experience in this area has allowed her to approach machine learning problems from a cryptographic standpoint.

Shafi’s talk focussed on three recent works, covering the topics of privacy, verification and robustness. For each, she detailed cryptography-inspired models, with an important aim in every case being to address the challenges presented by adversaries.

The three works presented in the talk were based on these topics:

1) Verifiability of machine learning models

2) Privacy during training: from prototype to large scale data

3) Robustness – accurate predictions when test distribution deviates from training distribution

Watch the talk here.
