AIhub.org

Deep learning microscope for rapid tissue imaging

By Jade Boyd

When surgeons remove cancer, one of the first questions is, “Did they get it all?” Researchers from Rice University and the University of Texas MD Anderson Cancer Center have created a new microscope that can quickly and inexpensively image large tissue sections, potentially during surgery, to find the answer.

The microscope can rapidly image relatively thick pieces of tissue with cellular resolution, and could allow surgeons to inspect the margins of tumors within minutes of their removal. It was created by engineers and applied physicists at Rice and is described in a study published in the Proceedings of the National Academy of Sciences.

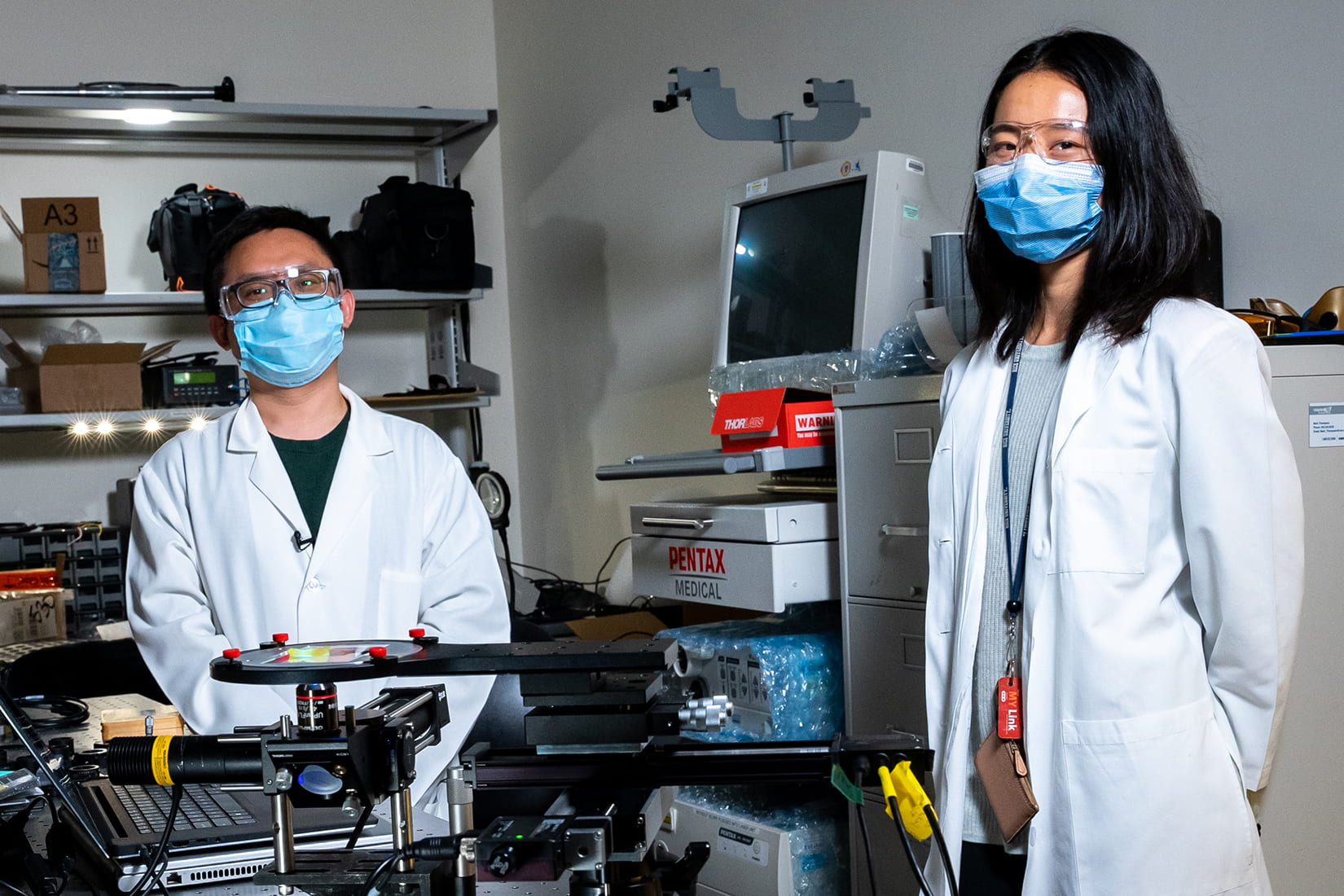

“The main goal of the surgery is to remove all the cancer cells, but the only way to know if you got everything is to look at the tumor under a microscope,” said Rice’s Mary Jin, a Ph.D. student in electrical and computer engineering and co-lead author of the study. “Today, you can only do that by first slicing the tissue into extremely thin sections and then imaging those sections separately. This slicing process requires expensive equipment and the subsequent imaging of multiple slices is time-consuming. Our project seeks to basically image large sections of tissue directly, without any slicing.”

Rice’s deep learning extended depth-of-field microscope, or DeepDOF, makes use of deep learning to train a computer algorithm to optimize both image collection and image post-processing.

With a typical microscope, there’s a trade-off between spatial resolution and depth-of-field, meaning only things that are the same distance from the lens can be brought clearly into focus. Features that are even a few millionths of a meter closer to or farther from the microscope’s objective will appear blurry. For this reason, microscope samples are typically thin and mounted between glass slides.
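For a rough sense of scale, the trade-off can be sketched with two standard textbook approximations for a diffraction-limited microscope: lateral resolution of about 0.61λ/NA (the Rayleigh criterion) and depth of field of about nλ/NA². These formulas, the 550-nanometer wavelength and the sample numerical apertures (NA) below are illustrative assumptions, not figures from the study.

```python
# Illustrative textbook approximations; not taken from the DeepDOF study.

def lateral_resolution_um(wavelength_um, na):
    """Smallest resolvable separation (Rayleigh criterion), in micrometers."""
    return 0.61 * wavelength_um / na


def depth_of_field_um(wavelength_um, na, refractive_index=1.0):
    """Diffraction-limited depth of field, in micrometers."""
    return refractive_index * wavelength_um / na**2


WAVELENGTH_UM = 0.55  # assumed green light, 550 nm

for na in (0.1, 0.25, 0.5):  # assumed example objectives
    res = lateral_resolution_um(WAVELENGTH_UM, na)
    dof = depth_of_field_um(WAVELENGTH_UM, na)
    print(f"NA={na:.2f}  resolution={res:.2f} um  depth of field={dof:.1f} um")
```

Because depth of field falls with the square of the numerical aperture while resolution improves only linearly, sharper objectives keep a much thinner slab of tissue in focus.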

Slides are used to examine tumor margins today, and they aren’t easy to prepare. Removed tissue is usually sent to a hospital lab, where experts either freeze it or prepare it with chemicals before making razor-thin slices and mounting them on slides. The process is time-consuming and requires specialized equipment and specially trained staff. It is rare for hospitals to have the ability to examine slides for tumor margins during surgery, and hospitals in many parts of the world lack the necessary equipment and expertise.

“Current methods to prepare tissue for margin status evaluation during surgery have not changed significantly since first introduced over 100 years ago,” said study co-author Ann Gillenwater, M.D., a professor of head and neck surgery at MD Anderson. “By bringing the ability to accurately assess margin status to more treatment sites, the DeepDOF has potential to improve outcomes for cancer patients treated with surgery.”

Jin’s Ph.D. advisor, study co-corresponding author Ashok Veeraraghavan, said DeepDOF uses a standard optical microscope in combination with an inexpensive optical phase mask costing less than $10 to image whole pieces of tissue and deliver depths-of-field as much as five times greater than today’s state-of-the-art microscopes.

Ashok Veeraraghavan (Image courtesy of Rice University)

“Traditionally, imaging equipment like cameras and microscopes are designed separately from imaging processing software and algorithms,” said study co-lead author Yubo Tang, a postdoctoral research associate in the lab of co-corresponding author Rebecca Richards-Kortum. “DeepDOF is one of the first microscopes that’s designed with the post-processing algorithm in mind.”

The phase mask is placed over the microscope’s objective to modulate the light coming into the microscope.

“The modulation allows for better control of depth-dependent blur in the images captured by the microscope,” said Veeraraghavan, an imaging expert and associate professor in electrical and computer engineering at Rice. “That control helps ensure that the deblurring algorithms that are applied to the captured images are faithfully recovering high-frequency texture information over a much wider range of depths than conventional microscopes. DeepDOF does this without sacrificing spatial resolution.”

“In fact, both the phase mask pattern and the parameters of the deblurring algorithm are learned together using a deep neural network, which allows us to further improve performance,” Veeraraghavan said.
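The effect of a phase mask on depth-dependent blur can be illustrated with a toy one-dimensional simulation. The sketch below uses a classic cubic phase mask from the wavefront-coding literature, not the mask learned by DeepDOF, and the mask strength, defocus amount and function names are assumptions chosen for illustration. It compares how much the modulation transfer function (MTF), a measure of how well fine detail is preserved, changes between an in-focus and an out-of-focus sample, with and without the mask.

```python
import numpy as np

# Toy 1-D model; the cubic mask and all parameter values are illustrative
# assumptions, not the learned mask or settings used in DeepDOF.

def mtf_1d(alpha, psi, n_pupil=256, n_fft=1024):
    """MTF of a 1-D pupil with cubic mask strength `alpha` (radians)
    and defocus `psi` (radians of phase at the pupil edge)."""
    x = np.linspace(-1.0, 1.0, n_pupil)           # normalized pupil coordinate
    pupil = np.zeros(n_fft, dtype=complex)        # zero-padded to avoid wrap-around
    pupil[:n_pupil] = np.exp(1j * (alpha * x**3 + psi * x**2))
    psf = np.abs(np.fft.ifft(pupil)) ** 2         # point spread function
    otf = np.fft.fft(psf)                         # optical transfer function
    return np.abs(otf[:n_pupil]) / np.abs(otf[0])  # normalized so MTF[0] = 1


def relative_mtf_change(alpha, psi, band=slice(13, 192)):
    """Mean absolute MTF change caused by defocus, relative to the
    in-focus MTF, over a band of mid spatial frequencies."""
    in_focus = mtf_1d(alpha, 0.0)[band]
    defocused = mtf_1d(alpha, psi)[band]
    return np.mean(np.abs(defocused - in_focus)) / np.mean(in_focus)


DEFOCUS = 10.0  # assumed defocus, radians
clear_change = relative_mtf_change(alpha=0.0, psi=DEFOCUS)   # no mask
cubic_change = relative_mtf_change(alpha=30.0, psi=DEFOCUS)  # cubic mask

print(f"relative MTF change without mask: {clear_change:.2f}")
print(f"relative MTF change with cubic mask: {cubic_change:.2f}")
```

In this toy setup the masked system's MTF should change far less with defocus than the unmasked one, which is the property that lets a single deblurring step work across a range of depths. DeepDOF goes further by learning the mask pattern and the deblurring network together.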

To train DeepDOF, researchers showed it 1,200 images from a database of histological slides. From that, DeepDOF learned how to select the optimal phase mask for imaging a particular sample and it also learned how to eliminate blur from the images it captures from the sample, bringing cells from varying depths into focus.

“Once the selected phase mask is printed and integrated into the microscope, the system captures images in a single pass and the ML (machine learning) algorithm does the deblurring,” Veeraraghavan said.

Richards-Kortum, Rice’s Malcolm Gillis University Professor, professor of bioengineering and director of the Rice 360° Institute for Global Health, said DeepDOF can capture and process images in as little as two minutes.

Rebecca Richards-Kortum (Image courtesy of Brandon Martin/Rice University)

“We’ve validated the technology and shown proof-of-principle,” Richards-Kortum said. “A clinical study is needed to find out whether DeepDOF can be used as proposed for margin assessment during surgery. We hope to begin clinical validation in the coming year.”

Additional co-authors include Yicheng Wu, Jackson Coole, Melody Tan, Xuan Zhao and Jacob Robinson of Rice, and Hawraa Badaoui and Michelle Williams of MD Anderson.

Veeraraghavan and Robinson are both members of the Rice Neuroengineering Initiative.

The research was supported by the National Science Foundation (1730574, 1648451, 1652633), the Defense Advanced Research Projects Agency (N66001-17-C-4012), the National Institutes of Health (1RF1NS110501) and the National Cancer Institute (CA16672).

