AIhub.org

Interview with Michael Milford – using artificial intelligence for robotic navigation

Can you briefly explain your research interests?

My primary interests are in the field of spatial intelligence – how we can develop better navigation and positioning systems for robots and autonomous vehicles. My main research approach combines traditional algorithmic approaches, modern deep learning and biologically-inspired approaches, in both software and hardware.

How do you link robotic navigation and artificial intelligence?

Spatial intelligence is one of the most tangible aspects of general intelligence, and hence it’s a great gateway by which to progress our understanding and development of intelligence in robotics. For example, spatial intelligence can be directly observed in the brain, where multiple types of navigationally-relevant neurons, such as “place cells”, have been identified and can be modelled in software to create better-performing robotic systems.

What is the future of robotic navigation? Will there be fully autonomous vehicles that we can trust? (Not in terms of ethical concerns but from a technical standpoint.)

From a technical point of view, autonomous vehicles are very good but not yet good enough to be practicable. How much further improvement is needed is a point of significant ongoing debate internationally. I think a reasonable consensus view would be that there’s no guarantee that highly autonomous (level 4+) vehicles will become widespread on our roads in the near term, but at the same time it’s entirely possible.

Do you think we know enough about insect/rodent neuroscience to create reliable robotic navigation systems, or do we need to advance more in neuroscience?

I’ve drawn inspiration from neuroscience for almost two decades now, and I’m always amazed by how much progress has been made in understanding how the brain works, especially in areas like spatial neuroscience. That said, we still know very little in the grander scheme of things – which means as engineers we get to have some interesting discussions with neuroscientists and engineer best guesses for some of this missing knowledge. We’re always looking forward to further advances in our ability to study and understand neural systems.

If you compare spatial navigation abilities of rodents, humans and robots, what do you think are the key similarities and differences?

In general, robots are highly capable navigators in very specific circumstances (and may surpass the abilities of organisms in the natural world), and hopeless outside of those specific circumstances. Animals (including humans) are remarkably robust navigators, and are generally able to adapt to a very wide range of circumstances.

Can you tell us about the “evolution” of the RatSLAM algorithm [1]? What inspired you and how did it impact the research field over time?

I started studying neurally-inspired robotic navigation as part of my PhD in 2003, following on from some promising preliminary work by colleagues and former supervisors like Gordon Wyeth and Brett Browning. At the time, I’d been fascinated by artificial intelligence and robotics for some time already, and combining the study of artificial and natural spatial intelligence seemed like an exciting way to pursue this interest further.

Compared to the past, deep learning is a lot more dominant in AI, in general. What is the situation in visual navigation?

Deep learning has had a significant impact on visual navigation techniques, just like in most other fields. We were some of the first to “jump on the bandwagon”, so to speak, and our early work from 2013 onwards is some of the most highly cited work in the field. As in other fields, the best performing systems are typically some pragmatic combination of traditional techniques, deep learning-based techniques and sometimes biologically-inspired techniques. That combination varies depending on what you’re trying to do – an autonomous underground mining vehicle’s navigation challenges are different from those of a drone operating high above the ground, for example.

What books do you suggest to those interested in use of AI in navigation systems or bio-inspired AI in general?

Shameless self-plug: I’ve written extensively on these topics – a book version of my thesis “Robot navigation from nature: Simultaneous localisation, mapping, and path planning based on hippocampal models” is available, and I’ve a number of introductory titles on artificial intelligence including “The Complete Guide to Artificial Intelligence for Kids”, which has been very popular around the world.

I also benefited from much of the literature in the field – there are lots of great survey and tutorial papers available nowadays including our globally co-written paper Visual place recognition: A survey [2].

I am aware that you have a publishing company that focuses on STEM for kids. Are you planning to publish a book about AI for kids?

I have – “The Complete Guide to Artificial Intelligence for Kids”, as well as other related books including “Robot Revolution”. I’m also just about to launch a new book on Kickstarter, “The Complete Guide to Autonomous Vehicles”, which covers all the key concepts behind this exciting and potentially transformative technology, including artificial intelligence.

References

[1] Milford, Michael J., Gordon F. Wyeth, and David Prasser. RatSLAM: a hippocampal model for simultaneous localization and mapping, IEEE International Conference on Robotics and Automation (2004).

[2] Lowry, Stephanie, et al. Visual place recognition: A survey, IEEE Transactions on Robotics (2015).

Biography

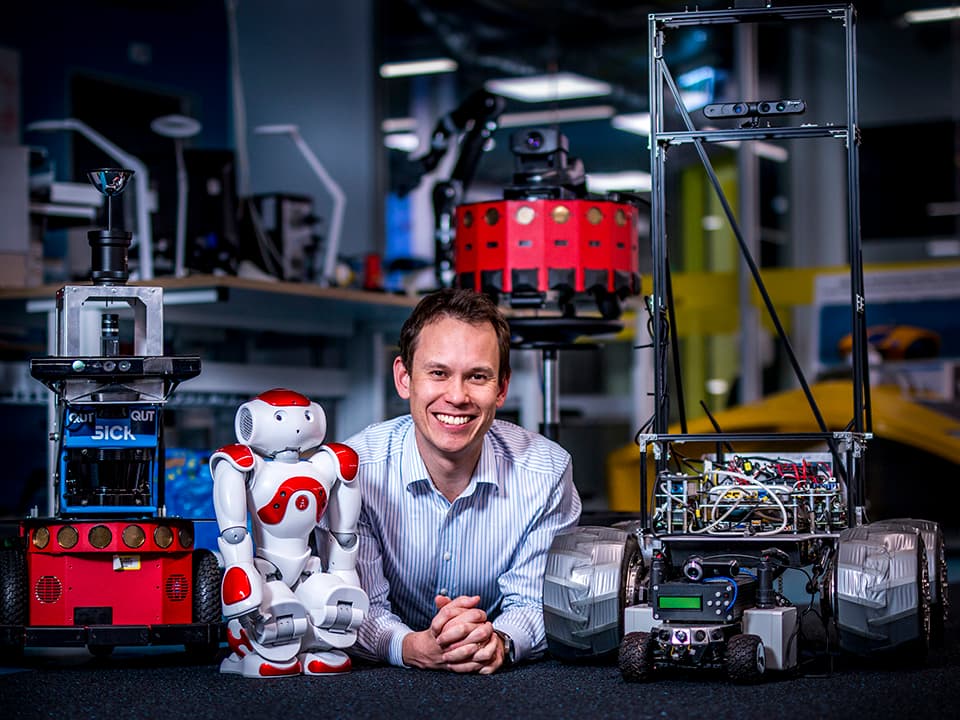

Michael Milford is currently Director of the QUT Centre for Robotics (acting), Professor at the Queensland University of Technology, Microsoft Research Faculty Fellow and Chief Investigator at the Australian Centre for Robotic Vision. His interdisciplinary research interests lie at the boundary between robotics, neuroscience and computer vision. His research models the neural mechanisms in the brain underlying tasks like navigation and perception to develop new technologies in challenging application domains. He is passionate about engaging and educating all sectors of society around new opportunities and impacts from technology including robotics, autonomous vehicles and artificial intelligence. He is also a multi-award-winning educational entrepreneur who has produced more than 20 educational titles over the past two decades.
