AIhub.org

Helping drone swarms avoid obstacles without hitting each other

By Clara Marc

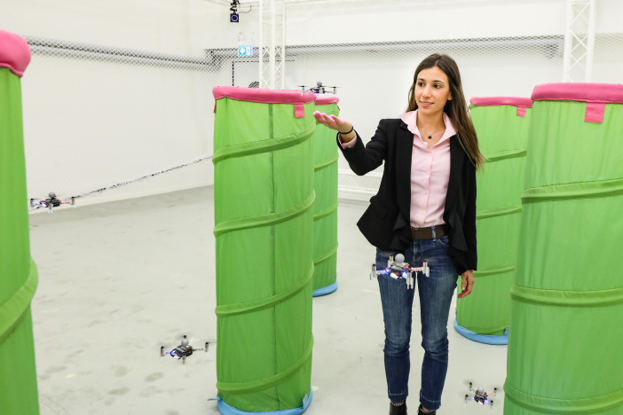

Engineers at EPFL have developed a predictive control model that allows swarms of drones to fly in cluttered environments quickly and safely. It works by enabling individual drones to predict their own behaviour and that of their neighbours in the swarm.

There is strength in numbers. That’s true not only for humans, but for drones too. By flying in a swarm, they can cover larger areas and collect a wider range of data, since each drone can be equipped with different sensors.

Preventing drones from bumping into each other

One reason why drone swarms haven’t been used more widely is the risk of gridlock within the swarm. Studies on the collective movement of animals show that each agent tends to coordinate its movements with the others, for example by adjusting its trajectory to keep a safe distance from its neighbours or to stay aligned with them.

“In a drone swarm, when one drone changes its trajectory to avoid an obstacle, its neighbours automatically synchronize their movements accordingly,” says Dario Floreano, a professor at EPFL’s School of Engineering and head of the Laboratory of Intelligent Systems (LIS). “But that often causes the swarm to slow down, generates gridlock within the swarm or even leads to collisions.”

Not just reacting, but also predicting

Enrica Soria, a PhD student at LIS, has come up with a new method for getting around that problem. She has developed a predictive control model that allows drones to not just react to others in a swarm, but also to anticipate their own movements and predict those of their neighbours. “Our model gives drones the ability to determine when a neighbour is about to slow down, meaning the slowdown has less of an effect on their own flight,” says Soria. The model works by programming each drone with simple rules that it applies locally, such as a minimum inter-agent distance to maintain, a set velocity to keep, or a specific direction to follow. Soria’s work has just been published in Nature Machine Intelligence.
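To make the idea of "predicting rather than reacting" concrete, here is a toy sketch in Python. It is not the controller from the paper: it assumes a 2D world, neighbours that keep a constant velocity over a short horizon, and a small fixed set of candidate velocities to choose from. The function names (`predict`, `cost`, `choose_velocity`) and all parameter values are hypothetical, chosen only for illustration. Each drone rolls its own motion and its neighbours' motion forward in time, then picks the candidate that stays closest to the set velocity and direction while keeping the minimum inter-agent distance.

```python
import math

def predict(pos, vel, steps, dt):
    """Constant-velocity rollout: positions at t = dt, 2*dt, ..., steps*dt."""
    return [(pos[0] + vel[0] * k * dt, pos[1] + vel[1] * k * dt)
            for k in range(1, steps + 1)]

def cost(own_pos, cand_vel, neighbours, goal_dir, v_ref, d_min, steps, dt):
    """Score one candidate velocity over the prediction horizon (lower is better)."""
    # Rule 1 and 2: keep the set speed v_ref along the preferred direction.
    c = ((cand_vel[0] - v_ref * goal_dir[0]) ** 2
         + (cand_vel[1] - v_ref * goal_dir[1]) ** 2)
    own_path = predict(own_pos, cand_vel, steps, dt)
    for n_pos, n_vel in neighbours:
        n_path = predict(n_pos, n_vel, steps, dt)
        for p, q in zip(own_path, n_path):
            d = math.hypot(p[0] - q[0], p[1] - q[1])
            # Rule 3: penalize any predicted breach of the minimum distance.
            if d < d_min:
                c += 100.0 * (d_min - d) ** 2
    return c

def choose_velocity(own_pos, neighbours, goal_dir,
                    v_ref=1.0, d_min=1.0, steps=10, dt=0.2):
    """Pick the best of a few candidate velocities using only local information.

    neighbours is a list of (position, velocity) pairs for nearby drones;
    goal_dir is a unit vector giving the preferred direction of travel.
    """
    angles = [2 * math.pi * i / 16 for i in range(16)]
    candidates = [(s * math.cos(a), s * math.sin(a))
                  for s in (0.0, 0.5 * v_ref, v_ref)
                  for a in angles]
    return min(candidates,
               key=lambda v: cost(own_pos, v, neighbours, goal_dir,
                                  v_ref, d_min, steps, dt))

# A drone at the origin heading in +x, with a stationary neighbour ahead.
v = choose_velocity((0.0, 0.0), [((1.5, 0.0), (0.0, 0.0))], (1.0, 0.0))
print(v)
```

With no neighbours in the way, this sketch simply flies at the set velocity in the preferred direction. With a neighbour ahead, flying straight is predicted to breach the minimum distance several steps before it actually happens, so the drone deviates early instead of braking at the last moment. Every decision uses only the drone's own state and that of its neighbours, with no central computer involved.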

With Soria’s model, drones are much less dependent on commands issued by a central computer. Drones in aerial light shows, for example, get their instructions from a computer that calculates each one’s trajectory to avoid a collision. “But with our model, drones are commanded using local information and can modify their trajectories autonomously,” says Soria.

A model inspired by nature

Tests run at LIS show that Soria’s system improves the speed, order and safety of drone swarms in areas with a lot of obstacles. “We don’t yet know if, or to what extent, animals are able to predict the movements of those around them,” says Floreano. “But biologists have recently suggested that the synchronized direction changes observed in some large groups would require a more sophisticated cognitive ability than what has been believed until now.”

Read the article in full

Predictive control of aerial swarms in cluttered environments

Enrica Soria, Fabrizio Schiano, and Dario Floreano