AIhub.org

AI can help doctors work faster – but trust is crucial

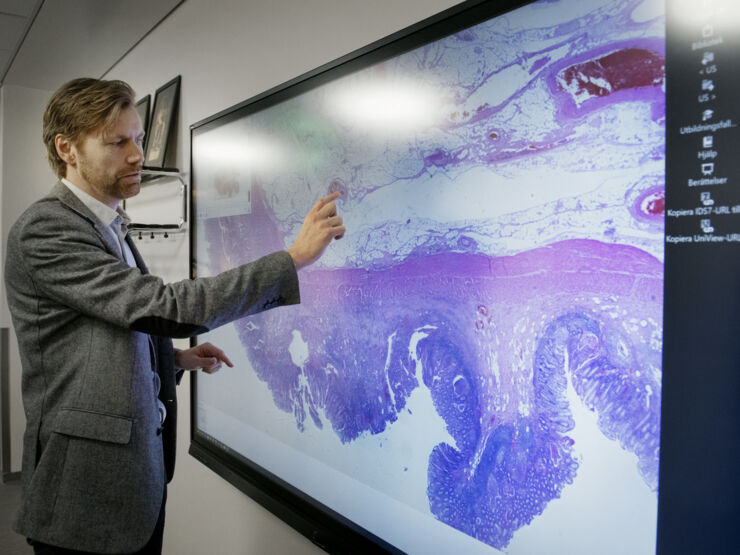

Claes Lundström examines a digital pathology slide. The tools of the future are tested using AI technology and imaging at the national research arena AIDA. Photo credit: Kajsa Juslin

Claes Lundström examines a digital pathology slide. The tools of the future are tested using AI technology and imaging at the national research arena AIDA. Photo credit: Kajsa Juslin

By Karin Söderlund Leifler

If artificial intelligence (AI) is to be of help in healthcare, people and machines must be able to work effectively together. A new study shows that doctors who use AI when examining tissue samples can work faster, while still doing high-quality work.

How can AI systems in healthcare be designed to facilitate the interaction between people and computers? Martin Lindvall has researched this very question, with a particular focus on deep learning. In simple terms, deep learning is a form of AI that is trained by finding patterns in large amounts of data. This kind of AI can, for example, be trained to identify cancerous cells in medical images.

A big challenge for those who develop AI for healthcare purposes is that AI doesn’t always get things right.

“We have learnt to expect that the AI will make mistakes. But we know that we can make it better over time by telling it when it’s wrong and when it’s right. While taking that into account, we need to work on these systems so that they are fit for purpose and effective for users. It’s also important that users feel that the machine learning brings something positive”, says Martin Lindvall, who recently completed an industry-based doctorate within the Wallenberg AI, Autonomous Systems and Software Program (WASP) at Linköping University (LiU).

Can we trust AI to get it right?

“Computer programmes that use machine learning will inevitably make mistakes in ways that are hard to predict”, says Martin Lindvall.

Within medical imaging, AI can be trained to find abnormalities in, for example, tissue samples. But it turns out that machine-learning models are sensitive to small variations: a change in the manufacturer of the chemicals used to stain the tissue sections, the thickness of the sections, or dust on the scanner glass. Disruptions of this kind can cause the model to malfunction.

“These factors are now well known among AI developers, and developers make sure to check for them. But we can’t be sure that new disruptions of this kind won’t be discovered in the future. So we want to ensure that there’s a barrier to prevent problems that we’re not even aware of yet.”

In turn, this can make it hard for users to know whether they can trust AI. In order for AI tools to work in clinical environments they must, of course, fit in with healthcare workflows in an effective and safe way.

“AI-based support has to be good enough that the user doesn’t need to spend as much time checking the AI’s conclusions as they save by using it.”

Putting the user in the driver’s seat

Together with his colleagues, Martin Lindvall has developed an interface for interaction between people and computers, designed specifically to help doctors examine tissue samples. Here, AI works as a kind of assistant to the human user rather than as a replacement. The machine-learning component can, for example, help pathologists examine tissue samples from lymph nodes removed during surgery for cancer of the large intestine. If the pathologist finds tumour cells in any of the lymph nodes, it can mean that the cancer has spread to other parts of the body, in which case the patient is offered treatment.

“We chose this routine because pathologists have told us that it’s quite simple, but tedious and time-consuming. AI could have something to bring to the table there. The challenge lay in creating AI support that can make the process go faster. Usually, pathologists do this kind of thing very fast. They’re fantastic”, says Martin Lindvall.

In the interface, which the researchers call “Rapid Assisted Visual Search” (RAVS), the pathologist first gets an overview of the tissue. The AI then indicates several areas of suspected cancer. If the doctor does not see anything in those areas, the sample is considered cancer-free. Martin Lindvall believes that this strikes a balance between examining all tissue in detail and speeding up the process. The aims are to help the doctor feel confident in the result, to speed up the process and to avoid incorrect decisions. Six pathologists evaluated the interface, and the researchers presented the results at the International Conference on Intelligent User Interfaces (IUI ’21).

One distinguishing aspect of the interface is that the researchers have made it possible for the user to ignore the AI-generated suggestions at any point and instead examine all tissue as they normally would.
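The review logic described above can be sketched in a few lines of Python. This is a minimal, hypothetical illustration, not the researchers’ implementation: the `Region` type, the `ai_score` field, the `top_k` cut-off and the `inspect` callback are all assumptions made for the example, standing in for the AI’s ranking of suspicious areas and the pathologist’s visual check.

```python
from dataclasses import dataclass
from typing import Callable, Sequence


@dataclass
class Region:
    """A hypothetical tissue region with an AI suspicion score in [0, 1]."""
    region_id: int
    ai_score: float


def review_slide(
    regions: Sequence[Region],
    inspect: Callable[[Region], bool],
    top_k: int = 3,
    use_ai: bool = True,
) -> bool:
    """Return True if the pathologist finds tumour cells on the slide.

    With use_ai=True, only the top_k regions the AI ranks as most suspicious
    are shown; if none is judged positive, the slide is called negative.
    With use_ai=False, the pathologist ignores the AI and inspects every region.
    """
    if use_ai:
        candidates = sorted(regions, key=lambda r: r.ai_score, reverse=True)[:top_k]
    else:
        candidates = list(regions)
    # The pathologist makes every call; the AI only selects which regions are shown.
    return any(inspect(r) for r in candidates)


# Usage: region 3 contains tumour cells but receives a low AI score.
regions = [Region(0, 0.10), Region(1, 0.90), Region(2, 0.40), Region(3, 0.05)]
tumour_regions = {3}
pathologist = lambda r: r.region_id in tumour_regions  # stand-in for a visual check

print(review_slide(regions, pathologist, top_k=2))       # False: region 3 never shown
print(review_slide(regions, pathologist, use_ai=False))  # True: full review finds it
```

In this toy example, a tumour in a region the AI ranks low is missed when only the two top-ranked regions are checked, but found when the pathologist falls back to examining all tissue, which illustrates why the option to override the AI matters.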

“Most users start out in the same way. They see what the AI suggests, but ignore it. Over time, however, they gain confidence in the AI, and start to use it more. So this interactive aspect of the system acts as a safety barrier and allows the pathologist to feel comfortable. The user is more in the driving seat compared to AI products with more autonomy”, says Martin Lindvall.

The researchers concluded that the pathologists worked faster when using the RAVS interface. Martin Lindvall believes that the interaction between people and assistive AI can play an important role in speeding up the introduction of AI in medical decision-making, since it both creates a safety barrier that is currently lacking in autonomous AI and helps the user to build confidence in the system.

Furthermore, this kind of system can learn as it is being used. The system can be gradually improved by having a human expert tell the AI whether its suggestions were correct. This opens up the possibility of this kind of interface acting as a springboard for more independent AI systems in the future.

The importance of “soft” values

A lot happened in research on AI and medical imaging during Martin Lindvall’s doctoral studies at LiU.

“I started as a PhD student in 2016, and at that time there were vanishingly few studies that applied deep learning to tissue samples. There are now several huge studies of this kind, with different AI applications that have turned out to perform better than specialist doctors at some very specific tasks. It’s very impressive. I’ve wondered before: ‘Is this just hype?’ But no, this is for real. But there are challenges. If you don’t take care of the ‘soft’ values, such as the user’s confidence in the system, then there’s a risk that it will take longer than necessary before we see these systems used in healthcare.”

Read the study in full

Rapid Assisted Visual Search: Supporting Digital Pathologists with Imperfect AI

Martin Lindvall, Claes Lundström, and Jonas Löwgren

26th International Conference on Intelligent User Interfaces (2021).
