AIhub.org

#AAAI2022 invited talks – data-centric AI and robust deep learning

In this article, we summarise two of the invited talks from the AAAI Conference on Artificial Intelligence. We hear from Andrew Ng and Marta Kwiatkowska, who talked about data-centric AI and robust deep learning respectively.

Andrew Ng – The data-centric AI

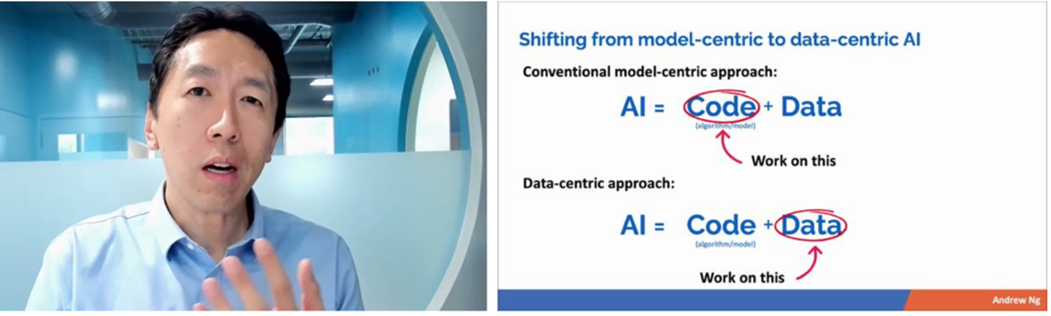

Andrew began with a definition of data-centric AI – “the discipline of systematically engineering the data used to build an AI system”. AI systems tend to consist of two parts: data and code. The conventional approach to developing such systems, and one which many researchers take, is to download a dataset and then work on the code. However, Andrew believes that for many problems this is not the most effective method. The alternative, data-centric approach is to hold the code fixed and focus on improving the data.

Andrew acknowledged that a number of practitioners have already been championing this approach. He believes it has a lot of potential, particularly if ideas can be pooled and a data-centric approach is made much easier for everyone to implement.

Data-centric AI. Screenshot from Andrew Ng’s talk.

Andrew shared some examples of research directions in data-centric AI that he finds exciting. These include:

- Data-centric AI competitions and benchmarks

- Measuring data quality

- Data iteration – engineering data as part of a machine learning project workflow

- Data management tools

- Crowdsourcing

- Data augmentation and data synthesis

- Responsible AI

Data-centric AI competitions and benchmarks

Over the past few decades, the community has established many benchmarks for algorithms. The premise is that everyone downloads the same dataset and the performance of different algorithms is compared. However, since AI systems are a marriage of code and data, it also seems important to build benchmarks that measure the quality of the data.

Last year, Andrew and two colleagues organised a data-centric competition, which centred on a Roman numerals version of MNIST (the well-known handwritten digit recognition dataset). The competition attracted 489 teams, who competed to submit the best training data. Andrew and his team then trained a fixed model on the training data from each entry and measured its performance. Since this first competition there have been a number of others, and it is likely we’ll see a sharp rise in such events.
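The evaluation idea behind such a competition (the model stays the same, only the training data changes) can be sketched in a few lines of Python. This is an illustrative toy and not the competition's actual pipeline: the nearest-centroid "model", the one-dimensional data, and the names `TEST_SET`, `noisy` and `clean` are all assumptions made for the example.

```python
from statistics import mean


def train_centroids(samples):
    """Fixed 'model': learn one centroid per label from (value, label) pairs."""
    by_label = {}
    for value, label in samples:
        by_label.setdefault(label, []).append(value)
    return {label: mean(values) for label, values in by_label.items()}


def predict(centroids, value):
    """Return the label whose centroid is closest to the value."""
    return min(centroids, key=lambda label: abs(centroids[label] - value))


def accuracy(centroids, test_set):
    """Fraction of test examples the trained model labels correctly."""
    hits = sum(predict(centroids, value) == label for value, label in test_set)
    return hits / len(test_set)


# Hypothetical held-out test set, shared by every submission.
TEST_SET = [(0.5, "a"), (1.5, "a"), (2.0, "a"), (8.0, "b"), (9.0, "b"), (9.5, "b")]

# Two hypothetical submissions: the same points, with and without label errors.
noisy = [(1.0, "a"), (2.0, "a"), (9.0, "a"), (10.0, "a"),
         (8.0, "b"), (1.5, "b"), (0.5, "b")]
clean = [(1.0, "a"), (2.0, "a"), (9.0, "b"), (10.0, "b"),
         (8.0, "b"), (1.5, "a"), (0.5, "a")]


def score_submissions(submissions):
    """Train the same fixed model on each submitted dataset and score it."""
    return {name: accuracy(train_centroids(data), TEST_SET)
            for name, data in submissions.items()}


scores = score_submissions({"noisy": noisy, "clean": clean})
```

Because the training code never changes, any difference between the entries in `scores` can only come from the submitted data, which is what a data-centric benchmark sets out to measure.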

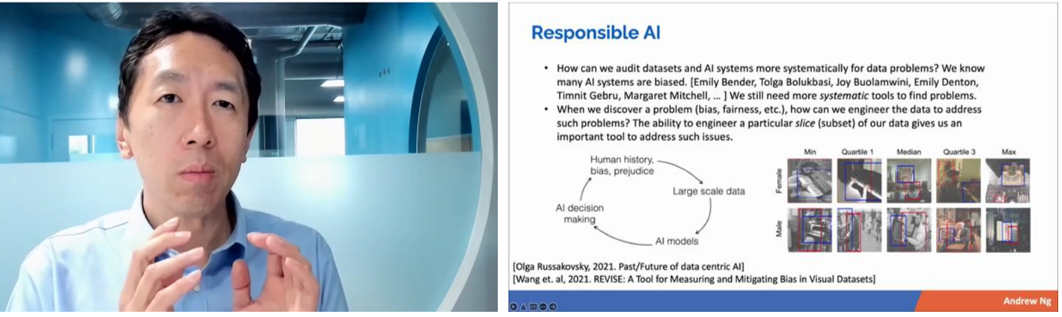

Responsible AI

It is well known that many AI systems are biased. Andrew noted that, in many cases where bias has been detected, it has been down to the work of one or more researchers who spotted and highlighted a problem. He pointed to the work in this space of researchers such as Timnit Gebru, Joy Buolamwini, Emily Bender, Tolga Bolukbasi, Emily Denton and Margaret Mitchell.

A key question Andrew posed in his talk was: how can we audit datasets and systems more systematically for data problems? One research direction in pursuit of this is the development of systematic tools that anyone could use to reliably find problems of bias in an AI system.
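As a rough illustration of what one small building block of such a tool might look like, the sketch below computes a model's error rate separately for each subgroup in an evaluation set and reports the largest gap. It is not a tool described in the talk; the record layout `(group, true_label, predicted_label)`, the group names and the example values are hypothetical.

```python
from collections import defaultdict


def error_rate_by_group(records):
    """Compute the error rate per group from (group, truth, prediction) records."""
    totals = defaultdict(int)
    errors = defaultdict(int)
    for group, truth, prediction in records:
        totals[group] += 1
        if truth != prediction:
            errors[group] += 1
    return {group: errors[group] / totals[group] for group in totals}


def largest_gap(rates):
    """Difference between the worst and best per-group error rates."""
    return max(rates.values()) - min(rates.values())


# Hypothetical evaluation records: (group, true label, predicted label).
records = [
    ("group_a", 1, 1), ("group_a", 0, 0), ("group_a", 1, 1), ("group_a", 0, 0),
    ("group_b", 1, 0), ("group_b", 0, 0), ("group_b", 1, 0), ("group_b", 0, 1),
]

rates = error_rate_by_group(records)
gap = largest_gap(rates)
```

A large value of `gap` does not by itself explain where a disparity comes from, but it flags a slice of the data that deserves closer inspection.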

Responsible AI. Screenshot from Andrew Ng’s talk.

To close, Andrew directed researchers to the data-centric AI resource hub that he hosts with his team. It collates blog posts, talks and best practices on the topic of data-centric AI.

To find out more about Andrew’s research, you can watch his complete talk here.

Marta Kwiatkowska – Safety and robustness for deep learning with provable guarantees

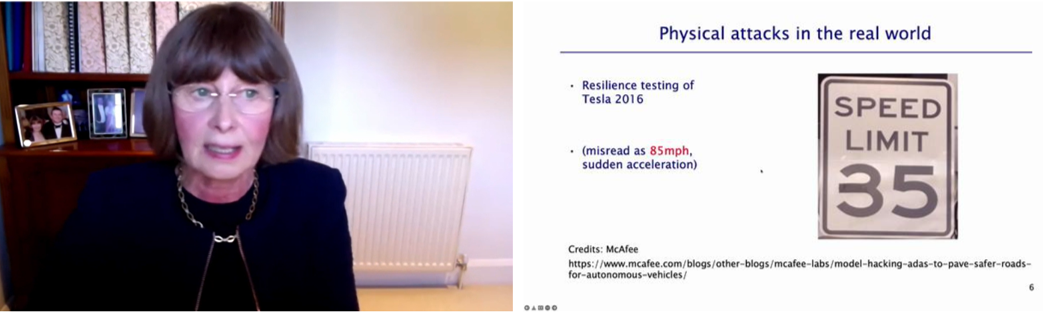

With the rise of deep learning, and its application to a variety of tasks, we need to be increasingly concerned with safety. Marta specifically considered the example of autonomous cars, showing some dashboard camera images where a red traffic light was classified as green after a change to just one pixel. This is a purely digital example, where a change has been made to a digital image. However, there have already been physical attacks in the real world. Due to a slight modification to a traffic sign (see image below), an autonomous vehicle was fooled into reading a 35 miles per hour speed limit as 85 miles per hour. In this case, the incident happened in a test environment. However, there have already been fatal accidents due to autonomous vehicle errors on public roads.

Marta’s research centres on finding ways to ensure that this kind of thing doesn’t happen. She is concerned with researching safety assurance processes, with the ultimate aim of introducing these measures to real-world systems.

Screenshot from Marta Kwiatkowska’s talk.

The community has been aware for a while that deep neural networks can be fooled. They are unstable with respect to adversarial perturbations. Sometimes these perturbations take the form of artificial white noise, which, when added to an image, can cause the network to misclassify that image. The white noise addition is imperceptible to the human eye, but to an unstable network, it can have a big effect. These adversarial perturbations have formed the core of practical attacks and they are also transferable between different architectures.
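To make the idea concrete, here is a minimal sketch of an adversarial perturbation against a toy linear classifier. It is not taken from the talk: the weights, the input and the labels "red" and "green" are invented for illustration. For a linear model the gradient of the score with respect to the input is simply the weight vector, so nudging each feature by a small `epsilon` in the direction of the sign of its weight (the idea behind sign-of-gradient attacks such as the fast gradient sign method) raises the score as much as possible under that budget.

```python
def score(weights, bias, features):
    """Linear score: positive means 'green', otherwise 'red'."""
    return sum(w * x for w, x in zip(weights, features)) + bias


def classify(weights, bias, features):
    return "green" if score(weights, bias, features) > 0 else "red"


def sign(value):
    """Return -1, 0 or 1 according to the sign of the value."""
    return (value > 0) - (value < 0)


def perturb(weights, features, epsilon):
    """Move each feature by at most epsilon in the direction that raises the score."""
    return [x + epsilon * sign(w) for w, x in zip(weights, features)]


# Hypothetical classifier and input, chosen so the input sits near the boundary.
WEIGHTS = [0.5, -0.5, 0.25, -0.25]
BIAS = 0.0
EPSILON = 0.05

original = [0.2, 0.3, 0.1, 0.1]
adversarial = perturb(WEIGHTS, original, EPSILON)

label_before = classify(WEIGHTS, BIAS, original)
label_after = classify(WEIGHTS, BIAS, adversarial)
```

No feature moves by more than `EPSILON`, yet the predicted label changes. Real attacks on deep networks follow the same principle, using the gradient of the network in place of a fixed weight vector.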

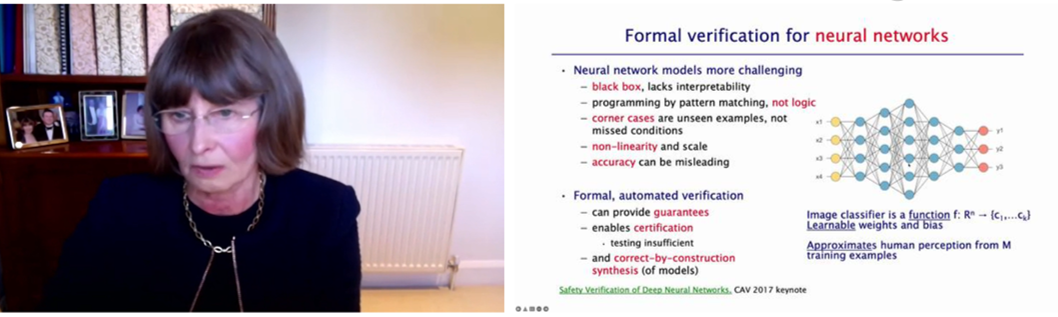

In her presentation, Marta talked about progress she has made over the past five years on software verification for neural networks. Typically in software verification, one builds models from the software, or from the problem specification, and represents these models, often as graphs. Once a rigorous abstraction has been built, it can be used to carry out proofs with tools such as theorem provers or model checkers. One can then synthesize models that are guaranteed to be correct. The aim is to develop techniques that are algorithmic and automated.

Only more recently has this type of modelling been extended to neural networks. Formal verification for neural networks is very challenging, in large part due to their non-linearity and scale. Although the networks basically consist of functions, those functions have a vast number of parameters, which are learned and only approximate some ideal specification.

Screenshot from Marta Kwiatkowska’s talk.

In her work, Marta adapts formal verification techniques for neural networks. One particular area of focus is robustness evaluation with respect to adversarial perturbations. The goal is to provide provable guarantees, for example, that a traffic light is never going to be green under some noise perturbation. Her methods allow for generation of models that are correct by construction.
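One simple way to see what a provable robustness guarantee can look like is interval bound propagation, sketched below for a tiny ReLU network. This is a generic textbook-style technique chosen for illustration, not necessarily one of the methods presented in the talk, and the network weights (`W1`, `B1`, `W2`, `B2`), the input and the convention that a positive output means "green" are all assumptions. The code pushes a box of allowed inputs through the network and checks whether the upper bound on the output stays below zero.

```python
def affine_bounds(weights, biases, lower, upper):
    """Sound bounds on W x + b for every x in the box [lower, upper]."""
    out_lower, out_upper = [], []
    for row, bias in zip(weights, biases):
        lo = hi = bias
        for w, l, u in zip(row, lower, upper):
            if w >= 0:
                lo += w * l
                hi += w * u
            else:
                # A negative weight swaps which end of the interval is extreme.
                lo += w * u
                hi += w * l
        out_lower.append(lo)
        out_upper.append(hi)
    return out_lower, out_upper


def relu_bounds(lower, upper):
    """ReLU is monotone, so it can be applied to each bound directly."""
    return [max(0.0, l) for l in lower], [max(0.0, u) for u in upper]


# Hypothetical two-layer network; output > 0 is read as 'green'.
W1 = [[1.0, -1.0], [0.5, 0.5]]
B1 = [0.0, -0.5]
W2 = [[1.0, -2.0]]
B2 = [-0.5]


def never_green(features, epsilon):
    """True if no input within epsilon (per feature) can yield output > 0."""
    lower = [x - epsilon for x in features]
    upper = [x + epsilon for x in features]
    lower, upper = affine_bounds(W1, B1, lower, upper)
    lower, upper = relu_bounds(lower, upper)
    lower, upper = affine_bounds(W2, B2, lower, upper)
    return upper[0] < 0


certified_small = never_green([0.5, 1.5], 0.1)
certified_large = never_green([0.5, 1.5], 2.0)
```

When `never_green` returns `True`, the guarantee holds for every perturbation in the box, not just the ones that happened to be tested. A result of `False` only means the bounds were too loose to decide; it does not prove that an adversarial input exists.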

In her talk, Marta demonstrated her work through application to areas such as image classification, autonomous control and natural language processing.

Marta has made considerable progress so far, developing a range of verification techniques. However, many challenges remain. The scenarios she is considering are very complex, and the tools still need to be scaled up to meet the requirements of real-world applications. Further understanding is also needed before these tools can be applied confidently and rigorously in the wild. Another avenue for investigation is neuro-symbolic models.

You can find out more about the methods and algorithms behind Marta’s research in her talk, which can be seen in full here.

tags: AAAI, AAAI2022