ΑΙhub.org

#ICML2022 invited talk round-up 1: towards a mathematical theory of ML and using ML for molecular modelling

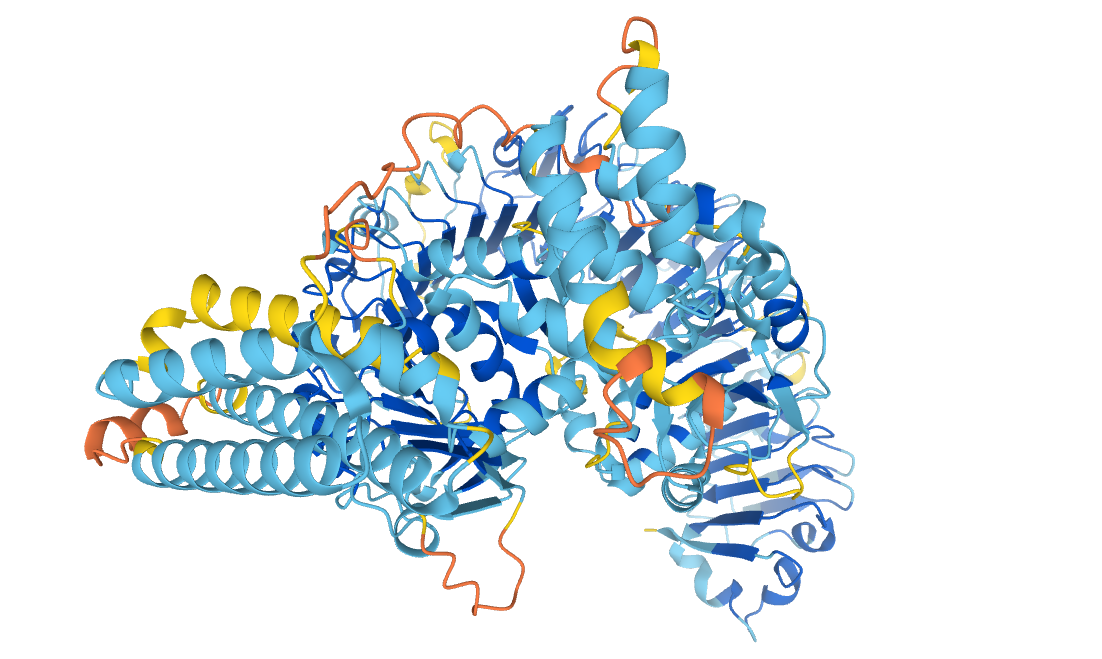

A protein. Reproduced under a CC-BY-4.0 license from the AlphaFold Protein Structure Database.

A protein. Reproduced under a CC-BY-4.0 license from the AlphaFold Protein Structure Database.

In this post, we summarise the first two invited talks from the International Conference on Machine Learning (ICML 2022). These presentations covered two very different topics: mathematical theories of machine learning, and machine learning models for healthcare and the life sciences.

Towards a mathematical theory of machine learning – Weinan E

In his talk, Weinan gave a review of the current status of the field of mathematical theory for neural network-based machine learning. The central theme of his work treats the understanding of high dimensional functions. He began by making the statement that “machine learning is about solving some standard mathematical problems but in very high dimensions”. In this context of mathematics, one could think of supervised learning as approximating a target function using a finite training set. Using the example of image classification, the function we are interested in is the function that maps each image to its category. We know the value of the function on a finite sample (the labels) and the goal is to find an accurate approximation of the function.

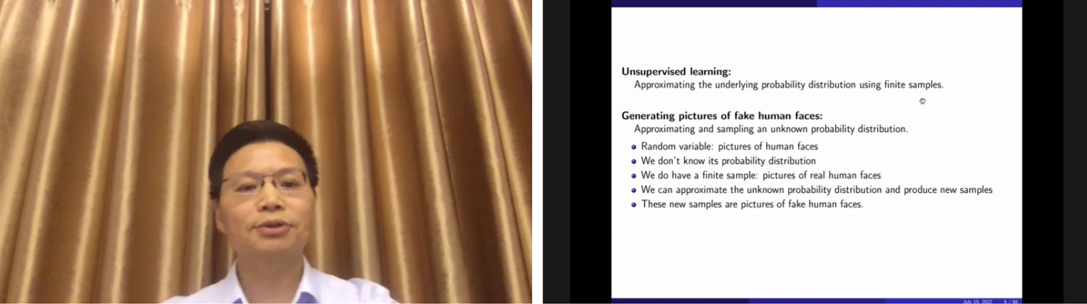

To give another example, in mathematical terms unsupervised learning is a problem of approximating the underlying probability distribution using finite samples. A typical problem in unsupervised learning is the generation of non-existing data, such as the generation of fake human faces. Although the probability distribution isn’t known, we do have a finite sample of real human faces. With that finite sample, we can approximate the unknown probability distribution and produce new samples. In terms of other machine learning methods, reinforcement learning is about solving a Bellman equation for the associated Markov decision process, and time-series learning concerns approximating dynamical systems.

Screenshot from Weinan’s talk.

Screenshot from Weinan’s talk.

Weinan noted that computational mathematicians have been solving these kinds of problems for many years. The only real difference is dimensionality. Taking the CIFAR-10 problem as an example, the function we are interested in is that which maps each image to its category. Each image is 32 x 32 pixels and there are three colour dimensions, so the overall dimensionality of the problem is 3072. This poses a problem for classical approximation theory (where one would typically approximate a function using piecewise linear functions over a mesh) because, as the dimensionality of the problem grows, the computational cost increases exponentially. This is true for all classical algorithms that approximate functions using polynomials. Neural networks can do this kind of problem much more efficiently.

In his talk, Weinan focused on a number of proofs which treated different aspects of neural network-based algorithms, including errors in supervised learning, random feature models, approximation theory for two-layer networks, gradient-based training, and approximating probability distributions in unsupervised learning.

He concluded by saying that, although there are many things still to be understood, there is a reasonable picture of both approximation theory in high dimensions, and global minimum selection in later stages of training. He stressed that this work is not just about proving theorems – other methodologies are needed, such as careful design of numerical experiments and asymptotic analysis.

You can see the slides from the talk here.

Solving the right problems: Making ML models relevant to healthcare and the life sciences – Regina Barzilay

Regina began by giving a couple of research highlights from her group. The first concerned research on building models for predicting the future risk of cancers. In this work, the team constructed a model that can look at an image from a scan and predict that, within five years, for example, the patient is likely to test positive for a particular cancer. If the trajectory of the patient is known, then they could receive more screenings and be monitored more carefully. They have already built models for lung cancer and breast cancer, and they are working on a model for prostate cancer. The model is already used in some hospitals and the team are starting clinical trials to test whether it can ready help reduce mortality rates in practice.

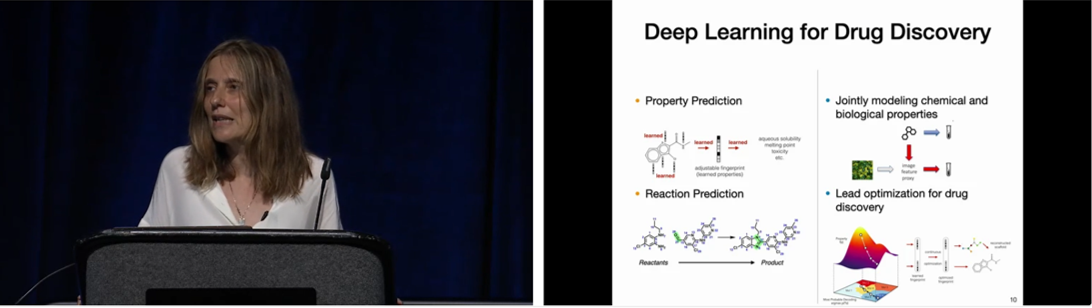

The second highlight is in the field of antibiotic discovery. Most of the antibiotics that we use today were designed decades ago, and lack of new antibiotics stems from the fact that it is not profitable for pharmaceutical companies to develop them. This problem motivated work on a model that led to the discovery of Halicin, a new antibiotic. Regina and her co-authors trained a graph neural network model on 2,500 molecules, which they had experimentally tested for effectiveness against E.coli. For the purposes of the model this gave them a 2d representation of the molecule and a number. This number reflected the inhibitory capacity of the molecule in question. They used the trained model to computationally screen 107 molecules. From this huge number, they were left with 102 candidates to test empirically, then just one candidate to test on animals. You can read more about this work in the Cell paper A Deep Learning Approach to Antibiotic Discovery.

Screenshot from Regina’s talk.

Screenshot from Regina’s talk.

Regina noted that since 2015, this graph algorithm method has been the dominant one for modelling protein prediction. However, progress in terms of performance has stalled. She urged the audience that it’s time to look beyond this method and explore other possibilities. She and her team have been investigating improvements to the methodology. Augmenting the information already in use – the 2d representation of the molecule and the number (which reflects the inhibitory capacity of the molecule) – they add some information about the target. They have applied this method to predicting synergetic combinations of molecules. Say, for example, you have two different molecules that both kill a certain percentage of a virus. A synergetic combination occurs when you use these two molecules in tandem and they kill a greater percentage of the virus than if you added the two separately.

The reason that a new methodology is needed is that there is not much data on these synergetic combinations, perhaps only data on 200 combinations. By adding biological insights, such as which targets are involved, the predictions can be improved.

Regina also talked about strategies for splitting data into training and test sets, and the implications that this has for generalization. The accuracy of the predictions is highly dependent on the method used to split the data. Regina proposed that the field comes up with a standard method for doing this splitting algorithmically based on the properties of the data, and that the testing scenario used should be tailored based on the proposed application.

Regina concluded her talk with a call to the community to focus on the right problems, specifically those that could have a significant positive impact. There is the potential to make the same progress in the life sciences as has been achieved so far with, for example, natural language processing.

You can find out more about Regina’s work here.

tags: ICML, ICML2022