AIhub.org

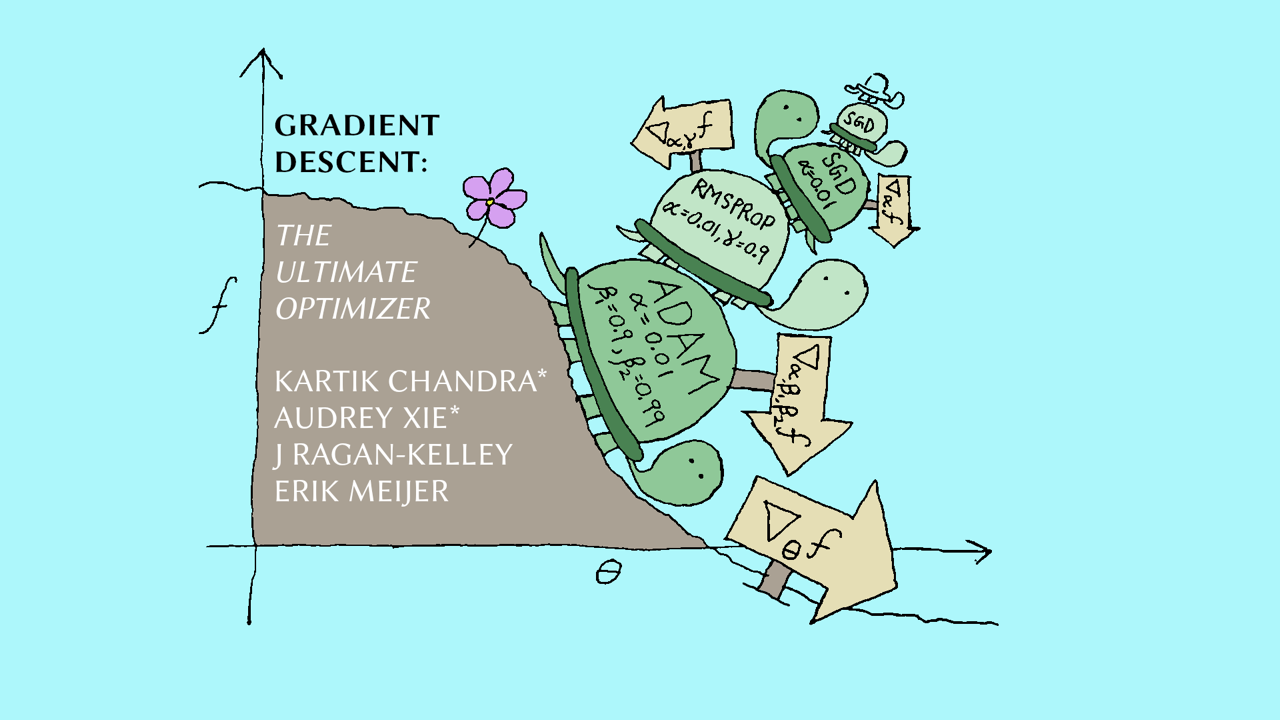

#NeurIPS2022 outstanding paper – Gradient descent: the ultimate optimizer

Kartik Chandra, Audrey Xie, Jonathan Ragan-Kelley and Erik Meijer won a NeurIPS 2022 outstanding paper award for their work *Gradient descent: the ultimate optimizer*. Here, they tell us more about their work, the methodology and their main findings.

What is the topic of the research in your paper?

Our paper studies the classic problem of “hyperparameter optimization”.

Nearly all of today’s machine learning algorithms use a process called “stochastic gradient descent” (SGD) to train neural networks. SGD requires users to pick certain settings, or “hyperparameters,” before running it. Just like baking a cake requires you to pick an oven temperature and cooking time, running SGD requires you to pick hyperparameters like the “step size” and “momentum.” And just like in baking, the best settings can be hard to find, even after a lot of trial and error.

Our paper shows how SGD can *itself* be used to intelligently find good SGD hyperparameters. This is not a new idea, but our method is highly practical and easier to use than existing methods, and it generalizes straightforwardly to many popular SGD variants.
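To make the idea concrete, here is a minimal sketch of gradient descent tuning its own step size. It is not the authors' implementation: the toy loss, the function names `loss_grad` and `sgd_with_learned_step_size`, and the parameter names `alpha` (step size) and `kappa` (the step size used to update `alpha`) are all assumptions chosen for illustration, and the gradient with respect to `alpha` is derived by hand rather than by automatic differentiation.

```python
def loss_grad(w):
    """Gradient of a hypothetical toy loss f(w) = 0.5 * (w - 1.0)**2."""
    return w - 1.0


def sgd_with_learned_step_size(w=0.0, alpha=0.01, kappa=0.01, steps=100):
    """Gradient descent on w where the step size alpha is itself
    updated by gradient descent (with its own step size kappa)."""
    prev_grad = 0.0  # no previous update exists before the first step
    for _ in range(steps):
        grad = loss_grad(w)
        # The current w came from: w = w_prev - alpha * prev_grad.
        # By the chain rule, d f(w) / d alpha = grad * (-prev_grad).
        alpha_grad = -grad * prev_grad
        alpha -= kappa * alpha_grad  # gradient step on the hyperparameter
        w -= alpha * grad            # ordinary gradient step on the weight
        prev_grad = grad
    return w, alpha


if __name__ == "__main__":
    print("learned step size:", sgd_with_learned_step_size())
    print("fixed step size:  ", sgd_with_learned_step_size(kappa=0.0))
```

Setting `kappa=0.0` switches the hyperparameter update off, which gives a plain fixed-step baseline to compare against on this toy problem.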

We also address the natural follow-up question: “Don’t you still need to pick hyperparameters for the SGD that picks the hyperparameters?” Our answer is that we can keep “stacking” SGD recursively, with each new level training the previous level’s hyperparameters. As the SGD tower grows taller, the top-level human-picked hyperparameters matter less and less.
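The stacking idea can be sketched on a one-dimensional toy problem. This is an illustration under stated assumptions, not the paper's code: the toy loss, the function name `train_with_tower`, and the choice to start every step size at the same `init_step` are hypothetical, and the gradients at each level are hand-derived with the chain rule instead of being produced by automatic differentiation.

```python
def loss_grad(w):
    """Gradient of a hypothetical toy loss f(w) = 0.5 * (w - 1.0)**2."""
    return w - 1.0


def train_with_tower(height, init_step, w=0.0, steps=100):
    """Stack `height` gradient-descent optimizers on a scalar weight.

    values[0] is the weight. For k >= 1, values[k] is the step size used
    to update values[k - 1]. Only the topmost entry, values[height],
    stays fixed at the human-picked value.
    """
    values = [w] + [init_step] * height
    prev_grads = [0.0] * height  # last step's gradient at each trained level
    for _ in range(steps):
        grads = [loss_grad(values[0])]
        for k in range(1, height):
            # values[k - 1] was last updated as
            #   values[k - 1] -= values[k] * prev_grads[k - 1],
            # so d loss / d values[k] = grads[k - 1] * (-prev_grads[k - 1]).
            grads.append(-grads[k - 1] * prev_grads[k - 1])
        # Update top-down so each level uses its freshly updated step size.
        for k in reversed(range(height)):
            values[k] -= values[k + 1] * grads[k]
        prev_grads = grads
    return values


if __name__ == "__main__":
    for height in (1, 2, 3):
        final = train_with_tower(height, init_step=0.01)
        print(height, "level(s): distance from optimum =", abs(final[0] - 1.0))
```

With `height=1` this reduces to plain gradient descent with a fixed step size. Each extra level turns the previously fixed step size into a trained quantity and pushes the human-picked value one level higher. The returned list holds the final weight followed by the step sizes, with the fixed top-level value last.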

Could you tell us about the implications of your research and why it is an interesting area for study?

Our work offers a way to dramatically simplify one of the most frustrating tasks in machine learning research: picking hyperparameters. Because our method replaces the costly trial-and-error required to find good hyperparameters, we also hope it can help cut down on the amount of computation and energy needed to train neural networks – and thus the ecological impact of AI research.

Could you explain your methodology?

Our method works by making a subtle modification to the famous “backpropagation” algorithm, so that it can train not only the neural network, but also the SGD hyperparameters operating on that neural network. This idea lends itself to an elegant implementation that lets us “eat our own tail” and repeatedly stack more and more SGDs on top of each other.
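For plain gradient descent with a single step size, the extra gradient that such a modified backpropagation has to produce can also be written out by hand. The following is a sketch in our own notation, which may differ from the paper's: $f$ is the loss, $w_t$ the weights, $\alpha_t$ the step size, and $\kappa$ the step size used to update $\alpha$. If the weights are updated as

$$
w_{t+1} = w_t - \alpha_t \, \nabla f(w_t),
$$

then the chain rule gives the gradient of the next loss with respect to the step size:

$$
\frac{\partial f(w_{t+1})}{\partial \alpha_t}
= \nabla f(w_{t+1})^{\top} \, \frac{\partial w_{t+1}}{\partial \alpha_t}
= -\, \nabla f(w_{t+1})^{\top} \, \nabla f(w_t).
$$

A gradient descent step on the step size itself is therefore

$$
\alpha_{t+1} = \alpha_t - \kappa \, \frac{\partial f(w_{t+1})}{\partial \alpha_t}
= \alpha_t + \kappa \, \nabla f(w_{t+1})^{\top} \, \nabla f(w_t).
$$

Intuitively, the step size grows while consecutive gradients point in similar directions and shrinks when they point in opposing directions. Letting automatic differentiation compute this quantity, instead of deriving it manually, is what allows the same recipe to carry over to other SGD variants and to additional stacked levels.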

What were your main findings?

Our main finding was that our method recovers good hyperparameters across a wide range of tasks and SGD variants. We tested it on several benchmarks, including popular neural network architectures used in computer vision (CV) and natural language processing (NLP). We observed that even if we picked “bad” initial hyperparameters, our method would recover and perform about as well as it did with “good” hyperparameters. This robustness increased as we made the SGD stacks taller.

One particularly striking result was that our method could intelligently vary hyperparameters over time, in a way that closely matched “schedules” designed by expert ML researchers.

What further work are you planning in this area?

We are now working on extending this method to work for hyperparameters used in other kinds of AI algorithms, such as in robotics.

About the authors

Kartik Chandra is a PhD student at MIT. He is supported by the Hertz Foundation, the Paul & Daisy Soros Fellowship for New Americans, and the National Science Foundation.

Audrey Xie is a third-year undergraduate student at MIT studying computer science and mathematics.

Jonathan Ragan-Kelley is the Esther and Harold E. Edgerton Assistant Professor of Electrical Engineering & Computer Science at MIT.

Erik Meijer is a Dutch computer scientist best known for his work on Haskell, C#, Visual Basic, and Dart, as well as for his contributions to LINQ and the Reactive Framework (Rx).
