AIhub.org

Interview with Leanne Nortje: Visually-grounded few-shot word learning

In their work *Visually grounded few-shot word learning in low-resource settings*, Leanne Nortje, Dan Oneata and Herman Kamper propose a visually-grounded speech model that learns new words and their visual depictions. In this interview, Leanne tells us more about their methodology and how it could be beneficial for low-resource languages.

What is the topic of the research in your paper?

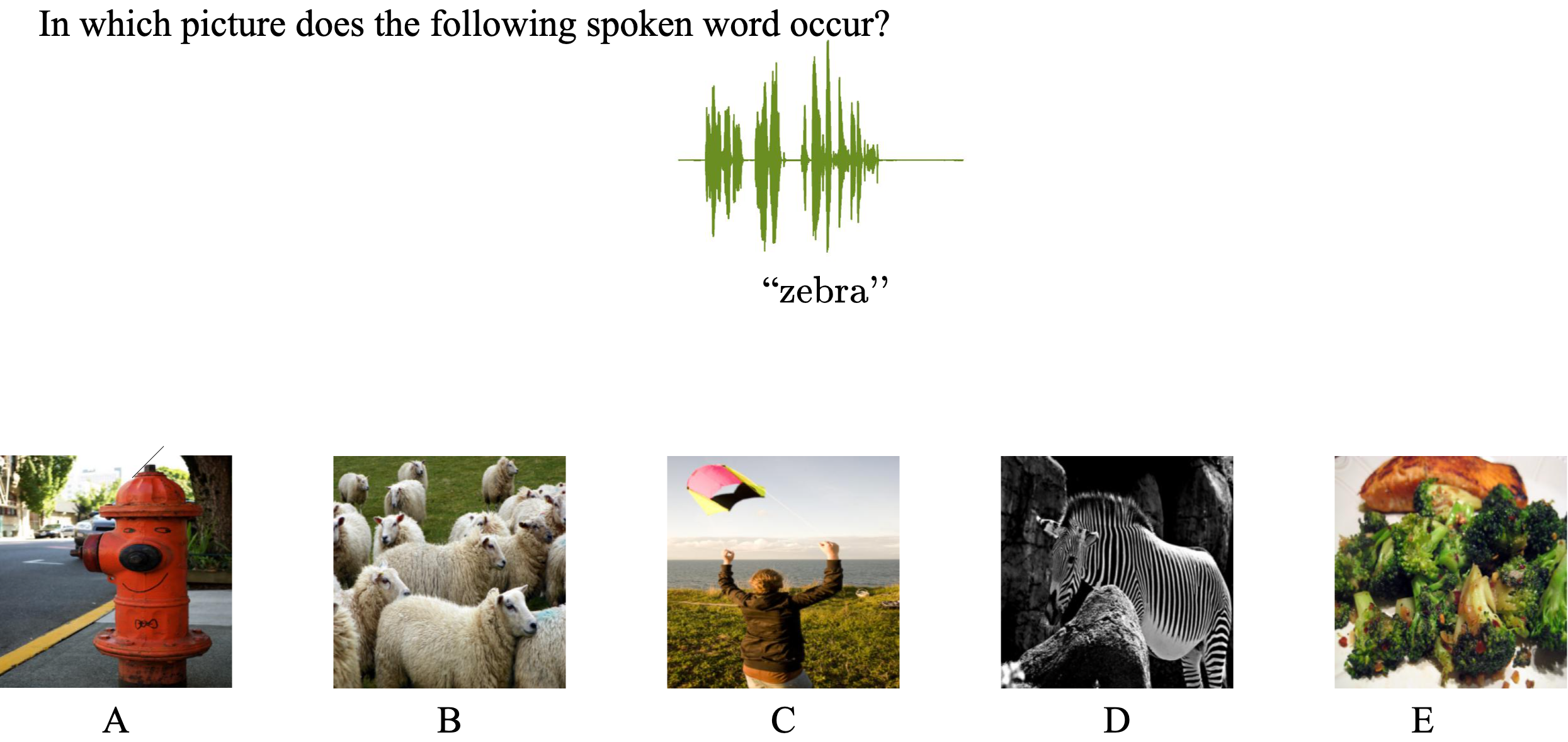

We look into using vision as a form of weakly transcribing audio. This will be particularly helpful for low-resource languages where, in extreme cases, such languages have no written form. We specifically consider the task of retrieving relevant images for a given spoken word by learning from only a few image-word pairs, i.e. to do multimodal few-shot word learning. The aim is to give a model a set of spoken word examples where each word is paired with a corresponding image. Each paired word-image example contains a novel (new) class. After using only these examples to learn the few-shot word classes, the model is given another set of images – a matching set containing an image for each class. When we query the model with a spoken instance of one of these novel classes, the model should identify which image in the matching image set matches the word. For instance, imagine showing a robot images of different objects (zebra, kite, sheep, etc.) while saying the word for each picture. After seeing this small set of examples, we ask the robot to find a new image corresponding to the word “zebra”.
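
The matching step described above can be illustrated with a minimal sketch. It assumes that a trained model has already mapped the spoken query and each image in the matching set to embedding vectors in a shared space. The vectors, the names `cosine` and `match_query`, and the use of cosine similarity are illustrative assumptions, not details taken from the paper.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def match_query(query_embedding, matching_set):
    """Return the name of the matching-set image most similar to the spoken query."""
    return max(matching_set, key=lambda name: cosine(query_embedding, matching_set[name]))


# Hypothetical embeddings: one unseen image per novel class in the matching set.
matching_set = {
    "zebra_image": [0.9, 0.1, 0.0],
    "kite_image": [0.1, 0.8, 0.2],
    "sheep_image": [0.0, 0.2, 0.9],
}

# Hypothetical embedding of a new spoken instance of the word "zebra".
spoken_zebra = [0.8, 0.2, 0.1]

best = match_query(spoken_zebra, matching_set)
print(best)
```

In this toy setup the query lies closest to the zebra image, so that image is the one retrieved. In practice the difficulty lies in learning embeddings of this quality from only a few word-image pairs.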

Could you tell us about the implications of your research and why it is an interesting area for study?

Our research has two main impacts. The first is the development of speech systems that cater to low-resourced languages. Current speech systems are trained on large corpora of transcribed speech, which are expensive and time-consuming to collect. This research aims to develop techniques that enable researchers to train speech systems from very few labelled data examples. Secondly, these models are inspired by how children learn languages. Therefore, we can probe the models to gain insight into the cognition and learning dynamics of children.

Could you explain your methodology?

Intuitively, only a few examples per word class, e.g. five, will not be sufficient to learn a model capable of identifying the visual depictions of a spoken word. Therefore, we use the given word-image example pairs to mine new unsupervised word-image training pairs from large collections of unlabelled speech and images. In terms of architecture, we use a vision branch and an audio branch, which are connected by a word-to-image attention mechanism that determines the similarity between a spoken word and an image.
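
To make the two ideas above more concrete, here is a rough sketch under simplifying assumptions. It treats a spoken word as a single embedding vector and an image as a list of region embeddings, scores them with a softmax-weighted attention over regions, and mines pairs by ranking unlabelled items by dot-product similarity to a few-shot example. The functions `attention_similarity` and `mine_pairs`, the data, and the scoring rules are hypothetical stand-ins rather than the authors' implementation.

```python
import math


def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def attention_similarity(word_vec, region_vecs):
    """Score a spoken word against an image given as a list of region embeddings.

    Each region gets a dot-product score with the word. A softmax over those
    scores gives attention weights, and the weighted sum of the scores is
    returned as the word-to-image similarity.
    """
    scores = [dot(word_vec, region) for region in region_vecs]
    peak = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return sum((e / total) * s for e, s in zip(exps, scores))


def mine_pairs(support_vec, unlabelled, top_n):
    """Return the ids of the top_n unlabelled items closest to a few-shot example."""
    ranked = sorted(
        unlabelled,
        key=lambda item_id: dot(support_vec, unlabelled[item_id]),
        reverse=True,
    )
    return ranked[:top_n]


# Hypothetical word embedding and two images, each with two regions.
word = [1.0, 0.0]
image_with_object = [[0.9, 0.1], [0.0, 1.0]]
image_without_object = [[0.1, 0.9], [0.0, 1.0]]

score_with = attention_similarity(word, image_with_object)
score_without = attention_similarity(word, image_without_object)

# Hypothetical unlabelled collection, mined with one few-shot example.
unlabelled = {"clip_a": [0.9, 0.1], "clip_b": [0.1, 0.9], "clip_c": [0.7, 0.3]}
mined = mine_pairs(word, unlabelled, top_n=2)
print(score_with > score_without, mined)
```

The attention weights let a single matching region dominate the score, so an image can match a word even when the object occupies only part of the frame. The mined items would then serve as additional, noisy training pairs for the few-shot classes.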

What were your main findings?

In scenarios with fewer shots, where we have only a small number of examples per class to learn from, we outperform existing approaches. The mined word-image pairs are essential to this performance boost. When retrieving multiple images that contain the visual depiction of a spoken word, our scores remain consistent across varying numbers of examples per class.

What further work are you planning in this area?

For future work, we are planning to extend the number of novel classes we can learn using this approach. We also plan to apply this model to an actual low-resource language: Yoruba.

About Leanne Nortje


I am currently doing a PhD which combines speech processing and computer vision in weakly supervised settings by using small amounts of labelled data. The inspiration behind my models is how efficiently children learn language from very few examples. If systems can learn as rapidly, we could develop less data-dependent systems. In 2018 I received my BEng Electrical and Electronic Engineering degree cum laude from Stellenbosch University. Thereafter, I did my MEng Electronic Engineering degree from 2019 to 2020. I passed my master's cum laude and received the Rector’s Award for top master's student in Engineering.

Find out more

- The paper: Visually grounded few-shot word learning in low-resource settings, Leanne Nortje, Dan Oneata, Herman Kamper.

- The project website