ΑΙhub.org

#IJCAI2023 distinguished paper: Interview with Maurice Funk – knowledge bases and querying

Maurice Funk, and co-authors Balder ten Cate, Jean Christoph Jung and Carsten Lutz, won a distinguished paper award at the 32nd International Joint Conference on Artificial Intelligence (IJCAI) for their work SAT-Based PAC Learning of Description Logic Concepts. In this interview, Maurice tells us more about knowledge bases and querying, why this is an interesting area for study, and their methodology and results.

What is the topic of the research in your paper?

Our research is in the area of knowledge representation, or more specifically knowledge bases and querying. A knowledge base contains facts like a traditional database e.g. “Bob is a fish” and “Amelia is a dog”, but also background knowledge formulated in some formal language e.g. “every fish is an animal”, “every dog is an animal”. Querying a knowledge base then returns all answers that can be derived from the facts and the background knowledge: asking for all animals yields both “Amelia” and “Bob”. In our work we focus on description logic knowledge bases, where the background knowledge is formulated in some description logic and concepts formulated in some description logic are used as queries.

But in this paper, we consider the “reverse” task to querying a knowledge base: given as input a knowledge base, some answers (positive examples) and some non-answers (negative examples), compute a query that yields all the positive examples as answers, but none of the negative examples. We call such a query a fitting query, and an algorithm that computes this a fitting algorithm. This is sometimes referred to as learning a query from examples. For example, if we use “Amelia” as a positive example and “Bob” as a negative example, then the concept “Dog” is a fitting query.

In general, there are many queries that fit given examples. As usual in learning tasks, not every fitting query is desirable, some might overfit the positive examples and some might overfit the negative examples. In our paper, we want to find fitting algorithms that do not overfit but generalize to unseen examples and also do not need a lot of examples to do so.

Could you tell us about the implications of your research and why it is an interesting area for study?

Knowledge bases and the related knowledge graphs are key to represent and organize large amounts of knowledge. But expanding and maintaining them is a time consuming process that requires both logical expertise as well as domain knowledge. That is why there is great interest in learning based approaches to support these tasks and learning queries from examples is one of them.

There are a lot of implementations of learning queries from examples both for databases and knowledge bases using different algorithms, but as far as we are aware, no existing implementation comes with guarantees about its ability to generalize to unseen examples.

Could you explain your methodology?

We first formalize what it means for a query to “generalize to unseen examples” by adapting Valiant’s framework of probably approximately correct (PAC) learning to our setting. This also formalizes “sample-efficiency”: the number of examples needed to generalize to unseen examples needs to be bounded by a polynomial. So, we want our algorithms for learning queries from examples to be sample-efficient PAC learning algorithms.

We propose to use bounded fitting algorithms, inspired by approaches such as bounded model checking. Those are fitting algorithms that search for a query that fits the examples by size. So they first search for a fitting query of size 1, then of size 2, and so on. This means that bounded fitting algorithms always find a fitting query of the smallest size. We apply a classic result from computational learning theory to our setting and show that every fitting algorithm that computes fitting queries of approximately smallest size (Occam algorithm) is a sample-efficient PAC learning algorithm. Hence, all bounded fitting algorithms are sample-efficient PAC learning algorithms.

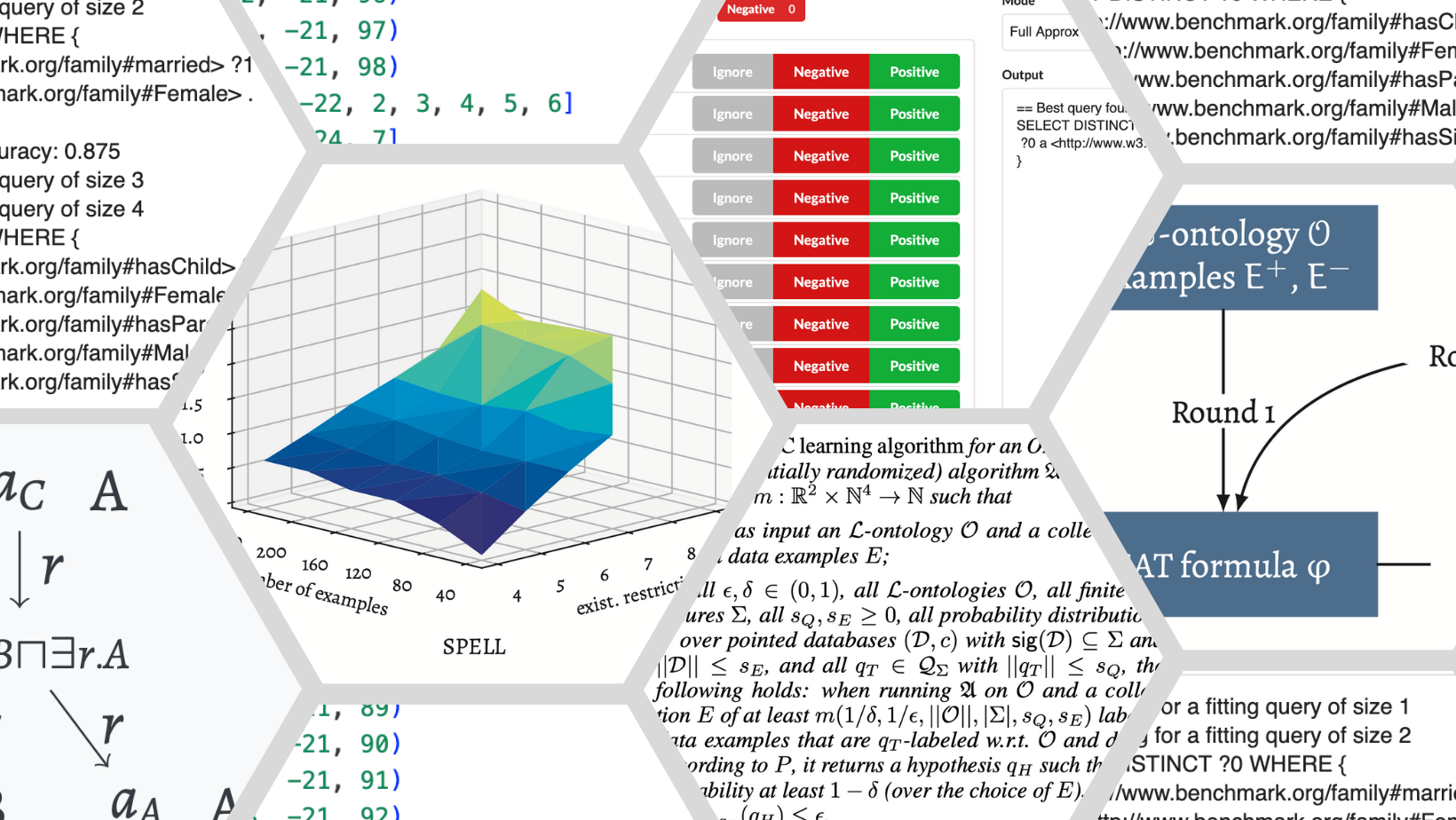

We implemented a bounded fitting algorithm for knowledge bases and queries formulated in the description logic ![]() (core of the OWL 2

(core of the OWL 2 ![]() profile language) and called our implementation SPELL (SAT-based PAC

profile language) and called our implementation SPELL (SAT-based PAC ![]() concept Learner). Since computing fitting queries of bounded size is a hard problem, our implementation uses a SAT-solver to achieve good performance. We then compared SPELL against another implementation of learning

concept Learner). Since computing fitting queries of bounded size is a hard problem, our implementation uses a SAT-solver to achieve good performance. We then compared SPELL against another implementation of learning ![]() queries from examples, the ELTL component of the DL-Learner tool, using both artificial benchmarks and benchmarks constructed from real knowledge bases.

queries from examples, the ELTL component of the DL-Learner tool, using both artificial benchmarks and benchmarks constructed from real knowledge bases.

What were your main findings?

Our main result is that bounded fitting algorithms are always sample-efficient PAC learning algorithms, and hence our implementation SPELL is also a sample-efficient PAC learning algorithm. Additionally we also show that several other algorithms that do not produce a small fitting query are not sample-efficient PAC learning algorithms, that means that there are cases where they require an exponential number of examples to generalize to unseen examples. These algorithms are algorithms that produce certain logically interesting fitting queries: queries that imply all fitting queries, queries that are implied by all fitting queries and queries of small quantifier depth. These findings suggest that optimization criteria other than size are in conflict with generalization.

In our experiments we found that SPELL performs very well. In benchmarks constructed from the knowledge base YAGO 4, it often takes only 1/10th of the time that ELTL takes to compute an ![]() query that fits the examples. In further artificially constructed benchmarks, we were able to highlight different strengths and weaknesses of SPELL and ELTL, caused by the different implemented algorithms (ELTL is based on refinement operators).

query that fits the examples. In further artificially constructed benchmarks, we were able to highlight different strengths and weaknesses of SPELL and ELTL, caused by the different implemented algorithms (ELTL is based on refinement operators).

Finally, we did some preliminary experiments on the ability of SPELL and ELTL to generalize to unseen examples. Both implementations were able to generalize well from few examples with no significant difference. More benchmarks and experiments in this area are needed, but are difficult to construct.

What further work are you planning in this area?

Currently, SPELL is limited to queries written as concepts in the description logic ![]() . In the future we want to extend our implementation to more expressive query languages, like the description logics

. In the future we want to extend our implementation to more expressive query languages, like the description logics ![]() ,

, ![]() or

or ![]() . While the general idea of this extension is straightforward, some of the ideas we used to get good performance for

. While the general idea of this extension is straightforward, some of the ideas we used to get good performance for ![]() stop working when moving to more expressive query languages.

stop working when moving to more expressive query languages.

Furthermore, the framework of PAC learning assumes that there always is a fitting query that perfectly describes the examples. In reality this might not be the case as some examples may be labeled erroneously or the query language might be not expressive enough to separate the positive from the negative examples. To capture this, our algorithms need to admit non-perfect fitting queries, and new questions about what generalization means appear.

About Maurice

|

Maurice Funk is a PhD student at Leipzig University advised by Carsten Lutz. He has a M.Sc. in computer science from the University of Bremen. His research is concerned with exact learning under background knowledge, mostly focused on description logics. |

Read the work in full

SAT-Based PAC Learning of Description Logic Concepts, Balder ten Cate, Maurice Funk, Jean Christoph Jung and Carsten Lutz.

tags: IJCAI2023