ΑΙhub.org

Is compute the binding constraint on AI research? Interview with Rebecca Gelles and Ronnie Kinoshita

Rebecca Gelles and Ronnie Kinoshita

Rebecca Gelles and Ronnie Kinoshita

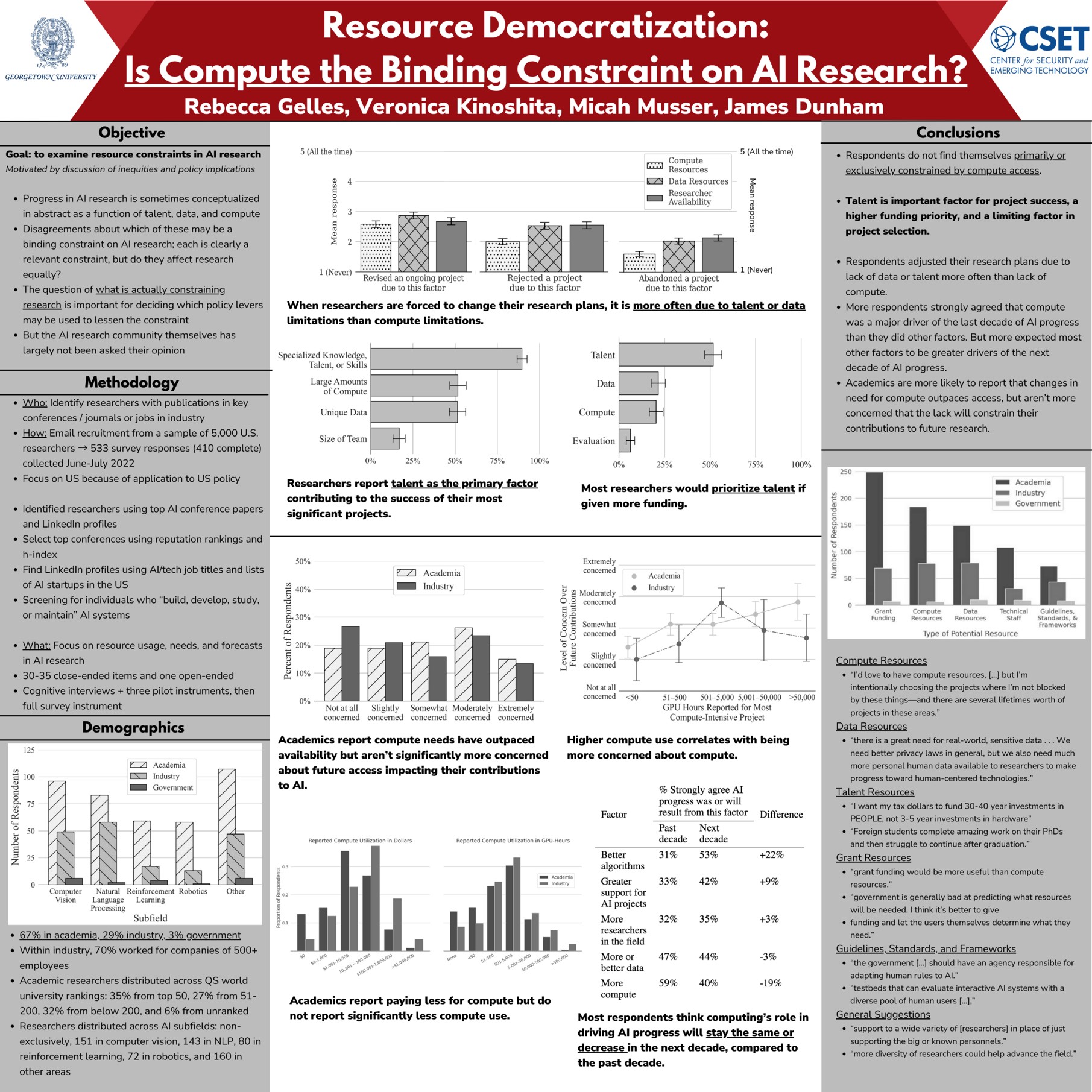

In their work Resource Democratization: Is Compute the Binding Constraint on AI Research?, presented at AAAI 2024, Rebecca Gelles, Veronica Kinoshita, Micah Musser and James Dunham investigate researchers’ access to compute and the impact this has on their work. In this interview, Rebecca and Ronnie tell us about what inspired their study, their methodology and some of their main findings.

What is the subject of your study and what inspired you to investigate this?

We wanted to engage directly with AI researchers to understand their resource constraints when deciding what types of research to dedicate their time to. We also wanted to dig deeper and try to understand how resource constraints play out across the AI research community – particularly looking at divides between industry and academia, a popular topic of discussions about AI resource constraints. The reason we were interested in this question is that there’s a lot of discourse, particularly from the policy community, about the democratization of AI, addressing inequities in the field, and the fear that progress in AI may be held back by resource constraints. These kinds of discussions in policy are what have led to recent efforts like the United States’ pilot National AI Research Resource, which is part of President Biden’s October 2023 AI executive order. And these discussions are happening, talking about computational constraints, data constraints, or talent constraints, across governments around the world. But most of those conversations have thus far been dominated by policymakers rather than day-to-day AI researchers participating in shaping the field. We wanted to ask actual AI researchers about their lived experiences to get a better understanding of what the on-the-ground constraints actually looked like and to bring some of their expertise into these discussions.

How did you go about designing your survey?

The first thing to do when designing any survey is to figure out who you should ask, i.e., who your population is going to be. The types of people and what their experiences are affect both what kinds of questions you ask and how you actually identify and contact the set of respondents within that population to answer your questions. In this case, our focus for the survey was specifically on a U.S.-based pool of AI researchers – that is, people who are directly involved in studying and working on AI. That means the study may not cover everyone whose work is touched by AI – for example, those who use AI at work in other fields or who adapt production AI code occasionally to help with their analysis. We decided on this focus because our belief was that the AI progress that policymakers were most concerned about was most likely to be carried out by this core group of AI researchers.

After identifying our population, we then turned to the design of the questionnaire. We developed a series of questions to ask respondents about a variety of topics related to their resource usage, constraints, and projects. We used standard survey methods to ensure quality research design and tested each of our research questions in multiple ways and minimized respondent bias while keeping the survey accessible enough for respondents to remain interested in completing it. Furthermore, we then tested the survey with a series of testing interviews with subject-matter experts before sending out a pilot version to measure the response rate and make any final adjustments. This whole process went through our university’s IRB board for review, as we were doing research with humans, and we wanted to make sure that everything was done ethically and thoughtfully.

Tell us about how you carried out the survey.

To solicit participation from respondents in our population, we compiled lists of top conferences and journals in both AI and major AI subfields, e.g., natural language processing, machine learning, computer vision, and robotics. We selected these conferences and journals using a combination of reputation rankings (based on csrankings.org) and H-index. We then collected author names and contact information from these conferences and journals over the past five years whose primary affiliation was with a U.S. organization. We scoped our participant pool this way as we were most interested in the U.S. policy implications.

This initial list produced over 27,000 email addresses, with both academic and industry respondents included. As respondents from academia were overrepresented in this sample, we were concerned about our ability to capture the voice of industry researchers, who were less likely to publish in these venues. For this reason, we supplemented it with two sets of LinkedIn lists. For the first, we identified a list of AI startups spread across the United States using the CBInsights report “The United States of Artificial Intelligence Startups.” Then, we limited it to anyone at those companies with a technical job title. This gave us a list of primarily startup employees. For the other, we searched using a more specific list of AI-only job titles at any job located in the United States, in order to increase our industry representation. We then ran 5,000 of these results through the email-finding service RocketReach, and added the resulting emails to our sample.

What were your main findings, and were you particularly interested or surprised by any of these?

Our main findings were the following:

- Researchers report talent as the primary factor contributing to the success of their most significant projects, and most researchers would prioritize talent if given more funding.

- When researchers are forced to change their research plans, it is more often due to talent or data limitations than compute limitations.

- Most respondents think computing’s role in driving AI progress will stay the same or decrease in the next decade when compared to the past decade.

- Academics report paying less for compute but do not report significantly less compute use.

- Academics report compute needs have outpaced availability but are not significantly more concerned about future access impacting their contributions to AI.

- Higher compute use correlates with being more concerned about compute.

We were quite surprised by many of our findings, although they make more sense in retrospect, especially after talking to many researchers afterward, and reading comments from researchers in our open-ended follow-up questions. Due to popular discourse on compute importance, we expected researchers to prioritize compute needs above all else, and to therefore be very concerned about a growing lack of compute resources. That’s not, universally, what we found.

Ultimately, we think the key to our findings is that academic researchers who don’t have as much compute have already figured out how to work without it, and so they aren’t as concerned as people expect them to be. They’ve chosen subfields and projects that don’t require as many computational resources and they’re already settled into those. This doesn’t mean the next set of researchers wouldn’t benefit from greater compute or that providing more compute wouldn’t enable solving problems that we can’t now; it just means the overall level of widespread concern in the academic community is probably much lower than policymakers believe it to be.

Looking specifically at the proposed National AI Research Resource, what did your survey reveal here?

What we found overall is that researchers seemed very interested in the potential of a National AI Research Resource, but they were more hesitant to use it in practice, expressing concerns about how it would be implemented. Most researchers’ ideal for such a resource was that it simply provide more grant funding, and the frequent explanation we got for this was that they just wanted to have access to money to use for their work however they saw fit without dealing with bureaucracy. There was interest in computational resources, but also a lot of concern about obsolescence, or barriers to entry, or technical complications, and similar perspectives on data resources and talent resources. Many researchers would also clearly appreciate more guidance on what they should and shouldn’t be doing in regards to AI and how to handle AI safety and harms issues.

What were the main conclusions you drew from your study?

If we want to reduce the resource constraints in the AI community, it’s not enough to provide some large government computational resources with limited technical support and call it a day. Additional technical resources may help some researchers, but certainly not all, and even then those resources need to be maintained, updated, and supported. In the meantime, more should be done to address some of the other challenges beyond compute access that researchers face, and policymakers should consider what those challenges are, and whether they’re related to access to data, talent, equity, or overall funding.

Read the work in full

Resource Democratization: Is Compute the Binding Constraint on AI Research?, Rebecca Gelles, Veronica Kinoshita, Micah Musser, James Dunham.

About the authors

|

Ronnie Kinoshita is currently the Deputy Director of Data Science and Research at Georgetown’s Center for Security and Emerging Technology (CSET). In her previous role as Survey Research Analyst at CSET, she supported various lines of research across the organization. Ronnie holds a M.S. in Industrial-Organizational Psychology from the University of Tennessee at Chattanooga. She received her B.A. from Hendrix College in Psychology and Chemistry. |

|

Rebecca Gelles is a Data Scientist at Georgetown’s Center for Security and Emerging Technology. Previously, she spent almost seven years in government, where she graduated from the Director’s Summer Program (DSP) and the Cryptanalytic Computer Network Operations Development Program (C2DP). Rebecca holds a B.A. in Computer Science and Linguistics from Carleton College and a M.S. in Computer Science from University of Maryland College Park, where her research focused on how the media influences users’ computer security postures and on artificial intelligence techniques for defending IoT devices from cyber attacks. |

tags: AAAI, AAAI2024