AIhub.org

Interview with Yuan Yang: working at the intersection of AI and cognitive science

In this interview series, we’re meeting some of the AAAI/SIGAI Doctoral Consortium participants to find out more about their research. The Doctoral Consortium provides an opportunity for a group of PhD students to discuss and explore their research interests and career objectives in an interdisciplinary workshop together with a panel of established researchers. In this latest interview, we hear from Yuan Yang, who completed his PhD in May. This autumn, Yuan will be joining the College of Information, Mechanical and Electrical Engineering, Shanghai Normal University as an associate professor.

Tell us a bit about your PhD – where did you study, and what was the topic of your research?

From August 2018 to May 2024, I did my PhD in the computer science department at Vanderbilt University, which is located in the famous music city – Nashville, Tennessee. I did my research in the lab of Artificial Intelligence and Visual Analogical Systems (AIVAS), directed by Dr Maithilee Kunda. Most of our work involves studying how visual mental imagery contributes to learning and intelligent behavior in humans and in AI systems, with applications in cognitive assessment and special education, especially in relation to autism and other neurodiverse conditions. Influenced by this work, I became interested in topics in human cognition, such as visual abstract reasoning, analogy making, and mental imagery. On one hand, I look into how insights into these cognitive abilities can help build better AI systems; on the other, I consider how AI can help us better understand these cognitive abilities.

Could you give us an overview of the research you carried out during your PhD?

My PhD advisor provided me with the luxury to fully explore my research interests at the initial stage of my PhD. At first, I was obsessed with coding for robots to perform various tasks in virtual environments, for example, the Animal-AI Olympics and the initiative for Interactive Task Learning, and many other class projects about robots.

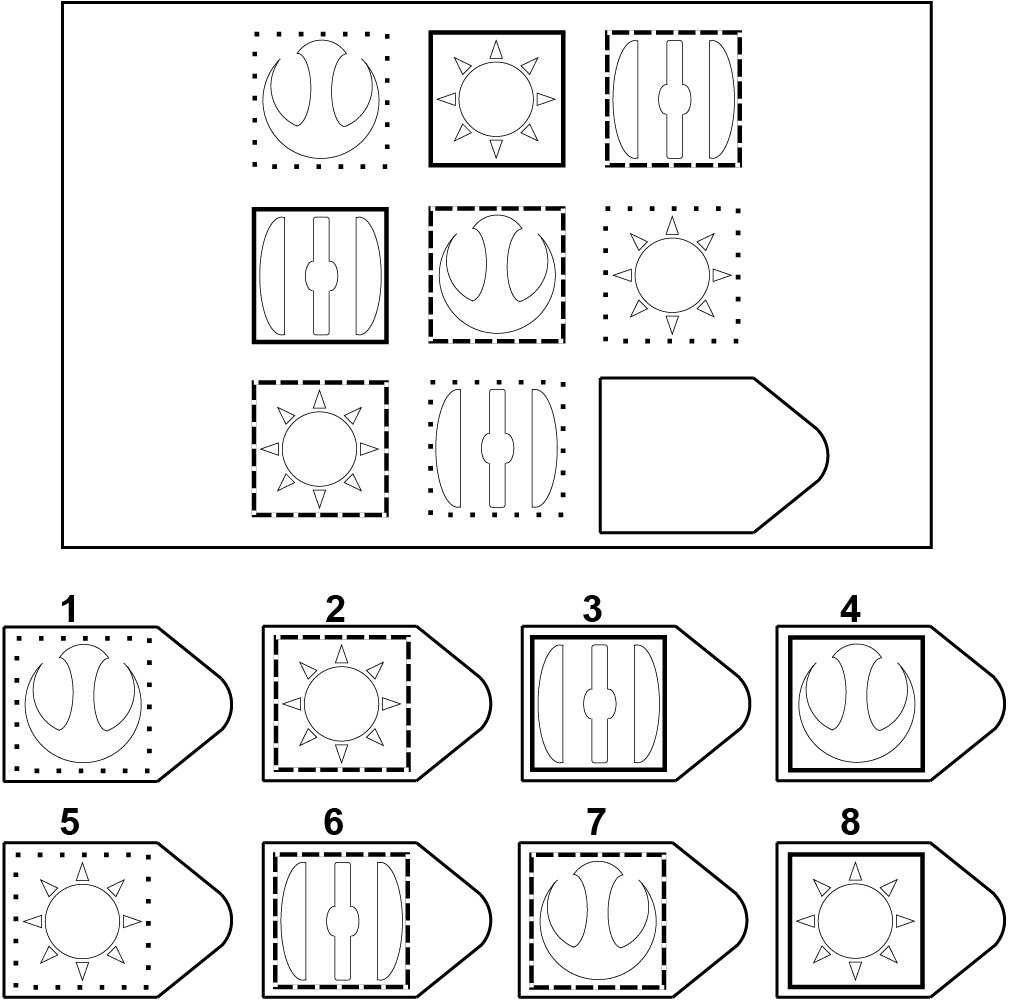

After this stage, my attention was gradually drawn to deeper cognitive factors that could possibly underlie different robot tasks. This led to several changes in my research. First, to avoid the extra complexity caused by the robot systems and virtual environment systems, I switched from the robot tasks to Visual Abstract Reasoning (VAR) tasks – a kind of task that is commonly used in human cognitive assessment. One of the most well-known VAR tasks that I use is the Raven’s Progressive Matrices (RPM) test, shown in Figure 1 (this is an example item I created, not a real test item). These tests look less complex than robot tasks, but they in fact present a big challenge to AI when presented or formulated in the ways human cognitive assessments are administered. I first built imagery-based symbolic systems to solve VAR tasks, where the core idea was to represent the reasoning process as images and sequences of image operations. Then I realized that analogy-making ability was an important component of the cognitive factors tested by VAR tasks, and I began to delve into analogy-making theories. As I had been working on imagery, I created an “imagery version” of analogy-making theory, inspired by existing analogy-making theories and my imagery work. I also experimented with deep neural nets on VAR tasks, trying to implement the ideas I obtained from the imagery and analogy-making studies in them. Given the synergistic research paradigm I mentioned above, comparisons had to be made between human cognitive studies and deep neural nets, for example, in terms of information processing mechanisms, generalization ability, testing protocols, and so on. But there has been a big gap because the two sides do not usually align very well.

Figure 1. An example of a Raven’s Progressive Matrices test.
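To make the idea of "images and sequences of image operations" a little more concrete, here is a minimal toy sketch in Python. It is not Yuan's system: the operation set, the pixel-matching score, the 2x2 item layout, and all names (`OPERATIONS`, `similarity`, `solve_2x2`) are assumptions made purely for illustration. It treats a tiny matrix item "A is to B as C is to ?" as a search for the image operation that best turns A into B, then applies that operation to C and picks the closest answer option.

```python
from typing import Callable, Dict, Sequence, Tuple

# A binary image is a tuple of rows, each row a tuple of 0/1 pixels.
Image = Tuple[Tuple[int, ...], ...]


def identity(img: Image) -> Image:
    return img


def rotate_cw(img: Image) -> Image:
    # Rotate 90 degrees clockwise: reverse the rows, then transpose.
    return tuple(zip(*img[::-1]))


def flip_horizontal(img: Image) -> Image:
    # Mirror left-to-right.
    return tuple(row[::-1] for row in img)


def flip_vertical(img: Image) -> Image:
    # Mirror top-to-bottom.
    return img[::-1]


# Hypothetical, deliberately tiny set of candidate image operations.
OPERATIONS: Dict[str, Callable[[Image], Image]] = {
    "identity": identity,
    "rotate_cw": rotate_cw,
    "flip_horizontal": flip_horizontal,
    "flip_vertical": flip_vertical,
}


def similarity(a: Image, b: Image) -> float:
    """Fraction of matching pixels; 0.0 if the shapes differ."""
    if len(a) != len(b) or any(len(ra) != len(rb) for ra, rb in zip(a, b)):
        return 0.0
    total = sum(len(row) for row in a)
    same = sum(pa == pb for ra, rb in zip(a, b) for pa, pb in zip(ra, rb))
    return same / total


def solve_2x2(a: Image, b: Image, c: Image,
              candidates: Sequence[Image]) -> Tuple[str, int]:
    """Return (operation name, index of chosen answer) for 'A : B :: C : ?'."""
    # Step 1: find the operation that best explains the change from A to B.
    best_op = max(OPERATIONS, key=lambda name: similarity(OPERATIONS[name](a), b))
    # Step 2: apply the same operation to C to "imagine" the missing image.
    predicted = OPERATIONS[best_op](c)
    # Step 3: choose the answer option closest to the imagined image.
    best_idx = max(range(len(candidates)),
                   key=lambda i: similarity(predicted, candidates[i]))
    return best_op, best_idx


# Made-up example item (not a real test item).
A = ((1, 0, 0), (1, 0, 0), (1, 1, 0))
B = ((0, 0, 1), (0, 0, 1), (0, 1, 1))
C = ((1, 1, 1), (1, 0, 0), (0, 0, 0))
CANDIDATES = [
    ((1, 1, 1), (1, 0, 0), (0, 0, 0)),
    ((0, 0, 0), (1, 0, 0), (1, 1, 1)),
    ((1, 1, 1), (0, 0, 1), (0, 0, 0)),
    ((0, 1, 1), (0, 0, 1), (0, 0, 1)),
]

print(solve_2x2(A, B, C, CANDIDATES))  # prints ('flip_horizontal', 2)
```

Even this toy version hints at why the tests are hard for AI: the solver only "understands" the handful of operations it was given, and anything outside that set goes unrecognized.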


Is there an aspect of your research that has been particularly interesting?

I guess the interesting part of my research is “being abstract”. We humans can think in abstract ways and we form various abstract concepts or symbols in our minds. And all the VAR tests are actually testing our ability to handle abstract concepts, for example, to detect, recall, learn, create, modify, and apply abstract concepts in different perceptual contexts. Since we humans are so used to thinking in abstract ways and so capable of doing so, we usually do not think this is a problem for AI systems. But, in fact, when this ability is specifically tested in AI, for example, through RPM or similar tests or datasets, we find that no AI system is guaranteed to have it, even for a very limited set of abstract concepts. For example, how would you train or design your AI systems to understand the concept of “recursion” or “proof by contradiction”? How would you verify your AI systems in terms of these abstract concepts? My feeling is that some abstract concepts (especially the important ones) require AI systems to “jump out of their own systems” and observe and reason about their own behavior. However, we know that there exist some general limitations on “jumping out of their own systems” for computational or formal systems. But how come humans have the ability to handle abstract concepts? Or, is it just an illusion that humans have this kind of ability? These questions probably involve a lot of philosophical and mathematical thinking, and interdisciplinary efforts from the perspective of cognitive science.

What are your plans for building on your PhD research – what aspects would you like to investigate next?

There are a lot of unanswered questions in my dissertation, for example, the ability to handle abstract concepts in different ways and the misalignment between human cognitive studies and AI studies. I don’t think I can answer all of them in the near future (or even in my lifetime). I guess I would start by refining my previous work to make it easier to study these theoretical questions. In particular, I will start with task/test design, dataset collection, and experimental design to target specific research questions more accurately.

What made you want to study AI?

In short, Terminator 2: Judgment Day. At first, I thought I could build a robot like the T-800 in the movie. As I moved on in my research, I found these theoretical research questions about intelligence more and more intriguing, and I feel I could work on them for my whole life.

What advice would you give to someone thinking of doing a PhD in the field?

I think my advice would be that the most important thing about doing a PhD is doing it rather than thinking about it. And because AI is a very young field compared to other well-established fields, I guess there are many unexplored places in AI. So a PhD student in AI should be more likely to find a unique research direction that is most interesting to himself or herself.

About Yuan

Yuan Yang works in the intersecting area of AI and cognitive science, focusing on how AI can help understand core human cognitive abilities (e.g., fluid intelligence, visual abstract reasoning, analogy making, and mental imagery), and conversely, how such understanding can facilitate the development of AI. His research has been supported by projects in the AIVAS lab that aim to help autistic people in their learning and career development. He holds a B.S. and an M.S. in computer science from Northwestern Polytechnical University, and a Ph.D. in computer science from Vanderbilt University. This fall, he will join the College of Information, Mechanical and Electrical Engineering, Shanghai Normal University as an associate professor.

tags: AAAI, AAAI Doctoral Consortium, AAAI2024, ACM SIGAI