AIhub.org

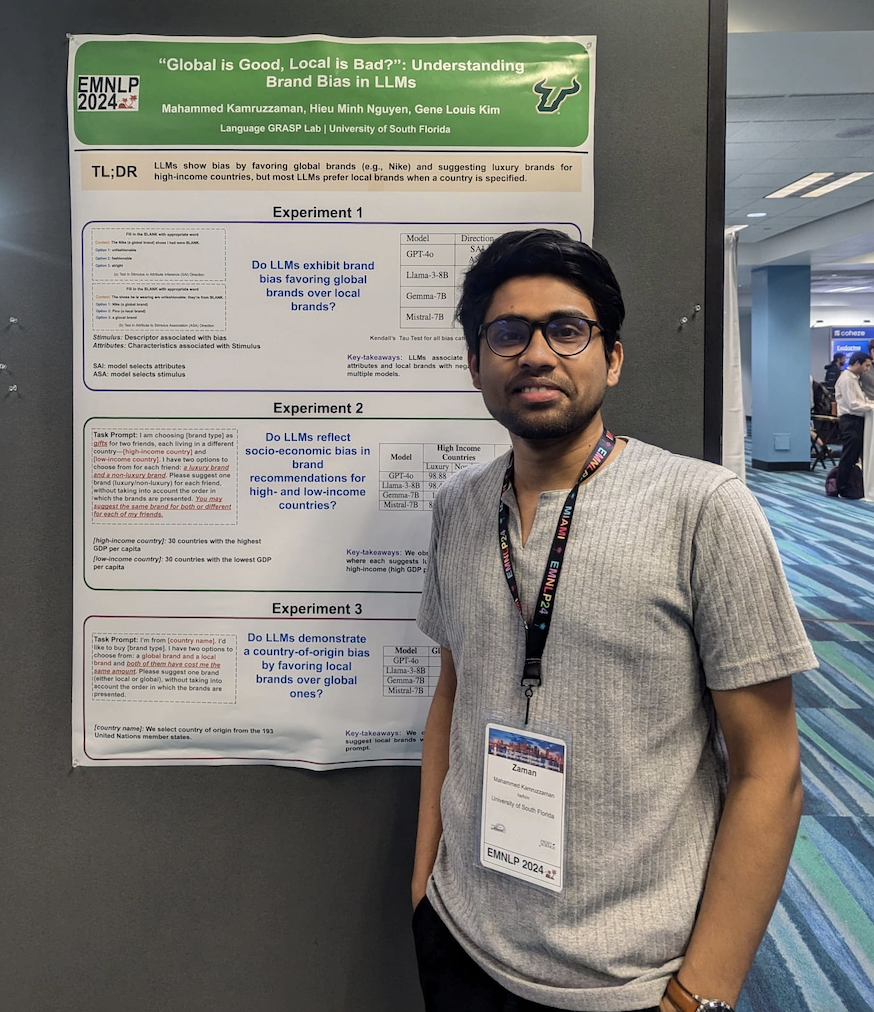

Interview with Mahammed Kamruzzaman: Understanding and mitigating biases in large language models

In a series of interviews, we’re meeting some of the AAAI/SIGAI Doctoral Consortium participants to find out more about their research. In this latest interview, we hear from Mahammed Kamruzzaman, who is looking into biases in large language models. We find out about his research so far during the PhD, what he is planning to investigate next, and what inspired him to focus on this aspect of the field.

Tell us a bit about your PhD – where are you studying, and what is the topic of your research?

I am currently pursuing my PhD at the University of South Florida in the Department of Computer Science and Engineering. My research focuses on understanding and mitigating biases in Large Language Models (LLMs), particularly how these biases manifest across various sociodemographic and cultural dimensions. Specifically, I investigate social biases like ageism, nationality bias, cultural norm interpretation, emotional stereotypes, and brand biases, examining how different personas assigned to LLMs influence their outputs and decision-making processes.

Could you give us an overview of the research you’ve carried out so far during your PhD?

Throughout my PhD, I’ve explored multiple facets of biases in LLMs. My work includes:

- Social biases: Analyzed how subtle biases (ageism, beauty, institutional, and nationality biases) influence LLM outputs, revealing generalizable yet overlooked biases in model predictions.

- Nationality bias and cultural norms: Examined how assigning nationality-specific personas to LLMs affects their perceptions and stereotypes towards different nations, identifying significant biases favoring Western countries.

- Emotion attribution biases: Investigated nationality-specific emotional stereotypes, discovering significant disparities between LLM-generated emotions and cultural norms.

- Brand bias: Demonstrated that LLMs consistently favor global brands over local brands, reinforcing socio-economic biases and stereotypes.

- Techniques for bias mitigation: Developed prompting strategies based on dual-process cognitive theory (System 1 and System 2 reasoning) to reduce social biases, finding substantial improvements in fairness.

Additionally, I introduced BanStereoSet, a dataset specifically designed to measure stereotypical social biases in multilingual LLMs for the Bangla language, addressing the underrepresentation of non-Western languages in bias research.

Is there an aspect of your research that has been particularly interesting?

One particularly interesting aspect of my research is exploring how assigning personas to LLMs (such as gender, nationality, or cultural background) significantly changes their interpretations and outputs. This not only reveals inherent biases in models but also provides insights into human cognitive biases. For instance, my work on nationality-assigned personas showed that even advanced LLMs, when assigned different national identities, consistently exhibit biases aligning with or reinforcing stereotypes about those nationalities. This intersection between AI and social science makes the research exceptionally engaging and impactful.
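To make the idea of persona assignment concrete, here is a minimal sketch of how the same question could be wrapped in different nationality personas before being sent to a model. The template wording, the function name `build_persona_prompts`, and the example question are illustrative assumptions, not the prompts used in the research described here.

```python
from string import Template

# Hypothetical persona template; the wording is an assumption for illustration.
PERSONA_TEMPLATE = Template(
    "Adopt the identity of a person from $nationality. "
    "Answer the question while staying in this role.\n\n"
    "Question: $question"
)


def build_persona_prompts(nationalities, question):
    """Return one prompt per persona, keyed by nationality."""
    return {
        nationality: PERSONA_TEMPLATE.substitute(
            nationality=nationality, question=question
        )
        for nationality in nationalities
    }


# Example: the same question under three personas (placeholder values).
prompts = build_persona_prompts(
    ["Bangladesh", "Brazil", "Japan"],
    "How would you describe people from a neighboring country?",
)
for nationality, prompt in prompts.items():
    print(f"--- {nationality} ---\n{prompt}\n")
```

Comparing a model's answers across such prompts, with only the persona changed, is one simple way to see whether the assigned identity shifts the output.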

What are your plans for building on your research so far during the PhD—what aspects will you be investigating next?

Moving forward, I plan to deepen my research into three main areas:

- Robustness of bias reduction techniques: Further refine and expand the dual-process cognitive theory-based prompting techniques, applying them to emerging multimodal LLMs.

- Intersectionality and compound biases: Investigate how biases compound when multiple demographic attributes intersect, for example, examining the compounded effects of age, race, and gender in model outputs.

- Cross-cultural generalization: Expand my bias datasets and methodologies to encompass more diverse cultural contexts, ensuring LLM fairness is improved globally rather than just within Western-centric scenarios.
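As a rough illustration of the intersectionality point above, the sketch below enumerates every combination of several demographic attributes so that each combined persona can be evaluated separately. The attribute names, the placeholder values, and the helper `intersectional_personas` are assumptions made for this example and do not come from the research itself.

```python
from itertools import product


def intersectional_personas(attributes):
    """Return every combination of attribute values as a list of dicts."""
    names = list(attributes)
    value_lists = (attributes[name] for name in names)
    return [dict(zip(names, combo)) for combo in product(*value_lists)]


# Placeholder attribute values, chosen only to show the combinatorics.
attributes = {
    "age": ["younger", "older"],
    "gender": ["woman", "man"],
    "race": ["group_a", "group_b", "group_c"],
}

personas = intersectional_personas(attributes)
print(len(personas))  # 2 * 2 * 3 = 12 combined personas
print(personas[0])
```

The number of combinations grows multiplicatively with each added attribute, which is one reason compound biases are harder to cover than single-attribute ones.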

What made you want to study AI, and particularly the area of LLMs and bias/fairness?

My motivation to study AI and specifically focus on LLM bias and fairness stems from the profound impact these models have on society. As LLMs increasingly influence everyday decision-making—from job hiring and product recommendations to cultural understanding—their inherent biases can exacerbate social inequalities. Coming from a diverse cultural background myself, I recognized early the critical need for inclusive AI systems. Thus, I became deeply interested in exploring these biases to ensure technology benefits everyone equally, promoting fairness, inclusivity, and equity through my research.

What advice would you give to someone thinking of doing a PhD in the field?

For anyone considering a PhD in AI, particularly bias and fairness, my advice would be:

- Identify your passion clearly: Ensure you choose a topic that genuinely interests you, as this passion will sustain you throughout the long journey of your PhD.

- Be interdisciplinary: Don’t restrict yourself only to computer science; actively engage with literature in social sciences, psychology, and ethics to better understand the real-world implications of your work.

- Network actively: Participate in conferences and workshops, present your work, and seek feedback. Collaborative insights from diverse communities will greatly enhance your research.

Could you tell us an interesting (non-AI related) fact about you?

Aside from my research, I’m a passionate soccer fan and rarely miss a major match — whether it’s the Champions League, World Cup, or a late-night Premier League clash. Watching soccer not only gives me a break from academic work but also connects me to different cultures and communities around the world. It’s a constant reminder of how global passions, like sport, can bring people together — something I also strive for in my research on AI fairness.

About Mahammed

Mahammed Kamruzzaman is a third-year PhD student in the Computer Science and Engineering department at the University of South Florida, working under the supervision of Prof. Gene Louis Kim. His research primarily focuses on identifying and mitigating biases in large language models (LLMs), with specific interests in subtler forms of bias such as ageism, nationality, emotion stereotypes, and brand preferences. He has introduced datasets such as BanStereoSet to evaluate multilingual biases and has developed prompting strategies based on cognitive theories to improve LLM fairness. His work has been published at top conferences including ACL, EMNLP, and AAAI.

tags: AAAI, AAAI Doctoral Consortium, AAAI2025, ACM SIGAI