AIhub.org

How does AI affect how we learn? A cognitive psychologist explains why you learn when the work is hard

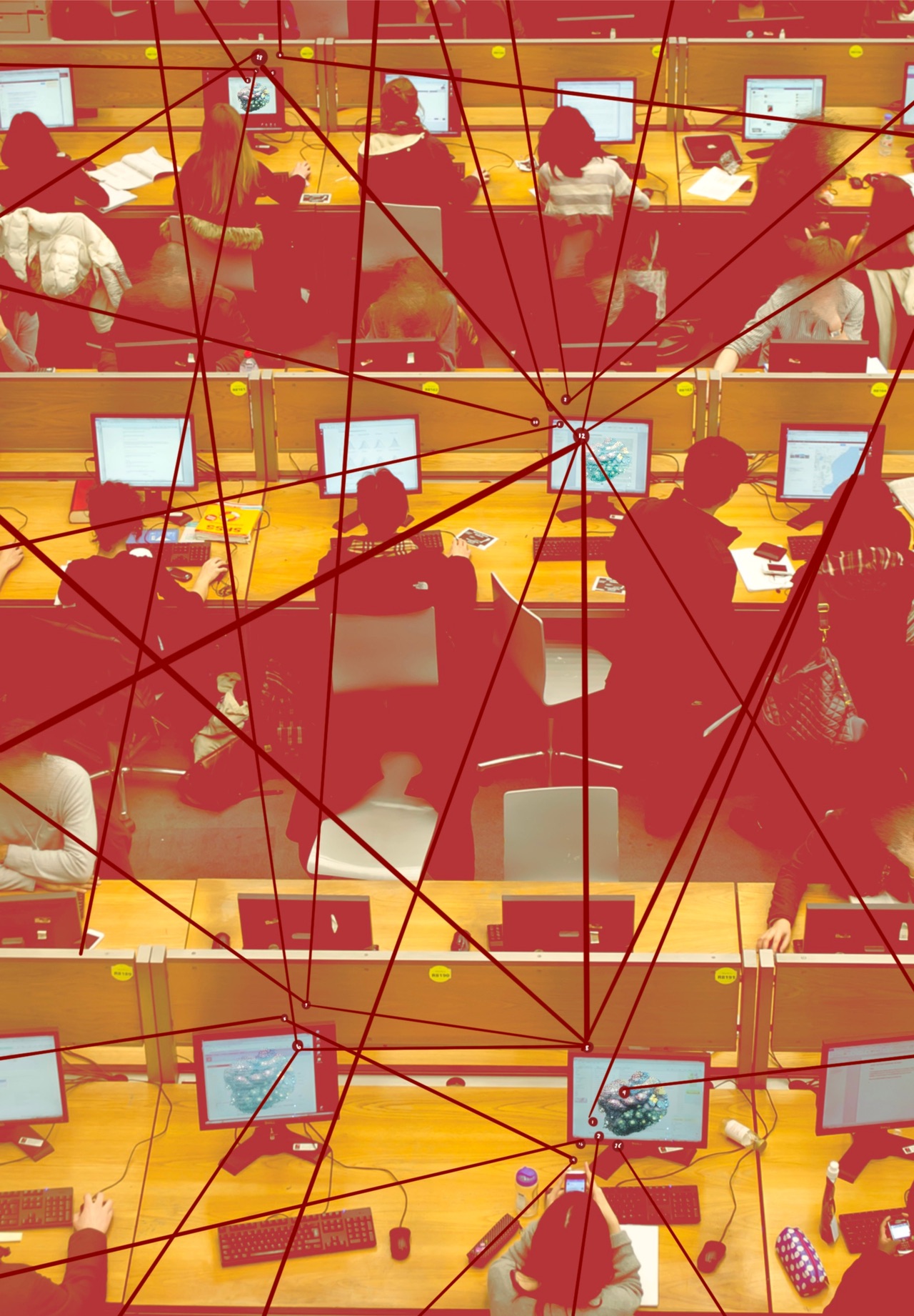

Hanna Barakat & Cambridge Diversity Fund / Data Lab Dialogue / Licenced by CC-BY 4.0

By Brian W. Stone, Boise State University

When OpenAI released “study mode” in July 2025, the company touted ChatGPT’s educational benefits. “When ChatGPT is prompted to teach or tutor, it can significantly improve academic performance,” the company’s vice president of education told reporters at the product’s launch. But any dedicated teacher would be right to wonder: Is this just marketing, or does scholarly research really support such claims?

While generative AI tools are moving into classrooms at lightning speed, robust research on the question at hand hasn’t moved nearly as fast. Some early studies have shown benefits for certain groups such as computer programming students and English language learners. And there have been a number of other optimistic studies on AI in education, such as one published in the journal Nature in May 2025 suggesting that chatbots may aid learning and higher-order thinking. But scholars in the field have pointed to significant methodological weaknesses in many of these research papers.

Other studies have painted a grimmer picture, suggesting that AI may impair performance or cognitive abilities such as critical thinking skills. One paper showed that the more a student used ChatGPT while learning, the worse they did later on similar tasks when ChatGPT wasn’t available.

In other words, early research is only beginning to scratch the surface of how this technology will truly affect learning and cognition in the long run. Where else can we look for clues? As a cognitive psychologist who has studied how college students are using AI, I have found that my field offers valuable guidance for identifying when AI can be a brain booster and when it risks becoming a brain drain.

Skill comes from effort

Cognitive psychologists have argued that our thoughts and decisions are the result of two processing modes, commonly denoted as System 1 and System 2.

The former is a system of pattern matching, intuition and habit. It is fast and automatic, requiring little conscious attention or cognitive effort. Many of our routine daily activities – getting dressed, making coffee and riding a bike to work or school – fall into this category. System 2, on the other hand, is generally slow and deliberate, requiring more conscious attention and sometimes painful cognitive effort, but often yields more robust outputs.

We need both of these systems, but gaining knowledge and mastering new skills depend heavily on System 2. Struggle, friction and mental effort are crucial to the cognitive work of learning, remembering and strengthening connections in the brain. Every time a confident cyclist gets on a bike, they rely on the hard-won pattern recognition in their System 1 that they previously built up through many hours of effortful System 2 work spent learning to ride. You don’t get mastery and you can’t chunk information efficiently for higher-level processing without first putting in the cognitive effort and strain.

I tell my students the brain is a lot like a muscle: It takes genuine hard work to see gains. Without challenging that muscle, it won’t grow bigger.

What if a machine does the work for you?

Now imagine a robot that accompanies you to the gym and lifts the weights for you, no strain needed on your part. Before long, your own muscles will have atrophied and you’ll become reliant on the robot at home even for simple tasks like moving a heavy box.

AI, used poorly – to complete a quiz or write an essay, say – lets students bypass the very thing they need to develop knowledge and skills. It takes away the mental workout.

Offloading cognitive workouts to technology can have a detrimental effect on learning and memory. It can also cause people to misread their own understanding or abilities, leading to what psychologists call metacognitive errors. Research has shown that habitually offloading car navigation to GPS may impair spatial memory and that using an external source like Google to answer questions makes people overconfident in their own personal knowledge and memory.

Are there similar risks when students hand off cognitive tasks to AI? One study found that students researching a topic using ChatGPT instead of a traditional web search had lower cognitive load during the task – they didn’t have to think as hard – and produced worse reasoning about the topic they had researched. Surface-level use of AI may mean less cognitive burden in the moment, but this is akin to letting a robot do your gym workout for you. It ultimately leads to poorer thinking skills.

In another study, students using AI to revise their essays scored higher than those revising without AI, often by simply copying and pasting sentences from ChatGPT. But these students showed no more actual knowledge gain or knowledge transfer than their peers who worked without it. The AI group also engaged in fewer rigorous System 2 thinking processes. The authors warn that such “metacognitive laziness” may prompt short-term performance improvements but also lead to the stagnation of long-term skills.

Offloading can be useful once foundations are in place. But those foundations can’t be formed unless your brain does the initial work necessary to encode, connect and understand the issues you’re trying to master.

Using AI to support learning

Returning to the gym metaphor, it may be useful for students to think of AI as a personal trainer who can keep them on task by tracking and scaffolding learning and pushing them to work harder. AI has great potential as a scalable learning tool, an individualized tutor with a vast knowledge base that never sleeps.

AI technology companies are seeking to design just that: the ultimate tutor. In addition to OpenAI’s entry into education, in April 2025 Anthropic released its learning mode for Claude. These models are supposed to engage in Socratic dialogue, to pose questions and provide hints, rather than just giving the answers.

Early research indicates AI tutors can be beneficial but introduce problems as well. For example, one study found high school students reviewing math with ChatGPT performed worse than students who didn’t use AI. Some students used the base version and others a customized tutor version that gave hints without revealing answers. When students took an exam later without AI access, those who’d used base ChatGPT did much worse than a group who’d studied without AI, yet they didn’t realize their performance was worse. Those who’d studied with the tutor bot did no better than students who’d reviewed without AI, but they mistakenly thought they had done better. So AI didn’t help, and it introduced metacognitive errors.

Even as tutor modes are refined and improved, students have to actively select that mode and, for now, also have to play along, deftly providing context and guiding the chatbot away from worthless, low-level questions or sycophancy.

The latter issues may be fixed with better design, system prompts and custom interfaces. But the temptation of using default-mode AI to avoid hard work will continue to be a more fundamental and classic problem of teaching, course design and motivating students to avoid shortcuts that undermine their cognitive workout.

As with other complex technologies such as smartphones, the internet or even writing itself, it will take more time for researchers to fully understand the true range of AI’s effects on cognition and learning. In the end, the picture will likely be a nuanced one that depends heavily on context and use case.

But what we know about learning tells us that deep knowledge and mastery of a skill will always require a genuine cognitive workout – with or without AI.

Brian W. Stone, Associate Professor of Cognitive Psychology, Boise State University

This article is republished from The Conversation under a Creative Commons license. Read the original article.