AIhub.org

AIhub blog post highlights 2025

Over the course of the year, we’ve had the pleasure of working with many talented researchers from across the globe. As 2025 draws to a close, we take a look back at some of the excellent blog posts from our contributors.

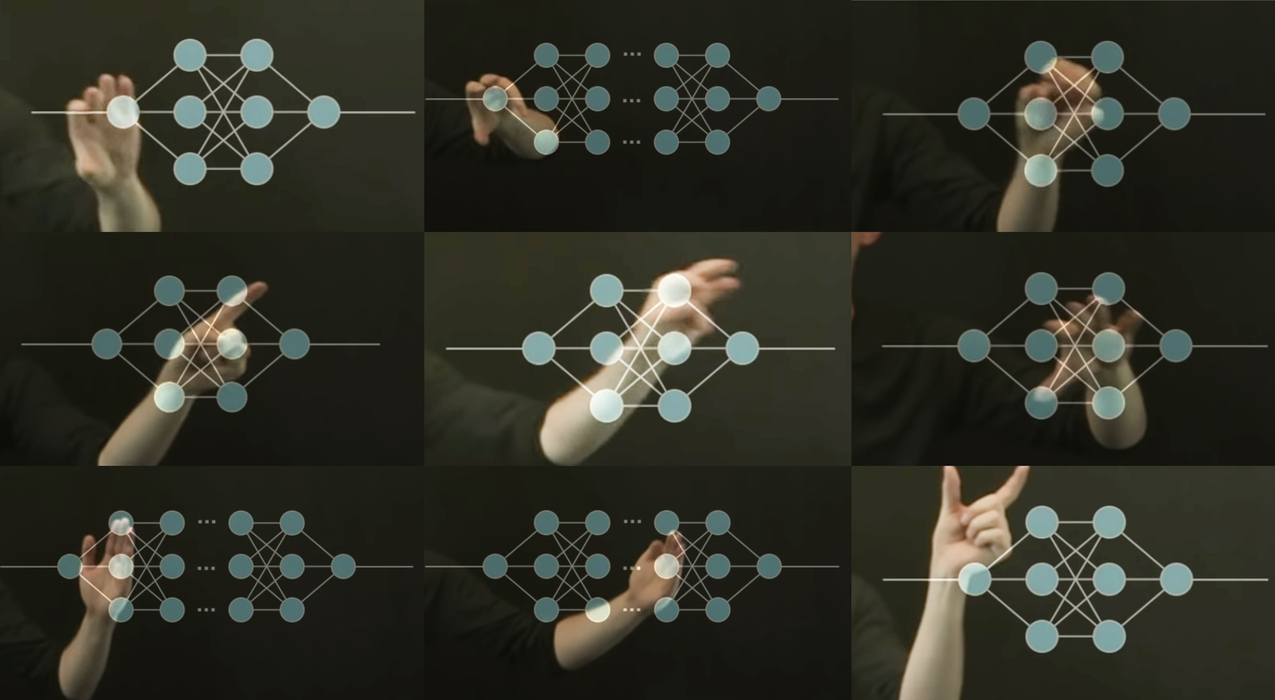

TELL: Explaining neural networks using logic

By Alessio Ragno

This work contributes to the field of explainable AI by developing a novel neural network that can be directly transformed into logic.

Understanding artists’ perspectives on generative AI art and transparency, ownership, and fairness

By Juniper Lovato, Julia Witte Zimmerman and Jennifer Karson

The authors explore the tensions between creators and AI-generated content through a survey of 459 artists.

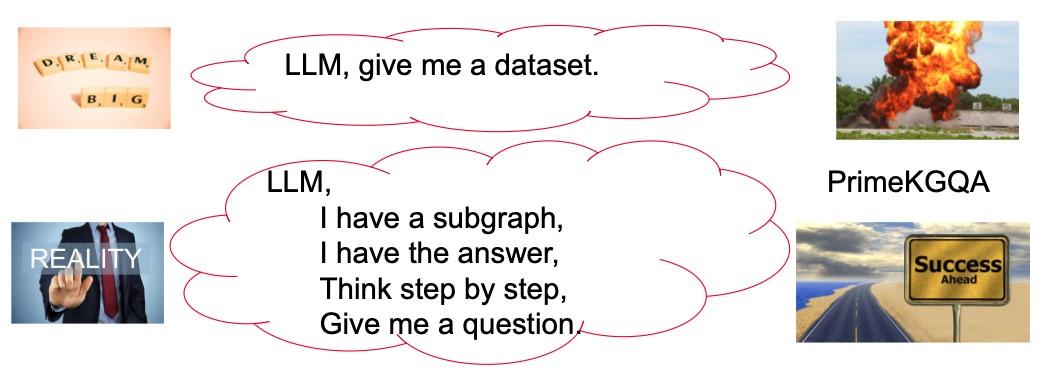

Generating a biomedical knowledge graph question answering dataset

By Xi Yan

Find out more about work presented at ECAI on generating a comprehensive biomedical knowledge graph question answering dataset.

#AAAI2025 outstanding paper – DivShift: Exploring domain-specific distribution shift in large-scale, volunteer-collected biodiversity datasets

By Elena Sierra and Lauren Gillespie

Learn more about work on biodiversity datasets that won the AAAI outstanding paper award in the AI for social impact track.

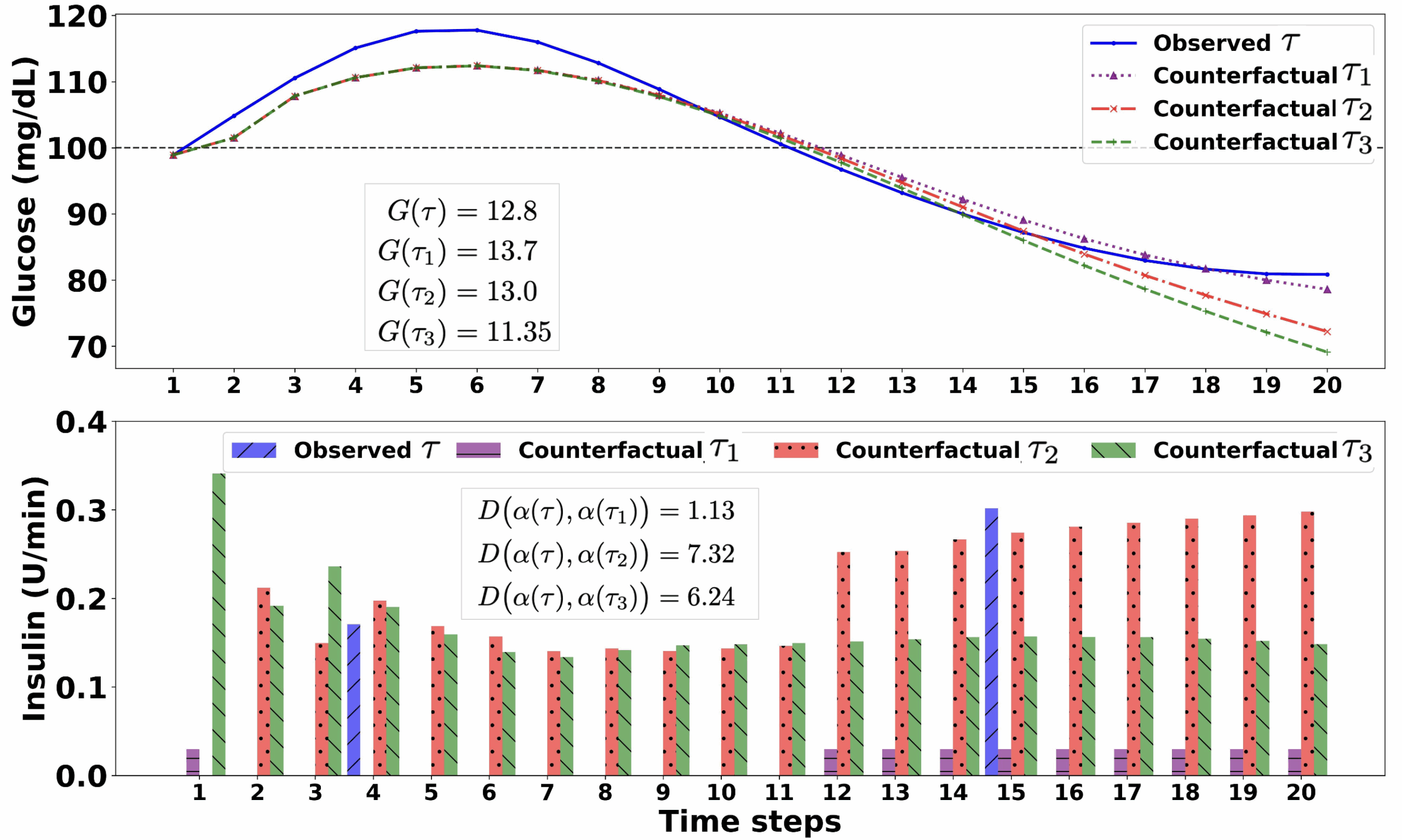

Exploring counterfactuals in continuous-action reinforcement learning

By Shuyang Dong

Shuyang Dong proposes a framework for generating counterfactual explanations in continuous-action reinforcement learning.

Making optimal decisions without having all the cards in hand

By Nathanaël Fijalkow, Hugo Gimbert, Florian Horn, Guillermo Perez and Pierre Vandenhove

AAAI outstanding paper award winners tackle the challenging problem of developing algorithms that themselves generate other algorithms based on a few examples or a specification of what is expected.

#IJCAI2025 distinguished paper: Combining MORL with restraining bolts to learn normative behaviour

By Agata Ciabattoni and Emery Neufeld

Winners of an IJCAI distinguished paper award write about their work introducing a framework for guiding reinforcement learning agents to comply with social, legal, and ethical norms.

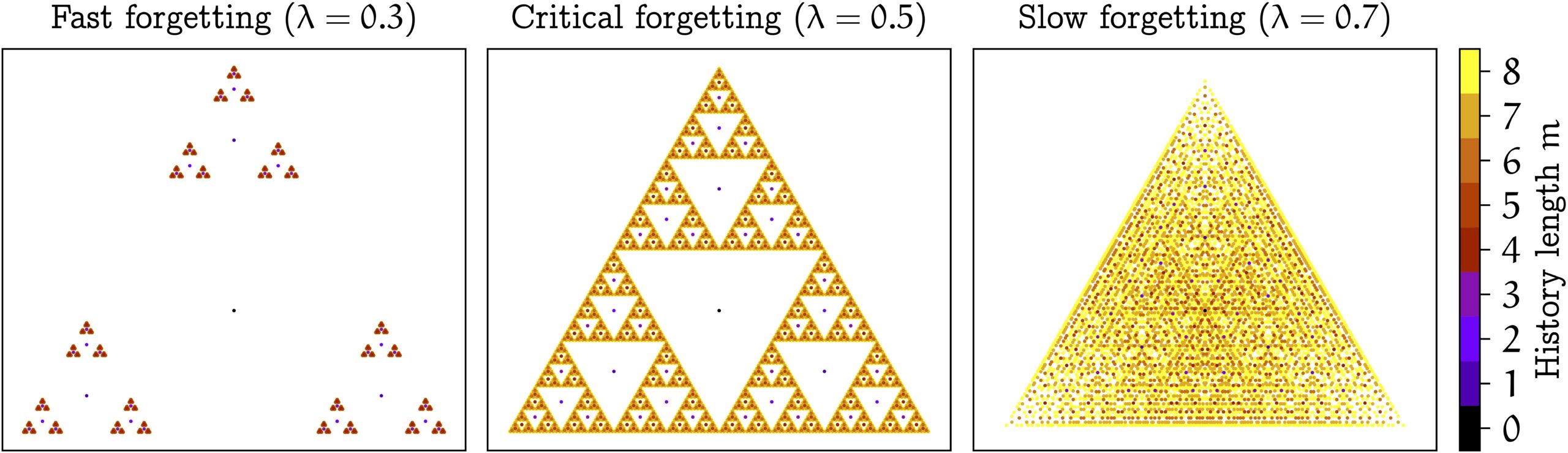

Memory traces in reinforcement learning

By Onno Eberhard

Onno Eberhard summarizes work on partially observable reinforcement learning, presented at ICML 2025, that introduces an alternative memory framework: “memory traces”.

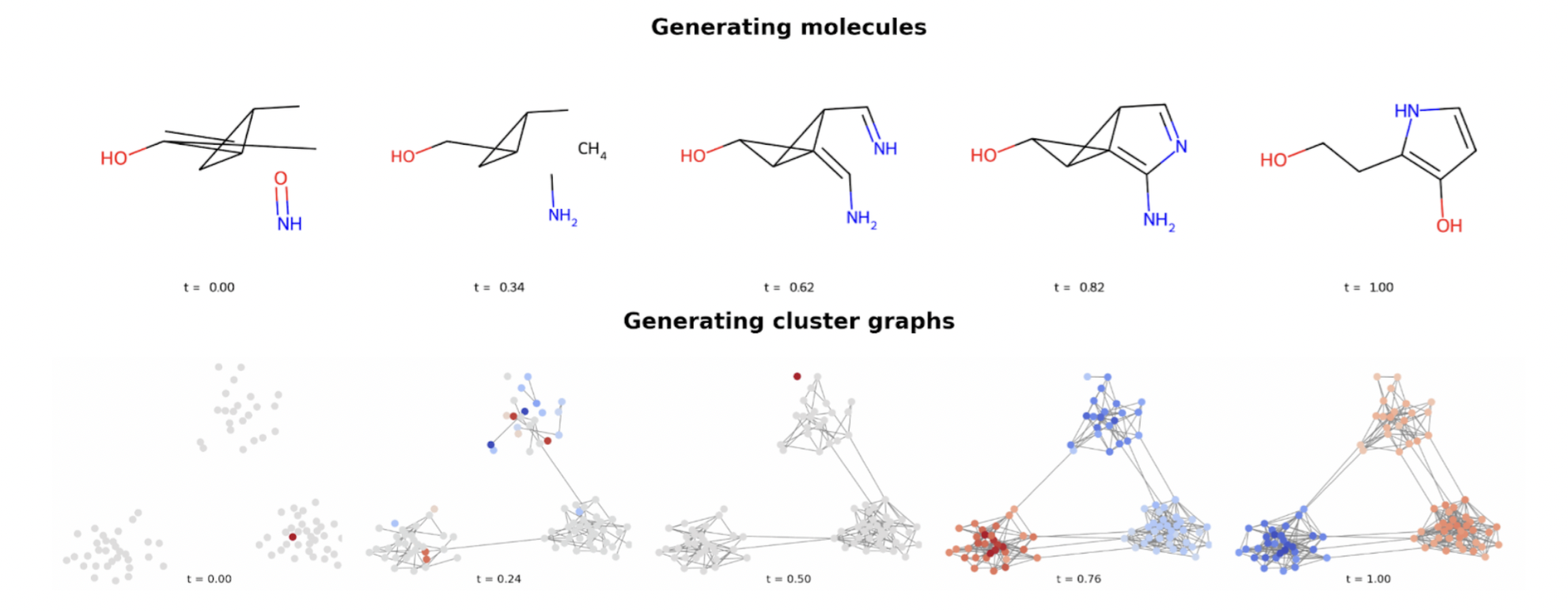

Discrete flow matching framework for graph generation

By Manuel Madeira and Yiming Qin

Manuel Madeira and Yiming Qin write about work presented at ICML 2025 on a discrete flow matching framework for graph generation.

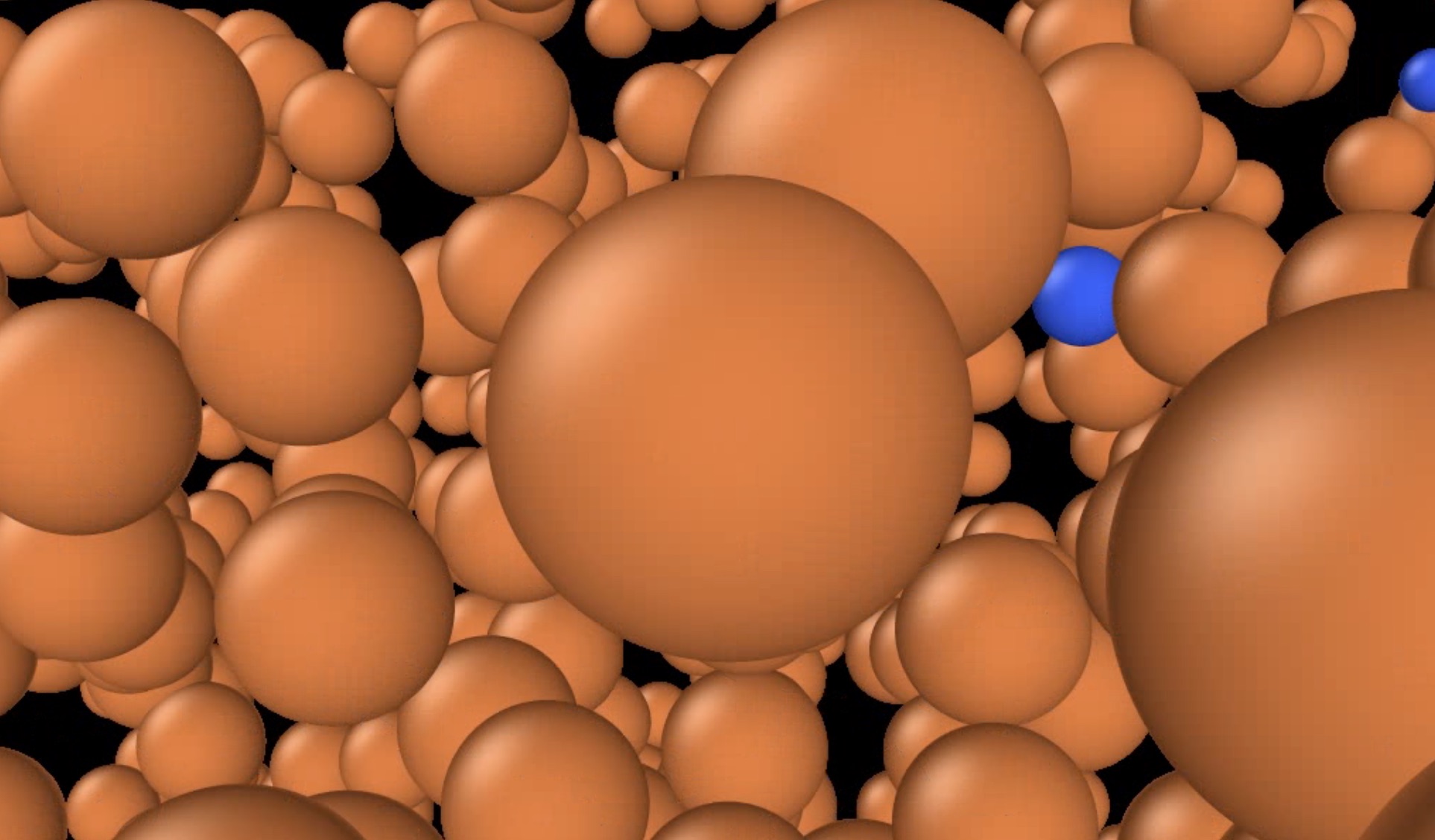

Machine learning for atomic-scale simulations: balancing speed and physical laws

By Filippo Bigi, Marcel Langer and Michele Ceriotti

How should one balance speed and physical laws when using ML for atomic-scale simulations? Find out more in this blog post about work presented at ICML 2025.

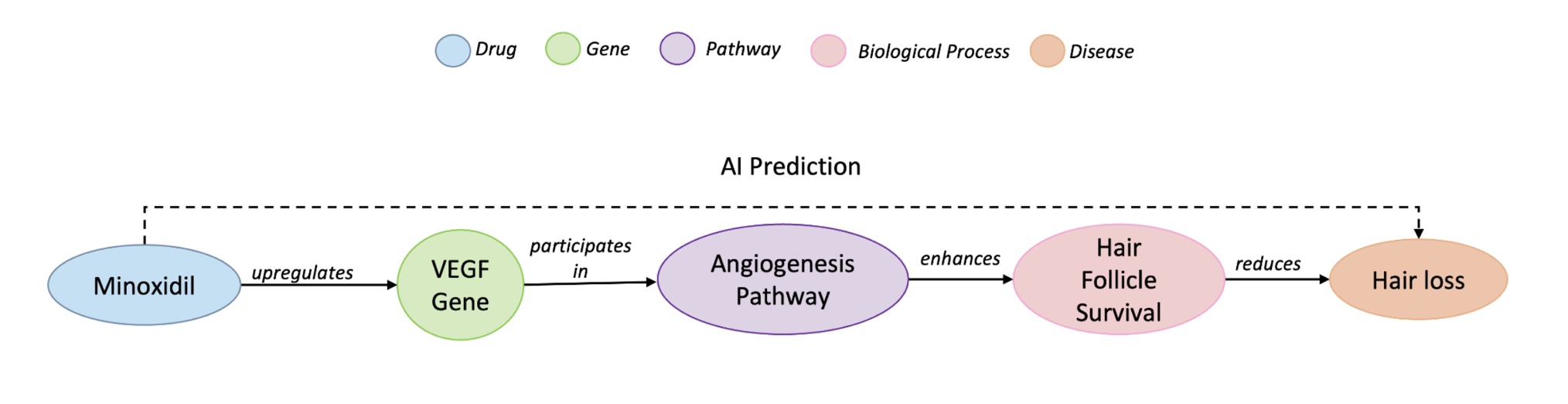

Rewarding explainability in drug repurposing with knowledge graphs

By Susana Nunes and Catia Pesquita

This work, presented at IJCAI 2025, introduces a reinforcement learning approach that not only predicts which drug-disease pairs might hold promise but also explains why.

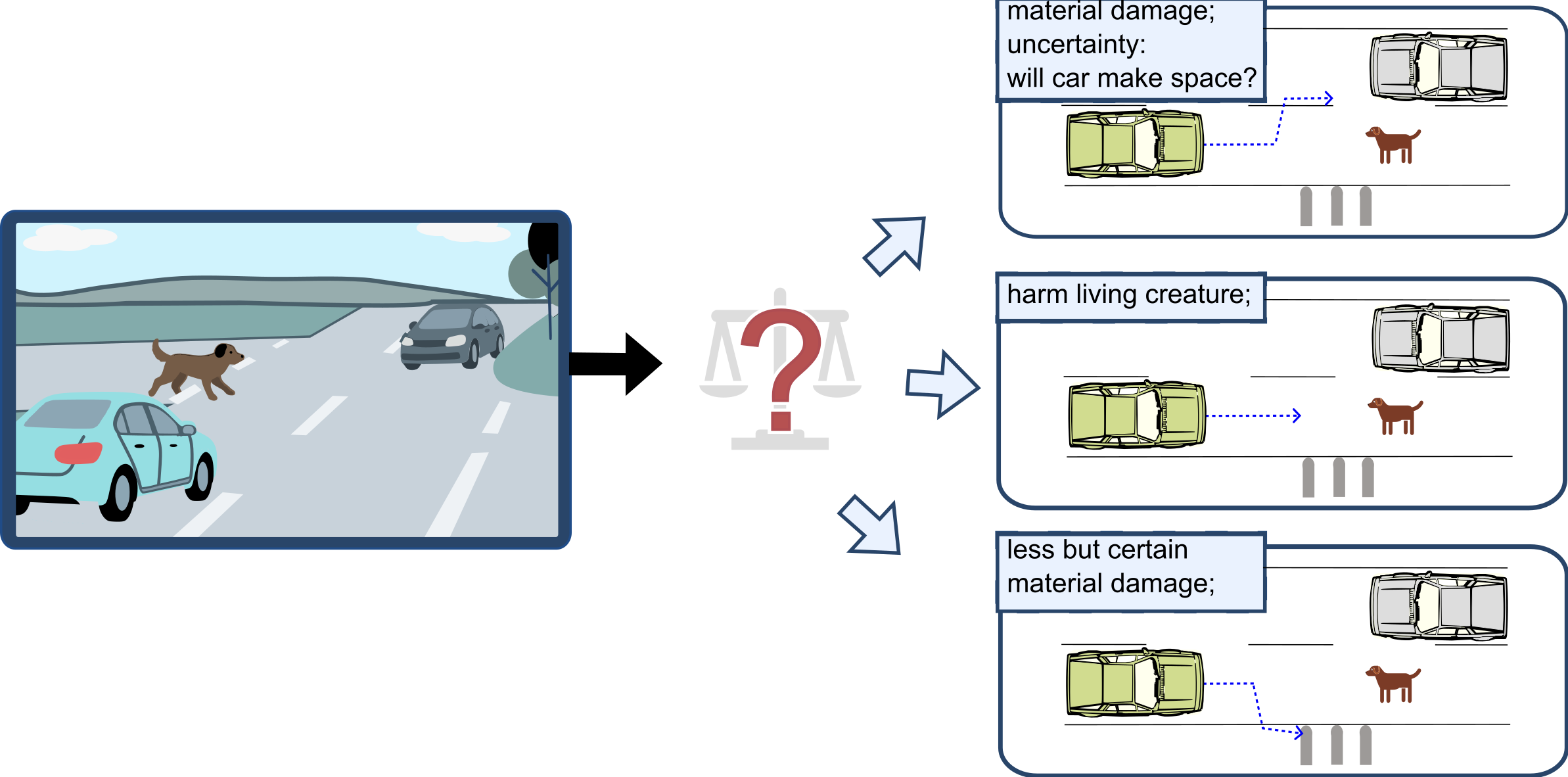

Designing value-aligned autonomous vehicles: from moral dilemmas to conflict-sensitive design

By Astrid Rakow

Astrid Rakow writes about designing “conflict-sensitive” autonomous traffic agents that explicitly recognise, reason about, and act upon competing ethical, legal, and social values.

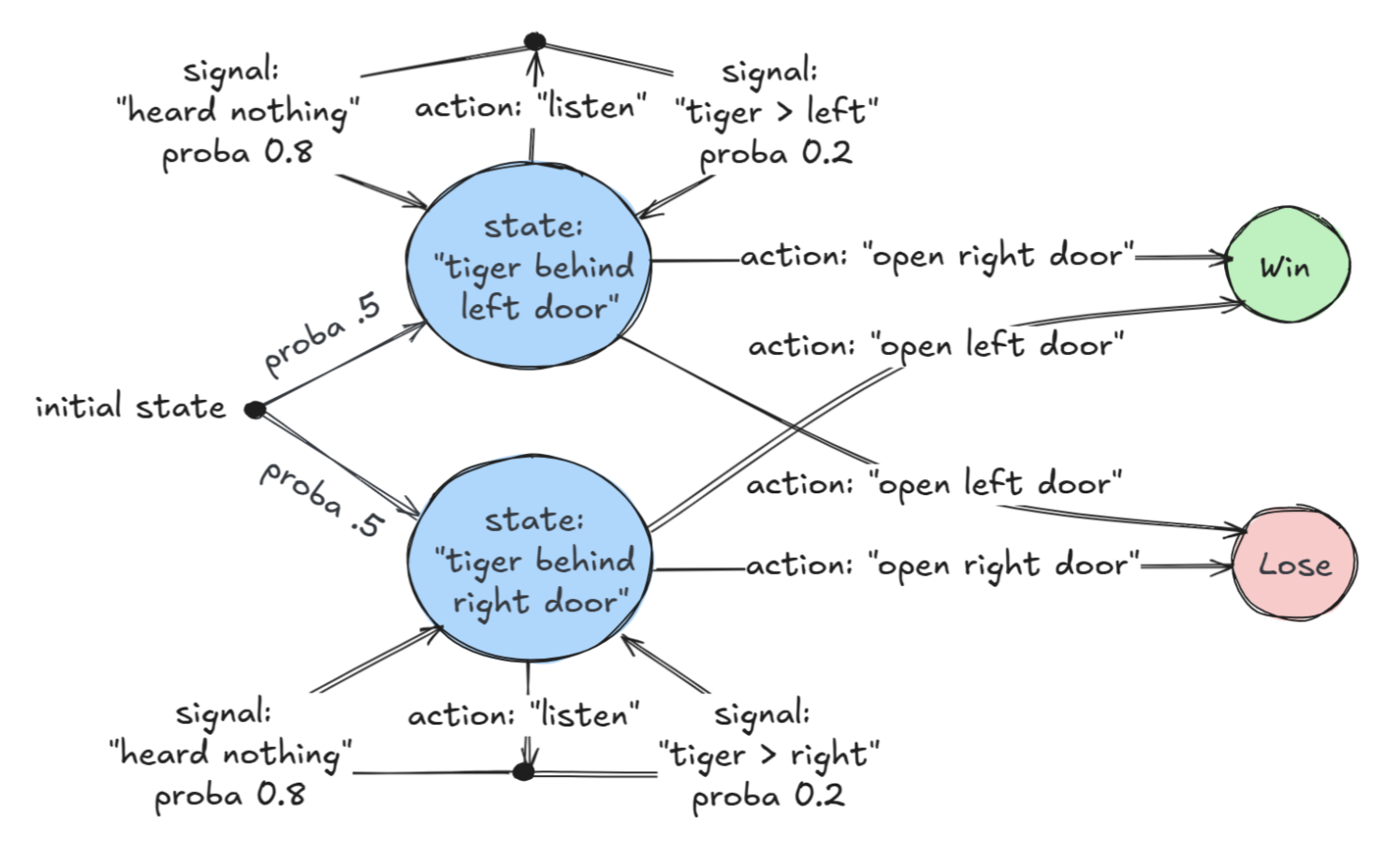

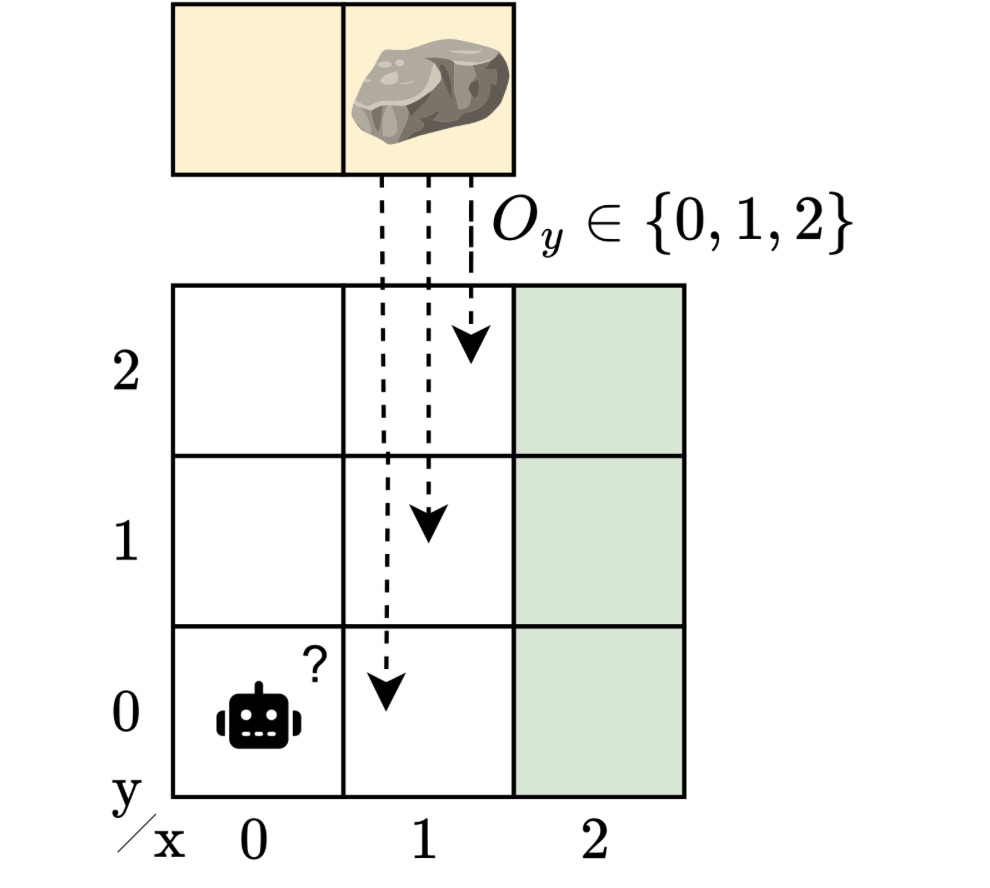

Learning robust controllers that work across many partially observable environments

By Maris Galesloot

In this blog post, Maris Galesloot summarizes work presented at IJCAI 2025, which explores designing controllers that perform reliably even when the environment may not be precisely known.