AIhub.org

Utilizing generative adversarial networks for stable structure generation in Angry Birds

The popular physics-based puzzle game series Angry Birds has been played and enjoyed by millions of people since its original launch in 2009. However, while the game may seem simple and straightforward to play, with even very young children able to quickly grasp its mechanics and strategies, artificial intelligence agents have so far failed to reach human-level performance. Along with a lack of knowledge about the game’s internal physics engine and imprecise object detection algorithms, one of the core challenges in training better game-playing agents is the limited number and variety of available game levels.

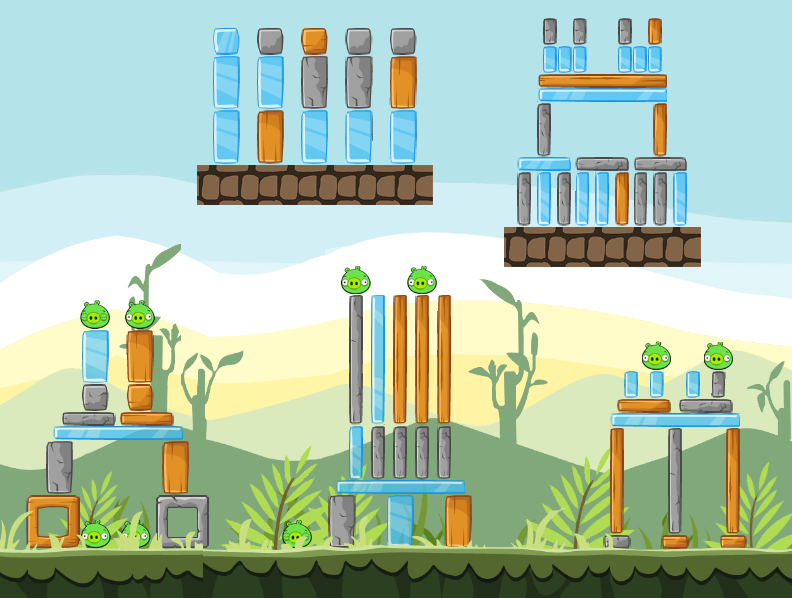

The levels in Angry Birds often contain individual structures that are made up of multiple rectangular 2D blocks, such as those shown in figure 1. While a handful of previous structure generators for Angry Birds exist, they often rely on hard-coded design constraints that limit the output diversity. In our paper, we propose using a generative adversarial network (GAN) approach that can teach itself how to design Angry Birds structures. GANs are a recent machine learning technique that can produce highly photorealistic images, and they have also been applied to create tile-based content for games such as Super Mario Bros. and The Legend of Zelda. However, the continuous and physics-based environment presented by Angry Birds provides additional challenges when it comes to applying GANs.

Figure 1: An example Angry Birds level containing five distinct structures (produced by a level generation algorithm).


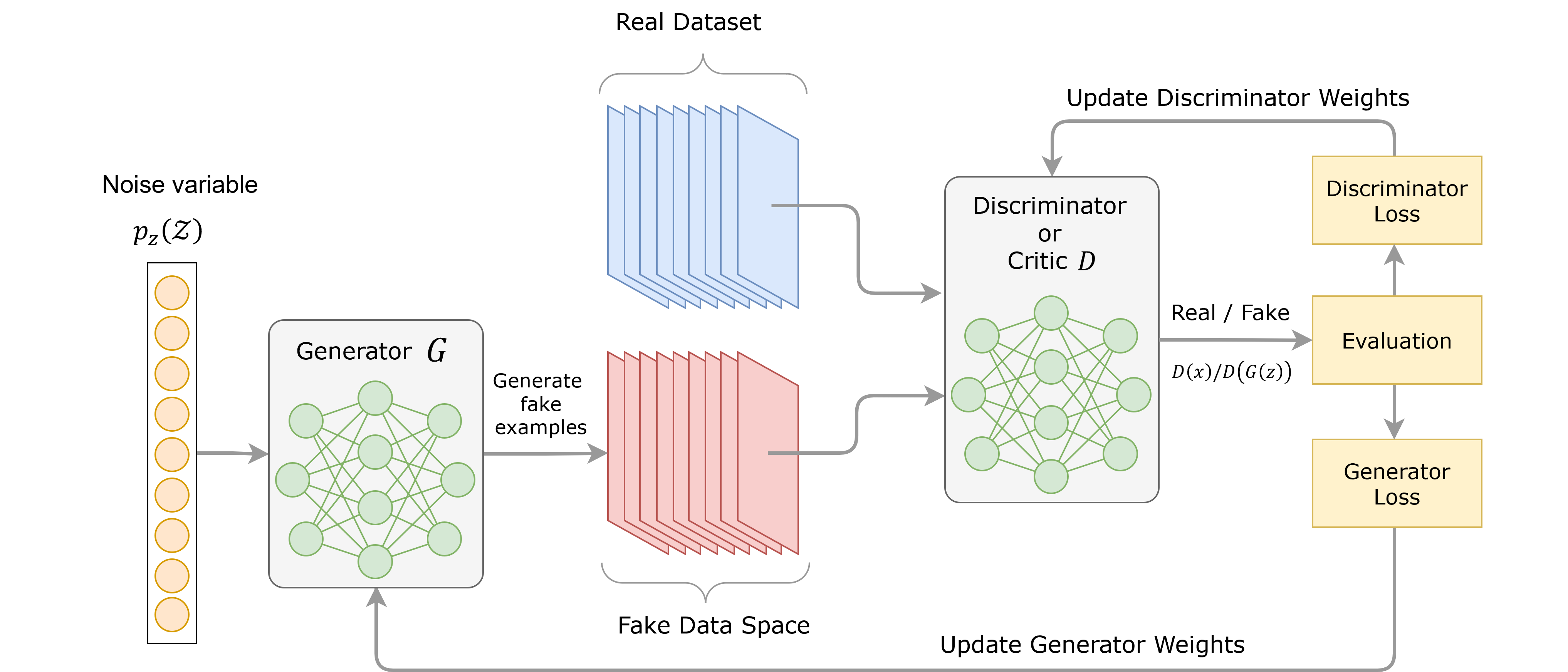

As a quick overview of how a GAN works, the system centres around two neural networks called the generator network and the discriminator network. The generator network is tasked with producing a set of “fake” generated examples, in our case Angry Birds structures. These generated structures are then provided to the discriminator network, along with a set of “real” structures sourced from a training dataset. The discriminator network must then try to decide whether any given structure is real or fake. Based on the results, the weights of each network are updated to improve their future performance. After multiple iterations, the generator network will ideally create structures that appear visually similar to those in the real dataset, and the discriminator network will be better able to differentiate between the real and fake (generated) structures. The trained generator network can then be used independently to produce Angry Birds structures as needed. Figure 2 provides a visual representation of this process.
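The alternating update described above can be illustrated with a deliberately tiny toy example. This is a minimal sketch, not the architecture from the paper: it assumes 1-D "real" data drawn from a Gaussian, a linear generator, a logistic discriminator, and the standard non-saturating GAN losses with hand-derived gradients. All names (`train_toy_gan`, `a`, `b`, `w`, `c`) are hypothetical.

```python
import math
import random


def sigmoid(t):
    """Numerically stable logistic function."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def train_toy_gan(steps=2000, lr=0.01, seed=0):
    """Alternate discriminator and generator updates on 1-D toy data."""
    rng = random.Random(seed)
    a, b = 1.0, 0.0   # generator G(z) = a * z + b
    w, c = 0.1, 0.0   # discriminator D(x) = sigmoid(w * x + c)
    for _ in range(steps):
        real = rng.gauss(4.0, 0.5)   # sample from the "real" data (assumed)
        z = rng.gauss(0.0, 1.0)      # random noise fed to the generator
        fake = a * z + b

        # Discriminator step: minimise -log D(real) - log(1 - D(fake)).
        d_real = sigmoid(w * real + c)
        d_fake = sigmoid(w * fake + c)
        grad_w = -(1.0 - d_real) * real + d_fake * fake
        grad_c = -(1.0 - d_real) + d_fake
        w -= lr * grad_w
        c -= lr * grad_c

        # Generator step: minimise -log D(fake) (non-saturating loss).
        d_fake = sigmoid(w * fake + c)
        grad_fake = -(1.0 - d_fake) * w
        a -= lr * grad_fake * z
        b -= lr * grad_fake
    return (a, b), (w, c)


generator, discriminator = train_toy_gan()
print("generator (a, b):", generator)
print("discriminator (w, c):", discriminator)
```

In a real system both networks would be deep neural networks trained with an automatic differentiation library, and the samples would be encoded structures rather than single numbers. The loop structure (score real and fake samples, update the discriminator, then update the generator) is the part that carries over.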

Figure 2: GAN training process overview


While this GAN approach provides a mechanism for training a neural network to produce Angry Birds structures, it does have some requirements in terms of the input and output format. Namely, the input and output structures must be able to be represented as a matrix (i.e., a grid) of numerical values. As the structures for Angry Birds are made up of blocks with real-valued dimensions and positions, we must first encode these structures into a suitable grid-based representation before they can be used in our GAN. Likewise, we will also need to convert any generated structure from this grid-based representation back into a valid Angry Birds structure.

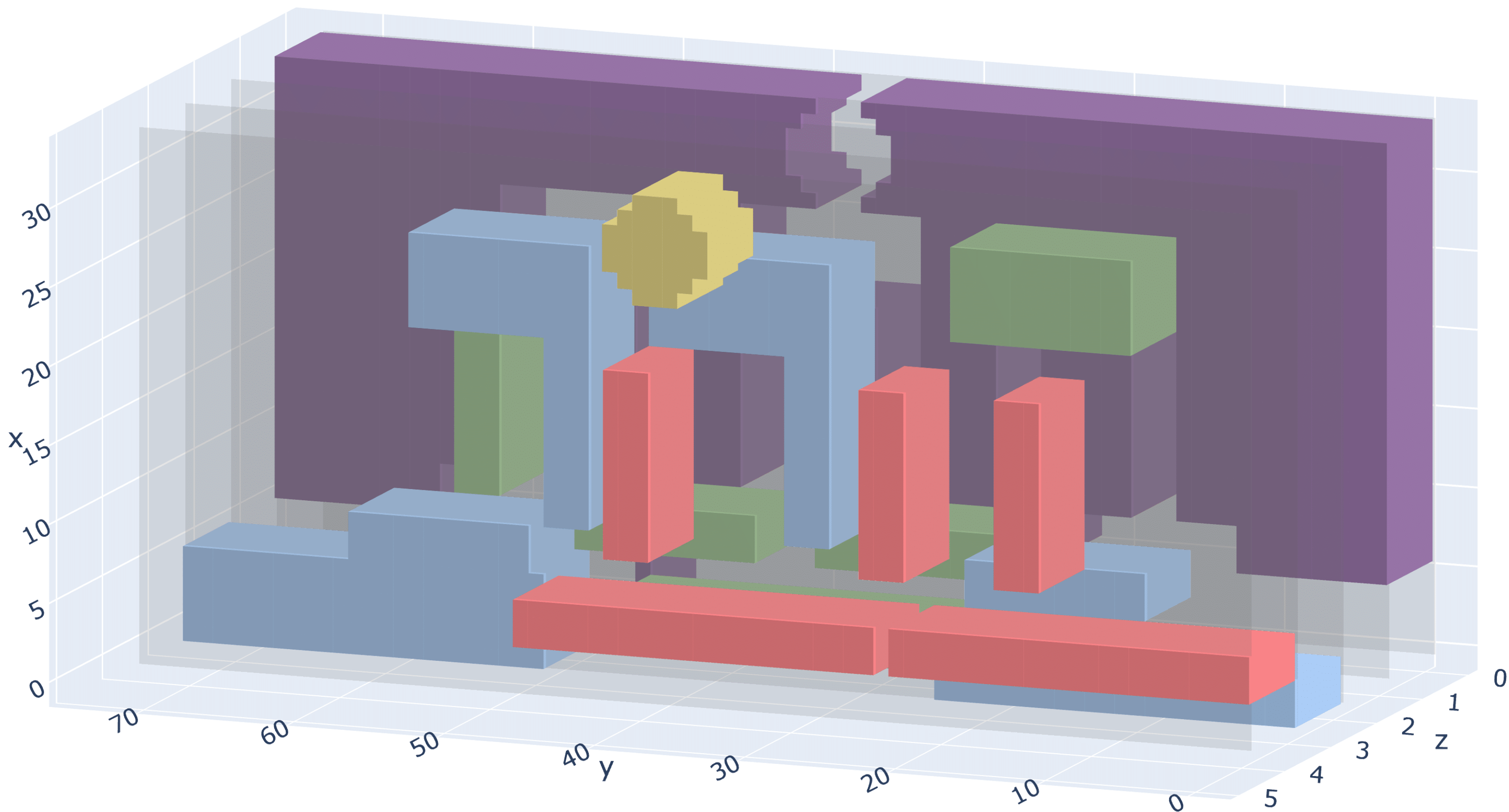

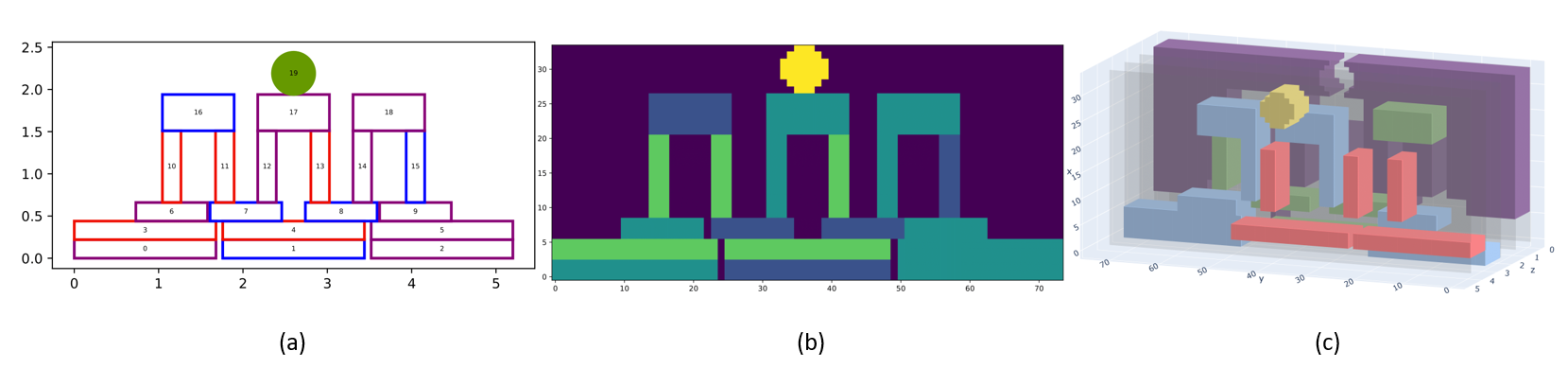

The structure encoding process works by placing a fixed-size grid over the entire structure and filling any grid cells that have the majority of their area covered, converting the example structure shown in figure 3(a) to figure 3(b). After this, each of the five object types available in Angry Birds is split into its own layer, converting the single-layer representation in figure 3(b) into a multi-layer representation in figure 3(c). The five object types considered are wood, ice and stone blocks (the three possible block materials), pigs and empty space. This provides us with a 3-dimensional matrix representation of any Angry Birds structure, where a highlighted cell indicates the existence of the corresponding object type at this position. This process is used to encode our dataset of “real” Angry Birds structures that will then be used to train our GAN model.
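The encoding step can be sketched in a few lines of plain Python. This is an illustrative sketch under stated assumptions rather than the published implementation: blocks are axis-aligned rectangles given as a hypothetical `(x, y, width, height, kind)` tuple with `(x, y)` the lower-left corner, pigs are treated as rectangles for simplicity, row 0 is the ground row, and the function name `encode` and the cell size are made up for the example.

```python
OBJECT_TYPES = ["wood", "ice", "stone", "pig", "empty"]


def overlap(lo1, hi1, lo2, hi2):
    """Length of the overlap between intervals [lo1, hi1] and [lo2, hi2]."""
    return max(0.0, min(hi1, hi2) - max(lo1, lo2))


def encode(blocks, cols, rows, cell=1.0):
    """Rasterise blocks into one binary layer per object type.

    A cell is filled when a single block covers more than half of its area.
    """
    grid = [["empty"] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            x0, y0 = c * cell, r * cell
            for x, y, w, h, kind in blocks:
                area = (overlap(x0, x0 + cell, x, x + w)
                        * overlap(y0, y0 + cell, y, y + h))
                if area > 0.5 * cell * cell:
                    grid[r][c] = kind
                    break
    # Split the single-layer grid into one 0/1 layer per object type.
    return {
        kind: [[1 if grid[r][c] == kind else 0 for c in range(cols)]
               for r in range(rows)]
        for kind in OBJECT_TYPES
    }


blocks = [
    (0.0, 0.0, 1.0, 2.0, "wood"),   # left pillar
    (2.0, 0.0, 1.0, 2.0, "wood"),   # right pillar
    (0.0, 2.0, 3.0, 0.6, "stone"),  # beam resting on both pillars
    (1.1, 0.0, 0.8, 0.8, "pig"),    # pig sheltered underneath
]
layers = encode(blocks, cols=3, rows=3)
for kind in OBJECT_TYPES:
    print(kind, layers[kind])
```

Note that the thin stone beam (height 0.6) still fills its row because it covers more than half of each cell, which is exactly the kind of rounding that makes the later decoding step necessary.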

Figure 3: Structure encoding process


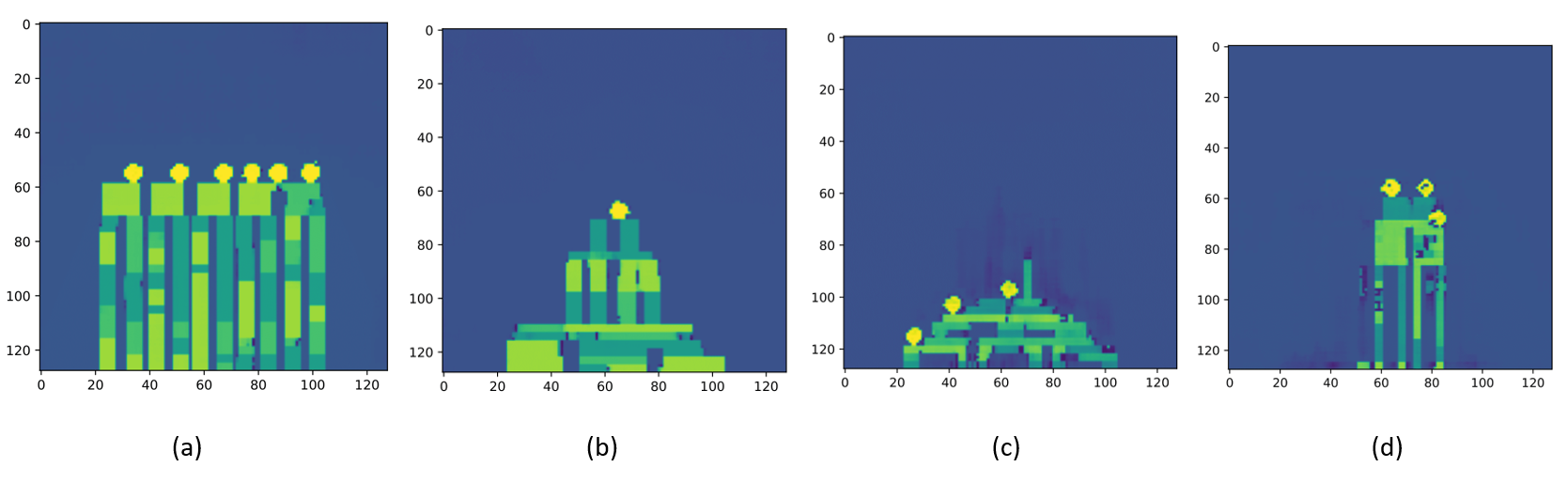

The GAN's generator network will also be trained to output structures in the same encoded representation given in figure 3(c), but with each cell instead containing a probability between 0% and 100% that the corresponding object type is present. By flattening these layers back into a 2D image, we can produce heatmap-style representations of our generated Angry Birds structures, such as those shown in figure 4. However, we now need some way to decode these images back into valid Angry Birds structures.
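One simple way to flatten per-type probability layers into a single 2D image is to keep the most probable object type in each cell, together with its probability. The article does not specify how the flattening is done, so the per-cell maximum used here is an assumption, and the function name `flatten` and the sample values are hypothetical.

```python
def flatten(prob_layers):
    """Collapse per-type probability layers into (best_type, probability) per cell."""
    kinds = list(prob_layers)
    rows = len(prob_layers[kinds[0]])
    cols = len(prob_layers[kinds[0]][0])
    flat = []
    for r in range(rows):
        row = []
        for c in range(cols):
            # Pick the object type with the highest probability in this cell.
            best = max(kinds, key=lambda k: prob_layers[k][r][c])
            row.append((best, prob_layers[best][r][c]))
        flat.append(row)
    return flat


# A 1 x 2 grid of made-up generator outputs.
generated = {
    "wood":  [[0.9, 0.2]],
    "stone": [[0.05, 0.1]],
    "empty": [[0.05, 0.7]],
}
heatmap = flatten(generated)
print(heatmap)
```

Rendering each cell with a colour for its type and an intensity for its probability would give a heatmap-style image of the kind described above.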

Figure 4: Generated Angry Birds structures (encoded)


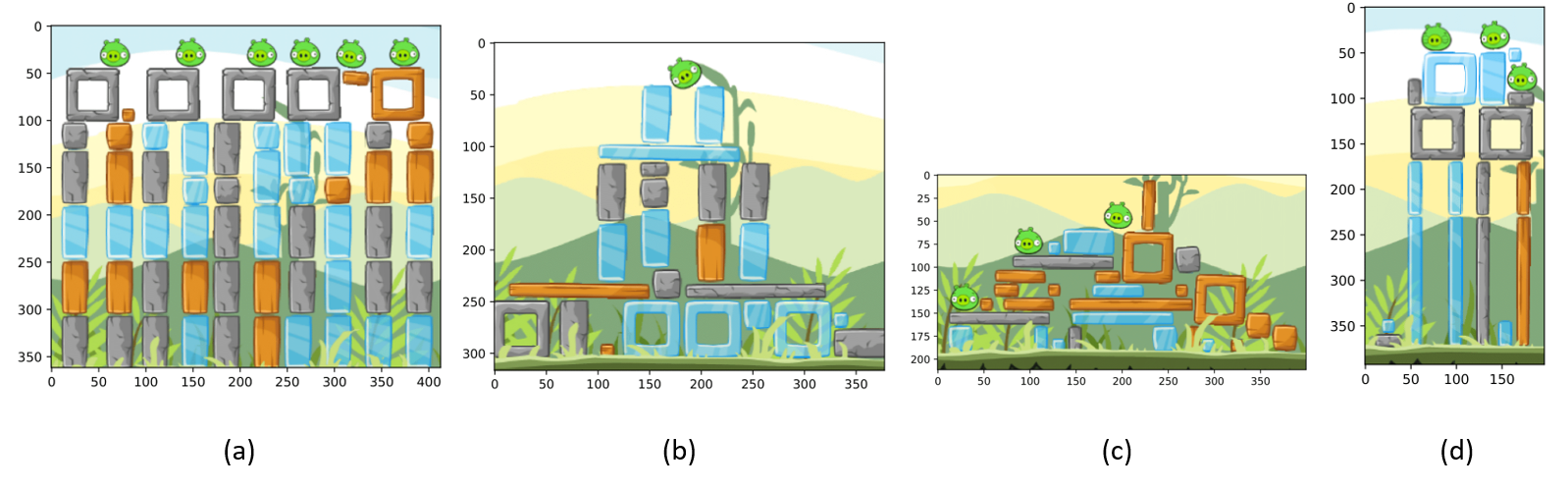

Our process for decoding these images works by calculating how well each possible block shape would match the encoded image at each possible position, with a higher weighting given to larger blocks and ignoring any cases where blocks would overlap. The block type and position with the highest value is then placed. This process then repeats until our encoded image has been fully covered with real Angry Birds blocks, resulting in the corresponding structures shown in figure 5.
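The greedy decoding loop can be sketched as follows. This is a simplified illustration, not the authors' decoder: it assumes a single grid of "solid" probabilities (ignoring materials and pigs), a hypothetical list of block shapes measured in grid cells, a made-up scoring rule, and a stopping rule that halts once no remaining placement scores above zero. It also does not check physical stability.

```python
SHAPES = [(1, 1), (2, 1), (1, 2), (3, 1)]  # hypothetical (width, height) in cells


def decode(probs, shapes=SHAPES):
    """Greedily cover a probability grid with non-overlapping blocks.

    Returns a list of (row, col, width, height) placements, best first.
    """
    rows, cols = len(probs), len(probs[0])
    used = [[False] * cols for _ in range(rows)]
    placed = []
    while True:
        best, best_score = None, 0.0
        for w, h in shapes:
            for r in range(rows - h + 1):
                for c in range(cols - w + 1):
                    cells = [(r + dr, c + dc)
                             for dr in range(h) for dc in range(w)]
                    if any(used[y][x] for y, x in cells):
                        continue  # ignore placements overlapping a placed block
                    # Summing (p - 0.5) rewards good fits and favours larger blocks.
                    score = sum(probs[y][x] - 0.5 for y, x in cells)
                    if score > best_score:
                        best, best_score = (r, c, w, h), score
        if best is None:
            return placed  # nothing left that improves the match
        r, c, w, h = best
        for dr in range(h):
            for dc in range(w):
                used[r + dr][c + dc] = True
        placed.append(best)


# Row 0 is the ground row: two pillars with a beam across the top.
probs = [
    [0.9, 0.1, 0.9],
    [0.9, 0.1, 0.9],
    [0.8, 0.8, 0.8],
]
placements = decode(probs)
print(placements)
```

On this small grid the three-cell beam is placed first because it has the highest total score, followed by the two pillars, and the low-probability cells in the middle are left empty.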

Figure 5: Generated Angry Birds structures (decoded)


While far from perfect, we believe that this approach opens a whole new avenue for GAN approaches to be applied across many types of games. This not only helps fill any content gaps that restrict agent development, but also provides a fun and novel way for players to create their own level designs. As part of this research, we have also published an interactive structure design tool that allows users to experiment with training different GAN architectures and refining the produced results. This application, along with all code and data used for this research, is available at the GitHub link below.

- Published paper: Utilizing Generative Adversarial Networks for Stable Structure Generation in Angry Birds, Frederic Abraham and Matthew Stephenson

- GitHub code

Note: This work won the award for Best Artifact at the Nineteenth AAAI Conference on Artificial Intelligence and Interactive Digital Entertainment (AIIDE2023).
