AIhub.org

Interview with Aaquib Tabrez: explainability and human-robot interaction

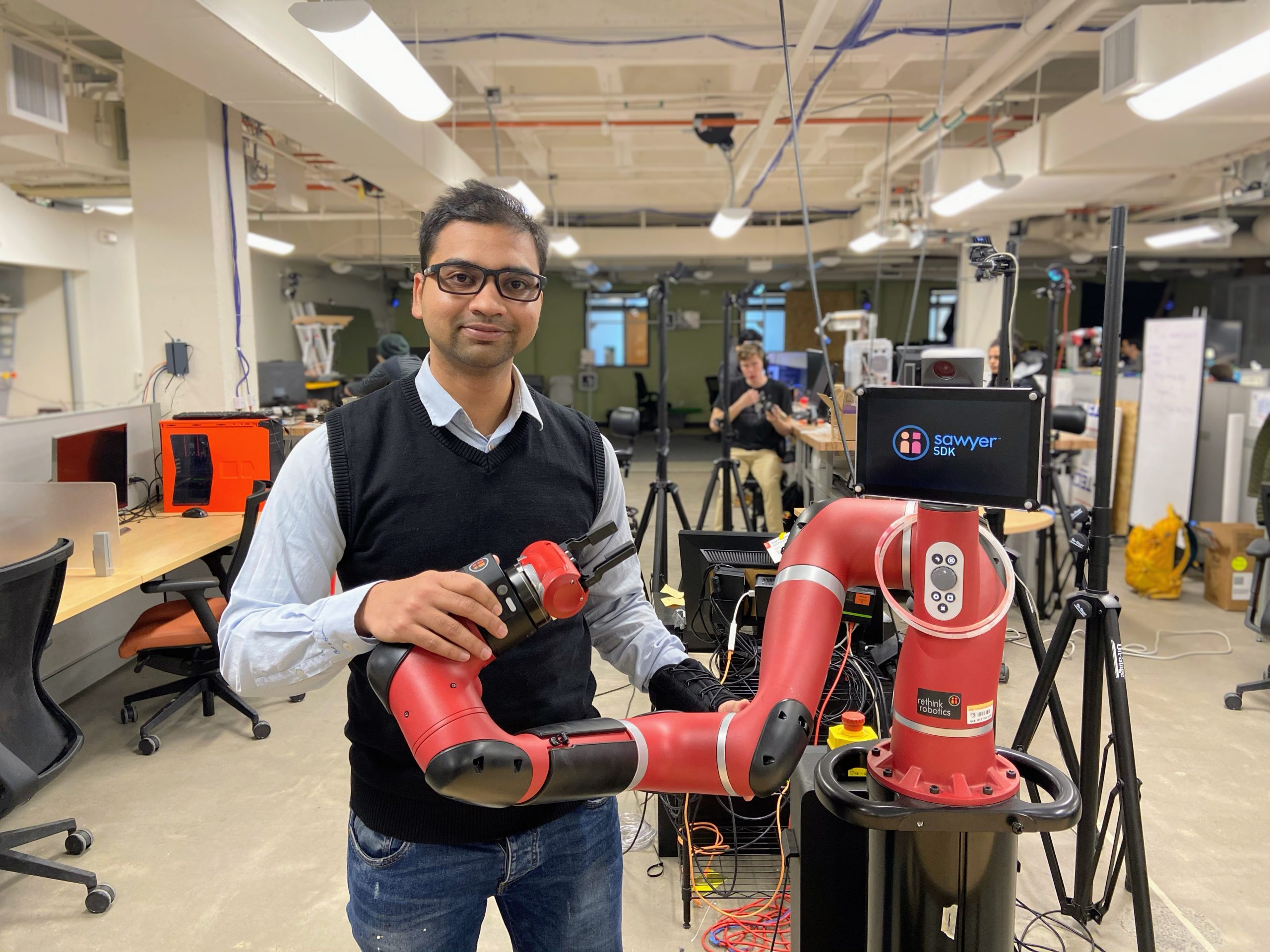

The AAAI/SIGAI Doctoral Consortium provides an opportunity for a group of PhD students to discuss and explore their research interests and career objectives in an interdisciplinary workshop together with a panel of established researchers. This year, 30 students were selected for this programme, and we’ve been meeting them and talking about their research. In this interview, Aaquib Tabrez tells us about his work at the intersection of explainability and human-robot interaction.

Tell us a bit about your PhD – where are you studying, and what is the topic of your research?

I am doing my PhD at the University of Colorado Boulder in the Department of Computer Science, where I am advised by Professor Brad Hayes. My research focuses on teaching robots and autonomous systems to communicate more effectively with people through explainable AI. The aim is to make these systems more transparent, trustworthy, and reliable across various human-robot team settings, including manufacturing, autonomous driving, and search and rescue operations. Specifically, my work investigates how autonomous agents can convey their decision-making processes and guide or coach human behavior in joint tasks, especially in scenarios where there are expectation mismatches between humans and robots.

Could you give us an overview of the research you’ve carried out so far during your PhD?

My research revolves around two broad themes. The first involves designing novel explainable robotic coaching frameworks that infer the human collaborator’s mental model—how people make decisions and their understanding of the world—and provide corrective explanations. This includes developing algorithms for collaboration scenarios and validating them through user studies. The second theme focuses on the human-centric aspects of human-robot communication, drawing from interfacing, sociology, and psychology to determine what information should be shared, the most effective communication modalities, and the optimal timing for communication. I have explored natural language communication, augmented reality interfaces, and behavioral cues to calibrate trust and competence, adapting the communication modality to the application’s needs. Additionally, I investigated situations where people may over-trust or under-trust the recommendations and guidance provided by robots. My research explores various methods and techniques to mitigate these mismatches in trust by offering suitable explanations from the robots.

Is there an aspect of your research that has been particularly interesting?

What I find particularly interesting about my research is its people-centric approach, which emphasizes re-integrating human perspectives into AI development. We aim to design robots and autonomous systems with a focus on the people who will use them, making these technologies more transparent and understandable, especially for everyday users. Therefore, I conduct user studies with people and gather their feedback on these technologies. Working with people is challenging; you can’t have one model or solution that satisfies everyone. You have to identify the small things that people appreciate or need. By doing so, we can empower people with this technology, rather than having them be beholden to it.

What are your plans for building on your research so far during the PhD – what aspects will you be investigating next?

That’s an interesting question, one I’ve been thinking about a lot recently. Some ideas that particularly fascinate me include making robotics technology more adaptable and resilient to changes, much like humans. I’m also focusing on LLM-driven technologies and assistive systems. We know that people can become overly reliant on this type of technology. I want to explore ways to implement guardrails and frictions within these systems to prevent excessive dependence and ensure users can take control in suboptimal situations. Specifically, when deploying these technologies in robotics systems, I want to incorporate some verifiability and accountability, especially during safety-critical scenarios, by leveraging new tools, such as virtual reality, to make these technologies safer and more transparent for end-users.

What made you want to study AI?

I was curious about how people think and make decisions. I thought replicating these qualities and deploying them into robots would be the best way to learn about human behavior and understand how these systems can actually help improve day-to-day life problems. Plus, I was a big fan of H. G. Wells growing up, and that also nudged me to explore how humans and robots can work together.

What advice would you give to someone thinking of doing a PhD in the field?

For anyone considering a PhD, clarity in your objectives and understanding why you want to do a PhD is important. It’s a journey filled with challenges but also deep connections and personal growth. One piece of advice would be to ensure you have a supportive circle and collaborate often, as different perspectives will help you overcome the challenges you will face in your PhD. Also, make sure you have open and honest communication with your advisor. Be transparent about what is helping you and what isn’t. Don’t hesitate to ask for help when you’re not making progress.

Could you tell us an interesting (non-AI related) fact about you?

I love dancing, particularly swing dancing, and I’m currently learning salsa, rueda, and bachata. Besides dancing, I enjoy reading poetry and hiking. Colorado’s beautiful mountains provide the perfect backdrop for hiking, which I love to do in both summer and winter.

About Aaquib

Aaquib Tabrez is a Ph.D. candidate at the University of Colorado Boulder in the Computer Science Department, where he is advised by Professor Brad Hayes. Aaquib previously received a B.Tech. in Mechanical Engineering from NITK Surathkal (India). Aaquib works at the intersection of explainability and human-robot interaction, aiming to leverage and enhance multimodal human-machine communication for value alignment and fostering appropriate trust within human-robot teams. He’s an RSS and HRI Pioneer, and his work has earned best paper nomination awards from the HRI and AAMAS communities. Aaquib also enjoys philosophy, poetry, and swing dancing, along with running and hiking.

tags: AAAI, AAAI Doctoral Consortium, AAAI2024, ACM SIGAI