AIhub.org

Interview with Bálint Gyevnár: Creating explanations for AI-based decision-making systems

In a series of interviews, we’re meeting some of the AAAI/SIGAI Doctoral Consortium participants to find out more about their research. The Doctoral Consortium provides an opportunity for a group of PhD students to discuss and explore their research interests and career objectives in an interdisciplinary workshop together with a panel of established researchers. In this latest interview, we met Bálint Gyevnár and found out about his work creating explanations for AI-based decision-making systems.

Tell us a bit about your PhD – where are you studying, and what is the topic of your research?

I’m a PhD student nearing the end of my third year here at the University of Edinburgh. I’ve been lucky enough to study at this university for the last eight years, so I did my undergraduate and master’s degrees here as well. I really enjoy Edinburgh – it’s a very stimulating environment and there’s a lot of academic discussion, which prompted me to stay here. I actually have three supervisors: Stefano Albrecht, Chris Lucas and Shay Cohen. Stefano works in reinforcement learning and multi-agent systems, and he leads the Autonomous Agents Research Group. Chris is a cognitive scientist interested in how people explain and infer explanations about the world. Shay is interested in natural language processing. My research sits at the intersection of these three fields. If I had to use an umbrella term to describe what I’m working on, I would say I work on explainable AI. Specifically, I try to create explanations for AI-based decision-making systems in a way that is accessible to someone who is not involved in AI. I’m looking at sequential decision-making systems that have to decide what to do step-by-step and have to interact with their environment in various interesting ways.

Many of the AI systems today limit the decision-making agency that people previously had. Without having the necessary understanding of how these systems work, and having an idea of what they’re doing, it’s close to impossible to find out what has gone wrong when there is a problem with that decision making. This has implications for how we judge the safety of the systems, how we deal with them, and their public perception. So, the overall motivation of my work is really to try to improve the transparency of these systems by imbuing them with an ability to give explanations.

Is there a project in particular that’s been really interesting?

The most recent work that I’ve been doing has just been accepted at the Autonomous Agents and Multi-Agent Systems Conference (AAMAS). I was working with autonomous vehicles. We had scenarios where autonomous vehicles interact with other vehicles in the environment, and we tried to explain how and why they were behaving in certain ways.

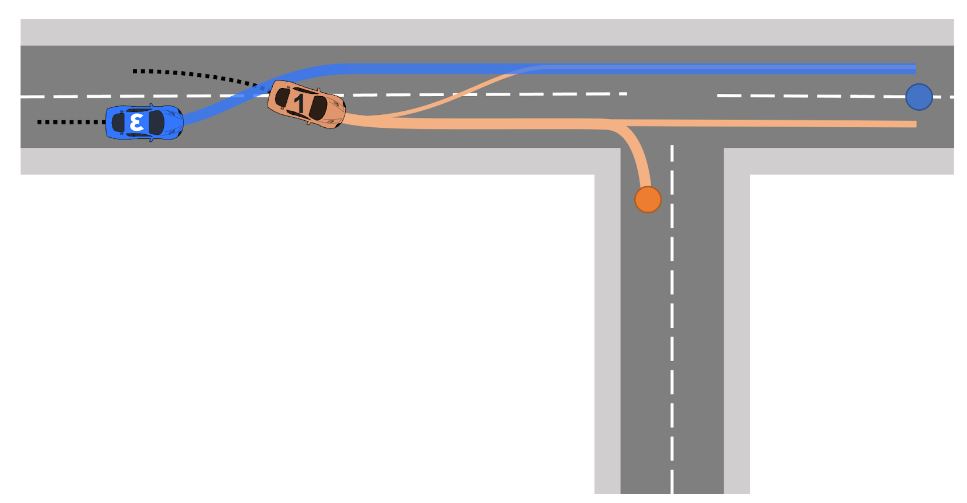

Figure 1. In this scenario the orange vehicle cuts in front of the blue self-driving car, which needs to react to this behaviour safely and efficiently. The actions it takes might prompt people to ask why it did that, and we need to answer them in an intelligible and clear way.

As an example, imagine that you are driving down a two-lane road, there is a T-junction coming up, and someone cuts in front of you and then starts to slow down. What we would observe an autonomous vehicle do, for example, is change into the other lane, so it can pass the vehicle that has just gone in front of it. One question that might arise for the person sitting in the car, when they notice this sort of behaviour, is why the car took the action it did. What many current explainable AI systems give as an explanation is not necessarily useful. They might say something like “we’ve changed lanes because features X, Y and Z had importance of 5.2, -3.5, 15.6”. These are completely arbitrary numbers, but the point I’m trying to illustrate here is that they have these features, i.e. how far away the vehicle in front is, how quickly the vehicle in front is going, etc., and then they order them in some way that doesn’t really tell you much about how these features impacted the decision of the system. A complicated system would have thousands of these features, and it would just give you a ranking of which ones had contributed to the decision. If you gave this explanation to a person, it wouldn’t be of any use.

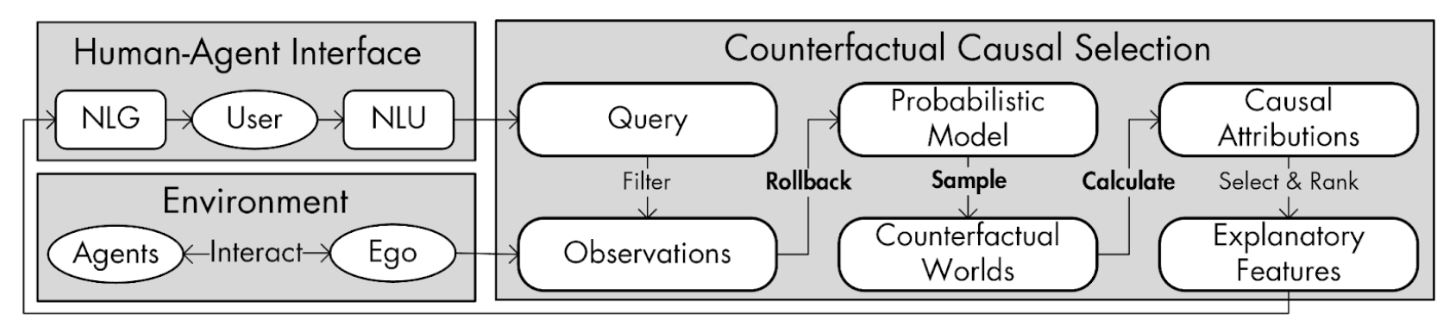

Figure 2. Our proposed causal explanation generation system, called CEMA, can create intelligible explanations within a conversational framework in response to users’ queries about any multi-agent autonomous system.

Instead, we would like to give them causal explanations, which means giving the explanation in terms of a cause-and-effect relationship. So, what we could say is “we changed lanes because the vehicle in front of us cut in”. You can also elaborate on that, and this is a core piece of what I’m doing. You can have this conversation with the user where you can refine the explanation step-by-step. And then, if they ask “OK, but why would you change lanes just because they cut in front of us?”, the explanation could say “they would have slowed us down because they were presumably turning right”, or “it was unsafe because they were too close to us, and so in order to be safer and maintain distance, we just changed to the left”. We argue that these sorts of explanations are more conducive to improving people’s understanding of how that system actually has made these decisions. What I find really cool about this is that the system that we are proposing is actually agnostic to the AI system that you’re using.
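To make the contrast with feature-importance rankings concrete, here is a minimal sketch of a counterfactual, cause-and-effect style of explanation. It is not CEMA and not the method from the interview: `toy_policy`, the state fields (`gap_m`, `lead_speed_mps`, `own_speed_mps`), the 15-metre threshold and the counterfactual values are all hypothetical, chosen only for illustration. The sketch treats the decision-maker as a black box, changes one circumstance at a time, and reports only the circumstances whose change would have altered the action.

```python
from typing import Callable, Dict, List

State = Dict[str, float]


def toy_policy(state: State) -> str:
    """Hypothetical black-box driving policy (an assumption for this sketch)."""
    too_close = state["gap_m"] < 15.0
    lead_slower = state["lead_speed_mps"] < state["own_speed_mps"]
    return "change lane" if too_close and lead_slower else "keep lane"


def causal_explanation(
    policy: Callable[[State], str],
    observed: State,
    counterfactuals: Dict[str, State],
) -> List[str]:
    """Return one sentence per circumstance whose change flips the action.

    The policy is only ever queried, never inspected, so any
    decision-maker with the same call signature could be plugged in.
    """
    actual = policy(observed)
    sentences = []
    for description, changes in counterfactuals.items():
        # Re-run the policy in a world where this one circumstance differs.
        alternative = policy({**observed, **changes})
        if alternative != actual:
            sentences.append(
                f"We chose '{actual}' because {description}; "
                f"otherwise we would have chosen '{alternative}'."
            )
    return sentences


# Observed situation: a slow vehicle has just cut in close ahead of us.
observed = {"gap_m": 8.0, "lead_speed_mps": 6.0, "own_speed_mps": 13.0}

# Each candidate cause is paired with a hypothetical "what if it had not happened".
counterfactuals = {
    "the vehicle in front cut in close to us": {"gap_m": 40.0},
    "the vehicle in front was slowing down": {"lead_speed_mps": 13.0},
    "we were driving at our current speed": {"own_speed_mps": 12.0},
}

explanations = causal_explanation(toy_policy, observed, counterfactuals)
for sentence in explanations:
    print(sentence)
```

In this toy setting, changing our own speed slightly does not alter the decision, so it is left out of the explanation, while the cut-in and the slowing vehicle are both reported as causes. A follow-up question from the user could be handled by supplying a new set of counterfactuals, which loosely mirrors the step-by-step refinement described above.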

What are you planning to work on next?

One potential idea that we have been looking into is really focusing on this conversational aspect and refining the explanation goals with the user. This would involve taking people into the office and putting them down in front of a computer and getting them to chat with the system and seeing how they behave. This would be more human-agent interaction-related analysis, rather than just focusing on the computational aspect, which is very much what a lot of people in explainable AI do – they ignore the stakeholders and don’t focus on the usability aspect.

I wanted to ask about AAAI and the Doctoral Consortium. How was the experience in general?

This was the first time that I’d attended such a large-scale conference, and I really enjoyed it. I found the interactions with people were really stimulating, although it was also super tiring because the programme went from 8:30 to 21:00, so they were long days. Specifically for the doctoral consortium, I found that there were a lot of people working in similar areas to me. And without actually having ever talked to these people, there is an increasing sentiment that supports the ideas that I have been fostering as well, the sort of more human-oriented, human-centred explanation creation for AI systems. That seems to be an increasingly popular topic, and I saw this at the doctoral consortium as well. The interaction with fellow students was really interesting. I saw some really cool work which I’m reading more about, and I’m hoping to see more from these people in the future. The poster session was good too.

We also had an interesting talk from Professor Peter Stone about academic job talks. I found that really useful because I am getting to the point now where I have to think about my future. Seeing how the academic hiring process works was enlightening and will help me make my decisions in the future. There was a particular slide in Peter’s talk which caught my attention. He said that your job talk should be accessible to everyone, even those who don’t have the necessary understanding to clearly get the technicalities of your work, except for one slide where you should lose everyone so you seem smart!

What was it that made you want to study AI, and to go into this field in particular?

Well, I was interested in machine learning back in 2017. My first exposure to it was taking Andrew Ng’s deep learning course on Coursera, which back then was a lot smaller and a lot less fleshed out. Then I had an internship at a self-driving car company here in Edinburgh. That was during COVID, so it was all remote – unfortunately, I never got to see the office. But, we were working on a paper there, which is tangentially related to what I’m doing, and I really enjoyed the collaborative atmosphere and the critical thinking where people were open to sharing views. I didn’t feel like I had to hide my opinions from my supervisor if I disagreed with something. There was no toxic workplace politics. So, I think that really appealed to me. Also, the whole process of writing up the paper, doing the experiments, and getting the acceptance, all culminated in the decision to try to pursue a PhD. I really enjoyed the sort of academic life that I experienced doing that internship. And then, when I was doing my master’s in Edinburgh, I had a good rapport with my supervisor and so we decided to extend it for another four years [by doing the PhD]. It’s a four-year programme because I’m part of the Centre for Doctoral Training (CDT) programme.

If you were to give any potential PhD students some advice, what would you say about doing a PhD?

I would say to really try to find something that they find really interesting, and try to find a supervisor somewhere in the world that is interested in the same thing. What I’ve found is that people often look at the names of the supervisors and the publication counts, and they look for a prominent supervisor. They may not care about that field, but they just want to work with the person. Sometimes that may work out. But if there is something you are really passionate about, you should focus on that. Write a really good research proposal that shows your excitement for that and find a person that matches your interests.

Could you tell us an interesting fact about you?

I play the piano quite seriously. I used to take part in competitions back in my home country of Hungary. I still play a lot and I find that playing music is a really good way to remove some stress from your life and to distract yourself from the “real world”. You can get into this magical space where you can explore harmonies and I think that’s really beautiful and I intend to keep it up.

About Bálint

Bálint Gyevnár is a penultimate-year PhD student at the University of Edinburgh supervised by Stefano Albrecht, Shay Cohen, and Chris Lucas. He focuses on building socially aligned explainable AI technologies for end users, drawing on research from multiple disciplines including autonomous systems, cognitive science, natural language processing, and the law. His goal is to create safer and more trustworthy autonomous systems that everyone can use and trust appropriately regardless of their knowledge of AI systems. He has authored or co-authored several works at high-profile conferences such as ECAI, AAMAS, ICRA, and IROS, and has been the recipient of multiple early career research awards from Stanford, UK Research and Innovation, and the IEEE Intelligent Transportation Systems Society.

tags: AAAI, AAAI Doctoral Consortium, AAAI2024, ACM SIGAI