AIhub.org

New computer vision method helps speed up screening of electronic materials

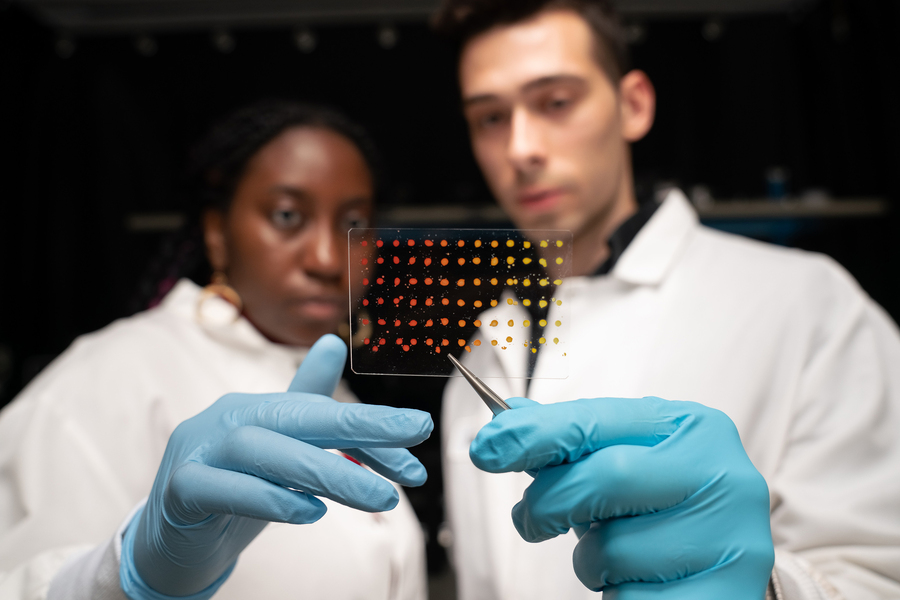

MIT graduate students Eunice Aissi, left, and Alexander Siemenn have developed a technique that automatically analyzes visual features in printed samples (pictured) to quickly determine key properties of new and promising semiconducting materials. Credit: Bryce Vickmark.

By Jennifer Chu

Boosting the performance of solar cells, transistors, LEDs, and batteries will require better electronic materials, made from novel compositions that have yet to be discovered.

To speed up the search for advanced functional materials, scientists are using AI tools to identify promising materials from hundreds of millions of chemical formulations. In tandem, engineers are building machines that can print hundreds of material samples at a time based on chemical compositions tagged by AI search algorithms.

But to date, there’s been no similarly speedy way to confirm that these printed materials actually perform as expected. This last step of material characterization has been a major bottleneck in the pipeline of advanced materials screening.

Now, a new computer vision technique developed by MIT engineers significantly speeds up the characterization of newly synthesized electronic materials. The technique automatically analyzes images of printed semiconducting samples and quickly estimates two key electronic properties for each sample: band gap (a measure of electron activation energy) and stability (a measure of longevity).

The new technique accurately characterizes electronic materials 85 times faster than the standard benchmark approach.

The researchers intend to use the technique to speed up the search for promising solar cell materials. They also plan to incorporate the technique into a fully automated materials screening system.

“Ultimately, we envision fitting this technique into an autonomous lab of the future,” says MIT graduate student Eunice Aissi. “The whole system would allow us to give a computer a materials problem, have it predict potential compounds, and then run 24-7 making and characterizing those predicted materials until it arrives at the desired solution.”

“The application space for these techniques ranges from improving solar energy to transparent electronics and transistors,” adds MIT graduate student Alexander (Aleks) Siemenn. “It really spans the full gamut of where semiconductor materials can benefit society.”

Aissi and Siemenn detail the new technique in a study in Nature Communications. Their MIT co-authors include graduate student Fang Sheng, postdoc Basita Das, and professor of mechanical engineering Tonio Buonassisi, along with former visiting professor Hamide Kavak of Cukurova University and visiting postdoc Armi Tiihonen of Aalto University.

Power in optics

Once a new electronic material is synthesized, the characterization of its properties is typically handled by a “domain expert” who examines one sample at a time using a benchtop tool called a UV-Vis, which scans through different colors of light to determine where the semiconductor begins to absorb more strongly. This manual process is precise but also time-consuming: A domain expert typically characterizes about 20 material samples per hour — a snail’s pace compared to some printing tools that can lay down 10,000 different material combinations per hour.

“The manual characterization process is very slow,” Buonassisi says. “They give you a high amount of confidence in the measurement, but they’re not matched to the speed at which you can put matter down on a substrate nowadays.”

To speed up the characterization process and clear one of the largest bottlenecks in materials screening, Buonassisi and his colleagues looked to computer vision — a field that applies computer algorithms to quickly and automatically analyze optical features in an image.

“There’s power in optical characterization methods,” Buonassisi notes. “You can obtain information very quickly. There is richness in images, over many pixels and wavelengths, that a human just can’t process but a computer machine-learning program can.”

The team realized that certain electronic properties — namely, band gap and stability — could be estimated based on visual information alone, if that information were captured with enough detail and interpreted correctly.

With that goal in mind, the researchers developed two new computer vision algorithms to automatically interpret images of electronic materials: one to estimate band gap and the other to determine stability.

The first algorithm is designed to process visual data from highly detailed, hyperspectral images.

“Instead of a standard camera image with three channels — red, green, and blue (RGB) — the hyperspectral image has 300 channels,” Siemenn explains. “The algorithm takes that data, transforms it, and computes a band gap. We run that process extremely fast.”
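To give a feel for what "transforms it, and computes a band gap" could involve, here is a minimal sketch for a single pixel's spectrum. The article does not say which transform the team uses, so this sketch swaps in a Tauc-style linear extrapolation, a common textbook approach for direct-gap semiconductors. The function names, the fit window (`fit_fraction`), the 400 to 1000 nm range, and the synthetic spectrum are all assumptions made for illustration.

```python
HC_EV_NM = 1239.84  # photon energy in eV = HC_EV_NM / wavelength in nm


def fit_line(xs, ys):
    """Ordinary least-squares fit; returns (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def estimate_band_gap(wavelengths_nm, absorption, fit_fraction=0.3):
    """Hypothetical helper: extrapolate the steep part of (alpha*E)^2 to zero."""
    energies = [HC_EV_NM / w for w in wavelengths_nm]
    tauc = [(a * e) ** 2 for a, e in zip(absorption, energies)]
    # Assumption: channels above a fraction of the peak lie on the linear edge.
    cutoff = fit_fraction * max(tauc)
    xs = [e for e, t in zip(energies, tauc) if t >= cutoff]
    ys = [t for t in tauc if t >= cutoff]
    slope, intercept = fit_line(xs, ys)
    return -intercept / slope  # energy where the fitted line crosses zero


def synthetic_spectrum(band_gap_ev, n_channels=300):
    """Idealized direct-gap absorption sampled on 300 channels (made-up data)."""
    wavelengths = [400.0 + 600.0 * i / (n_channels - 1) for i in range(n_channels)]
    absorption = []
    for w in wavelengths:
        e = HC_EV_NM / w
        absorption.append(max(e - band_gap_ev, 0.0) ** 0.5 / e)
    return wavelengths, absorption


wavelengths, absorption = synthetic_spectrum(1.6)
print(round(estimate_band_gap(wavelengths, absorption), 3))  # 1.6
```

Real spectra are noisy and rarely this clean, so the choice of fit window matters far more in practice than it does on this synthetic curve.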

The second algorithm analyzes standard RGB images and assesses a material’s stability based on visual changes in the material’s color over time.

“We found that color change can be a good proxy for degradation rate in the material system we are studying,” Aissi says.

Material compositions

The team applied the two new algorithms to characterize the band gap and stability for about 70 printed semiconducting samples. They used a robotic printer to deposit samples on a single slide, like cookies on a baking sheet. Each deposit was made with a slightly different combination of semiconducting materials. In this case, the team printed different ratios of perovskites, a type of material that is expected to be a promising solar cell candidate but is also known to degrade quickly.

“People are trying to change the composition — add a little bit of this, a little bit of that — to try to make [perovskites] more stable and high-performance,” Buonassisi says.

Once they printed 70 different compositions of perovskite samples on a single slide, the team scanned the slide with a hyperspectral camera. Then they applied an algorithm that visually “segments” the image, automatically isolating the samples from the background. They ran the new band gap algorithm on the isolated samples and automatically computed the band gap for every sample. The entire band gap extraction process took about six minutes.
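The segmentation step can be pictured with a very simple stand-in: threshold the image, then group neighboring above-threshold pixels into separate samples. This is a minimal sketch and not the team's algorithm, which the article does not describe. The function name `segment_samples`, the threshold value, and the toy "slide" are assumptions for illustration.

```python
from collections import deque


def segment_samples(image, threshold):
    """Group 4-connected pixels brighter than `threshold` into regions.

    Returns a list of regions, each a list of (row, col) pixel coordinates.
    """
    rows, cols = len(image), len(image[0])
    seen = [[False] * cols for _ in range(rows)]
    regions = []
    for r in range(rows):
        for c in range(cols):
            if seen[r][c] or image[r][c] <= threshold:
                continue
            # Breadth-first flood fill starting from an unvisited sample pixel.
            queue = deque([(r, c)])
            seen[r][c] = True
            region = []
            while queue:
                y, x = queue.popleft()
                region.append((y, x))
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < rows and 0 <= nx < cols
                            and not seen[ny][nx] and image[ny][nx] > threshold):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            regions.append(region)
    return regions


# Toy grayscale "slide": two printed deposits on a dark background.
slide = [
    [0, 0, 0, 0, 0, 0],
    [0, 9, 9, 0, 0, 0],
    [0, 9, 9, 0, 8, 0],
    [0, 0, 0, 0, 8, 0],
]
regions = segment_samples(slide, threshold=5)
print(len(regions), [len(region) for region in regions])  # 2 [4, 2]
```

Once each region is isolated, a per-sample calculation such as a band gap estimate can be run on just those pixels instead of on the whole slide.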

“It would normally take a domain expert several days to manually characterize the same number of samples,” Siemenn says.

To test for stability, the team placed the same slide in a chamber in which they varied the environmental conditions, such as humidity, temperature, and light exposure. They used a standard RGB camera to take an image of the samples every 30 seconds over two hours. They then applied the second algorithm to the images of each sample over time to estimate the degree to which each droplet changed color, or degraded under various environmental conditions. In the end, the algorithm produced a “stability index,” or a measure of each sample’s durability.
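One way to turn "how much each droplet changed color" into a single number is to add up the color drift from the first frame over the whole exposure. The sketch below does that with a trapezoidal sum. It is an illustrative proxy under stated assumptions, not the team's stability index: the function name `color_change_score`, the use of per-frame mean RGB values, and the example colors are all hypothetical. Only the 30-second frame interval comes from the article.

```python
def color_change_score(mean_rgb_per_frame, interval_s=30.0):
    """Integrate |color(t) - color(0)| over time, summed over R, G, and B.

    `mean_rgb_per_frame` holds one (R, G, B) tuple per frame for one sample.
    A lower score means less color drift, read here as a more stable sample.
    """
    first = mean_rgb_per_frame[0]
    drift = [
        sum(abs(channel - start) for channel, start in zip(frame, first))
        for frame in mean_rgb_per_frame
    ]
    # Trapezoidal rule over consecutive frames spaced `interval_s` apart.
    return sum((a + b) / 2.0 * interval_s for a, b in zip(drift, drift[1:]))


# Made-up per-frame mean colors for two samples.
stable = [(120, 80, 40)] * 5
fading = [(120, 80, 40), (130, 80, 40), (140, 80, 40), (150, 80, 40), (160, 80, 40)]

print(color_change_score(stable))  # 0.0
print(color_change_score(fading))  # 2400.0
```

At one image every 30 seconds, a two-hour run spans 240 intervals, so each sample would contribute a few hundred frames to a sum like this.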

As a check, the team compared their results with manual measurements of the same droplets, taken by a domain expert. Compared to the expert’s benchmark estimates, the team’s band gap and stability results were 98.5 percent and 96.9 percent as accurate, respectively, and 85 times faster.

“We were constantly shocked by how these algorithms were able to not just increase the speed of characterization, but also to get accurate results,” Siemenn says. “We do envision this slotting into the current automated materials pipeline we’re developing in the lab, so we can run it in a fully automated fashion, using machine learning to guide where we want to discover these new materials, printing them, and then actually characterizing them, all with very fast processing.”

Read the work in full

Using scalable computer vision to automate high-throughput semiconductor characterization, Alexander E. Siemenn, Eunice Aissi, Fang Sheng, Armi Tiihonen, Hamide Kavak, Basita Das & Tonio Buonassisi, Nature Communications (2024).