AIhub.org

Interview with Pulkit Verma: Towards safe and reliable behavior of AI agents

In this interview series, we’re meeting some of the AAAI/SIGAI Doctoral Consortium participants to find out more about their research. The Doctoral Consortium provides an opportunity for a group of PhD students to discuss and explore their research interests and career objectives in an interdisciplinary workshop together with a panel of established researchers. In this latest interview, we hear from Pulkit Verma, a PhD graduate of Arizona State University.

Tell us a bit about your PhD – where did you study, and what was the topic of your research?

I recently completed my PhD in Computer Science at the School of Computing and Augmented Intelligence, Arizona State University, where I worked with Professor Siddharth Srivastava. My research focuses on the safe and reliable behavior of AI agents. I investigate the minimal set of requirements in an AI system that would enable a user to assess and understand the limits of its safe operability.

Could you give us an overview of the research you carried out during your PhD?

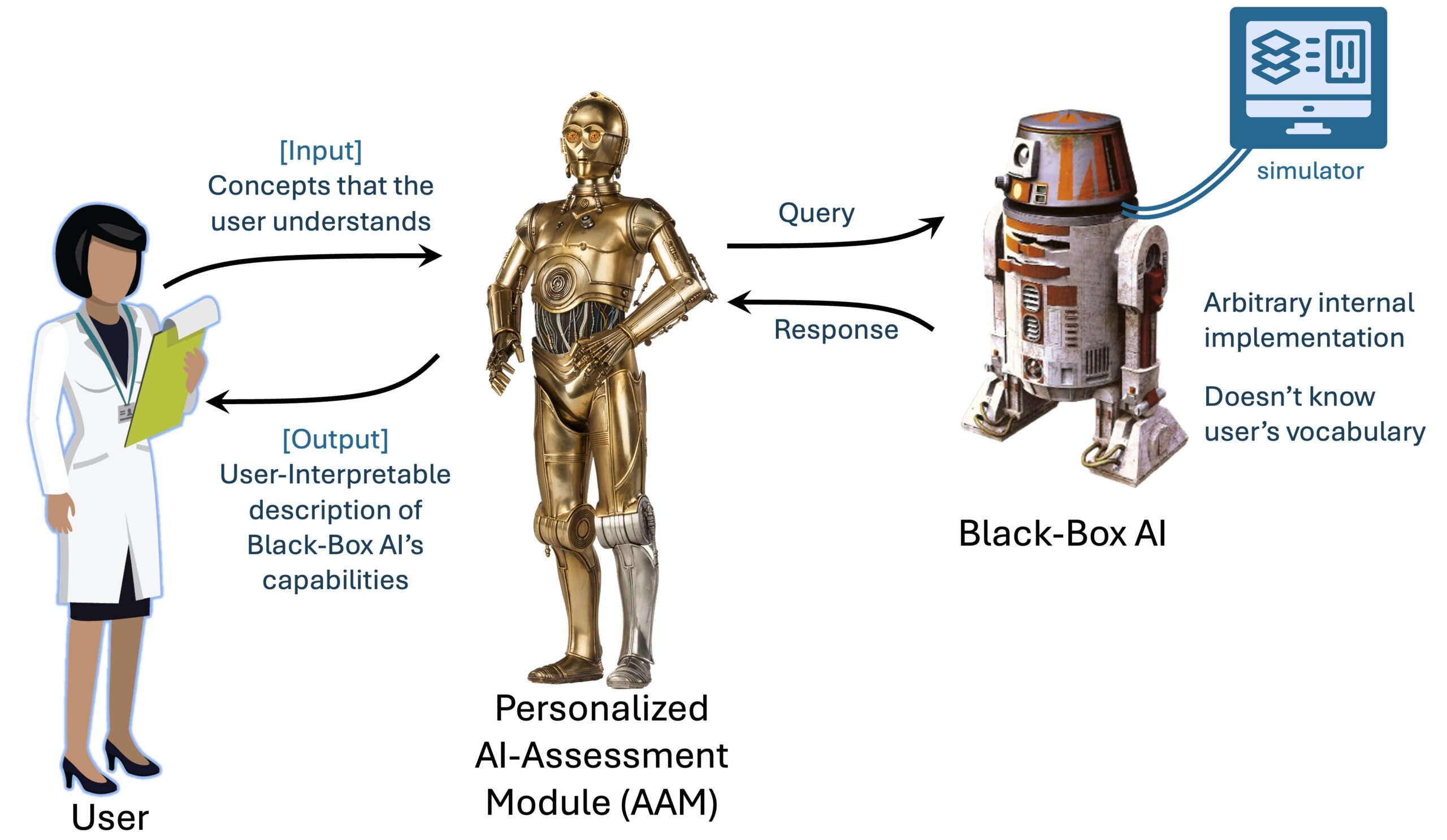

The growing deployment of AI systems presents a paramount challenge: ensuring safety and reliability in their use. Non-experts using black-box AI systems need to understand how these systems work and assess what they can and cannot do safely. One possible solution to this problem is to support third-party assessment of AI systems. To this end, one major contribution of my research is a formal definition of the problem of third-party assessment of black-box taskable AI systems. This is significant because black-box AI systems are becoming the norm, and manufacturers are often hesitant to share their internal workings. Consequently, it becomes imperative to assess these systems and reason about their behavior in various situations before deploying them in real-world settings. A formal definition of this problem will streamline efforts to solve it. My work also defines a set of requirements, in the form of a minimal query-response interface, that black-box AI systems should support to enable such assessments.

Is there an aspect of your research that has been particularly interesting?

An interesting aspect of my research is that our approach can personalize the description of how an AI system works. Suppose you want to explain how an autonomous car works to an automobile engineer. The explanation you give will be very different from the one you would give to a middle school student. The fact that we can do this in our settings, and autonomously at that, is really important. So, in addition to assessing a black-box AI system, we can also personalize the output of this assessment for each user, which is a really important step in increasing human trust in these AI systems. Ultimately, this personalization of AI explanations could be a key factor in accelerating the widespread adoption and acceptance of AI technologies in our society.

Do you have plans to build on this research following your PhD?

Yes, definitely. I believe this is an important research direction, and third-party assessment is an issue that is gaining more traction. This year, I also co-organized an AAAI symposium on this topic: User-Aligned Assessment of Adaptive AI Systems. I have now joined the Interactive Robotics Group at CSAIL, MIT, where I am working with Professor Julie Shah on a closely related problem: explaining how an AI system works to its human coworkers to enable efficient human-AI collaboration. In the long term, I also plan to work on autonomous robot skill acquisition and lifelong learning for autonomous systems with a human in the loop.

What made you want to study AI?

Throughout my school years, I was consistently drawn to engineering (which, for many students in India, is one of the two most prominent career choices), with a particular fascination for space science and technology. This interest manifested early on, as evidenced by my childhood diary filled with curated newspaper clippings on these subjects. The pivotal moment in my career path came during my master’s at IIT Guwahati, when I enrolled in a course on “Speech Processing.” As part of this course, I had the opportunity to develop a speech recognition system based on Rabiner’s seminal 1989 tutorial. This hands-on experience not only piqued my interest in artificial intelligence but also set me on a trajectory to explore the field more deeply. Consequently, I sought out and completed numerous AI-focused courses during my master’s. As I delved further into the subject, I found myself particularly intrigued by classical symbolic AI, which ultimately became the focus of my doctoral research. This progression from a general interest in engineering to a specialized focus on AI safety and planning was gradual, and I am quite happy with where I ended up.

What advice would you give to someone thinking of doing a PhD in the field?

One should do a PhD out of a genuine interest in research. A PhD is a long journey, and it has its share of ups and downs. Ensure you’re truly passionate about your research area; this passion will sustain you through challenging times. Also, choose your advisor wisely. A student’s relationship with their advisor is critical. I was very fortunate in this respect, but I have witnessed a few cases where things just did not work out. So look for someone whose research interests align with yours and who has a good mentorship track record.

Could you tell us an interesting (non-AI related) fact about you?

I love playing badminton and table tennis. In high school, I represented my school in both sports. I also read a lot of (non-AI) books, mostly fiction. My favorite books are the Foundation series, The Godfather, The Kite Runner, and Godan (Hindi).

About Pulkit

Pulkit Verma is a Postdoctoral Associate in the Interactive Robotics Group at the Massachusetts Institute of Technology, where he works with Professor Julie Shah. His research focuses on the safe and reliable behavior of taskable AI agents. He investigates the minimal set of requirements in an AI system that would enable a user to assess and understand the limits of its safe operability. He received his Ph.D. in Computer Science from the School of Computing and Augmented Intelligence, Arizona State University, where he worked with Professor Siddharth Srivastava. Before that, he completed his M.Tech. in Computer Science and Engineering at the Indian Institute of Technology Guwahati. He was awarded the Graduate College Completion Fellowship at ASU in 2023 and received the Best Demo Award at the International Conference on Autonomous Agents and Multiagent Systems (AAMAS) in 2022.

tags: AAAI, AAAI Doctoral Consortium, AAAI2024, ACM SIGAI