AIhub.org

Springing into conferences (at the AAAI spring symposium)

In March of this year I was lucky to travel to my first academic conference (thanks very much to EPSRC Rise and AAAI). Feeling a little bit nervous, extremely jet-lagged, and completely in awe of Stanford’s gorgeous campus, I attended the symposium on “Interpretable AI for Well-Being: Understanding Cognitive Bias and Social Embeddedness”. This was only one of nine symposia run during the 3-day event. My PhD research aims to create an educational framework to teach people about AI (particularly focused on those who tend to be missed by other initiatives such as mainstream education and workplace retraining). I chose this symposium based on a report by KPMG that people in the UK are most willing to share their personal data with the public health service, including for AI use to improve care. I was hoping to come out with a list of studies and ideas that could be used in my research on AI education. Although the symposium was not what I had in mind (my thoughts were more mainstream – chatbots for depression and fitness trackers), the talks throughout the two and a half days were interesting and inspiring, and provided lots of food for thought.

I was extremely thankful for the introduction by the symposium organiser, Takashi Kido. He provided useful definitions and rich examples which were paramount to my understanding of the key concepts. Some useful terms that were used throughout the symposium:

Interpretable AI is AI whose actions can be easily understood by humans, rather than being the stereotypical ‘black-box’ neural network.

Well-being AI is AI that aims to promote psychological well-being (happiness) and maximise human potential.

Social embeddedness is a term taken from economic theory to describe how closely related the economy and social issues are. In AI, it refers to how much of a role AI will play in future economics.

Cognitive bias is a systematic error in thinking that affects the decisions and judgements that people make.

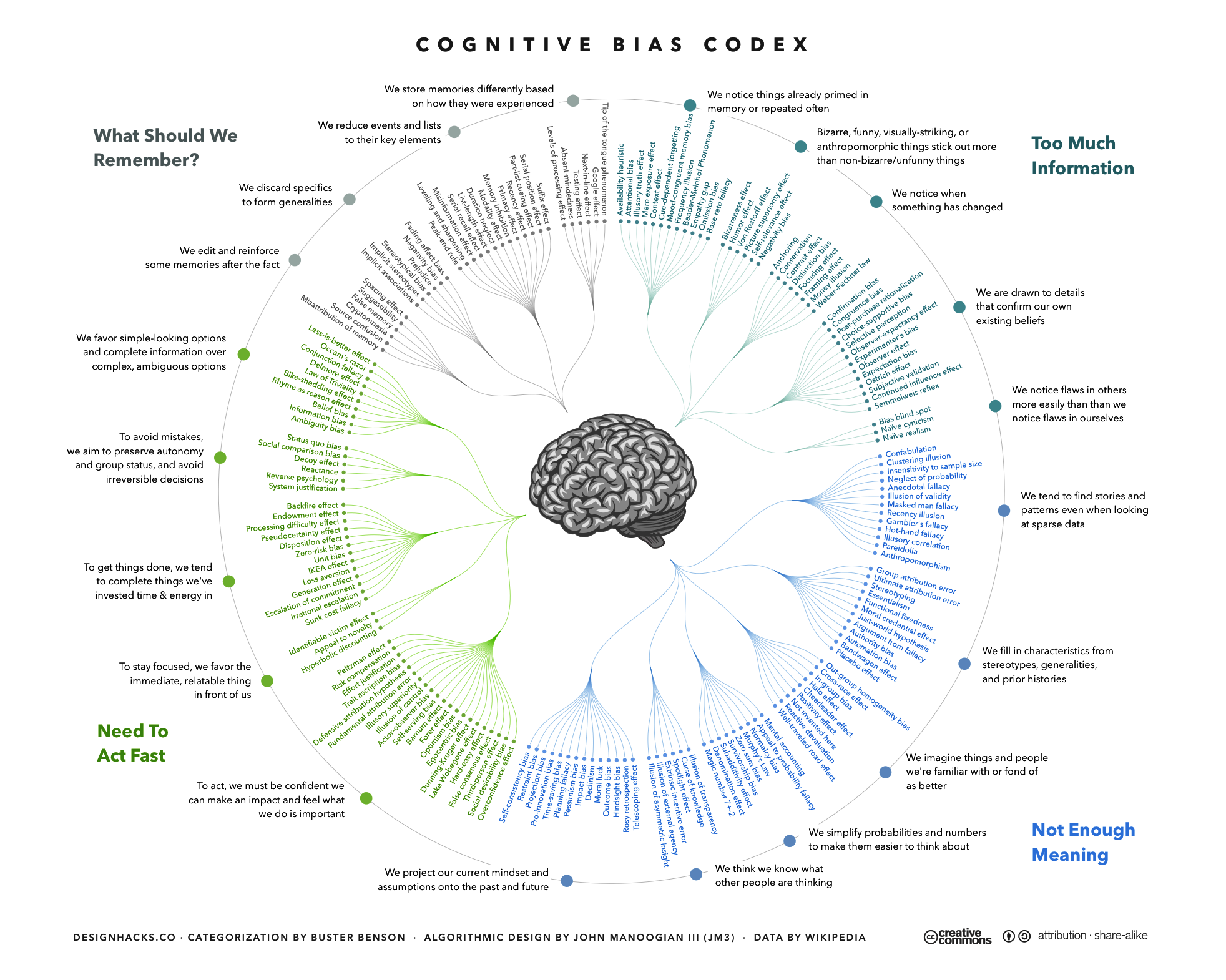

Cognitive bias is a very hot topic in the AI world at the minute, and I thought the Cognitive Bias Codex (pictured) was extremely insightful into just how many types of cognitive bias exist. One newer type of cognitive bias caught my attention: Google Effects, which is the belief (held by myself) that making good questions with key words is better than remembering.

Source: Wikipedia

A few of the conference highlights for me were:

A wonderful talk on whether AI can be used in healthcare, with the example of AI predicting sleep apnea syndrome. For AI to be trusted in healthcare it needs to overcome four problems: hard interpretation, inappropriate data, cognitive bias, and generality of outcomes. The talk discussed how all these can be addressed. My favourite points were how social embeddedness can overcome generality by using sensors to provide individualised data, and how using decision trees rather than neural networks can make AI more interpretable.

A lively discussion on the concept of Universal Basic Property, where every human would be given a property or some land on which to live. This concept would be dependent on strict population controls, which spurred debate about humans needing to move to Mars! The talk on this covered some thought-provoking points on ‘robot taxes’ and Universal Basic Income.

Meeting someone I follow on Twitter in real life. A Developer Advocate for Kaggle was at the conference speaking at another symposium. After a quick online exchange, we met for a coffee, which was lovely (and actually turned out to be useful for my PhD research!).

After this I was actually keen to present at a conference – as a delightful introvert, I find that small talk makes me anxious, so having something to talk about would make the networking part of conferences better for me. I have since submitted and will be presenting at the Doctoral Consortium at the 20th International Conference on Artificial Intelligence in Education in just a couple of weeks.

tags: AAAI