AIhub.org

Using machine learning to track greenhouse gas emissions

By Michelle Willebrands

PhD candidate Julia Wąsala searches for greenhouse gas emissions using satellite data. As a computer scientist, she bridges the gap between computer science and space research. “We really can’t do this research without collaboration.”

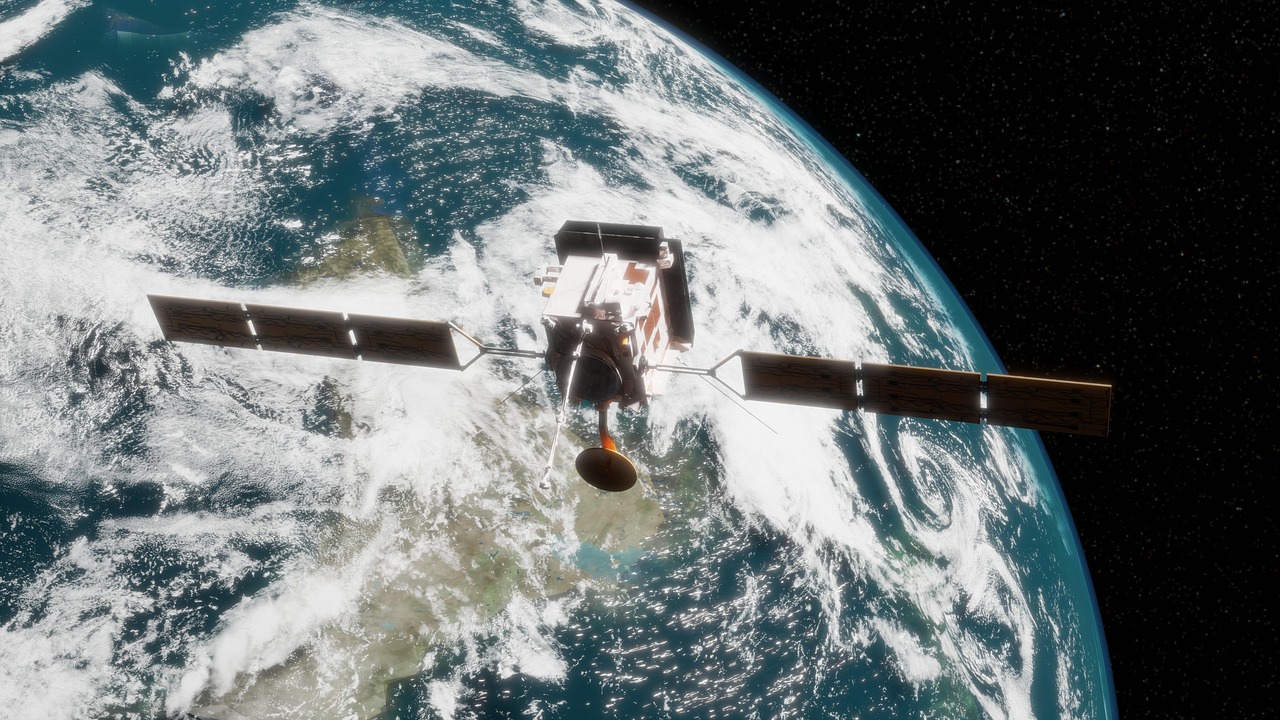

Wąsala collaborates with atmospheric scientists from SRON (Space Research Organisation Netherlands) on machine learning models that detect large greenhouse gas emissions from space. Satellites collect far too much data to review manually, and such models offer a solution.

How much greenhouse gas do humans emit?

The machine learning method Wąsala refers to detects emissions from point sources, which show up in satellite data as plumes. “That project gives a better picture of how much methane humans emit,” she says. “This allows us to contribute to detecting leaks in gas pipes or emissions from landfills, for example, and then solve them.”

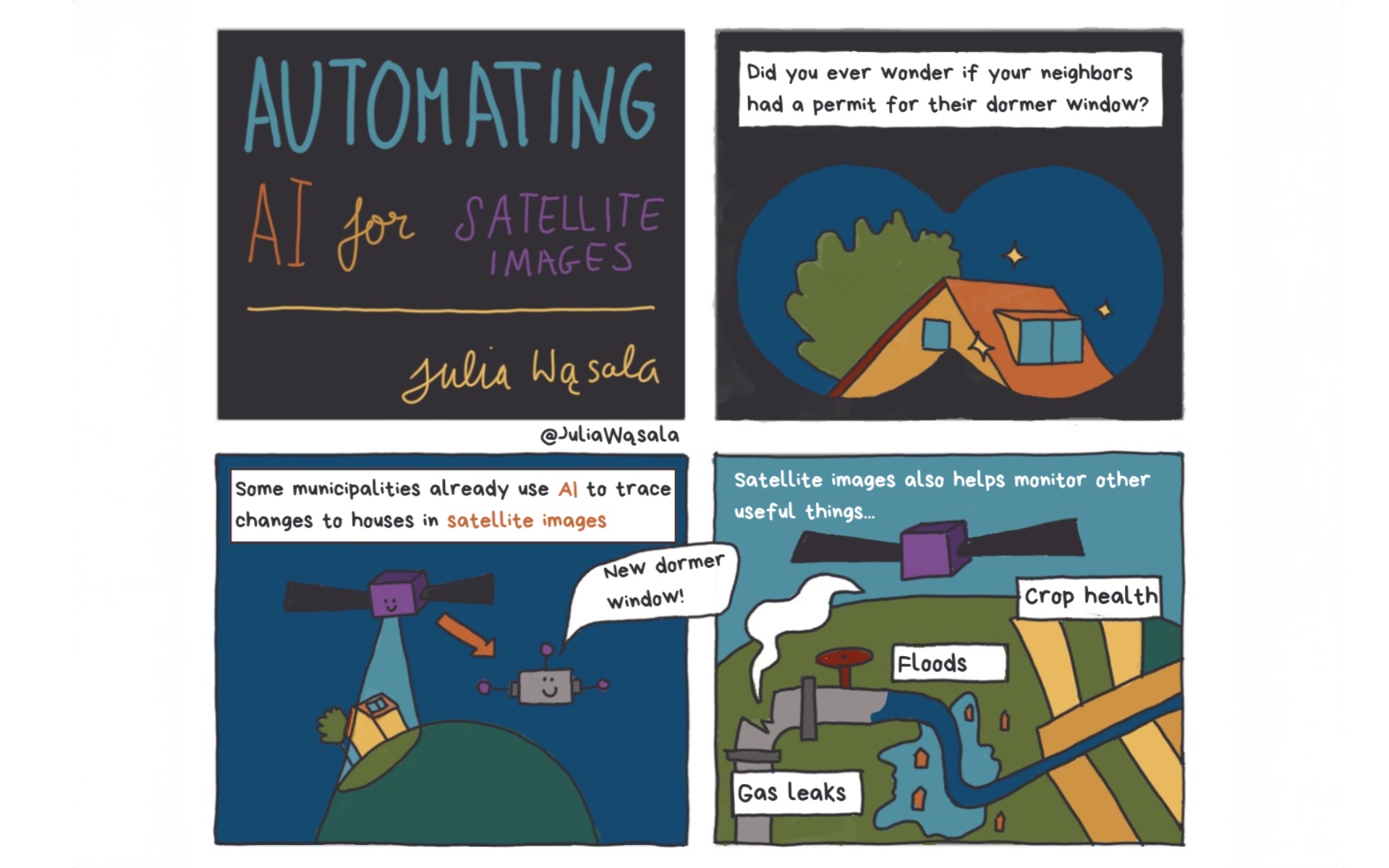

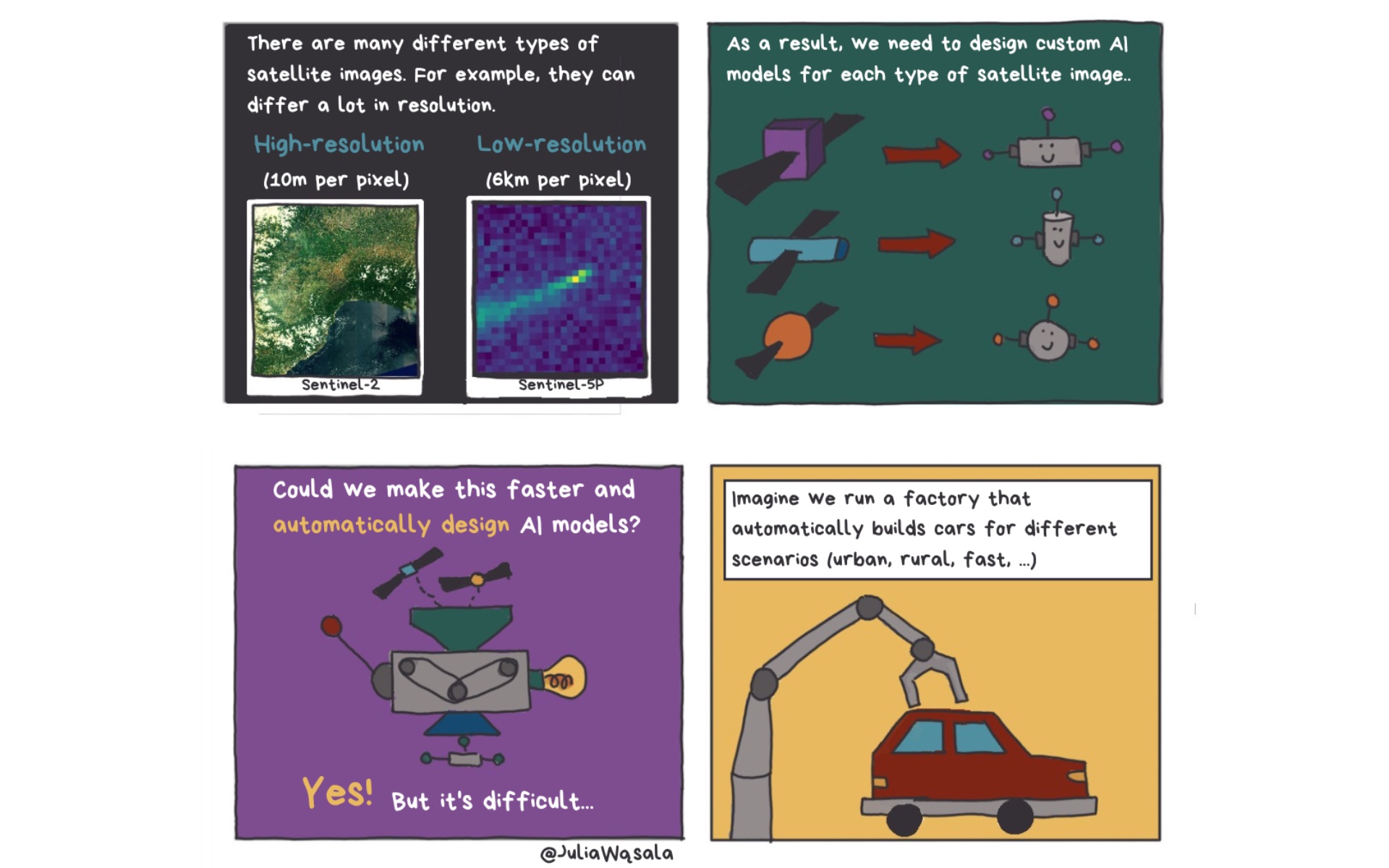

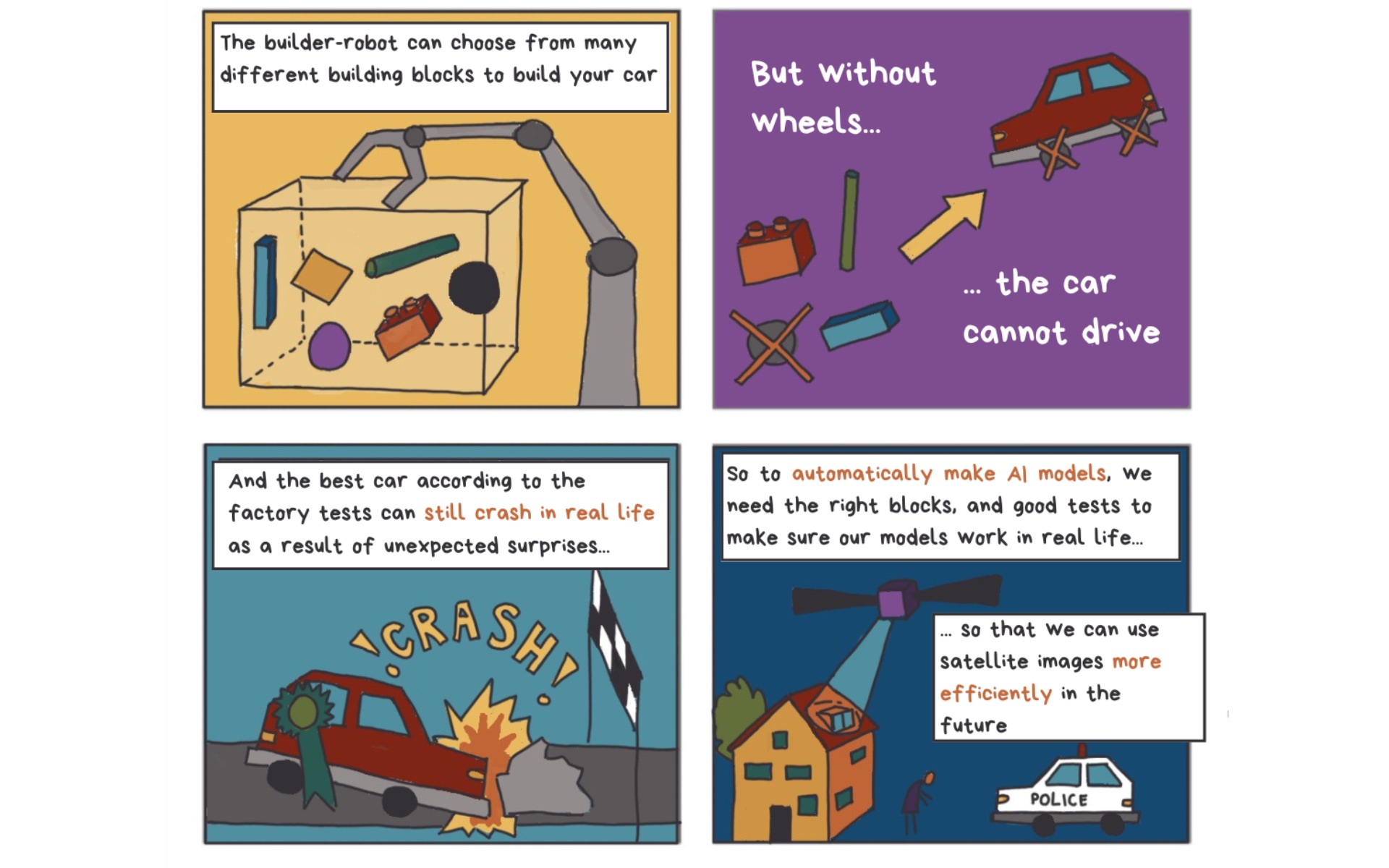

The PhD candidate designed a method that can also detect plumes of other gases. “My most important contribution is that this is done completely automatically. This method automatically adjusts the model for different gases, such as methane, carbon monoxide and, in the future, nitrogen dioxide.”

It’s not just the model that has to be good, but also the training data

It comes as no surprise that the model must be well constructed, but the training data is just as important, explains Wąsala. The vast majority of satellite images do not contain gas plumes, but the model must still have enough examples to learn from. This means that someone has to manually label hundreds of training images. “That’s a huge job. We’re very happy that someone from SRON was able to do it,” she adds.

Wąsala talks to kids about science during teach-out

Clouds throw a spanner in the works

However, the biggest challenge lies in unexpected biases in the data. “Many people know that AI models can be biased, but we usually associate such bias with people: skin colour, gender or socio-economic status,” says Wąsala. “We also see bias in satellite data – it just looks different.”

Many satellite images have missing pixels, for example due to clouds obscuring the Earth’s surface. Often, these missing pixels do not occur randomly but follow a pattern. For example, there are more clouds above the equator. “The result was that my model learned to predict that a plume was present when there were few missing pixels in an image. But that’s not related at all.”
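The article does not say how this bias was discovered. One generic check, offered here only as an illustrative assumption and not as the method used in this research, is to ask whether the fraction of missing pixels alone already "predicts" the plume label. The sketch below treats missing pixels as NaN values in a 2D grid and computes a ROC AUC for the rule "fewer missing pixels means plume". The function names `missing_fraction` and `shortcut_auc` are hypothetical.

```python
import math
from typing import Sequence

# Hypothetical representation: an image is a 2D grid of floats,
# with missing pixels (e.g. hidden by clouds) stored as NaN.
Image = Sequence[Sequence[float]]


def missing_fraction(image: Image) -> float:
    """Return the fraction of pixels in the image that are NaN (missing)."""
    total = 0
    missing = 0
    for row in image:
        for value in row:
            total += 1
            if math.isnan(value):
                missing += 1
    return missing / total if total else 0.0


def shortcut_auc(images: Sequence[Image], labels: Sequence[int]) -> float:
    """ROC AUC of 'fewer missing pixels' as a predictor of a plume (label 1).

    Equals the probability that a random plume image has a lower missing
    fraction than a random non-plume image (label 0), with ties counted as
    half. About 0.5: missing pixels carry no label information.
    Near 1.0: a model could 'find' plumes just by counting missing pixels.
    """
    pos = [missing_fraction(img) for img, y in zip(images, labels) if y == 1]
    neg = [missing_fraction(img) for img, y in zip(images, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one image of each class")
    score = 0.0
    for p in pos:
        for n in neg:
            if p < n:
                score += 1.0
            elif p == n:
                score += 0.5
    return score / (len(pos) * len(neg))


if __name__ == "__main__":
    nan = math.nan
    clear = [[1.0, 2.0], [3.0, 4.0]]   # 0% missing
    half = [[nan, 2.0], [nan, 4.0]]    # 50% missing
    cloudy = [[nan, nan], [nan, 4.0]]  # 75% missing
    # Toy data: every plume image is clear, every non-plume image is cloudy.
    print(shortcut_auc([clear, clear, cloudy, half], [1, 1, 0, 0]))  # prints 1.0
```

In a real dataset, a value far from 0.5 would be a warning sign that a model can score well without looking at the gas signal at all, which is the kind of spurious relationship Wąsala describes.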

Collaboration between computer science and earth sciences

As a computer scientist, Wąsala works “under the bonnet”, as she puts it. She writes the code but needs the earth scientists to interpret the data, and thus the results of her model. Fortunately, she crosses the boundaries of her field with ease. “I enjoy working together. It’s also necessary: I don’t have the expertise to analyse the satellite images myself,” says Wąsala. “But sometimes it can be a challenge. We all speak our own language.”

“I want to show how much fun this research is”

The PhD candidate does a lot to make AI more accessible. She writes a blog that introduces earth scientists to what machine learning can do. She appeared at the Weekend of Science event and is a member of the “Ask it Leiden” panel, where she answers children’s questions about AI. “I want to show how much fun this research is.”

Wąsala made a comic about her research