AIhub.org

Hot papers on arXiv from the past month: September 2021

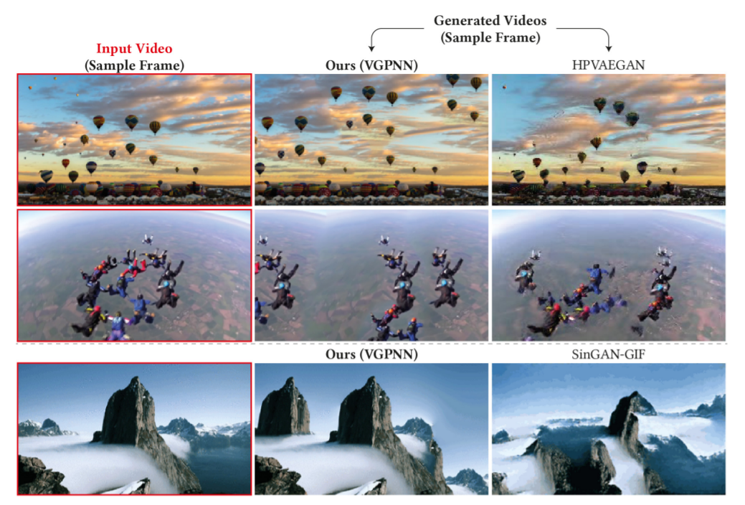

Comparing the visual quality of generated frames. From Diverse Generation from a Single Video Made Possible. Reproduced under a CC BY 4.0 license.

What’s hot on arXiv? Here are the most tweeted papers that were uploaded onto arXiv during September 2021.

Results are powered by Arxiv Sanity Preserver.

Eyes Tell All: Irregular Pupil Shapes Reveal GAN-generated Faces

Hui Guo, Shu Hu, Xin Wang, Ming-Ching Chang, Siwei Lyu

Submitted to arXiv on: 1 September 2021

Abstract: Generative adversarial network (GAN) generated highly realistic human faces have been used as profile images for fake social media accounts and are visually challenging to discern from real ones. In this work, we show that GAN-generated faces can be exposed via irregular pupil shapes. This phenomenon is caused by the lack of physiological constraints in the GAN models. We demonstrate that such artifacts exist widely in high-quality GAN-generated faces and further describe an automatic method to extract the pupils from two eyes and analyze their shapes for exposing the GAN-generated faces. Qualitative and quantitative evaluations of our method suggest its simplicity and effectiveness in distinguishing GAN-generated faces.

597 tweets

Datasets: A Community Library for Natural Language Processing

Quentin Lhoest, Albert Villanova del Moral, Yacine Jernite, Abhishek Thakur, Patrick von Platen, Suraj Patil, Julien Chaumond, Mariama Drame, Julien Plu, Lewis Tunstall, Joe Davison, Mario Šaško, Gunjan Chhablani, Bhavitvya Malik, Simon Brandeis, Teven Le Scao, Victor Sanh, Canwen Xu, Nicolas Patry, Angelina McMillan-Major, Philipp Schmid, Sylvain Gugger, Clément Delangue, Théo Matussière, Lysandre Debut, Stas Bekman, Pierric Cistac, Thibault Goehringer, Victor Mustar, François Lagunas, Alexander M. Rush, Thomas Wolf

Submitted to arXiv on: 7 September 2021

Abstract: The scale, variety, and quantity of publicly-available NLP datasets has grown rapidly as researchers propose new tasks, larger models, and novel benchmarks. Datasets is a community library for contemporary NLP designed to support this ecosystem. Datasets aims to standardize end-user interfaces, versioning, and documentation, while providing a lightweight front-end that behaves similarly for small datasets as for internet-scale corpora. The design of the library incorporates a distributed, community-driven approach to adding datasets and documenting usage. After a year of development, the library now includes more than 650 unique datasets, has more than 250 contributors, and has helped support a variety of novel cross-dataset research projects and shared tasks. The library is available at this https URL.

175 tweets

Exact Learning of Qualitative Constraint Networks from Membership Queries

Malek Mouhoub, Hamad Al Marri, Eisa Alanazi

Submitted to arXiv on: 23 September 2021

Abstract: A Qualitative Constraint Network (QCN) is a constraint graph for representing problems under qualitative temporal and spatial relations, among others. More formally, a QCN includes a set of entities, and a list of qualitative constraints defining the possible scenarios between these entities. These latter constraints are expressed as disjunctions of binary relations capturing the (incomplete) knowledge between the involved entities. QCNs are very effective in representing a wide variety of real-world applications, including scheduling and planning, configuration and Geographic Information Systems (GIS). It is however challenging to elicit, from the user, the QCN representing a given problem. To overcome this difficulty in practice, we propose a new algorithm for learning, through membership queries, a QCN from a non expert. In this paper, membership queries are asked in order to elicit temporal or spatial relationships between pairs of temporal or spatial entities. In order to improve the time performance of our learning algorithm in practice, constraint propagation, through transitive closure, as well as ordering heuristics, are enforced. The goal here is to reduce the number of membership queries needed to reach the target QCN. In order to assess the practical effect of constraint propagation and ordering heuristics, we conducted several experiments on randomly generated temporal and spatial constraint network instances. The results of the experiments are very encouraging and promising.

163 tweets

Primer: Searching for Efficient Transformers for Language Modeling

David R. So, Wojciech Mańke, Hanxiao Liu, Zihang Dai, Noam Shazeer, Quoc V. Le

Submitted to arXiv on: 17 September 2021

Abstract: Large Transformer models have been central to recent advances in natural language processing. The training and inference costs of these models, however, have grown rapidly and become prohibitively expensive. Here we aim to reduce the costs of Transformers by searching for a more efficient variant. Compared to previous approaches, our search is performed at a lower level, over the primitives that define a Transformer TensorFlow program. We identify an architecture, named Primer, that has a smaller training cost than the original Transformer and other variants for auto-regressive language modeling. Primer’s improvements can be mostly attributed to two simple modifications: squaring ReLU activations and adding a depthwise convolution layer after each Q, K, and V projection in self-attention. Experiments show Primer’s gains over Transformer increase as compute scale grows and follow a power law with respect to quality at optimal model sizes. We also verify empirically that Primer can be dropped into different codebases to significantly speed up training without additional tuning. For example, at a 500M parameter size, Primer improves the original T5 architecture on C4 auto-regressive language modeling, reducing the training cost by 4X. Furthermore, the reduced training cost means Primer needs much less compute to reach a target one-shot performance. For instance, in a 1.9B parameter configuration similar to GPT-3 XL, Primer uses 1/3 of the training compute to achieve the same one-shot performance as Transformer. We open source our models and several comparisons in T5 to help with reproducibility.

133 tweets
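
To make the two modifications named in the Primer abstract concrete, here is a minimal pure-Python sketch of a squared ReLU and a depthwise convolution along the sequence axis. It is an illustration only, not the authors' TensorFlow code: the function names, the causal (past-only) padding, and the kernel layout are assumptions made for this example.

```python
def squared_relu(x):
    """Squared ReLU: max(v, 0) ** 2 applied to each element of a list."""
    return [max(v, 0.0) ** 2 for v in x]


def causal_depthwise_conv1d(seq, kernels):
    """Depthwise 1-D convolution over the sequence axis (illustrative).

    seq:     list of positions, each a list of per-channel values
    kernels: one list of weights per channel; kernels[c][0] weights the
             current position, kernels[c][1] the previous one, and so on.
    Assumption: positions before the start of the sequence count as zero,
    so each output depends only on the current and earlier positions.
    """
    out = []
    for t in range(len(seq)):
        row = []
        for c, kernel in enumerate(kernels):
            acc = 0.0
            for k, w in enumerate(kernel):
                if t - k >= 0:  # skip taps that fall before the sequence start
                    acc += w * seq[t - k][c]
            row.append(acc)
        out.append(row)
    return out


# Each channel is filtered independently with its own kernel.
projected = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]  # 3 positions, 2 channels
mixed = causal_depthwise_conv1d(projected, [[1.0, 1.0], [0.5, 0.0]])
activated = squared_relu([-1.0, 0.0, 2.0])
```

In the abstract's description, the convolution would be applied to the outputs of the Q, K, and V projections, and the squared ReLU would replace the usual activation; how these pieces are wired into a full Transformer block is not shown here.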

Finetuned Language Models Are Zero-Shot Learners

Jason Wei, Maarten Bosma, Vincent Y. Zhao, Kelvin Guu, Adams Wei Yu, Brian Lester, Nan Du, Andrew M. Dai, Quoc V. Le

Submitted to arXiv on: 3 September 2021

Abstract: This paper explores a simple method for improving the zero-shot learning abilities of language models. We show that instruction tuning — finetuning language models on a collection of tasks described via instructions — substantially boosts zero-shot performance on unseen tasks. We take a 137B parameter pretrained language model and instruction-tune it on over 60 NLP tasks verbalized via natural language instruction templates. We evaluate this instruction-tuned model, which we call FLAN, on unseen task types. FLAN substantially improves the performance of its unmodified counterpart and surpasses zero-shot 175B GPT-3 on 19 of 25 tasks that we evaluate. FLAN even outperforms few-shot GPT-3 by a large margin on ANLI, RTE, BoolQ, AI2-ARC, OpenbookQA, and StoryCloze. Ablation studies reveal that number of tasks and model scale are key components to the success of instruction tuning.

94 tweets
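
The idea of verbalizing a task through natural language instruction templates, as described in the FLAN abstract, can be sketched in a few lines of Python. The templates and the example below are hypothetical and written for this illustration; they are not taken from the paper.

```python
# Hypothetical instruction templates for a natural language inference task.
TEMPLATES = [
    "Premise: {premise}\nHypothesis: {hypothesis}\n"
    "Does the premise entail the hypothesis? Answer yes or no.",
    "Read the text and decide whether the hypothesis follows from it.\n"
    "Text: {premise}\nHypothesis: {hypothesis}\nAnswer:",
]


def verbalize(example, templates=TEMPLATES):
    """Turn one structured example into one instruction prompt per template."""
    return [template.format(**example) for template in templates]


example = {
    "premise": "The cat is sleeping on the sofa.",
    "hypothesis": "An animal is resting.",
}
prompts = verbalize(example)
```

Each prompt, paired with its target answer, would then be one finetuning example; mixing many tasks and several phrasings per task is what the abstract refers to as instruction tuning.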

Torch.manual_seed(3407) is all you need: On the influence of random seeds in deep learning architectures for computer vision

David Picard

Submitted to arXiv on: 16 September 2021

Abstract: In this paper I investigate the effect of random seed selection on the accuracy when using popular deep learning architectures for computer vision. I scan a large amount of seeds (up to 10^4) on CIFAR 10 and I also scan fewer seeds on Imagenet using pre-trained models to investigate large scale datasets. The conclusions are that even if the variance is not very large, it is surprisingly easy to find an outlier that performs much better or much worse than the average.

106 tweets
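
The kind of seed scan the abstract describes can be sketched as follows. This is a toy illustration, not the paper's experiment: `toy_accuracy` is a hypothetical stand-in for "train a model with this seed and return its test accuracy", and its numbers are invented noise around an arbitrary baseline.

```python
import random
import statistics


def toy_accuracy(seed):
    """Hypothetical stand-in for a full training run with the given seed."""
    rng = random.Random(seed)  # seed-local generator, so runs are repeatable
    return 0.90 + rng.gauss(0.0, 0.005)


def scan_seeds(seeds, run=toy_accuracy):
    """Run once per seed and summarize how much the score varies."""
    scores = {seed: run(seed) for seed in seeds}
    values = list(scores.values())
    return {
        "mean": statistics.mean(values),
        "stdev": statistics.stdev(values),
        "best_seed": max(scores, key=scores.get),
        "worst_seed": min(scores, key=scores.get),
        "spread": max(values) - min(values),
    }


summary = scan_seeds(range(100))
```

Comparing `spread` with `stdev` shows the effect the abstract points to: even when the standard deviation is small, the gap between the best and worst seed in a large scan can be noticeably larger, which is why reporting a single hand-picked seed can be misleading.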

Diverse Generation from a Single Video Made Possible

Niv Haim, Ben Feinstein, Niv Granot, Assaf Shocher, Shai Bagon, Tali Dekel, Michal Irani

Submitted to arXiv on: 17 September 2021

Abstract: Most advanced video generation and manipulation methods train on a large collection of videos. As such, they are restricted to the types of video dynamics they train on. To overcome this limitation, GANs trained on a single video were recently proposed. While these provide more flexibility to a wide variety of video dynamics, they require days to train on a single tiny input video, rendering them impractical. In this paper we present a fast and practical method for video generation and manipulation from a single natural video, which generates diverse high-quality video outputs within seconds (for benchmark videos). Our method can be further applied to Full-HD video clips within minutes. Our approach is inspired by a recent advanced patch-nearest-neighbor based approach [Granot et al. 2021], which was shown to significantly outperform single-image GANs, both in run-time and in visual quality. Here we generalize this approach from images to videos, by casting classical space-time patch-based methods as a new generative video model. We adapt the generative image patch nearest neighbor approach to efficiently cope with the huge number of space-time patches in a single video. Our method generates more realistic and higher quality results than single-video GANs (confirmed by quantitative and qualitative evaluations). Moreover, it is disproportionally faster (runtime reduced from several days to seconds). Other than diverse video generation, we demonstrate several other challenging video applications, including spatio-temporal video retargeting, video structural analogies and conditional video-inpainting.

76 tweets

Knowledge is reward: Learning optimal exploration by predictive reward cashing

Luca Ambrogioni

Submitted to arXiv on: 17 September 2021

Abstract: There is a strong link between the general concept of intelligence and the ability to collect and use information. The theory of Bayes-adaptive exploration offers an attractive optimality framework for training machines to perform complex information gathering tasks. However, the computational complexity of the resulting optimal control problem has limited the diffusion of the theory to mainstream deep AI research. In this paper we exploit the inherent mathematical structure of Bayes-adaptive problems in order to dramatically simplify the problem by making the reward structure denser while simultaneously decoupling the learning of exploitation and exploration policies. The key to this simplification comes from the novel concept of cross-value (i.e. the value of being in an environment while acting optimally according to another), which we use to quantify the value of currently available information. This results in a new denser reward structure that “cashes in” all future rewards that can be predicted from the current information state. In a set of experiments we show that the approach makes it possible to learn challenging information gathering tasks without the use of shaping and heuristic bonuses in situations where the standard RL algorithms fail.

62 tweets

Relating Graph Neural Networks to Structural Causal Models

Matej Zečević, Devendra Singh Dhami, Petar Veličković, Kristian Kersting

Submitted to arXiv on: 9 September 2021

Abstract: Causality can be described in terms of a structural causal model (SCM) that carries information on the variables of interest and their mechanistic relations. For most processes of interest the underlying SCM will only be partially observable, thus causal inference tries to leverage any exposed information. Graph neural networks (GNN) as universal approximators on structured input pose a viable candidate for causal learning, suggesting a tighter integration with SCM. To this effect we present a theoretical analysis from first principles that establishes a novel connection between GNN and SCM while providing an extended view on general neural-causal models. We then establish a new model class for GNN-based causal inference that is necessary and sufficient for causal effect identification. Our empirical illustration on simulations and standard benchmarks validates our theoretical proofs.

56 tweets

ConvMLP: Hierarchical Convolutional MLPs for Vision

Jiachen Li, Ali Hassani, Steven Walton, Humphrey Shi

Submitted to arXiv on: 9 September 2021

Abstract: MLP-based architectures, which consist of a sequence of consecutive multi-layer perceptron blocks, have recently been found to reach comparable results to convolutional and transformer-based methods. However, most adopt spatial MLPs which take fixed dimension inputs, therefore making it difficult to apply them to downstream tasks, such as object detection and semantic segmentation. Moreover, single-stage designs further limit performance in other computer vision tasks and fully connected layers bear heavy computation. To tackle these problems, we propose ConvMLP: a hierarchical Convolutional MLP for visual recognition, which is a light-weight, stage-wise, co-design of convolution layers, and MLPs. In particular, ConvMLP-S achieves 76.8% top-1 accuracy on ImageNet-1k with 9M parameters and 2.4G MACs (15% and 19% of MLP-Mixer-B/16, respectively). Experiments on object detection and semantic segmentation further show that visual representation learned by ConvMLP can be seamlessly transferred and achieve competitive results with fewer parameters. Our code and pre-trained models are publicly available at this https URL.

56 tweets
