AIhub.org

Decoding brain activity into speech

A recent paper in Nature reports on a new technology created by UC San Francisco neuroscientists that translates neural activity into speech. Although the technology was trialled on participants with intact speech, the hope is that it could be transformative in the future for people who are unable to communicate as a result of neurological impairments.

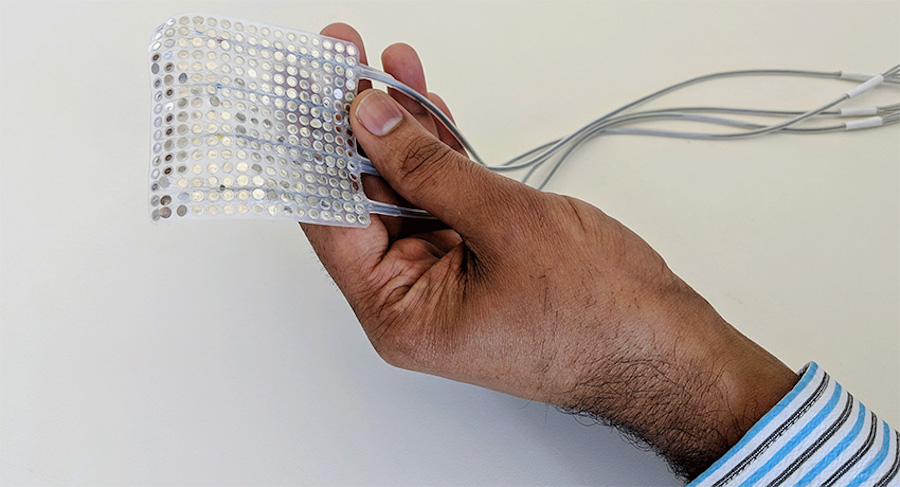

The researchers asked five volunteers being treated at the UCSF Epilepsy Center, with electrodes temporarily implanted in their brains, to read several hundred sentences aloud while their brain activity was recorded.

Using the audio recordings of participants’ voices, the researchers applied linguistic principles to reverse-engineer the vocal tract movements needed to produce those sounds: pressing the lips together, tightening the vocal cords, shifting the tip of the tongue to the roof of the mouth, then relaxing it, and so on.

This detailed mapping of sound to anatomy allowed the scientists to create a realistic virtual vocal tract for each participant that could be controlled by their brain activity. The system comprised two neural networks: a decoder that transforms the brain activity patterns produced during speech into movements of the virtual vocal tract, and a synthesizer that converts these movements into a synthetic approximation of the participant’s voice.
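To make the two-stage structure concrete, here is a minimal sketch of how the decoder and synthesizer compose: each frame of neural activity is first mapped to vocal tract movements, and those movements are then mapped to acoustic features. It is an illustration under stated assumptions, not the researchers' implementation. Both stages are plain affine maps standing in for trained neural networks, and the names `TwoStageDecoder`, `decode` and `synthesize`, as well as all dimensions and weights, are hypothetical.

```python
from typing import List, Sequence

Vector = List[float]
Matrix = List[List[float]]


def linear(weights: Matrix, bias: Sequence[float], x: Sequence[float]) -> Vector:
    """Affine map y = W x + b, used here as a stand-in for a trained network."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]


class TwoStageDecoder:
    """Hypothetical pipeline: brain activity -> vocal tract movements -> acoustics.

    Assumption: both stages are simple affine maps for illustration only;
    a real system would use trained neural networks for each stage.
    """

    def __init__(self, decoder_w: Matrix, decoder_b: Vector,
                 synth_w: Matrix, synth_b: Vector) -> None:
        self.decoder_w, self.decoder_b = decoder_w, decoder_b
        self.synth_w, self.synth_b = synth_w, synth_b

    def decode(self, neural_frame: Sequence[float]) -> Vector:
        # Stage 1 ("decoder"): neural features -> virtual vocal tract movements
        return linear(self.decoder_w, self.decoder_b, neural_frame)

    def synthesize(self, articulatory_frame: Sequence[float]) -> Vector:
        # Stage 2 ("synthesizer"): vocal tract movements -> acoustic features
        return linear(self.synth_w, self.synth_b, articulatory_frame)

    def __call__(self, neural_frames: Sequence[Sequence[float]]) -> List[Vector]:
        # Run both stages frame by frame over a recording
        return [self.synthesize(self.decode(frame)) for frame in neural_frames]


# Toy example: 3 hypothetical electrode channels -> 2 movement features -> 1 acoustic feature
model = TwoStageDecoder(
    decoder_w=[[1.0, 0.0, 0.0], [0.0, 1.0, 1.0]], decoder_b=[0.0, 0.0],
    synth_w=[[2.0, -1.0]], synth_b=[0.5],
)
frames = [[1.0, 2.0, 3.0], [0.0, 0.0, 0.0]]
print(model(frames))  # [[-2.5], [0.5]]
```

Splitting the mapping at the vocal tract, rather than going straight from brain activity to sound, means the intermediate output is an interpretable set of movements that can be inspected on its own, for example with `model.decode(frames[0])`.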

A video of the resulting brain-to-speech synthesis can be found below.

This news highlight is based on a UC San Francisco press release.