AIhub.org

An AI computer lab using medical images from SCAPIS

By Karin Söderlund Leifler

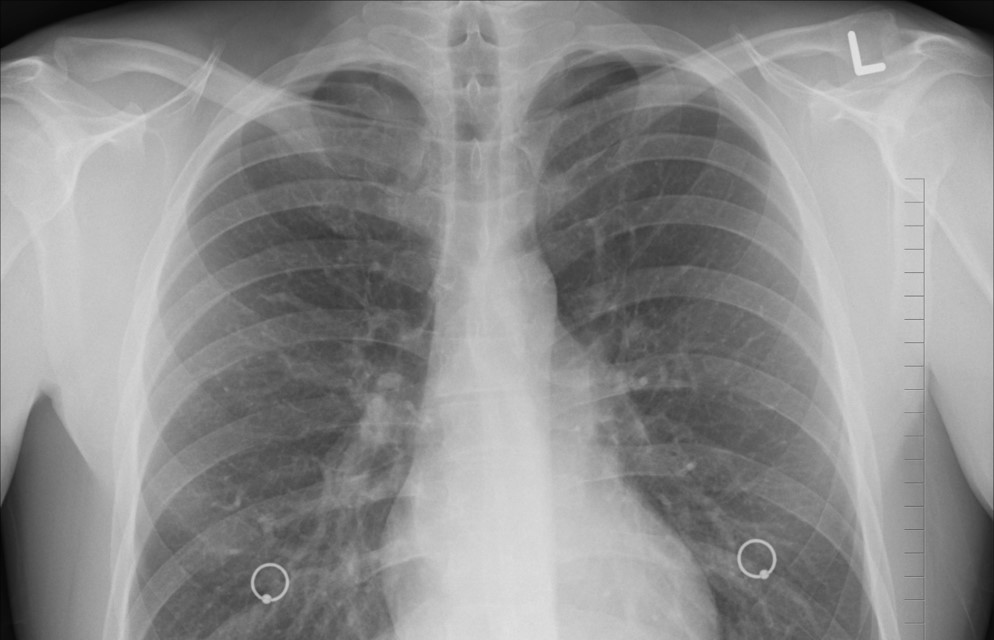

Scientists plan to use the same technology as that used to create deepfake videos to build AI-generated medical images. The synthesised images will present data from the SCAPIS study into heart and lung disease, and will be used for research into AI solutions in healthcare.

Presidents making statements they never actually made, and the faces of famous actors on the bodies of other people – these are two of many examples of deepfake videos. They are created using AI, which can also create voices and human faces that never actually existed, or even pieces of art that appear to have been painted by Picasso or Rembrandt. The technology behind the process is known as generative adversarial networks (GANs), and can also be used to benefit research.

“The method most often used for synthesis, GAN, is one of the hottest topics within AI research at the moment. The images that are created are realistic, which means that the method can also generate realistic medical images of things that have never existed”, says Claes Lundström, adjunct professor at Linköping University in the Department of Science and Technology (ITN), and working at the Center for Medical Image Science and Visualization (CMIV).

Claes Lundström is head of the Analytic Imaging Diagnostic Arena (AIDA), a national initiative hosted by CMIV. AIDA is one of the partners in a project to establish a SCAPIS-based computer lab. The project is being coordinated by the University of Gothenburg. SCAPIS is a huge Swedish study into heart and lung disease, in which 30,000 middle-aged people have undergone thorough examinations of their heart, blood vessels and lungs. The scientists will use the computer lab to create synthesised images based on images from the SCAPIS examinations. It will not be possible to link these images to an individual, so they can be regarded as anonymous, while at the same time containing authentic medical data. The intention is to make the synthesised images available to more researchers, for use in developing AI solutions.

“SCAPIS is an amazing project. Huge amounts of high-quality data have been collected and the team running SCAPIS is eager to ensure that the material collected can be used by researchers across a broad range of projects. Successful AI research requires large amounts of high-quality training data, and we are trying to lower the thresholds to make it easier to share material in an ethical and legal manner”, says Claes Lundström.

This is where AIDA comes into the picture. AIDA already contains a data hub at which researchers, caregivers and companies together collect large anonymous datasets that contain training data for AI in medical image diagnosis. Guidelines have been drawn up for how this type of data is to be handled, and AIDA will now contribute expertise in the design of the technical platform of the SCAPIS-based computer lab.

Competing AI networks

AIDA is also responsible for developing methods for the synthesis of anonymous data. Researchers in Jonas Unger’s group at ITN have long worked on the synthesis of data, such as traffic situations used in the AI training of autonomous vehicles. They will now get to grips with medical images from SCAPIS, with Gabriel Eilertsen taking the lead. The images may come from, for example, three-dimensional computed tomography (CT) examinations in which the heart and blood vessels were imaged.

The synthesised images created by AI are formed in neural networks, which, in this case, are self-teaching systems that become better at solving a certain task by repeating it many times. The scientists allow two neural networks to compete against each other (this is the heart of the GAN method). The task of one of the networks is to create a synthesised image that appears as realistic as possible. The task of the other network, “the opponent”, is to determine whether a given image is authentic or synthesised. Both networks initially perform poorly. But the network that is generating images uses feedback about which of its images fool the other network to learn and become more successful. At the same time, the assessing network uses feedback about when it succeeds in distinguishing between authentic and synthesised images. The two AI networks gradually become increasingly skilled.
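The competition described above can be illustrated with a deliberately tiny sketch. It is not the method used in the SCAPIS project: instead of images, the "authentic" data here are single numbers drawn from a bell curve, the generating network is reduced to a scale and a shift, and the opponent is a one-variable logistic classifier. All function names, parameters and default values (`train_toy_gan`, `real_mean`, `lr` and so on) are assumptions made for illustration.

```python
import math
import random


def sigmoid(t):
    """Numerically stable logistic function, used as the opponent's output."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def discriminator_step(disc, real, fake, lr):
    """One gradient-ascent step on log D(real) + log(1 - D(fake)).

    The opponent is rewarded for scoring the authentic sample high
    and the synthesised sample low.
    """
    w, c = disc
    d_real = sigmoid(w * real + c)
    d_fake = sigmoid(w * fake + c)
    w += lr * ((1.0 - d_real) * real - d_fake * fake)
    c += lr * ((1.0 - d_real) - d_fake)
    return (w, c)


def generator_step(gen, disc, z, lr):
    """One gradient-ascent step on log D(G(z)).

    The generator is rewarded when its sample is scored as authentic.
    """
    a, b = gen
    w, c = disc
    fake = a * z + b
    d_fake = sigmoid(w * fake + c)
    grad_fake = (1.0 - d_fake) * w  # derivative of log D(fake) w.r.t. fake
    return (a + lr * grad_fake * z, b + lr * grad_fake)


def train_toy_gan(steps=2000, lr=0.05, real_mean=4.0, real_std=0.5, seed=0):
    """Alternate opponent and generator updates on toy one-number 'images'."""
    rng = random.Random(seed)
    gen = (1.0, 0.0)   # generator: fake = a * z + b (hypothetical toy model)
    disc = (0.0, 0.0)  # opponent: D(x) = sigmoid(w * x + c)
    for _ in range(steps):
        real = rng.gauss(real_mean, real_std)  # an "authentic" sample
        z = rng.gauss(0.0, 1.0)                # random input to the generator
        fake = gen[0] * z + gen[1]
        disc = discriminator_step(disc, real, fake, lr)
        gen = generator_step(gen, disc, z, lr)
    return gen, disc


if __name__ == "__main__":
    gen, disc = train_toy_gan()
    print("generator (scale, shift):", gen)
    print("opponent (weight, bias):", disc)
```

Each pass through the loop mirrors the two kinds of feedback in the text: the opponent is nudged towards telling authentic from synthesised samples, and the generator is then nudged towards samples the updated opponent accepts. Real image GANs replace both toy models with deep neural networks, and training of this kind can oscillate rather than settle, so the sketch should be read as an illustration of the idea only.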

“This method based on competition has proved to be extremely effective in training AI networks to solve specific tasks”, says Claes Lundström.

The project to establish a SCAPIS-based computer lab has received funding from VINNOVA for a two-year period. During this time, the scientists plan to conduct a couple of pilot projects in which the synthesised images are used for AI training. This could involve, for example, developing a model to predict how a certain disease will progress, or one to identify new groups of disease. The work will not only give answers to medical questions: it will also validate whether the computer lab functions as intended.

The reality of AI in healthcare

Few people can be unaware of the hype surrounding AI. Many claim that AI will revolutionise the healthcare system. At the same time, a survey carried out by the National Board of Health and Welfare in the autumn of 2019 showed that – while it is true that much AI research is being carried out in Sweden – few AI-based tools are currently in use in healthcare. The aim of AIDA is to bring the academic world, healthcare system and industry together to translate technological advances in AI into practical tools in medical imaging. Claes Lundström believes that the largest challenge with respect to AI in medicine lies in creating AI solutions that are compatible with clinical workflows and that bring true improvement. A growing number of AI networks are now available that are at least as good as an expert at a particular task. The problem is that they are extremely specific in what they can do.

“The idea is often to increase efficiency by using AI support in a diagnostic step, without the doctor needing to look at the images. But if the doctor must examine the images for other parts of the assessment, it’s difficult to save any time. In contrast, if the AI support can eliminate manual steps, even very specialised solutions can have a huge positive effect. We who are developing AI support for healthcare must know what the conditions are like in the clinic, and the ways in which we can help”, says Claes Lundström.

He states that there is often an unconscious assumption that AI support is a black box that works completely independently.

“If we instead take as a starting point that the healthcare personnel will work together with the AI systems, we must put some thought into designing the human-machine interaction. It is always a person who receives the conclusion that the AI system reaches”, says Claes Lundström.

It is important that the user interface can inform the user about how uncertain the conclusion is, and that people can pose critical questions that help them understand the result that the AI support has reached.

“It may be one fundamental aspect of medical image analysis to know which parts of the image the AI model has based its answers on. An example is to know why the AI system has concluded that a specimen is cancerous, and whether the human pathologist agrees with it.”

Claes Lundström is an Adjunct Professor in the Department of Science and Technology (ITN), Linköping University. His primary research focus is developing methods that enable new levels of accuracy and efficiency within medical imaging. His efforts are concentrated at the crossroads of machine learning, visualization and human-computer interaction in demanding clinical settings.

Note: this article was translated by George Farrants. It originally appeared on the Linköping University webpage.