ΑΙhub.org

AlphaZero learns to solve quantum problems

By Mogens Dalgaard, Felix Motzoi, and Jacob Sherson

Technologies based on quantum physics, such as the quantum computer, have the potential to revolutionize our society. However, realizing these technologies still requires huge improvements in our ability to manipulate and control the associated quantum systems. Artificial intelligence (AI) could help close this gap and launch a new era of quantum technology. With our latest work using Deepmind’s AlphaZero algorithm, we hope to have taken initial steps in this direction.

What are quantum systems and why should I care?

Quantum physics deals with the smallest building blocks of our universe. Since human beings cannot see individual atoms or subatomic particles, our intuition about the quantum world is rather limited. In fact, the quantum world is much more exotic than our regular world and the behavior of individual particles is remarkably different. The reason why we should care about quantum physics is that our ability to both understand and control nature powers the technological evolution. Take for instance electricity, which we have both learned to understand and to manipulate at our convenience. This has brought us an incredible amount of technologies ranging from the electric light bulb to the modern computer.

Since its emergence in the beginning of the previous century, quantum mechanics has become a well-established field of physics and the theoretical foundation quite extensive. In other words, physicists understand the laws of quantum physics, but when it comes to controlling the quantum world for concrete applications, there is still a lot of room for improvement. Over the last couple of decades, physicists and computer scientists have speculated if one could build entirely new technologies based on quantum physics. This has resulted in a wide range of proposals with the most notable being the quantum computer. A regular computer uses bits i.e. binary numbers that can be either 0 or 1. In contrast, a quantum computer uses quantum bits or qubits, which due to the very exotic nature of quantum physics can exist in a simultaneous combination of 0 and 1.

This allows for efficient parallelization, which gives a massive increase in speed relative to regular computers. Quantum computers could even solve certain problems which current technology cannot solve, even if you combined all existing computers. However, no one has so far been able to build a fully functional quantum computer. This requires, among other things, huge improvements in our ability to control these quantum systems. This is where AI could play a significant role in the coming years.

Why is AlphaZero different?

AlphaZero was originally made for playing the ancient Chinese game GO. Despite the relatively simple playing rules, GO was hard to master for playing software due to the extremely large amount of possible board positions, which is estimated to be ![]() . In comparison, Chess only has around

. In comparison, Chess only has around ![]() possible board positions and there are roughly

possible board positions and there are roughly ![]() atoms in the universe. Keeping track of that many possible board configurations is quite impossible. Therefore AlphaZero used a deep neural network which learned to evaluate how likely it were to win starting from a specific board configuration. In order to reach the “winning combinations” on the board they supplied AlphaZero with a Monte-Carlo tree search (MCTS), which is a systematic way of looking ahead in the game.

atoms in the universe. Keeping track of that many possible board configurations is quite impossible. Therefore AlphaZero used a deep neural network which learned to evaluate how likely it were to win starting from a specific board configuration. In order to reach the “winning combinations” on the board they supplied AlphaZero with a Monte-Carlo tree search (MCTS), which is a systematic way of looking ahead in the game.

Because the sampling is necessarily very sparse in the total space of possible strategies and the neural network is very approximate in its evaluation, especially during its training phase, the tree is able to drastically improve the accuracy of the game play as well as the learning rate.

This is similar to how professional Chess and Go players learn to look several steps ahead when playing. The results were quite staggering; AlphaZero quickly demolished expert playing software as well as professional human players. For instance after just four hours of playing against itself, AlphaZero beat the leading playing software Stockfish in Chess. This was starting from scratch i.e. AlphaZero did not even known the playing rules. AlphaZero was so impressive that Danish grandmaster Peter Heine Nielsen compared its play to a superior alien species, that had visited earth just beat us in Chess.

AlphaZero on quantum problems

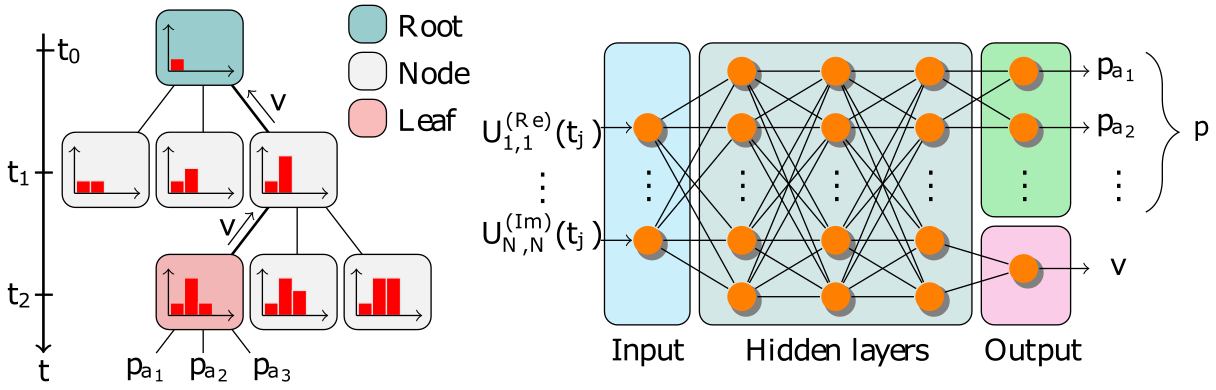

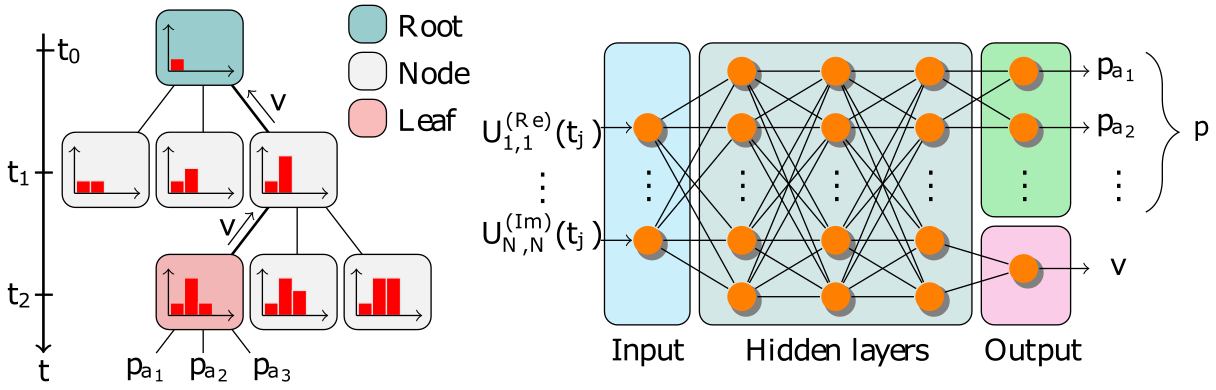

Similar to an ordinary computer, a quantum computer uses gate operations to manipulate its qubits. We sought to realize a specific gate operation by constructing a piecewise constant pulse sequence i.e. AlphaZero had to choose a pulse amplitude for each time-step. The physical system is at each time step ![]() mathematically described by a

mathematically described by a ![]() complex matrix

complex matrix ![]() , which we fold into a real 32-long vector. This is the input to the neural network as illustrated in Figure 1. Once a pulse sequence is completed, one can map the complex matrix

, which we fold into a real 32-long vector. This is the input to the neural network as illustrated in Figure 1. Once a pulse sequence is completed, one can map the complex matrix ![]() into a real number termed the fidelity

into a real number termed the fidelity ![]() , which has values between 0 and 1. Essentially the fidelity is a probability measure, with 1 denoting a 100% success.

, which has values between 0 and 1. Essentially the fidelity is a probability measure, with 1 denoting a 100% success.

The neural network outputs a value, which is an estimate of the final fidelity ![]() and some move probabilities

and some move probabilities ![]() . Both are used in the Monte-Carlo tree search. A tree consists of nodes (states) and edges (state-action pairs). The tree search starts with the root node and traverses down the tree by selecting actions at each step. Which action is selected is chosen by comparing properties intrinsic to each edge in a manner that balances exploration and exploitation. Once an edge is explored, its intrinsic properties are updated based on the results of the search. The forward search in the tree continues until it encounters a not-previously visited node, then the node is added to the tree and its edges initialized using

. Both are used in the Monte-Carlo tree search. A tree consists of nodes (states) and edges (state-action pairs). The tree search starts with the root node and traverses down the tree by selecting actions at each step. Which action is selected is chosen by comparing properties intrinsic to each edge in a manner that balances exploration and exploitation. Once an edge is explored, its intrinsic properties are updated based on the results of the search. The forward search in the tree continues until it encounters a not-previously visited node, then the node is added to the tree and its edges initialized using ![]() . All the visited edges in the search are updated in a back pass using

. All the visited edges in the search are updated in a back pass using ![]() . Once a number of such searches have been conducted, AlphaZero determines an action and updates the root node, while discarding the remainder of the tree. At the end, the neural network is updated based on data generated from the tree search such that v approaches the fidelity, and that the move probabilities increase the chance of selecting more promising actions. In short: the Monte-Carlo tree search allows AlphaZero to look several steps ahead, which allows for a more global search in the solution space. This gives AlphaZero an advantage over most other Reinforcement Learning methods for complicated tasks, where long-term strategies are crucial.

. Once a number of such searches have been conducted, AlphaZero determines an action and updates the root node, while discarding the remainder of the tree. At the end, the neural network is updated based on data generated from the tree search such that v approaches the fidelity, and that the move probabilities increase the chance of selecting more promising actions. In short: the Monte-Carlo tree search allows AlphaZero to look several steps ahead, which allows for a more global search in the solution space. This gives AlphaZero an advantage over most other Reinforcement Learning methods for complicated tasks, where long-term strategies are crucial.

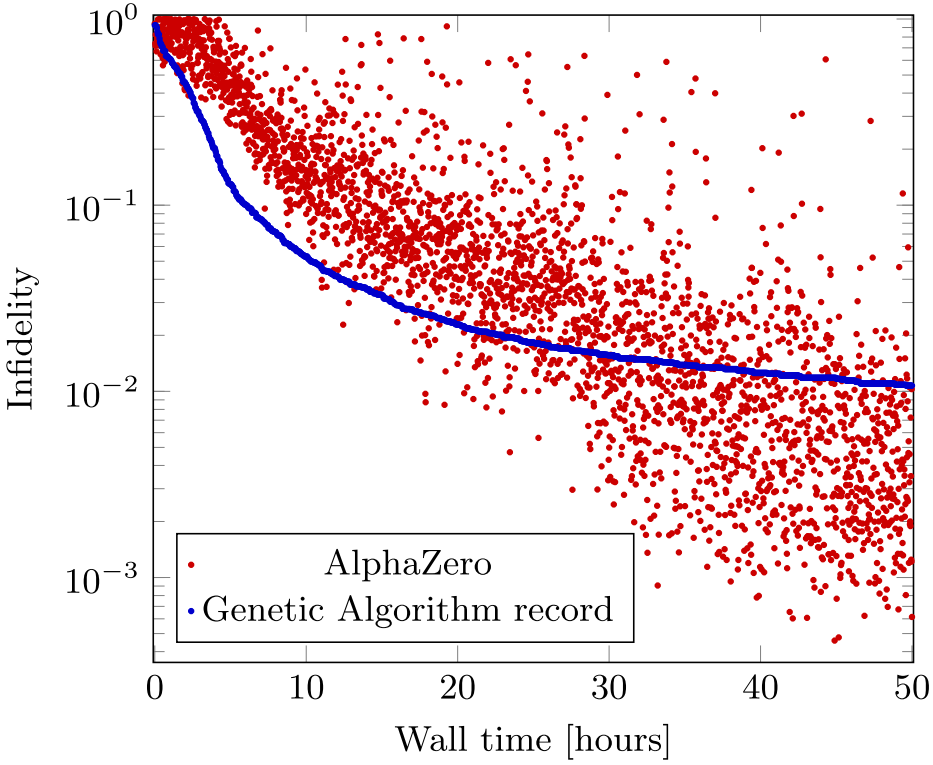

After we had successfully implemented AlphaZero, we utilized it on three different quantum control problems using the same algorithmic hyperparameters. For each problem, we compared AlphaZero to more-conventional algorithms. For instance in Figure 2 we compare AlphaZero with a genetic algorithm for the task of creating binary pulses during a 50 hour run. On the y-axis we plot the infidelity ![]() , which essentially is the error rate (i.e. lower is better). Initially, AlphaZero underperforms the genetic algorithm as it learns the quantum mechanical correlations, however this learning phase is quite short. Within 30 hours, we see that AlphaZero outperforms the genetic algorithm by over an order of magnitude improvement, with a large number of unique high-fidelity pulse sequences.

, which essentially is the error rate (i.e. lower is better). Initially, AlphaZero underperforms the genetic algorithm as it learns the quantum mechanical correlations, however this learning phase is quite short. Within 30 hours, we see that AlphaZero outperforms the genetic algorithm by over an order of magnitude improvement, with a large number of unique high-fidelity pulse sequences.

The AlphaZero Hybrid algorithm

Although AlphaZero worked particularly well on its own, it did meet its match on one particular task where gradient-based optimization methods are applicable and the gradients relatively cheap to calculate. Perhaps it is not surprising that AlphaZero would lose to a highly quantum-specialized optimization algorithm that physicist have spent the last 15 years on perfecting. However, we were sad if this was the hill that AlphaZero came to die on. In particular, because the gradient optimization algorithm in question had no learning incorporated into it. This implies that there is no gradual improvements in its performance and that all generated data is discarded instead of being used for subsequent learning. For this reason, we sought to make a Hybrid algorithm combining the best of both worlds: AlphaZero generated promising candidates by a wide exploration and these were subsequently efficiently optimized via gradient-based optimization. This led to huge improvement in both the quantity and quality of good solutions. In effect, AlphaZero and gradient optimization solve different problems: AlphaZero learns the underlying structure to the solution, gradient optimization optimizes in the local space around a seed solution. By only using gradient-optimization we may have two or three promising solutions after a 50-hour simulation. With our hybrid algorithm, we obtain a thousand.

In our opinion, this illustrates that an exciting path forward in the field of artificial intelligence is the combination of powerful, domain agnostic machine learning methods with both human expertise and domain specific brute calculation. First steps in this development have been seen for Chess, in which hybrid human-computer teams combining expert knowledge with the power of e.g. the Stockfish engine can efficiently outperform both humans and algorithms separately. It will be interesting to see how a team that also incorporates the AlphaZero zero-knowledge approach will fare in the coming years. While the vast majority of moves are best predicted by AlphaZero, nearing the end of episodes (i.e. the end of simulated games) becomes very calculational, and the specialized engine will gain an advantage.

The combination of domain specific and domain general methods with hierarchical, human-inspired, decision processes is one of the central elements of the vision for a future robust and powerful AI-approach that was recently presented by Gary Marcus and Ernest David in their book entitled Rebooting AI: Building Artificial Intelligence We Can Trust. This could mediate one of the most immediate drawbacks of the AlphaZero approach: its power lies in the fine-tuning of some tens of hyperparameters that, although powerful, will only be useful within a limited regime of validity. In our case, we did observe that the same set of hyperparameters worked well for three different quantum scenarios. If we had, however, changed the problem setting more dramatically, this would have broken down. Quantum computers utilize quantum parallelism to calculate multi-variate dynamics more efficiently. However, the same issue re-occurs as in the classical domain: the search space is exponential in the number of controls. Our AlphaZero implementation demonstrates that the approximate and imperfect solutions provided by neural networks can serve as a powerful seed generator for local, brute-force heuristics.

Future Work

One domain in which the machine learning approach is particularly challenged is in cases of incomplete or noisy information. This will also be the case for actual quantum experiments that are influenced by numerous subtle background environmental factors. It will thus be interesting to see whether quantum experiments could provide a fertile testing ground for AI algorithmic development and tests in the upcoming years.

Our work was published in the Nature Physics Journal, Quantum Information and is publicly available here.

Since its publication, we have been contacted by several research groups around the world including some larger tech companies also engaged with quantum computers. Therefore, we hope that our work will soon find its use in real experiments as well.