AIhub.org

BADGR: the Berkeley autonomous driving ground robot

By Greg Kahn

Look at the images above. If I asked you to bring me a picnic blanket in the grassy field, would you be able to? Of course. If I asked you to bring over a cart full of food for a party, would you push the cart along the paved path or on the grass? Obviously the paved path.

Prior navigation approaches based purely on geometric reasoning incorrectly think that tall grass is an obstacle (above) and don’t understand the difference between a smooth paved path and bumpy grass (below).

While the answers to these questions may seem obvious, today’s mobile robots would likely fail at these tasks: they would think the tall grass is the same as a concrete wall, and wouldn’t know the difference between a smooth path and bumpy grass. This is because most mobile robots think purely in terms of geometry; they detect where obstacles are, and plan paths around these perceived obstacles in order to reach the goal. This purely geometric view of the world is insufficient for many navigation problems. Geometry is simply not enough.

Can we enable robots to reason about navigational affordances directly from images? We developed a robot that can autonomously learn about physical attributes of the environment through its own experiences in the real world, without any simulation or human supervision. We call our robot learning system BADGR: the Berkeley Autonomous Driving Ground Robot.

BADGR works by:

- autonomously collecting data

- automatically labelling the data with self-supervision

- training an image-based neural network predictive model

- using the predictive model to plan into the future and execute actions that will lead the robot to accomplish the desired navigational task

(1) Data Collection

BADGR autonomously collecting data in off-road (left) and urban (right) environments.

BADGR needs a large amount of diverse data in order to successfully learn how to navigate. The robot collects data using a simple time-correlated random walk controller. As the robot collects data, if it experiences a collision or gets stuck, the robot executes a simple reset controller and then continues collecting data.
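As a rough illustration, a time-correlated random walk can be sketched as a first-order autoregressive process on the steering command: each new command is mostly the previous one plus a little noise, so the robot drifts smoothly instead of jittering. This is a minimal sketch, not the controller used on the robot; the function name, the `(speed, steer)` action format, and all parameter values are assumptions made for illustration.

```python
import random


def correlated_random_walk(num_steps, correlation=0.9, noise_std=0.3,
                           max_steer=1.0, speed=0.5, seed=None):
    """Return a list of (speed, steer) commands with smoothly varying steering.

    All parameter values are illustrative assumptions, not BADGR's settings.
    """
    rng = random.Random(seed)
    steer = 0.0
    commands = []
    for _ in range(num_steps):
        # Keep most of the previous steering command and add Gaussian noise,
        # which makes consecutive commands correlated in time.
        steer = correlation * steer + rng.gauss(0.0, noise_std)
        # Clip to the assumed steering limits.
        steer = max(-max_steer, min(max_steer, steer))
        commands.append((speed, steer))
    return commands


if __name__ == "__main__":
    for command in correlated_random_walk(5, seed=0):
        print(command)
```

A higher `correlation` produces longer, sweeping arcs, while a lower value approaches an uncorrelated random walk.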

(2) Self-Supervised Data Labelling

BADGR then goes through the data and calculates labels for specific navigational events, such as the robot’s position and whether the robot collided or is driving over bumpy terrain, and adds these event labels back into the dataset. These events are labelled by having a person write a short snippet of code that maps the raw sensor data to the corresponding label. As an example, the code snippet for determining if the robot is on bumpy terrain looks at the IMU sensor and labels the terrain as bumpy if the angular velocity magnitudes are large.

We describe this labelling mechanism as self-supervised because although a person has to manually write this code snippet, the code snippet can be used to label all existing and future data without any additional human effort.
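To give a feel for how small such a labelling snippet can be, here is a minimal sketch of a bumpiness labeller. It is not the code used in BADGR: the function name, the input format (one `(wx, wy, wz)` angular-velocity tuple per IMU sample), and the threshold value are all assumptions.

```python
import math


def label_bumpy(angular_velocities, threshold=1.0):
    """Label each IMU sample as bumpy when its angular velocity magnitude is large.

    `angular_velocities` is a sequence of (wx, wy, wz) tuples. The threshold
    (assumed here to be in rad/s) is an illustrative value, not BADGR's.
    """
    labels = []
    for wx, wy, wz in angular_velocities:
        magnitude = math.sqrt(wx * wx + wy * wy + wz * wz)
        labels.append(magnitude > threshold)
    return labels


if __name__ == "__main__":
    samples = [(0.0, 0.1, 0.0), (1.2, 0.8, 0.3)]
    print(label_bumpy(samples))
```

Because the function depends only on recorded sensor values, it can be rerun over every past and future log without anyone annotating individual frames.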

(3) Neural Network Predictive Model

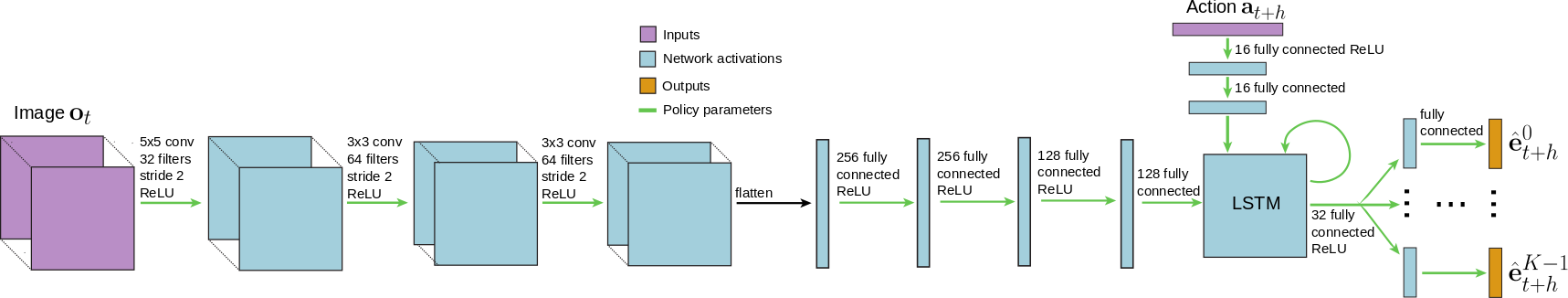

The neural network predictive model at the core of BADGR.

BADGR then uses the data to train a deep neural network predictive model. The neural network takes as input the current camera image and a future sequence of planned actions, and outputs predictions of the future relevant events (such as if the robot will collide or drive over bumpy terrain). The neural network predictive model is trained to predict these future events as accurately as possible.
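One way to picture the training data is as a sliding window over a logged trajectory: the image at time t and the next H actions form the input, and the event labels at the following H time steps form the target. The sketch below only builds these (input, target) pairs and does not implement the network itself. The `TrainingExample` type, the `build_examples` helper, and the indexing convention (the action at step t leads to the events at step t + 1) are assumptions for illustration.

```python
from dataclasses import dataclass
from typing import Any, Dict, List, Sequence, Tuple


@dataclass
class TrainingExample:
    image: Any                           # camera image at the current time step
    actions: List[Tuple[float, float]]   # the next `horizon` planned actions
    future_events: List[Dict[str, Any]]  # event labels at the following steps


def build_examples(images: Sequence[Any],
                   actions: Sequence[Tuple[float, float]],
                   events: Sequence[Dict[str, Any]],
                   horizon: int) -> List[TrainingExample]:
    """Slice one logged trajectory into (image, actions) -> future events pairs."""
    examples = []
    for t in range(len(images) - horizon):
        examples.append(TrainingExample(
            image=images[t],
            actions=list(actions[t:t + horizon]),
            # Assumed convention: the action at step t produces events at t + 1.
            future_events=list(events[t + 1:t + 1 + horizon]),
        ))
    return examples
```

A model trained on such pairs can then be queried with any candidate action sequence, which is what makes it usable for planning.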

(4) Planning and Navigating

BADGR predicting which actions lead to bumpy terrain (left) or collisions (right).

When deploying BADGR, the user first defines a reward function that encodes the specific task they want the robot to accomplish. For example, the reward function could encourage driving towards a goal while discouraging collisions or driving over bumpy terrain. BADGR then uses the trained predictive model, current image observation, and reward function to plan a sequence of actions that maximize reward. The robot executes the first action in this plan, and BADGR continues to alternate between planning and executing until the task is complete.
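The plan-then-execute loop can be sketched with a simple random-shooting planner: sample many candidate action sequences, score each with the reward function applied to the model's predictions, and keep the best. Random shooting is a stand-in chosen for brevity; this post does not specify the optimizer BADGR uses. The prediction format, the reward weights, and `toy_model` (a hand-written substitute for the trained network) are all assumptions.

```python
import math
import random


def reward(predictions, goal, collision_weight=10.0, bumpy_weight=1.0):
    """Sum per-step rewards: approach the goal, avoid collisions and bumps."""
    total = 0.0
    for p in predictions:
        distance = math.dist(p["position"], goal)
        total += (-distance
                  - collision_weight * p["collision"]
                  - bumpy_weight * p["bumpy"])
    return total


def plan(model, image, goal, horizon=5, num_candidates=64, rng=None):
    """Random-shooting planner: return the best of several sampled sequences."""
    rng = rng or random.Random()
    best_actions, best_reward = None, -math.inf
    for _ in range(num_candidates):
        # Each action is an assumed (speed, steer) pair with steer in [-1, 1].
        actions = [(0.5, rng.uniform(-1.0, 1.0)) for _ in range(horizon)]
        candidate_reward = reward(model(image, actions), goal)
        if candidate_reward > best_reward:
            best_actions, best_reward = actions, candidate_reward
    return best_actions


def toy_model(image, actions):
    """Hypothetical stand-in for the trained network (it ignores the image).

    It integrates simple kinematics and marks terrain with y > 0.5 as bumpy.
    """
    x = y = heading = 0.0
    predictions = []
    for speed, steer in actions:
        heading += 0.5 * steer
        x += speed * math.cos(heading)
        y += speed * math.sin(heading)
        predictions.append({"position": (x, y), "collision": 0.0,
                            "bumpy": 1.0 if y > 0.5 else 0.0})
    return predictions


if __name__ == "__main__":
    best = plan(toy_model, image=None, goal=(3.0, 0.0), rng=random.Random(0))
    print("execute first action:", best[0])
```

On the robot, only the first action of the returned sequence would be executed before replanning from the next camera image, matching the alternation between planning and executing described above.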

In our experiments, we studied how BADGR can learn about physical attributes of the environment at a large off-site facility near UC Berkeley. We compared our approach to a geometry-based policy that uses LIDAR to plan collision-free paths. (But note that BADGR only uses the onboard camera.)

BADGR successfully reaches the goal while avoiding collisions and bumpy terrain, while the geometry-based policy is unable to avoid bumpy terrain.

We first considered the task of reaching a goal GPS location while avoiding collisions and bumpy terrain in an urban environment. Although the geometry-based policy always succeeded in reaching the goal, it failed to avoid the bumpy grass. BADGR also always succeeded in reaching the goal, and succeeded in avoiding bumpy terrain by driving on the paved paths. Note that we never told the robot to drive on paths; BADGR automatically learned from the onboard camera images that driving on concrete paths is smoother than driving on the grass.

BADGR successfully reaches the goal while avoiding collisions, whereas the geometry-based policy is unable to make progress because it falsely believes the grass is an untraversable obstacle.

We also considered the task of reaching a goal GPS location while avoiding both collisions and getting stuck in an off-road environment. The geometry-based policy almost never crashed or became stuck on grass, but sometimes refused to move because it was surrounded by grass which it incorrectly labelled as untraversable obstacles. BADGR almost always succeeded in reaching the goal by avoiding collisions and getting stuck, while not falsely predicting that all grass was an obstacle. This is because BADGR learned from experience that most grass is in fact traversable.

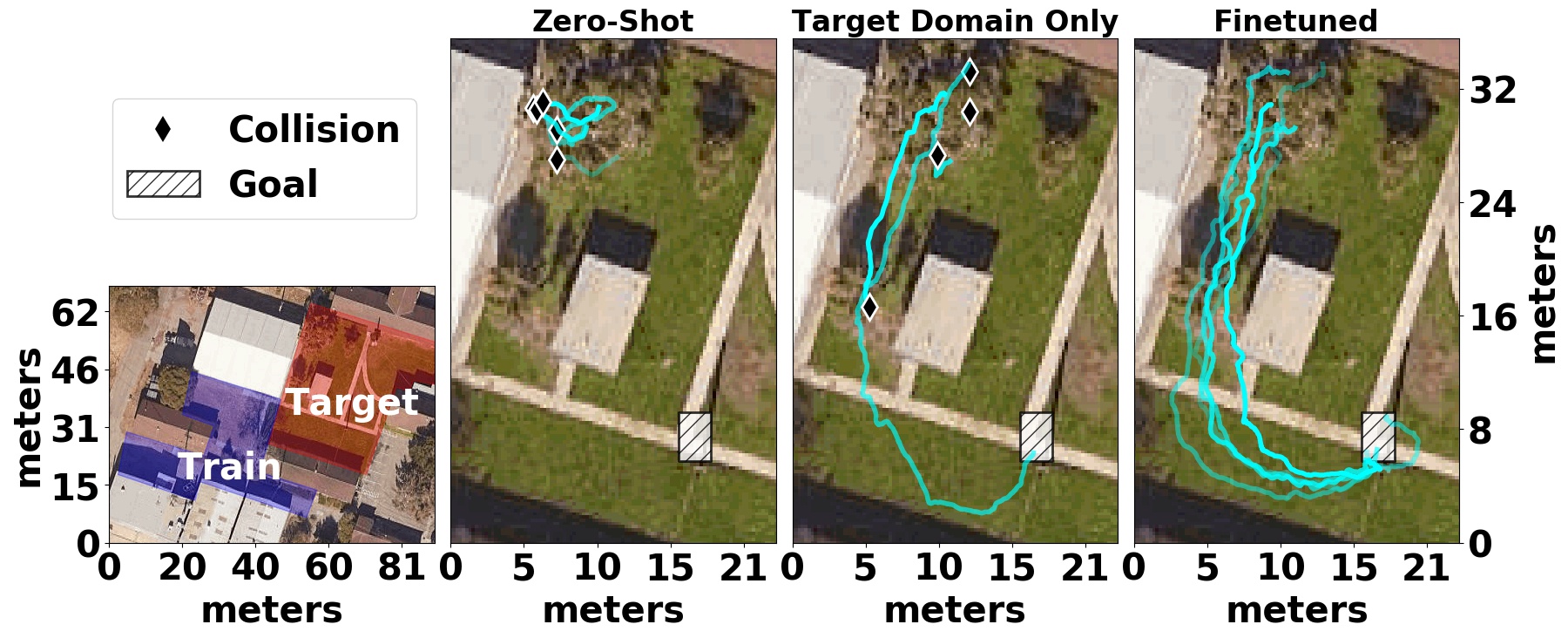

BADGR improving as it gathers more data.

In addition to being able to learn about physical attributes of the environment, a key aspect of BADGR is its ability to continually self-supervise and improve the model as it gathers more and more data. To demonstrate this capability, we ran a controlled study in which BADGR gathers and trains on data from one area, moves to a new target area, fails at navigating in this area, but then eventually succeeds in the target area after gathering and training on additional data from that area.

This experiment not only demonstrates that BADGR can improve as it gathers more data, but also that previously gathered experience can actually accelerate learning when BADGR encounters a new environment. And as BADGR autonomously gathers data in more and more environments, it should take less and less time to successfully learn to navigate in each new environment.

BADGR navigating in novel environments.

We also evaluated how well BADGR navigates in novel environments—ranging from a forest to urban buildings—not seen in the training data. This result demonstrates that BADGR can generalize to novel environments if it gathers and trains on a sufficiently large and diverse dataset.

The key insight behind BADGR is that by autonomously learning from experience directly in the real world, BADGR can learn about navigational affordances, improve as it gathers more data, and generalize to unseen environments. Although we believe BADGR is a promising step towards a fully automated, self-improving navigation system, a number of open problems remain: how can the robot safely gather data in new environments, adapt online as new data streams in, and cope with non-static environments, such as humans walking around? We believe that solving these and other challenges is crucial for enabling robot learning platforms to learn and act in the real world.

This post is based on the following paper:

- Gregory Kahn, Pieter Abbeel, Sergey Levine. BADGR: An Autonomous Self-Supervised Learning-Based Navigation System. (Website, Video, Code)

I would like to thank Sergey Levine for feedback while writing this blog post.

This article was initially published on the BAIR blog, and appears here with the authors’ permission.