AIhub.org

The usefulness of useless AI

In every crisis since the fifties at least one article emerges to ask: where are the robots to save us? No robots are seen en masse collecting garbage, administering Covid-19 tests, or farming the fields. What we want is a warehouse full of useful tools and devices to pull out when the going gets tough. What we have are endless grant applications for studying theoretical situations and university halls filled with dithering professors. What do they even do, but teach the 2 hours per week? And yet, look closely and our world is not the world of ten years ago – aspects of automated cognition are all around us doing things we do not even realise are automated, adding gains we did not even think to ask for. The one consistent thing about computing sciences is that they do come to our rescue [1] but almost never in the shape in which we envisioned it – consider Rick Deckard in Blade Runner going out to use a payphone.

If you have ever studied artificial intelligence you know that its development cannot be separated from computer science and from math. To the outsider, there are those things that are definitely AI: a humanoid robot maid, recognising something in an image, answering a voiced question, calculating predictions with a precise probability after sifting through a lot of documents (visually mind!), defeating people at a game of chess. If what you research cannot be directly connected to recognisable, and therefore useful, AI, then why should taxpayer money be spent on it? When theory researchers are asked to explain why they are pursuing a particular problem, they tend to answer with “because it is interesting” or “we aimed for space exploration but we discovered Teflon, and how cool is Teflon, huh?” Such answers do not help justify the funding of theoretical research. The true justification sadly does not come in a convenient and simple if-then argument.

A lot of “useful” knowledge in AI, as in many other disciplines, is not reached by direct top-down problem solving. For a problem to be solved, we necessarily need to know what the problem is. We also need to know how things relate to each other to know what its solution should even look like. Say you want to build a house and you know somehow that the house has walls. So you need to build walls, but what is a wall? Well, you think about it a little bit and realise that constructing the entire wall from units you bind together would be easier (which by the way would also imply that it is easier to build straight walls). Now you need to construct a brick. To invent a brick you need to know what materials are available but also how these materials behave in different weather conditions, whether their properties can be changed, how malleable they are, etc. If no materials we already have will do, we need to know how to make new materials. We need some curious person to have noticed that some mud is different from other mud and to have played with it, observing how it can be stretched and moulded. We would have needed them to throw it into the fire, smash it when it was cold, watch it in the rain, and eventually tell people all about this weird little thing. If there is no research already available on materials and their properties, there will be no bricks, no walls, and no houses. If you were to ask this mud-curious person why they studied clay they would likely say: because it was interesting.

One way to define artificial intelligence is as the discipline that is interested in finding out how to automate cognition. Cognition is a collection of individual tasks: making decisions, recognising a cat behind the chair, summing up numbers. Cognition is also a single process that supports agency by “acquiring knowledge and understanding through thought, experience, and the senses” [2]. Automation [3] predates computation and artificial intelligence. Automation is the study of replacing human activities with technology, typically for the purpose of production. Some activities can be automated without requiring cognition by cleverly arranging the work environment and replacing a human limb movement with a robot limb movement.

Some of what computers do is a cognitive task, just not exactly computation. “Computer”, to agree with Richard Feynman [4], is a misleading term because computers do not really do calculations; they actually manage data. To be even more precise, data management is executed by comparing two pieces of material and taking actions based on what that comparison and those before it showed. This is not much different from what that assembly line robotic arm does. The “big trick” in computer science is leveraging that “assembly line” level of power into doing actual computation, data organisation, image processing. The “big trick” in artificial intelligence is doing the same for cognitive tasks, one by one and all of them.
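To make that “big trick” a little more concrete, here is a minimal sketch in Python of addition built from nothing but comparing two symbols and acting on the result. It is an illustration only, not how any particular machine is wired: the function names (`same`, `add_column`, `add_binary`) are made up for this example, and the padding of the inputs is plain bookkeeping that a real circuit would get for free from its fixed width.

```python
def same(a: str, b: str) -> bool:
    """The only primitive we allow ourselves: compare two pieces of material."""
    return a == b


def xor(a: str, b: str) -> str:
    # "1" exactly when the two symbols differ.
    return "0" if same(a, b) else "1"


def and_(a: str, b: str) -> str:
    # "1" only when both symbols are "1".
    return "1" if same(a, "1") and same(b, "1") else "0"


def or_(a: str, b: str) -> str:
    # "0" only when both symbols are "0".
    return "0" if same(a, "0") and same(b, "0") else "1"


def add_column(x: str, y: str, carry: str) -> tuple[str, str]:
    """Add one binary column; returns (digit, carry_out)."""
    partial = xor(x, y)
    digit = xor(partial, carry)
    carry_out = or_(and_(x, y), and_(partial, carry))
    return digit, carry_out


def add_binary(a: str, b: str) -> str:
    """Add two binary strings column by column, right to left."""
    width = max(len(a), len(b))  # bookkeeping: pad both inputs to one width
    a, b = a.rjust(width, "0"), b.rjust(width, "0")
    carry = "0"
    digits = []
    for x, y in zip(reversed(a), reversed(b)):
        digit, carry = add_column(x, y, carry)
        digits.append(digit)
    if same(carry, "1"):
        digits.append("1")
    return "".join(reversed(digits))


print(add_binary("101", "11"))  # 5 + 3 in binary
```

Every step above is a comparison followed by a choice between two symbols, yet chained together in the right order the result is arithmetic.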

After you have experienced a house, you understand what it is, how it works and you are more or less able to construct one [5]. Experiencing cognition does not enable us to understand it. Furthermore, understanding cognition does not guarantee us an ability to create a cognition-able entity. There is no reason to believe that we would be able to recreate even a single cognitive process fully by using anything other than the human body. We do understand how birds fly, but we use completely different properties of matter and energy to construct airplanes. In automation, accomplishing a task in an efficient way is the goal, not the replication of how a human would do it.

The question then is: what set of actions is there out in the world that, when performed in the right order, gives us “there is a cat in that photo”? We can ask this same question, to state the obvious, for every single individual cognitive task that we would like to automate. A person seeking to solve such a problem will need to rely on a lot of understanding of the basic material available, here abstract concepts.

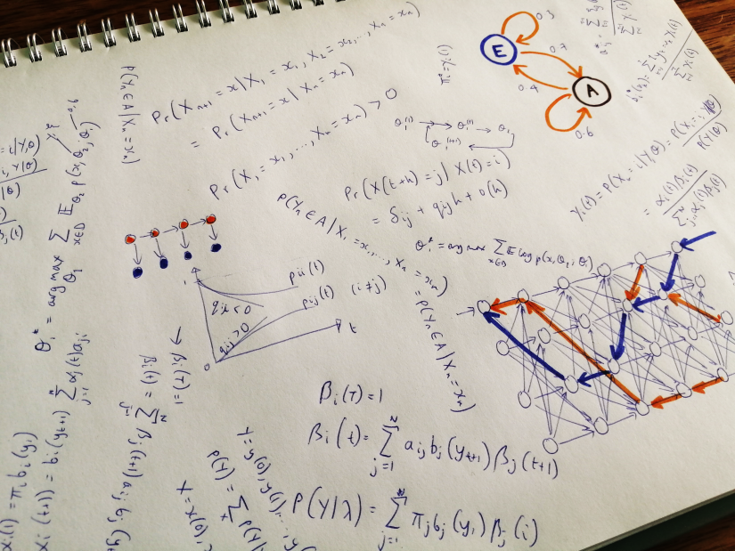

Computer science was developed on the back of mathematics. In the forties when the first computers were being built, there were centuries of research in mathematical logic, graph theory, set theory, linear algebra to help guide the process of building programming from comparing two pieces of material. Artificial intelligence needs that math and computer science but also more: psychology, social science, decision theory, law, philosophy. In AI we sometimes also need to extend these disciplines further by asking: what would happen to this theory or observation if the agent were not human?

The usefulness of useless knowledge is an article by Abraham Flexner published in Harper’s in 1939 [6], which was recently extended into a book of the same title by Robbert Dijkgraaf. This must-read short piece argues across time why there is only not-yet useful knowledge and why it is worth investing in it. This work is also useful to remind us of all the humanity-altering solutions to problems we have built using existing understanding of peculiar little events in the world. The gap between a work of theory and its use in an application seems to rarely be less than twenty years.

Heinrich Hertz was a physicist of the late 19th century who first conclusively proved the existence of the electromagnetic waves predicted by James Clerk Maxwell’s equations of electromagnetism. He accomplished this by conducting numerous radio wave experiments. When asked about the applications of his discoveries, Hertz replied [7]: “Nothing, I guess.” We have no similar statement on record from Andrey Markov, who worked in stochastic theory at the beginning of the 20th century. His discoveries fuel many practical achievements of AI such as machine translation [8] and reinforcement learning, to name two. It is safe to say that usefulness for AI was not on Professor Markov’s mind when he developed his theories.

The history of AI is filled with examples of theoretical work fuelling applications that improve our lives. To outline all examples one has to go by individual sub-disciplines. This is a task we should perhaps do regularly since it is very easy to get caught up in the spectacle that is an AI player of games without asking what materials from the warehouse of theoretical knowledge this master player used. There is also the much harder task of cataloguing what is in the warehouse but not yet used. Survivorship bias weighs our judgement on what is useful by considering only what was used. To justify new theoretical work based only on similarity to used theoretical work is to deprive ourselves of potentially critical future components of applications.

When we face a problem, we look for scientists and engineers to apply their skills and propose a solution. Scientists and engineers do different things. To use an old anecdote, an engineer presented with a problem asks “What kind of a solution do you want?” and a scientist asks “Does this problem have a solution?” Engineers look for solutions, but for them to be able to do their job, we need a scientist to have identified and defined the problem. Problems are theoretical, until they are not. When the real need arises, it is nice to have a full warehouse of options collected by the proverbial mud-curious. Everything eventually gets used; there is no expiration date on knowledge.

[1] They seem to shyly appear already, see The five: robots helping to tackle coronavirus.

[2] https://www.lexico.com/definition/cognition

[3] I highly recommend “Automation: Friend or Foe?” by R.H. Macmillan, published by Cambridge University Press in 1956, for a blast from the automation past.

[4] From a talk Richard Feynman gave in 1985 on computer heuristics. Can be watched here.

[5] Please do not though, unless you are a civil engineer.

[6] Access it here.

[7] Norton, Andrew (2000). Dynamic Fields and Waves. CRC Press. p. 83. ISBN 0750307196.

[8] See for example the Wikipedia page on Machine Translation.