AIhub.org

Interview with Falaah Arif Khan – talking security, comics and demystifying the hype surrounding AI

Falaah Arif Khan is the creator of “Meet AI” – a scientific comic strip about the human-AI story. She currently works as a Research Engineer at Dell EMC, Bangalore, but will shortly be heading to New York University’s Center for Data Science to pursue a Master’s in Data Science. We talked about some of the machine learning projects she’s worked on, her comic book creations, and the need for clear and accurate communication in the field of AI.

Could you tell us about the area you are currently working in?

I like to describe my research area as meta-security. When customers come to us it is to enhance the security of their product through access management, service authorization, session management and/or authentication. My role within the team is to use data-driven insights to build features that will bolster the security of our Identity and Access Management (IAM) product. I have been doing this for about two years, since graduating. As a domain I think of my work more in terms of human-computer interaction. I do a lot of behavioural profiling on log-in pages, trying to understand the best way to distinguish a genuine user from a malicious user.

We do a lot of user profile modelling. If you look at data, we get many labelled samples for genuine users but very few for malicious users. There is much work profiling the ‘good’ side. Then you can look at how far from this behaviour the incoming user is to identify potential attackers. I work a lot with auto-associative models, and this carries over into human-computer interaction.
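As a rough illustration of profiling the ‘good’ side and then measuring how far an incoming user sits from it, the sketch below fits a linear auto-associative model (PCA) to genuine samples only and scores new samples by reconstruction error. The feature vectors, the synthetic data and the function names `fit_profile` and `anomaly_score` are assumptions made for this example; the interview does not say which model or features are used in practice.

```python
import numpy as np

def fit_profile(genuine, n_components=2):
    """Fit a linear auto-associative model (PCA) to genuine-user samples."""
    mean = genuine.mean(axis=0)
    # Rows of vt are the principal directions of the centred genuine data.
    _, _, vt = np.linalg.svd(genuine - mean, full_matrices=False)
    return mean, vt[:n_components]

def anomaly_score(sample, mean, components):
    """Reconstruction error: how badly the profile reproduces the sample."""
    centred = sample - mean
    reconstructed = centred @ components.T @ components
    return float(np.linalg.norm(centred - reconstructed))

rng = np.random.default_rng(0)
# Synthetic "genuine" behaviour (assumption): 4 features lying near a 2-D plane.
latent = rng.normal(size=(200, 2))
mixing = np.array([[1.0, 0.5, 0.0, 0.2], [0.0, 1.0, 0.5, -0.3]])
genuine = latent @ mixing + 0.01 * rng.normal(size=(200, 4))

mean, components = fit_profile(genuine)
typical = genuine[0]                        # a sample the profile was built from
outlier = np.array([3.0, -3.0, 3.0, 3.0])   # behaviour far from the genuine plane

typical_score = anomaly_score(typical, mean, components)
outlier_score = anomaly_score(outlier, mean, components)
```

Only genuine samples are needed to train this kind of model, which suits the label imbalance described above. A threshold on the score would then decide when to flag a user.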

Do you have an example of an interesting project you’ve worked on?

One of the products I have worked on is behaviour-based authentication. So, a conventional authentication is based on a token or a username and password. But, if you lose those then any bad actor could access the system. The work we did was to use a behavioural log-in page: our system would study the user’s behaviour while typing their username and password and create a profile unique to that person. Currently, our work is profiling human behaviour versus bot behaviour and finding the distinction between them to make a classification. In the long-run you could imagine this becoming a password-less system, whereby the way in which you type your username can be used to authenticate you. So, this isn’t just human versus bot, it’s human versus human. So, I could have your credentials but if I don’t behave like you then I can’t hijack your account.

There are a lot of interesting insights that emerge from this work. One especially interesting paper published on this topic makes a brilliant observation that similar to the different blood types humans have, we also have different typing types.
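To make the idea of a typing profile concrete, here is a minimal sketch of two timing features that are commonly used in keystroke-dynamics work: dwell time (how long a key is held) and flight time (the gap between releasing one key and pressing the next). The `KeyEvent` structure, the timestamps and the choice of these two features are assumptions for illustration; the interview does not specify which signals the log-in page records.

```python
from dataclasses import dataclass

@dataclass
class KeyEvent:
    key: str
    down_ms: float  # timestamp when the key was pressed
    up_ms: float    # timestamp when the key was released

def timing_features(events):
    """Return (dwell times, flight times) for a sequence of key events."""
    dwell = [e.up_ms - e.down_ms for e in events]
    # Flight time: gap between releasing one key and pressing the next.
    flight = [b.down_ms - a.up_ms for a, b in zip(events, events[1:])]
    return dwell, flight

# Hypothetical timings for someone typing the first three letters of a username.
events = [
    KeyEvent("f", 0.0, 90.0),
    KeyEvent("a", 150.0, 230.0),
    KeyEvent("l", 300.0, 370.0),
]
dwell, flight = timing_features(events)
```

Vectors like these, collected over many log-ins, are the kind of input a per-user profile or a human-versus-bot classifier could be trained on.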

Are there any other projects that you’ve found particularly interesting?

I work a lot with online learning and reinforcement learning algorithms because they tie into the idea that you can’t make a model and use it forever. Your model influences behaviour on your platform, which will in turn affect the data that your model was made from. I have been designing an adaptive CAPTCHA (completely automated public Turing test to tell computers and humans apart) mechanism. This enables you to learn user preferences from the way they interact with the CAPTCHA; for example, are they able to solve a certain challenge or not? Similarly, you can learn malicious user behaviour by looking at deep learning models that perform optical character recognition. This is a bit like inverse adversarial learning. What the industry is trying to do now is find samples that machine learning models are bad on; we use those samples (based on what humans are good at and what bots are bad at) to make an adaptive mechanism that will generate batches.

Out of interest, how long, roughly, do you think it takes for a typical CAPTCHA to be “cracked”?

That actually motivated a lot of the design of our solution. Historically, what we’ve seen is that a security expert would design a CAPTCHA scheme, for example, we started with character CAPTCHA. Then, there were a ton of papers about how to break that CAPTCHA and then everybody moved to image CAPTCHA. I would say it’s a matter of months, on average, before we need a new scheme. And that change required a manual redesign so the CA (completely automated) in CAPTCHA is not really accurate!

How our system overcomes this is, there is no manual redesign. It’s an adaptive mechanism – we don’t design the new CAPTCHA. The model looks at the parameters it was using to generate challenges the previous time and it optimises those parameters that bots have historically failed on. For example, in a character CAPTCHA the parameters could be characters, font size or font. Instead of the designer having to manually redesign the character set, I start with the largest set of parameters and put it out into a test environment, and I get feedback on which points/configurations are being broken easily. Then the model itself learns what the optimal weight for each parameter should be.

A lot of literature today is on how to break CAPTCHA. We tried to flip it around and actually try to strengthen the CAPTCHA. The idea was to take all the information out there about the best adversary to a CAPTCHA, build that adversary, and use feedback from that adversary to keep optimising the generation mechanism. So, to link back to your question, if you don’t do that then every two months, no matter how well we think we’ve adapted to the current landscape, we’d have to redesign anyway.
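The feedback loop described in this answer can be sketched as a simple reweighting scheme: each candidate configuration carries a weight, and configurations that the adversary solves easily lose weight after every round. The multiplicative update, the font names, the solve rates and the function names below are all assumptions chosen for illustration, not the mechanism actually deployed. A real system would also need to track how well humans solve each configuration.

```python
import math
import random

def update_weights(weights, bot_solve_rate, learning_rate=2.0):
    """Shift probability mass toward configurations that bots fail on."""
    scaled = {
        name: w * math.exp(-learning_rate * bot_solve_rate[name])
        for name, w in weights.items()
    }
    total = sum(scaled.values())
    return {name: w / total for name, w in scaled.items()}

def sample_config(weights, rng):
    """Draw the configuration for the next challenge according to the weights."""
    names = list(weights)
    return rng.choices(names, weights=[weights[n] for n in names], k=1)[0]

# Hypothetical fonts and hypothetical bot solve rates from a test environment.
weights = {"serif": 1 / 3, "handwritten": 1 / 3, "distorted": 1 / 3}
bot_solve_rate = {"serif": 0.9, "handwritten": 0.4, "distorted": 0.1}

# Five rounds of adversary feedback, starting from the full parameter set.
for _ in range(5):
    weights = update_weights(weights, bot_solve_rate)

rng = random.Random(0)
next_font = sample_config(weights, rng)
```

Starting from equal weights over the largest parameter set mirrors the approach described above: nothing is removed by hand, and the weights drift toward whatever the adversary currently struggles with.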

What is it you particularly like about working in the field of security?

Security is a very challenging, hard problem. I’m an extremely competitive person so I like working with domains that are fundamentally imbalanced. When you are designing security you need a fix for all the attacks you have seen (which may not even be all of the attacks out there). However, in order to break a system you only need to find one weakness. I like the fact that this problem is fundamentally asymmetric. It’s a huge challenge. The reason I feel that machine learning is the best fit for this domain is that you are actually out of your depth – it’s impossible for a human to look at the volume of data and figure out the 0.1 or 0.01% of bad actors. Using machine learning to help find patterns in this large volume of data is the perfect fit.

Because security is such a difficult domain we don’t expect to completely automate. When a model is doing pattern recognition and flags a potential bad actor, in the domain of security you won’t just rely on that – you will still go back to a domain expert. This is what a lot of other domains also need to do. In situations where the reason for a decision is transparent then you tend to trust the machine more. In some domains you can get away with blindly trusting a model, with your prediction driving a policy. However, in security you can’t afford to do that so you are forced to be more vigilant. You are forced to look at exactly what the model is doing, and what are the limits of what it’s telling you.

Moving on to talk about your comic, “Meet AI”, I’m interested in how you first got into producing comics and what was your inspiration?

I’m actually a multimedia artist, I do canvas, watercolours, sketches. It’s always been on my bucket list to make a comic book but I could never figure out what to make a comic book about. Until last year I never saw a long form scientific comic – they were all about superheroes. However, when I was applying to grad school, going through a ton of professors’ research pages, I found really interesting people who inspired me to do this kind of work.

The reason I thought of doing the comic was due to Riccardo Manzotti, a researcher in Milan; he’s now a philosopher but was previously a psychologist and an AI scholar. He translates his philosophy, theories and research into the form of comics. His comic was the last thing in a newsletter I was reading and when I saw it, it made me realise that you can make art that is technical. I reached out to him and we chatted about how sometimes it’s easier to show ideas than to write about them.

I’m also inspired by my own personal experiences and by the work of professors I admire. One of them is Professor Zack Lipton at Carnegie Mellon University. I read a blog post of his comparing Death Note and the Deep Learning Landscape. This was not anything I’d seen a researcher write about before. His paper on Troubling trends in ML Scholarship also inspired me to take the discussion around AI Hype one step further, beyond academic circles, in a way that would be accessible to the general public. I also really like Lex Fridman’s podcast on artificial intelligence; he goes beyond technical details and explores philosophic questions. So, the creative analogies for the comic came from them.

The last source of inspiration came from frustrations I was seeing in my own life. For example, when I had to go and pitch a product to management, their answer to everything was “OK, use deep-learning”. But that’s not how these models work. A lot of it also came from conversations I had with people who weren’t technical experts but who had bought into the hype. I can’t blame them – if the loudest voices in the industry are hyping up their work then that’s what the general public is going to hear.

To be honest the reaction to the comic has been beyond anything I could have expected. I made it thinking if this was me two years ago, if I found this, it would blow my mind. At some level, I was making it for therapeutic purposes, but there have been a lot of people who’ve related to it across the spectrum of expertise – from students up to professors. A lot of the researchers and professors that inspired me have provided positive feedback.

You also do shorter comic strips on a more regular basis, is that correct?

Yes, I’m actually working on a bunch of follow-ups, including a couple of collaborations with professors! I won’t give anything away, but make sure to follow on social media for information on the next big project.

When I realised this wasn’t just a one-off comic, I wanted to produce more long-form volumes. However, these take time to produce, especially with me starting grad school soon. So, I thought I’d have a weekly strip that would keep people engaged. Twitter is the best fodder for these kinds of things! It’s kind of spoilt Twitter for me now, as everything I read translates to a comic strip in my head.

How long does it take to put together your comic strips?

The weekly strips take a couple of days. Creatively it comes in spurts for me, so when I get ideas I make a few then release them weekly. Then there are weeks where I don’t want to make any.

The long-form comics take months. The upcoming volume is taking a lot of time because I realise the script needs to be really tight.

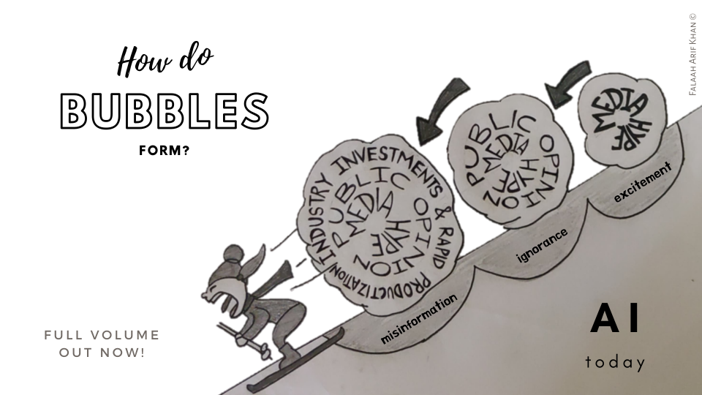

I understand that one of your goals is to demystify the hype surrounding AI. I wondered if you had any thoughts on how we could all act to help reduce the hype?

At least for me personally, I have been mailing the comic to every early-career researcher I know because a year ago I bought into the hype. The reason I felt I had to put the Amazon story in the comic was that I remember reading it and thinking “oh my God, what has happened!”. Now that I actually follow and understand the landscape I realise that there are a lot of people talking about trying to counter the hype, and looking at accountability, transparency and fairness. The problem is that many of the people with the loudest voices aren’t even within the circle of academia and they aren’t experts or even concerned about these issues.

Part of the issue is press releases that are used to share research and how they go from scientific writing to science fiction. There needs to be a standard that we all follow. That aspect is a lot more manageable from within the community. I think in a lot of research labs there is a culture of self-correction which is good.

It is important that respected researchers with large followings speak out. When a mis-informed tweet goes out it’s important that these respected researchers immediately speak up about the fact that it’s not representative of our landscape. This could be a real help for early career researchers, as there’s no way they will have the required depth of knowledge to understand everyone’s research and they may just follow the loudest voices. So, it’s important for our loudest voices to be in-line with the rest of the community.

It would also be good if news articles cited the part of the research paper that they were talking about. Even sharing a link to the original paper might not be enough – a lot of non-technical people aren’t going to go and read the research paper. At least citing the relevant part of the paper might help to reduce the hype. Maybe researchers could band together and set out standards for reporting?

Do you have any ideas for the area of research you’d like to pursue during or following your master’s course?

I am interested in fairness, accountability and transparency (FAccT) in ML and AI. These should be built into algorithms and systems as standard.

Linking back to the comic, one of the things I speak to people about when I give them the comic is: we can’t afford to think of social sciences and engineering as separate disciplines. You need to consider the social impact of your system when you are designing it. The reason I’d like to move into FAccT in ML is because it’s a fledgling field and it’s basically developing as a separate discipline. However, I think that every algorithm needs to be tested against these metrics of fairness etc. I feel that if I can get in while the field is still developing, we can hopefully make it something that everybody has to be part of and it’s not just one set of researchers who are doing this.

I’m also very interested in reinforcement learning and online learning. I feel that a big problem to solve in the field is domain shift and generalisation across distributions. I think that online learning does a much better job there as it’s able to adapt. Of course there will be problems with specificity to the training environment and not being able to generalise across environments but I feel that RL and online learning, especially for security applications, is the area I’d like to go into in more depth.

About Falaah Arif Khan

Falaah Arif Khan is a Research Engineer at Dell EMC, Bangalore where she designs data-driven models for Identity and Access Management. She has worked on the detection of malicious behavior in login activity and on the formulation of suitable responses to malicious requests, in the form of adaptive CAPTCHAs, Multi Factor Authentication mechanisms and the strategic placement of Honeypots. Falaah’s research interests span Human-computer interaction, robust Machine learning, FAccT ML and the social impact of AI (what is also called “AI Anthropology”). At Dell, Falaah holds the title of “youngest patent holder” and has been awarded the coveted “Game Changer Award” for outstanding innovation in the security space. Falaah has a Bachelor’s in Electronics and Communication Engineering, with a minor in Mathematics from Shiv Nadar University, India and will be heading to New York University’s Center for Data Science in Fall 2020 to pursue a Master’s in Data Science.