AIhub.org

Neural network method for enhancing electron microscope images

By Vandana Suresh

Since the early 1930s, electron microscopy has provided unprecedented access to the world of the extraordinarily small, revealing intricate details that are otherwise impossible to discern with conventional light microscopy. But to achieve high resolution over a large sample area, the energy of the electron beams needs to be cranked up, which is costly and detrimental to the sample under observation.

Texas A&M University researchers may have found a new method to improve the quality of low-resolution electron micrographs without compromising the integrity of samples. By training deep neural networks on pairs of images from the same sample but at different physical resolutions, they have found that details in lower-resolution images can be enhanced further.

“Normally, a high-energy electron beam is passed through the sample at locations where greater image resolution is desired. But with our image processing techniques, we can super-resolve an entire image by using just a few smaller-sized, high-resolution images,” said Yu Ding, Professor in the Department of Industrial and Systems Engineering. “This method is less destructive since most parts of the specimen sample needn’t be scanned with high-energy electron beams.”

The researchers published their image processing technique in the Institute of Electrical and Electronics Engineers' Transactions on Image Processing in June.

Unlike light microscopy, which uses photons to illuminate an object, electron microscopy uses a beam of electrons. The energy of the electron beam plays a crucial role in determining the resolution of images: the higher the energy of the electrons, the better the resolution. However, the risk of damaging the specimen also increases, similar to how ultraviolet rays, which are the more energetic relatives of visible light, can damage sensitive materials like the skin.

“There’s always that dilemma for scientists,” said Ding. “To maintain the specimen’s integrity, high-energy electron beams are used sparingly. But if one does not use energetic beams, high-resolution or the ability to see at finer scales becomes limited.”

But there are ways to get high resolution or super resolution using low-resolution images. One method involves using multiple low-resolution images of essentially the same region. Another method learns common patterns between small image patches and uses unrelated high-resolution images to enhance existing low-resolution images.

These methods almost exclusively use natural light images instead of electron micrographs. Hence, they run into problems for super-resolving electron micrographs since the underlying physics for light and electron microscopy is different, Ding explained.

The researchers turned to pairs of low- and high-resolution electron microscopic images for a given sample. Although these types of pairs are not very common in public image databases, they are relatively common in materials science research and medical imaging.

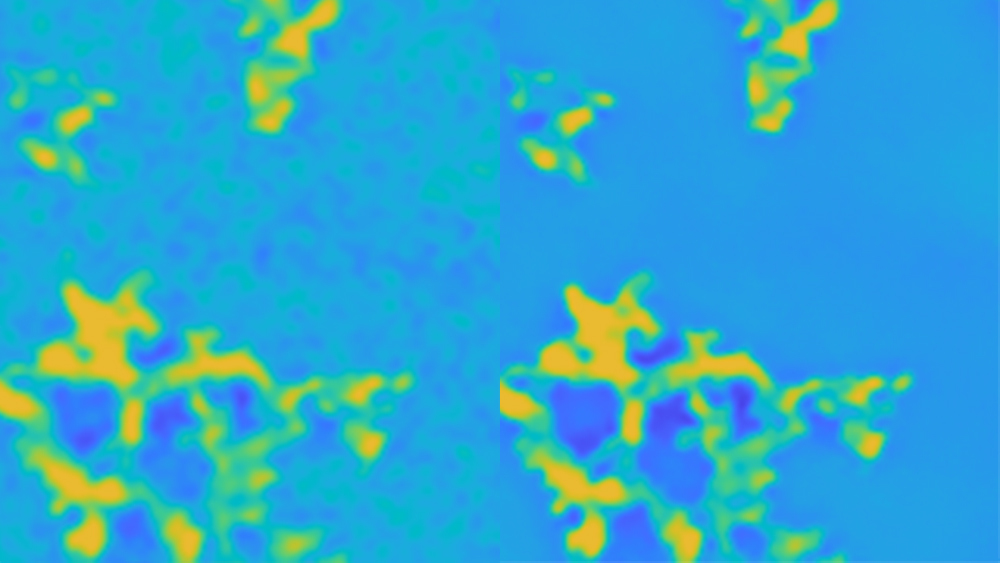

For their experiments, Ding and his team first took a low-resolution image of a specimen and then subjected roughly 25% of the area under observation to high-energy electron beams to get a high-resolution image. The researchers noted that the information in the high-resolution and low-resolution image pair is very tightly correlated. They said that this property can be leveraged even though the available dataset might be small.

For their analyses, Ding and his team used 22 pairs of images of materials infused with nanoparticles. They then divided the high-resolution image and its equivalent area in the low-resolution image into three by three subimages. Next, each subimage pair was used to train deep neural networks. Post-training, their algorithm could recognise image features, such as edges.
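The subimage pairing step described above can be illustrated with a minimal sketch. It assumes that "three by three" means a 3×3 grid of tiles, that the low-resolution region has already been cropped and aligned to the high-resolution image, and that the images are plain 2-D NumPy arrays. The function names, image sizes, and synthetic data are hypothetical and are not taken from the researchers' code.

```python
import numpy as np

def split_into_grid(image, rows=3, cols=3):
    """Split a 2-D array into rows x cols equally sized tiles, in row-major order."""
    h, w = image.shape
    tile_h, tile_w = h // rows, w // cols
    return [
        image[r * tile_h:(r + 1) * tile_h, c * tile_w:(c + 1) * tile_w]
        for r in range(rows)
        for c in range(cols)
    ]

def paired_subimages(low_res_region, high_res, rows=3, cols=3):
    """Pair each low-resolution tile with the high-resolution tile covering the same area."""
    low_tiles = split_into_grid(low_res_region, rows, cols)
    high_tiles = split_into_grid(high_res, rows, cols)
    return list(zip(low_tiles, high_tiles))

# Synthetic stand-ins (assumption): a random "high-resolution" image and a
# 2x-downsampled copy playing the role of the aligned low-resolution region.
rng = np.random.default_rng(0)
high_res = rng.random((96, 96))
low_res_region = high_res.reshape(48, 2, 48, 2).mean(axis=(1, 3))

pairs = paired_subimages(low_res_region, high_res)
print(len(pairs), pairs[0][0].shape, pairs[0][1].shape)
```

Each resulting pair could then serve as one training example for a super-resolution network. Real micrograph pairs would first need registration, since the two scans are taken at different physical resolutions.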

When they tested the trained deep neural network on a new location on the low-resolution image for which there was no high-resolution counterpart, they found that their algorithm could enhance features that were hard to discern by up to 50%.

Although their image processing technique shows a lot of promise, Ding noted that it still requires a lot of computational power. In the near future, his team will direct its efforts toward developing algorithms that are much faster and can run on less powerful computing hardware.

“Our paired image processing technique reveals details in low-resolution images that were not discernable before,” said Ding. “We are all familiar with the magic wand feature on our smartphones. It makes the image clearer. What we aim to do in the long run is to provide the research community a similar convenient tool for enhancing electron micrographs.”

Other contributors to this research include Dr Yanjun Qian from Virginia Commonwealth University, Jiaxi Xu from the industrial and systems engineering department at Texas A&M and Dr Lawrence Drummy from the Air Force Research Laboratory.

This research was funded by the Air Force Office of Scientific Research Dynamic Data and Information Processing program (formerly known as the Dynamic Data Driven Applications System program) grants and the Texas A&M X-Grant program.

Read the arXiv version of the article here.