AIhub.org

Adversarial generation of extreme samples

Modelling extreme events in order to evaluate and mitigate their risk is a fundamental goal in many areas, including extreme weather events, financial crashes, and unexpectedly high demand for online services. To mitigate such risk, it is vital to be able to generate a wide range of scenarios that are both extreme and realistic. Researchers from the National University of Singapore and IIT Bombay have developed an approach to do just that.

In work recently posted on arXiv, Siddharth Bhatia, Arjit Jain, and Bryan Hooi note that stress-testing is an important tool in many applications. This typically involves testing a system on a wide range of extreme but realistic scenarios to check that the system can cope in such situations.

Recently, Generative Adversarial Networks (GANs) and their variants have received tremendous interest, due to their ability to generate highly realistic samples. However, existing GAN-based methods tend to generate typical samples, i.e. samples that are similar to those drawn from the bulk of the distribution. In this work, the authors seek to design deep learning-based models that can generate samples that are realistic and extreme. Their aim is to devise a method that would allow domain experts to generate extreme samples for their desired application.

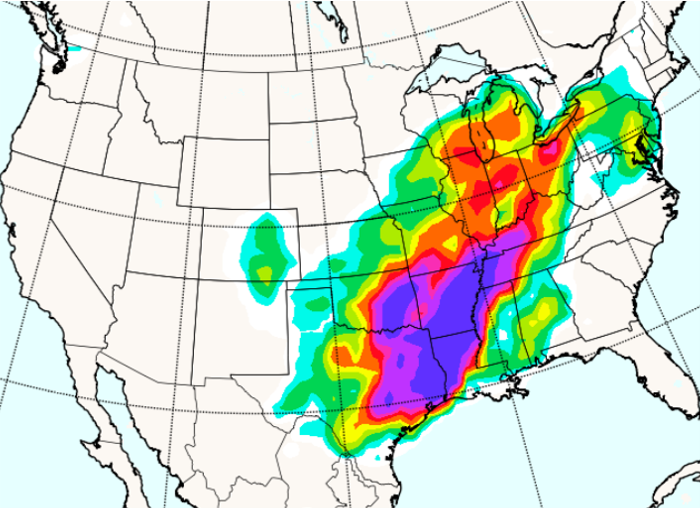

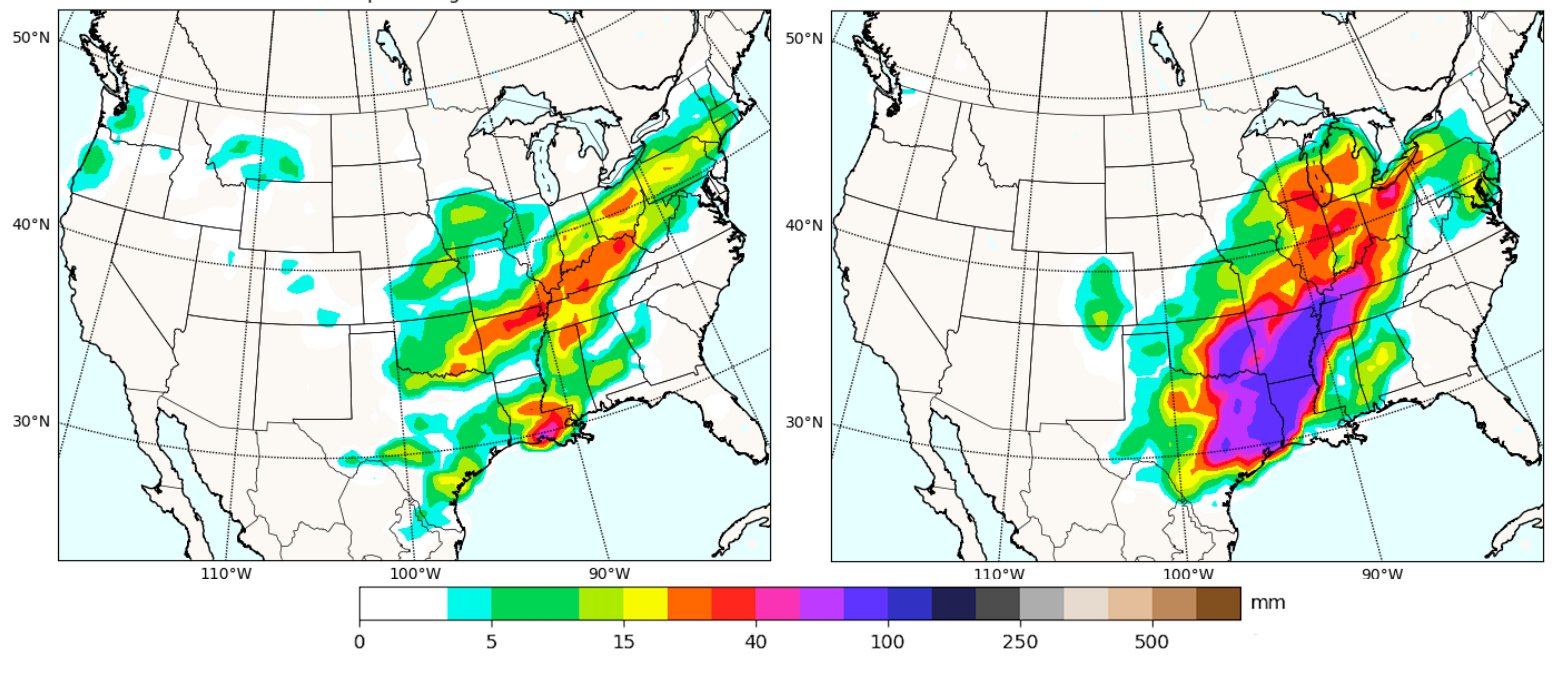

Bhatia, Jain and Hooi state the problem more formally as follows: “Given a data distribution and a criterion to measure extremeness of any sample in this data, can we generate a diverse set of realistic samples with any given extremeness probability?” An example is shown in Figure 1. Here the application is flood resilience, so the authors measure extremeness by total rainfall. Generating extreme samples then means generating rainfall scenarios whose spatial patterns are realistic and resemble rainfall patterns observed in actual floods (right side of Figure 1). Such scenarios could be used to test the resilience of a city’s flood-planning infrastructure.
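To make the formulation concrete, here is a minimal Python sketch, assuming each sample is a small grid of per-cell rainfall totals and that extremeness is measured by total rainfall, as in the flood example. The names `total_rainfall` and `extremeness_probability` are hypothetical and do not come from the authors' code; the extremeness probability of a level is estimated empirically as the fraction of samples at least that extreme.

```python
from typing import List, Sequence

# Hypothetical representation: one rainfall map as rows of per-cell totals.
Grid = List[List[float]]


def total_rainfall(grid: Grid) -> float:
    """Extremeness criterion from the flood example: sum of rainfall over all cells."""
    return sum(sum(row) for row in grid)


def extremeness_probability(level: float, dataset: Sequence[Grid]) -> float:
    """Empirical probability that a sample's total rainfall is at least `level`."""
    scores = [total_rainfall(g) for g in dataset]
    return sum(1 for s in scores if s >= level) / len(scores)


# Toy dataset of four 2x2 rainfall maps (made-up numbers, for illustration only).
maps = [
    [[0.0, 1.0], [2.0, 1.0]],  # total 4
    [[3.0, 3.0], [2.0, 2.0]],  # total 10
    [[1.0, 1.0], [1.0, 1.0]],  # total 4
    [[9.0, 8.0], [7.0, 6.0]],  # total 30
]
print(extremeness_probability(10.0, maps))  # 0.5
```

The generative question is the inverse of this function: given a target probability, produce new, realistic maps whose total rainfall sits at the corresponding level, including levels rarer than anything in the dataset.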

To model extremeness, the authors draw on Extreme Value Theory (EVT), a probabilistic framework designed for modelling the extreme tails of distributions. However, two further aspects make this problem challenging. The first is the lack of training examples: in a moderately sized dataset, “extreme” samples are so rare that it is typically infeasible to train a generative model on these samples alone. The second is that extreme samples must be generated at any user-specified extremeness probability.
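A standard EVT tool for tails is the peaks-over-threshold method, which fits a Generalized Pareto Distribution (GPD) to the amounts by which extremeness scores exceed a high threshold. The sketch below uses a simple method-of-moments fit (chosen for brevity; maximum likelihood is more common in practice) and then inverts the fitted tail to turn a user-specified probability τ into an extremeness level. The function names and the threshold are assumptions for illustration; this is a generic EVT example, not the authors' exact fitting procedure.

```python
import math
import random
import statistics
from typing import Sequence, Tuple


def fit_gpd_moments(scores: Sequence[float], threshold: float) -> Tuple[float, float, float]:
    """Method-of-moments GPD fit to the exceedances over `threshold`.

    Returns (xi, sigma, rate): GPD shape, GPD scale, and the fraction of
    scores above the threshold.
    """
    excess = [s - threshold for s in scores if s > threshold]
    if len(excess) < 2:
        raise ValueError("need at least two exceedances to fit the tail")
    m = statistics.fmean(excess)
    v = statistics.variance(excess)
    ratio = m * m / v                # for a GPD, mean^2 / var = 1 - 2*xi
    xi = 0.5 * (1.0 - ratio)
    sigma = 0.5 * m * (1.0 + ratio)  # from mean = sigma / (1 - xi)
    rate = len(excess) / len(scores)
    return xi, sigma, rate


def tail_probability(level: float, threshold: float, xi: float, sigma: float, rate: float) -> float:
    """P(score > level) for level >= threshold under the fitted tail model."""
    y = level - threshold
    if abs(xi) < 1e-9:  # xi -> 0 limit: exponential tail
        return rate * math.exp(-y / sigma)
    return rate * max(0.0, 1.0 + xi * y / sigma) ** (-1.0 / xi)


def level_for_probability(tau: float, threshold: float, xi: float, sigma: float, rate: float) -> float:
    """Inverse of tail_probability: the score exceeded with probability tau."""
    if not 0.0 < tau <= rate:
        raise ValueError("tau must lie in (0, rate]")
    if abs(xi) < 1e-9:
        return threshold - sigma * math.log(tau / rate)
    return threshold + sigma / xi * ((tau / rate) ** (-xi) - 1.0)


# Toy data: extremeness scores drawn from an exponential distribution.
rng = random.Random(0)
scores = [rng.expovariate(1.0) for _ in range(5000)]
u = 1.0  # tail threshold (an assumption)
xi, sigma, rate = fit_gpd_moments(scores, u)
level = level_for_probability(0.001, u, xi, sigma, rate)
print(f"xi={xi:.3f}, sigma={sigma:.3f}, level for tau=0.001: {level:.2f}")
```

Because the fitted tail extends beyond the observed data, `level_for_probability` can return levels more extreme than any training sample, which is exactly the regime where training data are scarce.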

The authors’ new approach, which they call ExGAN, relies on two key ideas. First, to mitigate the lack of training data in the extreme tails of the data distribution, they use a novel distribution-shifting approach that gradually shifts the data distribution in the direction of increasing extremeness. This allows them to fit a GAN robustly and stably to the tail of the distribution rather than to its bulk. Second, to generate data at any given extremeness probability, they use EVT-based conditional generation: they train a conditional GAN, conditioned on the extremeness statistic. Combined with EVT analysis, and with a record of how much distribution shifting has been performed, this allows new samples to be generated at the given extremeness probability.
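The bookkeeping that links distribution shifting to extremeness probabilities can be sketched under a simplifying assumption: each shifting round keeps only the most extreme fraction `c` of the current data. GAN training on each stage, and any step that regenerates data, are omitted, and the names `shift_distribution`, `shifted_probability` and `c` are hypothetical rather than taken from the authors' code. If the shifted data is the original restricted to its top `retained` fraction, then for levels inside that tail P_orig(E > e) = retained · P_shifted(E > e), which is why the amount of shifting must be tracked.

```python
from typing import Callable, List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def shift_distribution(
    data: Sequence[T],
    score: Callable[[T], float],
    c: float,
    rounds: int,
) -> Tuple[List[List[T]], float]:
    """Keep the most extreme fraction `c` of the data in each round.

    Returns the progressively shifted datasets and the fraction of the
    original data that is retained at the end (the amount of shifting).
    A full pipeline would fine-tune a GAN on each stage; that is omitted.
    """
    if not 0.0 < c < 1.0:
        raise ValueError("c must be in (0, 1)")
    current = sorted(data, key=score, reverse=True)  # most extreme first
    stages = [list(current)]
    for _ in range(rounds):
        keep = max(1, int(len(current) * c))
        current = current[:keep]
        stages.append(list(current))
    return stages, len(current) / len(data)


def shifted_probability(tau: float, retained: float) -> float:
    """Translate a tail probability under the original data into the
    equivalent probability under the shifted data."""
    if not 0.0 < tau <= retained:
        raise ValueError("tau must lie within the retained tail")
    return tau / retained


values = list(range(1, 1001))  # toy extremeness scores 1..1000
stages, retained = shift_distribution(values, score=lambda v: v, c=0.5, rounds=3)
print([len(s) for s in stages])             # [1000, 500, 250, 125]
print(retained)                             # 0.125
print(shifted_probability(0.01, retained))  # 0.08
```

In this toy case, a 1-in-100 event under the original data corresponds to an 8-in-100 event under the shifted data, which is far less rare and therefore far easier for a model fitted to the shifted data to represent.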

To verify their approach, the authors provide a thorough analysis using US precipitation data from 2020. They show that they are able to generate realistic and extreme rainfall patterns. Another important aspect of the work is that ExGAN generates extreme samples in constant time with respect to the extremeness probability, whereas the time taken by the baseline grows as that probability shrinks.
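To see why constant-time generation matters, consider a naive baseline that draws ordinary samples and discards them until one is at least as extreme as required. If each draw lands in the top-τ tail with probability τ, the number of draws follows a geometric distribution with mean 1/τ, so the cost grows as the requested samples become rarer; a conditional generator instead needs a single forward pass per sample. The toy simulation below models each draw's extremeness as a uniform quantile (an assumption), and `draws_until_extreme` is a hypothetical name, not the authors' baseline implementation.

```python
import random
import statistics


def draws_until_extreme(rng: random.Random, tau: float) -> int:
    """Rejection-sampling baseline: count draws from an unconditional sampler
    until one falls in the top-tau tail. Each draw's extremeness is modelled
    as a uniform quantile, so each draw succeeds with probability tau."""
    draws = 0
    while True:
        draws += 1
        if rng.random() < tau:  # this draw landed in the top-tau tail
            return draws


rng = random.Random(42)
for tau in (0.1, 0.01, 0.001):
    mean = statistics.fmean(draws_until_extreme(rng, tau) for _ in range(2000))
    print(f"tau={tau}: ~{mean:.0f} draws per extreme sample (1/tau = {1 / tau:.0f})")
```

The average cost tracks 1/τ, growing tenfold each time τ shrinks tenfold, while a generator conditioned directly on the target extremeness level pays the same cost for every τ.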

About the authors

Siddharth Bhatia is currently working towards a PhD in Computer Science at National University of Singapore (NUS). His research is supported by the President’s Graduate Fellowship and he was recognized as a Young Researcher by the ACM Heidelberg Laureate Forum. He received bachelor’s and master’s degrees from BITS Pilani. His research interests include streaming anomaly detection and scalable machine learning. For more information, please visit his webpage.

Arjit Jain is a senior undergraduate student at Indian Institute of Technology Bombay majoring in Computer Science and Engineering. His research interests are broadly in the areas of computer vision and generative models.

Bryan Hooi is an Assistant Professor in the School of Computing and the Institute of Data Science at National University of Singapore. He received his PhD in Machine Learning from Carnegie Mellon University in 2019. His research interests include scalable machine learning and graph mining. For more information, please visit his webpage.

Read the research in full

ExGAN: Adversarial Generation of Extreme Samples, Siddharth Bhatia, Arjit Jain, Bryan Hooi (2020)

The authors have made their code and datasets publicly available here.