AIhub.org

New sparse RNN architecture applied to autonomous vehicle control

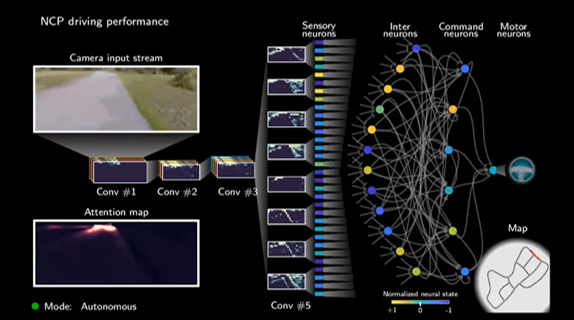

Researchers from TU Wien, IST Austria and MIT have developed a recurrent neural network (RNN) method for specific tasks within an autonomous vehicle control system. The architecture is notable for using only a small number of neurons. This smaller scale allows for greater generalization and interpretability than systems containing orders of magnitude more neurons.

The researchers found that a single algorithm with 19 control neurons, connecting 32 encapsulated input features to outputs by 253 synapses, learnt to map high-dimensional inputs into steering commands. This was achieved using a liquid time-constant (LTC) RNN, a concept that they introduced in 2018. LTC RNNs are a subclass of continuous-time RNNs in which each neuron's time-constant is not fixed but varies with the input it receives.
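To give a feel for what a "varying time-constant" means, here is a minimal sketch of a single conductance-based neuron of the kind used in LTC-style models. It assumes a leaky membrane whose synaptic conductances are gated by a sigmoid of the presynaptic potential. All function names, parameter names and values are illustrative assumptions, and the exact parameterization in the published work may differ.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def ltc_step(v, pre_v, w, e_rev, c_m=1.0, g_leak=1.0, v_leak=0.0, dt=0.01):
    """One explicit Euler step of an (assumed) LTC-style neuron.

    v      : current potential of the neuron
    pre_v  : potentials of the presynaptic neurons
    w      : maximum synaptic conductances (hypothetical values)
    e_rev  : synaptic reversal potentials (hypothetical values)
    """
    # Each synapse contributes a current gated by presynaptic activity.
    i_syn = sum(
        w_j * sigmoid(p_j) * (e_j - v)
        for w_j, p_j, e_j in zip(w, pre_v, e_rev)
    )
    dv = (g_leak * (v_leak - v) + i_syn) / c_m
    return v + dt * dv


def effective_time_constant(pre_v, w, c_m=1.0, g_leak=1.0):
    """Time constant = capacitance / total conductance.

    The total conductance depends on presynaptic activity, so the
    time constant changes with the input instead of being fixed.
    """
    g_total = g_leak + sum(w_j * sigmoid(p_j) for w_j, p_j in zip(w, pre_v))
    return c_m / g_total


weights = [0.5, 1.5]
tau_quiet = effective_time_constant([-5.0, -5.0], weights)   # weak input
tau_active = effective_time_constant([5.0, 5.0], weights)    # strong input
print(f"tau with quiet inputs:  {tau_quiet:.3f}")
print(f"tau with active inputs: {tau_active:.3f}")
```

In this sketch, stronger presynaptic activity opens more conductance and shortens the time constant, so the neuron responds faster when its inputs are active.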

“The processing of the signals within the individual cells follows different mathematical principles than previous deep learning models,” noted Ramin Hasani (TU Wien and MIT CSAIL). “Also, our networks are highly sparse – this means that not every cell is connected to every other cell. This also makes the network simpler.”
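The sparsity mentioned in the quote can be pictured as a connectivity mask: only the synapses that exist in the mask contribute to a neuron's input. The sketch below is an assumption-laden toy, not the researchers' wiring. It picks connections at random for a small hypothetical network, whereas the published architecture uses its own structured wiring scheme.

```python
import random


def random_sparse_mask(n_pre, n_post, n_synapses, seed=0):
    """Boolean mask with exactly n_synapses connections (chosen at random)."""
    rng = random.Random(seed)
    all_pairs = [(i, j) for i in range(n_pre) for j in range(n_post)]
    chosen = set(rng.sample(all_pairs, n_synapses))
    return [[(i, j) in chosen for j in range(n_post)] for i in range(n_pre)]


def masked_input(pre_activity, weights, mask, j):
    """Weighted input to neuron j, counting only synapses present in the mask."""
    return sum(
        a * weights[i][j]
        for i, a in enumerate(pre_activity)
        if mask[i][j]
    )


# Toy sizes, chosen only for illustration.
n_pre, n_post, n_synapses = 6, 4, 8
mask = random_sparse_mask(n_pre, n_post, n_synapses)
weights = [[1.0] * n_post for _ in range(n_pre)]

n_connected = sum(sum(row) for row in mask)
density = n_connected / (n_pre * n_post)
print(f"{n_connected} of {n_pre * n_post} possible synapses used "
      f"(density {density:.2f})")
```

With all weights and activities set to one, `masked_input` simply counts how many incoming synapses a neuron has, which makes the effect of the mask easy to inspect.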

“Today, deep learning models with many millions of parameters are often used for learning complex tasks such as autonomous driving,” said Mathias Lechner (IST Austria). “However, our new approach enables us to reduce the size of the networks by two orders of magnitude. Our systems only use 75,000 trainable parameters.”

The team has also put together a short video showing the algorithm in action.

The system works as follows. First, the camera input is processed by a convolutional neural network (CNN), which decides which parts of the camera image are interesting and important. The CNN then passes signals to the crucial part of the system: the RNN-based “control system” described above, which steers the vehicle.
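The data flow of this two-stage design can be sketched in a few lines. The example below is a deliberately simplified, untrained stand-in: `extract_features` replaces the CNN with plain averaging, and `RecurrentController` is an ordinary dense tanh RNN, not the sparse LTC network used by the researchers. Only the sizes (32 features, 19 neurons) come from the article; every name and weight here is a hypothetical placeholder.

```python
import math
import random

N_FEATURES = 32   # input features passed to the controller
N_NEURONS = 19    # control neurons


def extract_features(frame, n_features=N_FEATURES):
    """Stand-in for the CNN: average equal-sized chunks of a flat pixel list."""
    chunk = len(frame) // n_features
    return [
        sum(frame[k * chunk:(k + 1) * chunk]) / chunk
        for k in range(n_features)
    ]


class RecurrentController:
    """Toy recurrent controller that keeps a state across camera frames."""

    def __init__(self, n_in, n_neurons, seed=0):
        rng = random.Random(seed)
        # Random, untrained weights (placeholders for learnt parameters).
        self.w_in = [[rng.uniform(-0.1, 0.1) for _ in range(n_neurons)]
                     for _ in range(n_in)]
        self.w_rec = [[rng.uniform(-0.1, 0.1) for _ in range(n_neurons)]
                      for _ in range(n_neurons)]
        self.state = [0.0] * n_neurons

    def step(self, features):
        new_state = []
        for j in range(len(self.state)):
            drive = sum(f * self.w_in[i][j] for i, f in enumerate(features))
            drive += sum(s * self.w_rec[i][j] for i, s in enumerate(self.state))
            new_state.append(math.tanh(drive))
        self.state = new_state
        # Treat the last neuron as the steering output, in the range (-1, 1).
        return self.state[-1]


rng = random.Random(1)
controller = RecurrentController(N_FEATURES, N_NEURONS)
steering = []
for _ in range(5):
    frame = [rng.random() for _ in range(256)]   # fake camera frame
    steering.append(controller.step(extract_features(frame)))
print(steering)
```

Because the controller carries its state from one frame to the next, each steering command depends on the recent history of frames as well as the current one, which is the point of using a recurrent network for control.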

Both parts of the system can be trained simultaneously. The training was carried out by feeding many hours of traffic videos into the network, together with information on how to steer the car in a given situation. Through this training, the system learnt the appropriate steering reaction depending on a particular situation.

“Our model allows us to investigate what the network focuses its attention on while driving. Our networks focus on very specific parts of the camera picture: the curbside and the horizon. This behaviour is highly desirable, and it is unique among artificial intelligence systems,” said Ramin Hasani. “Moreover, we saw that the role of every single cell at any driving decision can be identified. We can understand the function of individual cells and their behaviour. Achieving this degree of interpretability is impossible for larger deep learning models.”

Find out more

The published article:

Neural circuit policies enabling auditable autonomy, Mathias Lechner, Ramin Hasani, Alexander Amini, Thomas A. Henzinger, Daniela Rus & Radu Grosu.

Google Colab notebook showing how to stack neural circuit policies (NCPs) with other layers

arXiv article introducing the notion of liquid time-constant RNNs:

Liquid time-constant recurrent neural networks as universal approximators, Ramin M. Hasani, Mathias Lechner, Alexander Amini, Daniela Rus, and Radu Grosu.