AIhub.org

RoboCup Humanoid League: Interview with Maike Paetzel-Prüsmann

As part of RoboCup 2021, events in the Humanoid League will be taking place virtually from 24-27 June. In the Humanoid League, autonomous robots with a human-like body plan and human-like senses play soccer against each other.

We spoke to Maike Paetzel-Prüsmann, who serves on the executive and organising committees, about the league, how the competition usually works in the physical environment, and the changes they’ve made to the event so that it can be held virtually.

Could you tell us a bit about the Humanoid League?

In the Humanoid League there are very specific rules regarding what the robots need to look like. To make them as human-like as possible, there are a lot of constraints around how they look and how they can sense their environment. They have roughly human-like body proportions, they need to walk on two legs, and they are only allowed to use human-like sensors. For example, they are only permitted to use up to two cameras to sense their environment. They can’t use LIDARs, laser scanners or other sensors like that.

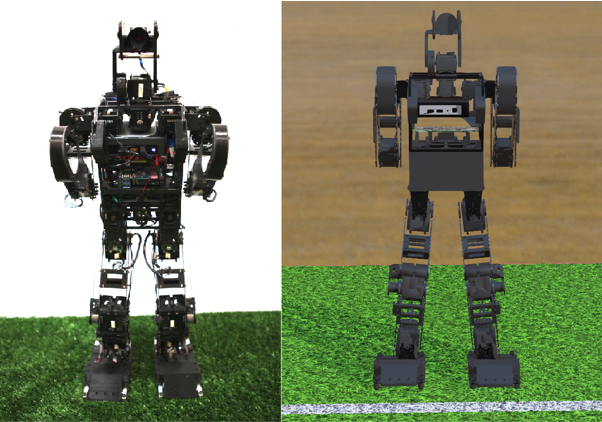

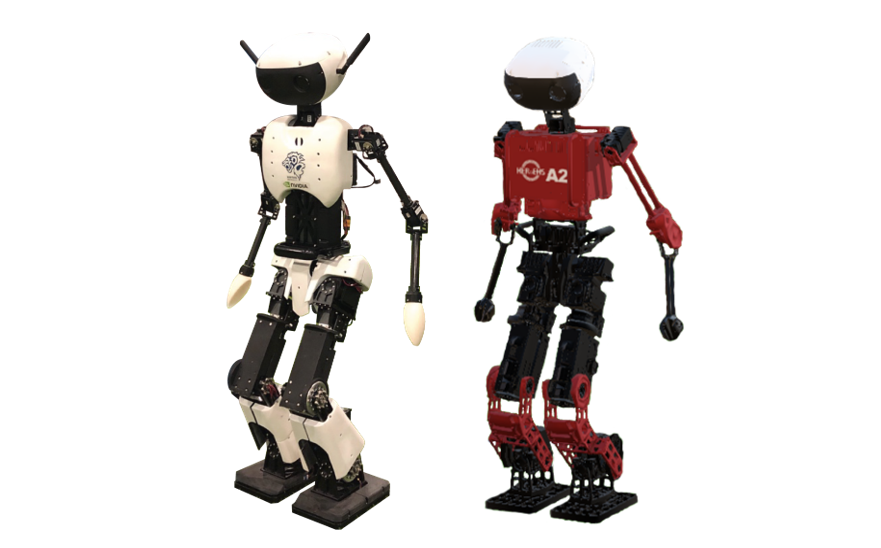

We are a hardware league, which means that teams actually develop the robots themselves. Almost all of them have completely custom-built robots. Some of them start from a commercial robot platform and custom build things around it, but I would say that all the teams in our league actually do some hardware things.

The hardware aspect is the main part of the league, and that makes it particularly challenging to go virtual. For the physical competitions, the teams usually arrive with their robots and still have a substantial amount of work to do on them. For example, they have to calibrate them to work in the environment where the competition is held: to deal with the specific light conditions, the specific artificial turf, and the ball. If they’ve arrived by plane they also need to reassemble the robots, which typically have to be disassembled for the flight. All of this is a huge part of a normal RoboCup experience.

When the competition was held physically in 2019, how many teams took part?

In 2019 we had three sub-leagues for different size classes. The smallest are the kid size robots, and in that league we had 16 teams. The next class is teen size, which are medium size robots, where we had eight teams. For the adult size we had five teams.

Adult size robots are the ones that are actually as tall as we are. In this class they only play two against two on the field (compared to four against four for the kid size). As these robots are still fairly unstable there is always a person standing behind the robot to catch it in case it falls. At this stage, the robots would not survive a fall.

So, in total we have around 25-30 teams, with some teams competing in multiple size classes.

In 2020, actually before Covid, we decided that we would remove the teen size as a league. We had very few teams that only competed in this size class. Most of them also competed in either the kid size or adult size leagues too. So, now we will only have the two size classes: kid size and adult size.

How long do the matches last?

For the normal round-robin matches there are two halves of 10 minutes each, with a five-minute half-time break. For the knock-out stages we can also add two extra halves of extended time (five minutes each) and penalty shootouts. So, the round-robin matches last around 25 minutes in total, and the knock-out matches can last up to 45 minutes in total.
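The arithmetic behind those totals can be checked with a short sketch. The durations come from the answer above; the function names are made up for illustration, and the assumption that the remaining time up to 45 minutes covers breaks and penalty shootouts is ours, not something stated in the interview.

```python
# Durations in minutes, as quoted in the interview.
HALF = 10             # one regular half
HALF_TIME_BREAK = 5   # break between the two regular halves
EXTRA_HALF = 5        # one half of extended time (knock-out only)
KNOCKOUT_MAX = 45     # upper bound quoted for a knock-out match


def round_robin_minutes() -> int:
    """Two regular halves plus the half-time break."""
    return 2 * HALF + HALF_TIME_BREAK


def knockout_minutes_before_penalties() -> int:
    """A round-robin match plus two halves of extended time."""
    return round_robin_minutes() + 2 * EXTRA_HALF


def remaining_minutes_for_penalties() -> int:
    """Assumption: whatever is left of the 45 minutes goes to breaks and penalties."""
    return KNOCKOUT_MAX - knockout_minutes_before_penalties()


if __name__ == "__main__":
    print(round_robin_minutes())                # 25
    print(knockout_minutes_before_penalties())  # 35
    print(remaining_minutes_for_penalties())    # 10
```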

I’m interested in the positioning of the cameras. You said that teams can have up to two cameras. Do they have to put them where a person’s eyes would be or can they go in other places?

They have to be in the head, and we have strict requirements on the field of view to ensure that it is human-like. So, you couldn’t put one at the front and one at the back, or one on top of the other. There is a maximum field of view they can have in both dimensions.

There are actually very few teams that use two cameras and dual vision – most use just one camera and look around more. What we saw with the few teams that tried to use two cameras is that the cameras need to be calibrated very well to each other. When the robot falls, things can shift a little, and just a few millimetres can really mess up the calculations. So what they ended up doing was just disregarding the second camera and basically only playing with one.

Is there quite a difference between the teams in terms of the hardware, software and methodologies that they use?

Yes, I would say there is. The robots look very different. Some of the hardware approaches are very different, especially in the adult size where there are a lot of difficulties involved in meeting the constraints, such as having such a heavy robot and being able to move it, and stability. We have seen some quite different, and some very novel, approaches used to try and solve these issues.

In kid size we see a little bit less variety, but there are still some things that vary quite a bit between teams. For example, how many motors they use, how they stabilize the walking, how they use the arms to stabilize the walking.

Some things stay almost constant – for example, most teams try to max out the foot size, because that is something that obviously gives stability. When we moved to artificial grass, where it’s particularly difficult to stabilize, a lot of teams ended up using cleats under the feet. It was introduced by one or two teams initially, then a lot of teams saw that it gave an advantage, so they also converged to using this.

When did you get involved with the league?

Personally, I got involved in 2010 when I was still a bachelor’s student. My team in Hamburg was in the Standard Platform League (SPL). Part of the team, and the main research group that was involved with us, moved, so we were left without a full team. We decided to switch leagues and we did this as a team of only students. Our first world championship was in 2012 in Mexico.

You must have seen a lot of changes in terms of the progression of the league.

Definitely. When I started you still had six poles around the field that had different colour codes to make it easier to localise on the field, there were yellow and blue goals so you knew which side you were playing, and you had a bright orange ball. There was also just a normal green carpet. Since then we’ve seen a lot of changes, with artificial grass, no localisation poles, the same coloured (white) goals, and a black and white ball. There are a lot of things that look similar and are difficult to resolve. We saw a lot of own goals in the first year that this was introduced.

We had a lot of problems resolving the symmetry in the first year. In one match, our robot was actually going towards the wrong goal. It then had some kind of software failure right in front of the goal. When our software stack crashes the robot just sits down and doesn’t do anything. It was 10cm from our own goal with the ball and just sat down – that was a lucky coincidence!

So, what happens in a game when the software crashes?

There is something called “incapable robot”, so if your robot doesn’t get up for 20 seconds, or has another failure that prevents it from playing, then the referee asks you to remove it from the field. It gets penalised and has to restart from the sideline.

I imagine it’s quite different moving to a virtual environment. How is this year’s competition going to work?

That was a huge challenge that we faced when we found out there was going to be a virtual competition. We already have two simulation leagues in RoboCup and we didn’t want to replace those, necessarily. In the SPL, where they all play with the same robot, they can have teams in their own lab playing with two different software versions. This is something that doesn’t work for us. Also, a lot of the teams are currently not allowed into their labs, so they can’t work on their robots.

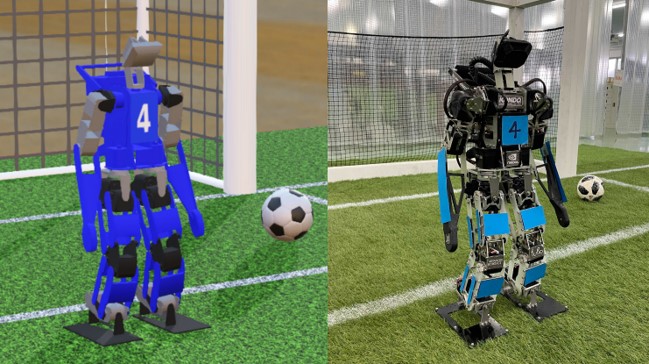

We knew it wasn’t going to be possible to go for anything that involves hardware, so the only option was simulation. We wanted to keep the aspect of having the different robots and having the opportunities and challenges that are introduced by having those different types of robots. We wanted a 3D simulation with different robots and a greater possibility to transfer from simulation to reality. We believe that our league would benefit if we had this simulation system. We want to push the simulation-to-real transfer further.

We got in touch with two companies that produce simulators. One of them is Cyberbotics, who make the Webots simulator, and this is the one we are using. They have supplied the simulator, and software has been written that actually does all the refereeing automatically.

This is all cloud based. In kid size, for example, where we play four versus four robots, all robots have their own virtual machines. Teams send in Docker images and their code is run on these virtual machines. We have four Docker images for each team, and then we have one virtual machine that runs the simulator. The refereeing and the game controller (which is basically what keeps track of how many goals are scored by each team, etc.) run on one machine. The teams communicate over the virtual network and get to send motor commands and sensor requests to the simulator and, with this information, they can do their calculations and carry out the games as they would in a normal environment.
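As a rough illustration of that layout, the sketch below enumerates the virtual machines for one kid size match: one per robot, plus one for the simulator. The class, function and machine names are hypothetical and are not taken from the league's tooling. The sketch leaves out the referee and game controller, because the interview does not say precisely which machine hosts them.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VirtualMachine:
    name: str
    role: str  # "robot" or "simulator" (labels invented for this sketch)


def plan_match(team_a: str, team_b: str, robots_per_team: int = 4) -> list[VirtualMachine]:
    """List the machines needed for one match under the described set-up."""
    # One machine runs the simulator for the whole match.
    machines = [VirtualMachine("simulator", "simulator")]
    # Each robot gets its own machine, which runs that team's Docker image.
    for team in (team_a, team_b):
        for index in range(1, robots_per_team + 1):
            machines.append(VirtualMachine(f"{team}-robot-{index}", "robot"))
    return machines


if __name__ == "__main__":
    for machine in plan_match("team-a", "team-b"):
        print(machine.role, machine.name)
```

With four robots per team this gives eight robot machines and one simulator machine per match.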

Presumably, the robots in the simulator are similar to the real robots as in they have the same “sense” restrictions, and they can’t know exactly where all the other players are on the field through other means?

This is one of the important things, because normally in a simulator you can request all kinds of information from the simulated world, like “where is the ball”? Obviously, that is something we don’t want, so that’s why we provided this controller that only gives very constrained information. We wanted this competition to feel as close to the real competition as possible.
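The idea of a controller that only hands out constrained information can be sketched as an allow-list in front of the simulated world. This is not the Webots API or the league's actual controller. The class name, the sensor names and the contents of the allow-list are all assumptions made for illustration.

```python
# Hypothetical allow-list; the interview only names cameras explicitly.
ALLOWED_SENSORS = frozenset({"camera", "imu", "joint_position"})


class ConstrainedSensorInterface:
    """Expose only allowed sensor readings from a simulated world state."""

    def __init__(self, world_state, allowed=ALLOWED_SENSORS):
        self._world_state = dict(world_state)
        self._allowed = frozenset(allowed)

    def read(self, sensor_name):
        # Ground-truth queries such as "where is the ball" are rejected.
        if sensor_name not in self._allowed:
            raise PermissionError(f"'{sensor_name}' is not an allowed sensor")
        return self._world_state[sensor_name]


if __name__ == "__main__":
    world = ConstrainedSensorInterface(
        {"camera": "frame-0001", "ball_position": (1.2, 0.4)}
    )
    print(world.read("camera"))
    try:
        world.read("ball_position")
    except PermissionError as error:
        print("rejected:", error)
```

A team's code would then have to find the ball in the camera image, as it does on a real field.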

The way it works is that the simulator runs the simulation and we get a file that we can replay and re-render. It shows what has been done in the game in real time. The simulation, with all the computational constraints, runs about ten times slower than real time. When we show it, we have the 10-minute half as an actual 10 minutes. We will be streaming those on Twitch (Field A and Field B). That way the teams can watch how their robots did and this will be the first time they see what happens. The teams also get all the log files afterwards so they can run analysis.
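To put the slowdown in perspective, the sketch below converts game time into approximate compute time. The factor of ten is the rough figure quoted above. The function name is made up, and the calculation assumes the slowdown is constant and counts playing time only.

```python
SLOWDOWN_FACTOR = 10  # "about ten times slower than real time"


def compute_minutes(game_minutes: float, slowdown: float = SLOWDOWN_FACTOR) -> float:
    """Approximate wall-clock minutes needed to simulate the given game time."""
    return game_minutes * slowdown


if __name__ == "__main__":
    print(compute_minutes(10))  # one 10-minute half: about 100 minutes
    print(compute_minutes(20))  # both regular halves: about 200 minutes
```

This gap is why the games are replayed from a recording rather than streamed while they are being simulated.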

We have four competition days and the teams are allowed to update their software throughout. The teams then actually get the competition feeling. They can make updates and improve throughout the event. We also wanted to keep the teams engaged and create some excitement around the competition.

This year we introduced a mock competition for teams to try out the set-up, and to make sure they could connect to the virtual simulator. We’ve seen that teams are treating this as a normal tournament, working day and night trying to update and improve their robots.

We’ve seen a lot of exchanges between teams, helping each other to solve problems. What I think is one of the greatest things about RoboCup is this exchange between teams and this idea of trying to evolve research together and try to make a great tournament together. That is something we are seeing again this year.

Do you think that the league will stick with any of the virtual elements once you are able to return to the physical competition?

We haven’t figured out exactly how it will work but we definitely want to keep the virtual element. There are two options. One is to build a new league with the 3D Simulation League, which is using a different simulator but is interested in converging.

Another option is to have an intermediate tournament. In recent years, teams have organised local get-togethers in autumn or spring to test their software between the main RoboCup tournaments. We could have a virtual autumn tournament for teams to get together more globally.

The goal for the Humanoid League is that robots could win the human world championship by 2050. Is that possible?

It’s hard to say because, when you watch the games now they are very far away from that, especially in adult size. However, in the 10 years I’ve been involved with RoboCup, the progress in research and development has been tremendous. If we keep up this level of development I would think that it would be possible to at least attempt the game. Maybe we wouldn’t win it, but we could be at the stage where we could at least play against humans in 2050. That would be a huge accomplishment.

If you had gone back 10 years and told people that the robots would walk on artificial grass, without localisation aids, working under natural light conditions, people would have said that’s not achievable in 10 years.

Every year we develop the rules and try to really challenge teams. We want to stay open to welcome new teams and, at the same time, challenge existing teams, giving them the chance to advance their research every year.

Because the league is so visual, you can really see the progress: just compare today’s games with the games from 10 years ago. That’s great for the public. Often this kind of development isn’t so visual, but here we can really see the differences.

Find out more

Humanoid League website

RoboCup 2021 website

The games will be streamed on Twitch TV, using two channels:

https://www.twitch.tv/robocuphumanoidfielda

https://www.twitch.tv/robocuphumanoidfieldb

There will be commentary to accompany the games and teams are invited to commentate on their own games too. This will help spectators make sense of what is going on.

The schedule is here.
