AIhub.org

The problem of complete, irreversible data anonymisation

By Álvaro Moreton and Ariadna Jaramillo

The concept of data anonymisation

Broadly speaking, data anonymisation can be defined as the process of irreversibly removing identifiable (confidential or private) information about natural and legal persons[1] from raw datasets (e.g., text databases or audio recordings). But there is more to anonymisation than meets the eye, especially when it comes to actual humans!

The General Data Protection Regulation (GDPR) does not explicitly define anonymisation. However, it states in Recital 26 that “[the] principles of data protection should apply to any information concerning an identified or identifiable natural person. […] The principles of data protection should therefore not apply to anonymous information, namely information which does not relate to an identified or identifiable natural person or to personal data rendered anonymous in such a manner that the data subject is no longer identifiable”.

The personal data to which Recital 26 refers are defined in GDPR Article 4(1). Simply put, they include unique identifiers (e.g., names or national identification numbers) that allow for the direct identification of individuals, as well as online identifiers provided by the device, app, tool or protocol used by the data subject (e.g., IP addresses, cookie identifiers or radio frequency identification tags), as indicated in Recital 30.

But ‘identifiable’ does not end there, as a person can also be identified indirectly by linking other publicly available or privately acquired information about them (e.g., place of birth, race, religion, physical attributes, activities, employment information, medical information or education information). This type of information is known as quasi-identifiers, and it makes anonymisation an extremely delicate and complicated task.

For example, think of a university that has decided to carry out a study on the profiles of its international students. The data provided by students include, among other things, place of birth and major. If only one Asian student is taking the philosophy major, it would be very easy to re-identify this individual if only the direct identifiers were removed or masked and the quasi-identifiers were left unaddressed.

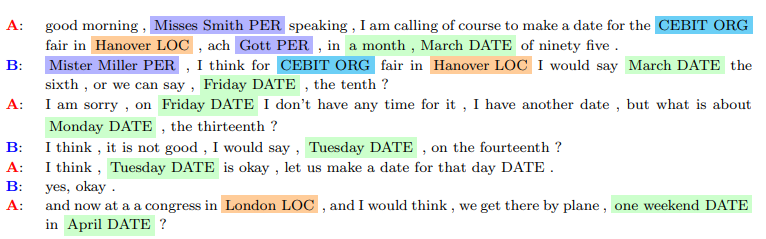

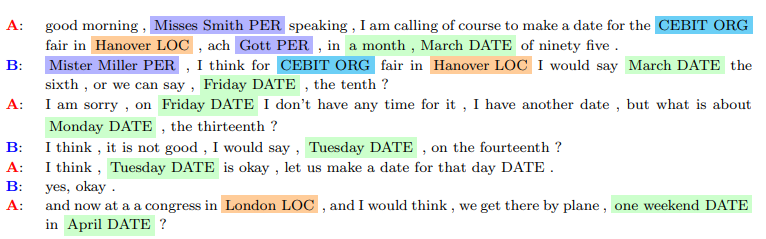
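The university example can be made concrete with a small sketch. It assumes a toy table with hypothetical field names (`name`, `student_id`, `place_of_birth`, `major`) and made-up records. The code drops the direct identifiers only, then counts how many records share each combination of quasi-identifiers. Any combination that occurs exactly once still points to a single student; the size of the smallest such group is the "k" of k-anonymity.

```python
from collections import Counter

# Toy, made-up records; all field names are hypothetical.
students = [
    {"name": "A", "student_id": "s1", "place_of_birth": "Europe", "major": "Philosophy"},
    {"name": "B", "student_id": "s2", "place_of_birth": "Europe", "major": "Philosophy"},
    {"name": "C", "student_id": "s3", "place_of_birth": "Asia", "major": "Philosophy"},
    {"name": "D", "student_id": "s4", "place_of_birth": "Asia", "major": "Economics"},
    {"name": "E", "student_id": "s5", "place_of_birth": "Asia", "major": "Economics"},
]

DIRECT_IDENTIFIERS = {"name", "student_id"}
QUASI_IDENTIFIERS = ("place_of_birth", "major")


def drop_direct_identifiers(records):
    """Remove direct identifiers only; quasi-identifiers are left untouched."""
    return [{k: v for k, v in r.items() if k not in DIRECT_IDENTIFIERS} for r in records]


def group_sizes(records, quasi_identifiers):
    """Count how many records share each combination of quasi-identifier values."""
    return Counter(tuple(r[q] for q in quasi_identifiers) for r in records)


def unique_combinations(records, quasi_identifiers):
    """Return the combinations held by exactly one record (i.e. re-identifiable)."""
    return [combo for combo, n in group_sizes(records, quasi_identifiers).items() if n == 1]


def k_anonymity(records, quasi_identifiers):
    """k is the size of the smallest group sharing the same quasi-identifiers."""
    return min(group_sizes(records, quasi_identifiers).values())


released = drop_direct_identifiers(students)
print(unique_combinations(released, QUASI_IDENTIFIERS))  # [('Asia', 'Philosophy')]
print(k_anonymity(released, QUASI_IDENTIFIERS))  # 1
```

A k of 1 means at least one record is unique on its quasi-identifiers, so removing names and student IDs alone has not protected that student.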

This process of de-identification[2], removing identifiers and quasi-identifiers, does not necessarily destroy the value or utility of the data. On the contrary, it is a convenient way to share data containing personal and/or private information. It enables the monetisation of data (e.g., by selling or trading anonymised datasets on customer habits), reporting to third parties (e.g., government agencies when required by law), the analysis of open data (e.g., university publications), etc. Along those lines, COMPRISE uses large-scale anonymised speech and text data to train speech-to-text and spoken language understanding models for a variety of languages and dialogue domains without compromising the users’ privacy.
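Footnote [2] mentions masking identifiers and generalising quasi-identifiers. A minimal sketch of both, assuming a hypothetical record with `name`, `age`, `post_code` and `diagnosis` fields and generalisation rules chosen purely for illustration (ten-year age bands, post codes truncated to their first three characters):

```python
def mask(_value: str) -> str:
    """Replace a direct identifier with a fixed placeholder."""
    return "***"


def generalise_age(age: int, band: int = 10) -> str:
    """Map an exact age to a band, e.g. 34 -> '30-39'."""
    low = (age // band) * band
    return f"{low}-{low + band - 1}"


def generalise_post_code(post_code: str, keep: int = 3) -> str:
    """Keep only the first `keep` characters of a post code and star out the rest."""
    return post_code[:keep] + "*" * max(len(post_code) - keep, 0)


def de_identify(record: dict) -> dict:
    """Mask the direct identifier, generalise the quasi-identifiers, keep the attribute of interest."""
    return {
        "name": mask(record["name"]),
        "age": generalise_age(record["age"]),
        "post_code": generalise_post_code(record["post_code"]),
        "diagnosis": record["diagnosis"],  # kept so the data remains useful for analysis
    }


print(de_identify({"name": "Jane Doe", "age": 34, "post_code": "28013", "diagnosis": "asthma"}))
# {'name': '***', 'age': '30-39', 'post_code': '280**', 'diagnosis': 'asthma'}
```

The band width and the number of post code characters kept are arbitrary here: coarser generalisation lowers the re-identification risk but also removes precision that analysts might need, which is the utility trade-off discussed above.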

Risks of anonymisation

Unlike pseudonymisation (GDPR Article 4(5)), where the data can still be attributed to a specific data subject by using additional information that is kept separately, data anonymisation must be irreversible.
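To illustrate why pseudonymised data remains personal data, here is a minimal sketch of one common pseudonymisation technique, keyed hashing with HMAC-SHA256 from Python's standard library. The secret key (and any lookup table built with it) plays the role of the "additional information" that must be kept separately: whoever holds it can link a pseudonym back to the person. The identifiers below are made up, and this is only one of several possible techniques.

```python
import hashlib
import hmac
import secrets

# Secret key: the "additional information" that must be kept separately.
SECRET_KEY = secrets.token_bytes(32)


def pseudonymise(identifier: str, key: bytes = SECRET_KEY) -> str:
    """Derive a stable pseudonym from an identifier with HMAC-SHA256."""
    return hmac.new(key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


# Made-up national identification numbers.
people = ["12345678A", "87654321B"]

# The key holder can rebuild this table at any time, so the mapping is
# reversible for them: the pseudonymised data is still personal data.
lookup = {pseudonymise(p): p for p in people}

pseudonym = pseudonymise("12345678A")
print(lookup[pseudonym])  # 12345678A
```

Anonymisation, by contrast, would require that no such key or table exists and that the remaining attributes cannot be used to re-identify the person by other means.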

In this regard, Recital 26 of the GDPR states that “[to] determine whether a natural person is identifiable, account should be taken of all the means reasonably likely to be used, such as singling out, either by the controller or by another person to identify the natural person directly or indirectly. To ascertain whether means are reasonably likely to be used to identify the natural person, account should be taken of all objective factors, such as the costs of and the amount of time required for identification, taking into consideration the available technology at the time of the processing and technological developments”.

In other words, anonymisation does not need to be infallible, but it must be robust enough to withstand all means of re-identification reasonably likely to be used. However, achieving anonymisation under these conditions remains a tricky task, as explained below.

It used to be common practice for research centres, companies and institutions to remove unique identifiers from datasets but keep quasi-identifiers (e.g., the 1990s GIC medical records, the 2006 AOL search queries and the 2006 Netflix Prize data). This resulted in datasets from which the names and social security numbers of the data subjects had been removed, but in which their professions, ethnicity, post code, gender, place of birth, etc. remained in the clear.

This practice would not be such a problem if the cost and time required to re-identify the data were prohibitive, but this is no longer the case, as the three organisations above (GIC, AOL and Netflix) found out at their own expense.

The availability of public data is a recurring issue when it comes to achieving permanent, irreversible anonymisation. The average user shares an astonishing amount of personal data, most of the time without being aware of how it can be used by third parties. The scenario worsens for high-profile individuals, whose personal data is even more exposed to the public eye (e.g., a politician whose every movement is reported in newspapers), or for individuals with rare attributes (e.g., being a quintuplet or working in a highly specialised field).

It is the availability of data (i.e., identifiers and quasi-identifiers) from multiple sources such as newspapers, social networks and electoral registers, and the possibility of combining that data with anonymised (de-identified) datasets, that increase the risk of re-identification.
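At its core, combining such sources amounts to a join on shared quasi-identifiers. The sketch below uses two made-up tables with hypothetical field names: a "de-identified" medical release that kept post code, date of birth and gender, and a public register (for instance an electoral roll) that lists names next to the same attributes. Only unambiguous matches are reported as re-identifications.

```python
# Made-up "de-identified" release: names removed, quasi-identifiers kept.
medical_release = [
    {"post_code": "12345", "birth_date": "1945-07-31", "gender": "M", "diagnosis": "hypertension"},
    {"post_code": "12346", "birth_date": "1980-01-15", "gender": "F", "diagnosis": "asthma"},
]

# Made-up public register listing names alongside the same attributes.
public_register = [
    {"name": "John Smith", "post_code": "12345", "birth_date": "1945-07-31", "gender": "M"},
    {"name": "Mary Jones", "post_code": "12347", "birth_date": "1980-01-15", "gender": "F"},
]

QUASI_IDENTIFIERS = ("post_code", "birth_date", "gender")


def qi_key(record):
    """The tuple of quasi-identifier values used as the join key."""
    return tuple(record[q] for q in QUASI_IDENTIFIERS)


def linkage_attack(released, register):
    """Join both tables on the quasi-identifiers and keep unambiguous matches."""
    index = {}
    for person in register:
        index.setdefault(qi_key(person), []).append(person["name"])
    matches = []
    for record in released:
        names = index.get(qi_key(record), [])
        if len(names) == 1:  # exactly one candidate: the record is re-identified
            matches.append((names[0], record["diagnosis"]))
    return matches


print(linkage_attack(medical_release, public_register))
# [('John Smith', 'hypertension')]
```

Neither table is harmful on its own; the sensitive diagnosis is exposed only once the two are combined.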

For example, in 2006 Netflix hosted a contest aimed at improving the platform’s recommendation algorithm. It published what was thought to be an anonymised sample of over 100 million movie ratings made by 500,000 users. Using this data, researchers from the University of Texas at Austin found that 68% of the users could be identified with a small amount of additional information (just two ratings and their dates, with a three-day error), and that 99% of the users could be identified with a little more information (eight ratings, of which two may be completely wrong, and dates with a 14-day error). This case might not have become a textbook example of poorly anonymised data if the ‘additional information’ had been realistically unobtainable, but the Internet Movie Database (IMDb) was publicly available, and users sometimes rated content under their real names, with the date of each rating included. This enabled an attacker to use what users presented as their public persona (IMDb ratings that anyone can see) to unravel and link to their private persona (anonymised, private Netflix ratings) without their consent. This type of attack, where information obtained from multiple sources is combined to achieve re-identification, is known as a linkage attack.
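The matching idea behind the Netflix case can be sketched as comparing what is publicly known about a person with every anonymised record, tolerating some error in the dates. This is a deliberately simplified illustration, not the researchers' actual algorithm; the user IDs, films and dates are made up, and the three-day default tolerance simply echoes the figure quoted above.

```python
from datetime import date

# Made-up "anonymised" ratings: user id -> {film: (stars, date rated)}.
anonymised = {
    "user_0412": {"Film A": (5, date(2005, 3, 1)), "Film B": (2, date(2005, 3, 10))},
    "user_0977": {"Film A": (5, date(2005, 6, 2)), "Film C": (4, date(2005, 6, 3))},
}

# Made-up public ratings posted under a real name on a public site.
public_profile = {"Film A": (5, date(2005, 3, 2)), "Film B": (2, date(2005, 3, 8))}


def agreeing_ratings(public, private, max_days):
    """Count public ratings matched by the private record (same stars, date within max_days)."""
    hits = 0
    for film, (stars, when) in public.items():
        if film in private:
            p_stars, p_when = private[film]
            if p_stars == stars and abs((p_when - when).days) <= max_days:
                hits += 1
    return hits


def candidates(public, dataset, max_days=3):
    """Return the user ids whose records agree with every public rating."""
    return [uid for uid, ratings in dataset.items()
            if agreeing_ratings(public, ratings, max_days) == len(public)]


print(candidates(public_profile, anonymised))  # ['user_0412']
```

A single surviving candidate means the pseudonymous Netflix record has been linked to the public identity. Tightening the tolerance to one day leaves no candidate here, which shows how the allowed date error trades off against matching power.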

At the time of writing, two more methods are commonly used to de-anonymise data. The first is the inference method, which consists of deducing the value of an attribute from the values of a set of other attributes. Consider the heading ‘The new surgical roster has two specialities: paediatrics and neonatology’. Based on the attributes contained in this heading (position and specialities), the identity of the data subject(s) could be inferred.

The second is the singling out method, which consists of using one or more attributes to isolate the records that relate to a single individual within a dataset. An example would be an anonymised database containing information on COVID-19 positive cases in a small county over the last month. An individual could be identified simply by combining the attributes ‘date of discharge’ and ‘ethnicity’. Sometimes a single attribute will suffice to single out an individual.
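Both methods can be checked mechanically on a release before it is published. The sketch below uses a made-up COVID-19 table with hypothetical columns. `singled_out` returns the records matching the attribute values an attacker might know (a single match means the individual is isolated). `inferable_values` reports groups of records that share the same quasi-identifiers and all have the same sensitive value: anyone known to belong to such a group has that value inferred, even without being singled out (the weakness that l-diversity is meant to address).

```python
from collections import defaultdict

# Made-up release: one row per COVID-19 positive case in a small county.
cases = [
    {"discharge_date": "2020-11-02", "ethnicity": "X", "age_band": "60-69", "outcome": "ICU"},
    {"discharge_date": "2020-11-02", "ethnicity": "Y", "age_band": "60-69", "outcome": "ICU"},
    {"discharge_date": "2020-11-05", "ethnicity": "Y", "age_band": "30-39", "outcome": "ward"},
    {"discharge_date": "2020-11-05", "ethnicity": "Y", "age_band": "30-39", "outcome": "ward"},
    {"discharge_date": "2020-11-07", "ethnicity": "X", "age_band": "30-39", "outcome": "ICU"},
]


def singled_out(records, **known):
    """Return the records matching every attribute value the attacker knows."""
    return [r for r in records if all(r[k] == v for k, v in known.items())]


def inferable_values(records, quasi_identifiers, sensitive):
    """Map each quasi-identifier group whose members share one sensitive value to that value."""
    groups = defaultdict(set)
    for r in records:
        groups[tuple(r[q] for q in quasi_identifiers)].add(r[sensitive])
    return {g: next(iter(v)) for g, v in groups.items() if len(v) == 1}


# Singling out: discharge date plus ethnicity isolates a single record.
print(len(singled_out(cases, discharge_date="2020-11-02", ethnicity="X")))  # 1

# Inference: everyone aged 60-69 in this release has the same outcome.
print(inferable_values(cases, ("age_band",), "outcome"))  # {('60-69',): 'ICU'}
```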

Evaluating the risks of de-identification

Even though it may be impossible to create a permanently irreversible anonymised dataset, the most reasonable way to proceed when creating anonymised data is a risk-based approach: determining whether the resulting data falls under the category of ‘personal data’ or ‘non-personal data’, and whether the risk of re-identification is acceptable. One way to verify this is the ‘motivated intruder test’.
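One way to put a number on "acceptable risk" is a simple equivalence-class metric, often referred to as prosecutor risk in the statistical disclosure control literature. Assuming the attacker knows the target is in the dataset and knows their quasi-identifiers, the chance of picking the right record is 1 divided by the size of the group sharing those values; the maximum and average over all records give a worst-case and a typical risk. The records and the threshold below are hypothetical, not values taken from the GDPR or any regulator.

```python
from collections import Counter


def reidentification_risk(records, quasi_identifiers):
    """Return (max_risk, avg_risk), where each record's risk is 1 / size of its group."""
    def key(r):
        return tuple(r[q] for q in quasi_identifiers)

    sizes = Counter(key(r) for r in records)
    risks = [1 / sizes[key(r)] for r in records]
    return max(risks), sum(risks) / len(risks)


RISK_THRESHOLD = 0.2  # hypothetical policy value, for illustration only

data = [
    {"age_band": "30-39", "region": "North"},
    {"age_band": "30-39", "region": "North"},
    {"age_band": "30-39", "region": "North"},
    {"age_band": "40-49", "region": "South"},
    {"age_band": "40-49", "region": "South"},
    {"age_band": "50-59", "region": "South"},
]

max_risk, avg_risk = reidentification_risk(data, ("age_band", "region"))
print(max_risk, round(avg_risk, 3))  # 1.0 0.5
print("acceptable" if max_risk <= RISK_THRESHOLD else "generalise or suppress further")
```

Here the lone 50-59 record drives the worst-case risk to 1.0, so the release would fail the hypothetical threshold even though the average risk is lower. A metric like this complements, rather than replaces, the qualitative motivated intruder assessment.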

The UK Information Commissioner’s Office (ICO) describes the motivated intruder in its anonymisation code of practice as follows:

“The ‘motivated intruder’ is taken to be a person who starts without any prior knowledge but who wishes to identify the individual from whose personal data the anonymised data has been derived. This test is meant to assess whether the motivated intruder would be successful. The approach assumes that the ‘motivated intruder’ is reasonably competent, has access to resources such as the internet, libraries, and all public documents, and would employ investigative techniques such as making enquiries of people who may have additional knowledge of the identity of the data subject or advertising for anyone with information to come forward. The ‘motivated intruder’ is not assumed to have any specialist knowledge such as computer hacking skills, or to have access to specialist equipment or to resort to criminality such as burglary, to gain access to data that is kept securely.”

Analysing how the motivated intruder was able to de-anonymise the data makes it possible to evaluate the risk involved and to modify the anonymisation process if and where required. This evaluation may include an analysis of where the data will be disclosed and of how easily the ‘singling out’, ‘linkability’ and ‘inference’ methods of de-anonymisation could be carried out. Finally, it may consider how data users’ interactions with the datasets are managed and how the infrastructure is used to enforce restrictions on data usage.

Conclusions

Completely anonymising a dataset is highly unlikely to be achievable. The amount of available information that facilitates linkage attacks and other forms of re-identification is immense. The mass adoption of voice-enabled assistants is increasing the availability of voice data, and audio recordings from voice-based devices are just one of the many sources of identifiers and quasi-identifiers. This is one new example of how the cost and time required to identify an individual keep decreasing.

Even if complete anonymisation is not possible, it is possible to assess the risk of re-identification that each anonymised dataset carries, to try to prevent re-identification by all means reasonably likely to be used at a given moment, and to implement the techniques best suited to protecting the data subjects’ identity. Anonymisation, just like encryption, is not a static solution but an evolving process whose risks require periodic reassessment.

[1] A “natural person” is the legal term for an individual human being, as opposed to a “legal person”, which means a public or private organisation.

[2] De-identification refers to the process of removing items that identify the data subject from the data. It may result in the anonymisation of the data but also in its pseudonymisation, using techniques such as masking identifiers and generalising quasi-identifiers within the data.