ΑΙhub.org

Reducing the cost of localized named entity recognition

By Thomas Kleinbauer

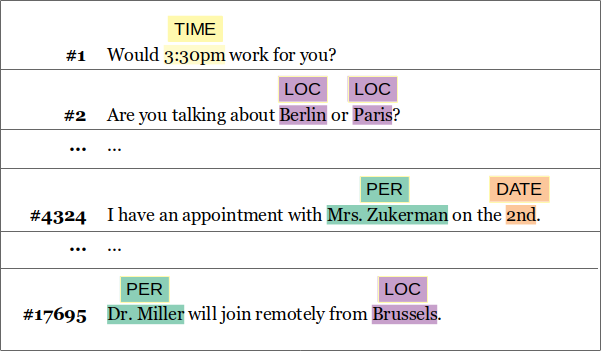

In the field of natural language processing (NLP), being able to automatically recognize named entities (NEs) is an important capability that is relevant to a variety of tasks. For instance, a train ticket booking service must be able to identify dates and locations for the system to function correctly and understand when and from where to where you would like to travel. Named entity recognition (NER) is a well-researched subfield of NLP and today’s best-performing approaches make use of supervised machine learning methods, such as deep neural networks. These methods need 1) many example sentences that contain named entities, and 2) each named entity in these example sentences must be labelled with its respective type, i.e., every organization name is labelled ORG, every person’s name is labelled PER, etc. Once both are obtained, a training algorithm can process the data and create what is called a model: a machine-readable representation of how to detect named entities in sentences together with their type. The great thing about it is that, when trained properly, the model can then be used to find the named entities in a new sentence that the system has not seen before.

However, as you may have noticed, there’s just one little problem with this approach. The process to create the model consists of three major steps: 1) obtaining a large quantity of data, 2) preparing the obtained data as training data, and 3) running the training algorithm on the data. While the third step means a lot of work for our computer, we don’t mind that because that’s what computers are for, but the problem lies with the second step; manually labelling examples of named entities in tens of thousands of sentences is a lot of work for us! Luckily, there are a variety of training datasets and models for standard named entities, like the ones mentioned above, that are available for download, so one doesn’t have to do all these steps. However, in some cases, the named entities to be found by the system differ significantly from those in the training data. For instance, in a biomedical application, you might be looking for the names of diseases, symptoms, or medications. A recognizer that was trained to detect only the standard NEs will be of no use in such a case, as it can only detect the NEs that the training data was labelled with. This is why a developer of any application that requires specialized named entity recognition faces the challenge of annotating substantial amounts of data to train a new model. This costly and cumbersome task can be prohibitive, especially for smaller companies.

The COMPRISE project, funded by the European Union under the Horizon 2020 program, has tasked itself with creating new and innovative techniques for voice assistants. Finding new methods to reduce the costs of developing such systems is one of the three pillars of the project, with the other two being the increase of privacy and inclusiveness of voice assistants. For named entity recognition, COMPRISE has investigated the use of large pre-trained language models to help reduce the annotation efforts described above. So, what are pre-trained language models? Traditionally, a language model had to solve the simple but important task of predicting the next word in a sentence when the first words are already available. Today, this kind of feature is very familiar to many users of text messaging apps, but it turns out that the latest generation of language models are so powerful that they can serve as the basis for many other NLP tasks. Furthermore, these language models that have been pre-trained on billions of examples can be downloaded and used freely by researchers and companies around the world.

The question now becomes “How can a model that is ordinarily used to predict the next word help with classifying named entities?”. The following method is surprisingly simple but, as it turns out, very effective.

The named entity recognition task is split up into two steps, 1) find all occurrences of named entities in the given input sentence, and 2) for each of these entities, determine its type. The first step can be reasonably approximated with a part-of-speech (POS) tagger that uses solely syntactic information. At least in English, named entities rarely occur back-to-back in a sentence, so whenever the POS tagger finds sequences of named entities, it is a good heuristic to assume that they are in fact just part of a single multi-word entity, such as “New York”. The second step is where the pre-trained language model comes into play. Let’s look at an example, namely the sentence:

The second wave of COVID-19 was associated with a higher incidence of the disease.

The POS tagger finds “COVID-19” to be a proper noun and thus a candidate for a named entity. We can then use that sentence together with 1) a simple template to create 2) the test sentence as indicated below.

1) [Candidate Proper Noun] is a [___]

2) COVID-19 is a [___]

The second slot at the end of the template is for the language model to fill, so when we pass “COVID-19 is a ___” as input, the language model gives suggestions for a good next word in return. Furthermore, the language model also provides a confidence value for each possible continuation of the template sentence. So, for instance, “COVID-19 is a kindly” will come with a very low associated confidence value because this sentence makes no sense, but “COVID-19 is a disease” will have a rather high confidence value. In order to leverage this effect for named entity recognition, we create a list of words for each label, containing only words that make sense for that label. Here are a few examples:

DIS: disease, illness, condition, …

SYM: symptom, pain, feeling, …

MED: medication, medicine, pill, prescription, …

Now we just have to retrieve the language model’s confidence value for each of the words in these lists, and whichever label contains the word with the highest confidence is our final prediction.

This approach works astonishingly well, and it has one major advantage: because pre-trained language models are already available off the shelf, neither manual labelling of data nor any machine learning is necessary. The only “work” that needs to be performed is to create the above word lists, which is better left to a domain expert. However, if you do have a small amount of labelled in-domain data already, or can afford to produce it, the performance of this approach can be improved further by fine-tuning the language model to this set of in-domain data. Depending on the exact circumstances, as little as 100 labelled sentences can already make a big difference in the quality of the resulting named entity recognizer.

Now this, in the scientific world, is considered as a major cost reduction.