AIhub.org

Machine learning in chemistry – a symposium

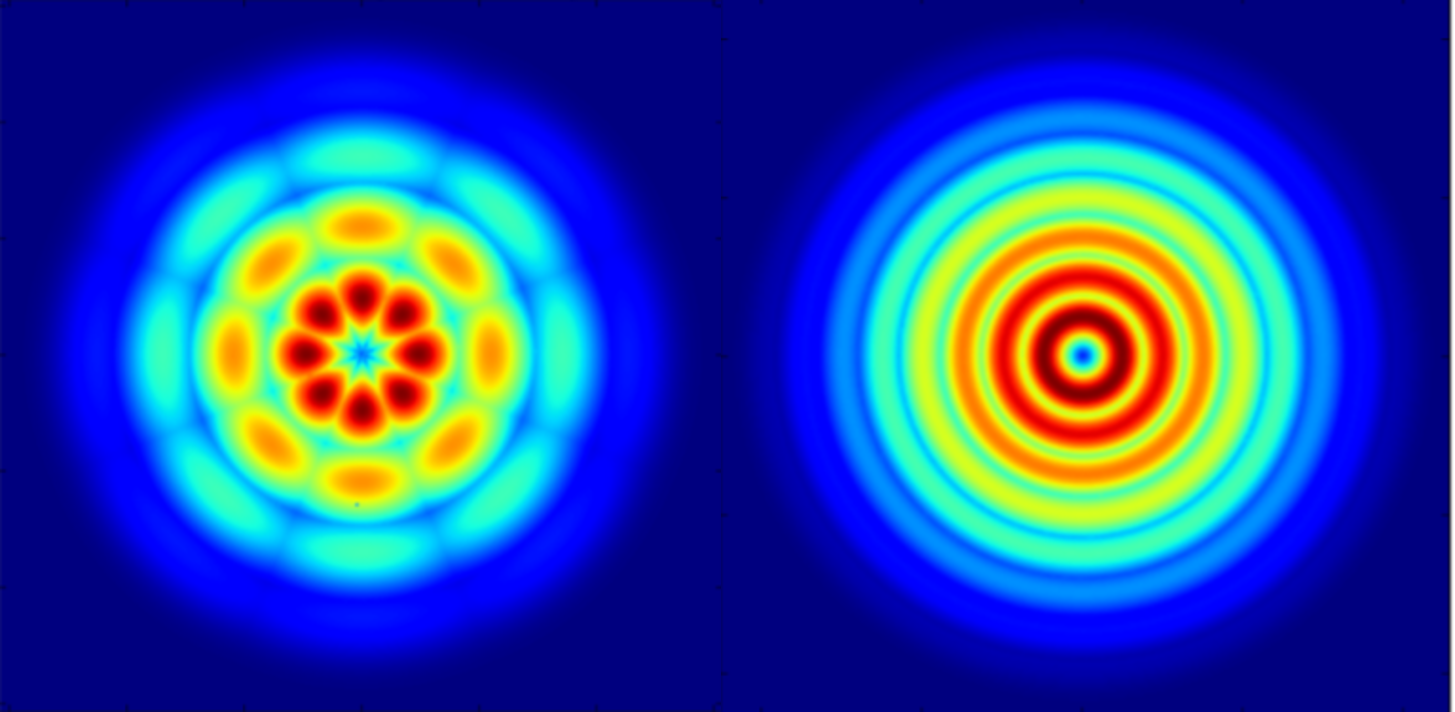

Image from TorchANI: A Free and Open Source PyTorch Based Deep Learning Implementation of the ANI Neural Network Potentials, work covered in the first talk by Adrian Roitberg. Reproduced under a CC BY NC ND 4.0 License.

Earlier this month, the Initiative for the Theoretical Sciences, City University of New York (CUNY) organised a symposium on Machine learning in chemistry.

Moderated by Seogjoo Jang (CUNY) and Johannes Hachmann (University at Buffalo, SUNY), the event comprised four talks covering quantum chemistry, predicting energy gaps, drug discovery, and “teaching” chemistry to deep learning models.

The event was recorded, and you can watch in full below:

The talks

Starts at 1:30

A Star Wars character beats Quantum Chemistry! A neural network accelerating molecular calculations

Adrian Roitberg, University of Florida

Abstract: We will show that a neural network can learn to compute energies and forces acting on small molecules, from a training set of quantum mechanical calculations. This allows us to perform very accurate calculations at roughly a 10^7 speedup versus conventional quantum calculations. This opens the door for many possible applications, where the speed versus accuracy bottleneck has made them unfeasible until now.

Starts at 33:40

Machine learning energy gaps of molecules in the condensed phase for linear and nonlinear optical spectroscopy

Christine Isborn, University of California Merced

Abstract: This talk will present our results leveraging the locality of chromophore excitations to develop machine learning models to predict the excited-state energy gap of chromophores in complex environments for efficiently constructing linear and multidimensional optical spectra. By analyzing the performance of these models, which span a hierarchy of physical approximations, across a range of chromophore–environment interaction strengths, we provide strategies for the construction of machine learning models that greatly accelerate the calculation of multidimensional optical spectra from first principles.

Starts at 1:02:41

Accelerated molecular design and synthesis for drug discovery

Connor Coley, Massachusetts Institute of Technology

Abstract: The typical molecular discovery paradigm is an iterative process of designing candidate compounds, synthesizing those compounds, and testing their performance, where each repeat of this cycle can require weeks or months, requires extensive manual effort, and relies on expert intuition. Computational tools from machine learning to laboratory automation have already started to streamline this process and promise to transition molecular discovery from intuition-driven to information-driven. This talk will provide an overview of our efforts to develop “predictive chemistry” tools to accelerate the planning and execution of chemical syntheses, as well as deep generative models that learn to propose new molecular structures that can be validated in the lab.

Starts at 1:36:09

More than mimicry? The challenges of teaching chemistry to deep models

Brett Savoie, Purdue University

Abstract: Deep generative chemical models refer to a family of machine learning architectures that can digest chemical property data and suggest new molecules or materials with targeted properties. These models have generated interest for their potential to predict chemistries based on structure-function design rules learned directly from data. Nevertheless, these models are extremely data hungry and have several common failure mechanisms. In this talk I will summarize contemporary strategies for training these models, discuss where progress has been made, and provide some opinions on where work is still needed.