AIhub.org

Machine learning fine-tunes graphene synthesis

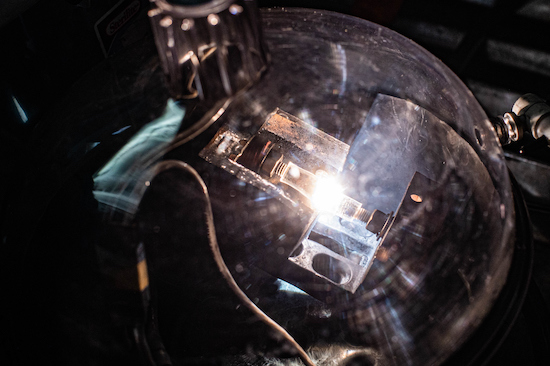

Rice University chemists are employing machine learning to fine-tune their flash Joule heating process for making graphene. A flash signals the creation of graphene from waste. (Credit: Jeff Fitlow/Rice University)

By Mike Williams

Rice University scientists are using machine learning techniques to streamline the process of synthesizing graphene from waste through flash Joule heating.

The flash Joule heating process has expanded beyond making graphene from various carbon sources to extracting other materials, like metals, from urban waste.

The technique is the same for all of the above: blasting a jolt of high energy through the source material to eliminate all but the desired product. But the details for flashing each feedstock are different.

In a paper in Advanced Materials, the researchers describe how machine learning models that adapt to changing variables and suggest how to optimize procedures are helping them push the work forward.

“Machine learning algorithms will be critical to making the flash process rapid and scalable without negatively affecting the graphene product’s properties,” said Rice chemist James Tour, whose lab developed flash Joule heating.

“In the coming years, the flash parameters can vary depending on the feedstock, whether it’s petroleum-based, coal, plastic, household waste or anything else,” he said. “Depending on the type of graphene we want — small flake, large flake, high turbostratic, level of purity — the machine can discern by itself what parameters to change.”

Because flashing makes graphene in hundreds of milliseconds, it’s difficult to tease out the details of the chemical process. So Tour and company took a cue from materials scientists who have worked machine learning into their everyday process of discovery.

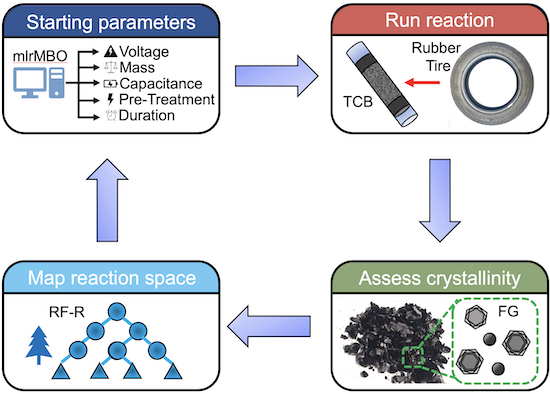

Machine learning is fine-tuning Rice University’s flash Joule heating method for making graphene from a variety of carbon sources, including waste materials. (Credit: Jacob Beckham/Tour Group)

“It turned out that machine learning and flash Joule heating had really good synergy,” said Rice graduate student and lead author Jacob Beckham. “Flash Joule heating is a really powerful technique, but it’s difficult to control some of the variables involved, like the rate of current discharge during a reaction. And that’s where machine learning can really shine. It’s a great tool for finding relationships between multiple variables, even when it’s impossible to do a complete search of the parameter space.

“That synergy made it possible to synthesize graphene from scrap material based entirely on the models’ understanding of the Joule heating process,” he said. “All we had to do was carry out the reaction — which can eventually be automated.”

The lab used its custom optimization model to improve graphene crystallization from four starting materials — carbon black, plastic pyrolysis ash, pyrolyzed rubber tires and coke — over 173 trials, using Raman spectroscopy to characterize the starting materials and graphene products.
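The article says Raman spectroscopy was used to characterize the starting materials and graphene products, but it does not give the scoring details. As a rough illustration only, the sketch below reduces a spectrum to two peak-intensity ratios, I(2D)/I(G) and I(D)/I(G), that are widely used to judge graphene quality. The band windows, the max-in-window peak estimate and the function names are assumptions, not the Rice group's procedure.

```python
# Minimal sketch: score a Raman spectrum by peak-intensity ratios.
# ASSUMPTIONS (not from the article): the spectrum is a list of
# (wavenumber_cm1, intensity) pairs; the band windows are typical
# literature positions for graphene (D ~1350, G ~1580, 2D ~2700 cm^-1);
# peak intensity is the window maximum, with no baseline subtraction
# or peak fitting.

D_WINDOW = (1300.0, 1400.0)
G_WINDOW = (1550.0, 1620.0)
TWO_D_WINDOW = (2650.0, 2750.0)


def peak_intensity(spectrum, window):
    """Return the maximum intensity found inside a wavenumber window."""
    lo, hi = window
    values = [i for (w, i) in spectrum if lo <= w <= hi]
    if not values:
        raise ValueError(f"no data points in window {window}")
    return max(values)


def raman_ratios(spectrum):
    """Return (I_2D/I_G, I_D/I_G) for one spectrum.

    A higher 2D/G ratio and a lower D/G ratio are commonly read as
    better-ordered graphene with fewer defects.
    """
    i_g = peak_intensity(spectrum, G_WINDOW)
    if i_g <= 0:
        raise ValueError("G-band intensity must be positive")
    i_d = peak_intensity(spectrum, D_WINDOW)
    i_2d = peak_intensity(spectrum, TWO_D_WINDOW)
    return i_2d / i_g, i_d / i_g


if __name__ == "__main__":
    # Toy spectrum with made-up intensities, for illustration only.
    toy = [(1350.0, 20.0), (1580.0, 100.0), (2000.0, 5.0), (2700.0, 150.0)]
    print(raman_ratios(toy))  # (1.5, 0.2)
```

Real spectra are usually baseline-corrected and fitted with Lorentzian peaks before ratios are taken; the window maximum is used here only to keep the example short.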

The researchers then fed more than 20,000 spectroscopy results to the model and asked it to predict which starting materials would provide the best yield of graphene. The model also took into account the effects of charge density, sample mass and material type.
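The model itself is not described here in any detail, so the following is a hypothetical stand-in rather than the researchers' method: a k-nearest-neighbour regressor that predicts a quality score (for example, a Raman 2D/G ratio) from charge density, sample mass and material type, the three factors the article names, and then picks the untested candidate with the best prediction. The feature encoding, the pre-scaling of inputs to [0, 1], the material labels and the choice of k are all assumptions.

```python
# Hypothetical surrogate-model sketch (NOT the Rice group's model):
# k-nearest-neighbour regression over flash parameters, then greedy
# selection of the candidate with the highest predicted quality score.
# ASSUMPTIONS: charge density and mass are already scaled to [0, 1];
# material labels below are illustrative names for the four feedstocks.

import math

MATERIALS = ("carbon_black", "plastic_ash", "tire_char", "coke")


def features(charge_density, mass, material):
    """Encode one trial as [charge_density, mass, one-hot material...]."""
    if material not in MATERIALS:
        raise ValueError(f"unknown material: {material}")
    one_hot = [1.0 if material == m else 0.0 for m in MATERIALS]
    return [charge_density, mass] + one_hot


def knn_predict(trials, query, k=3):
    """Average the scores of the k past trials closest to `query`.

    `trials` is a list of (feature_vector, score) pairs.
    """
    if not trials or k < 1:
        raise ValueError("need at least one trial and k >= 1")
    ranked = sorted(trials, key=lambda t: math.dist(t[0], query))
    nearest = ranked[:k]
    return sum(score for _, score in nearest) / len(nearest)


def best_candidate(trials, candidates, k=3):
    """Return the candidate feature vector with the highest predicted score."""
    return max(candidates, key=lambda c: knn_predict(trials, c, k))


if __name__ == "__main__":
    # Made-up past trials: (features, measured quality score).
    trials = [
        (features(0.2, 0.5, "coke"), 0.4),
        (features(0.6, 0.5, "coke"), 0.9),
        (features(0.9, 0.5, "coke"), 0.7),
        (features(0.6, 0.5, "carbon_black"), 1.2),
    ]
    candidates = [
        features(0.55, 0.5, "coke"),
        features(0.65, 0.5, "carbon_black"),
    ]
    print(best_candidate(trials, candidates, k=1))
    # [0.65, 0.5, 1.0, 0.0, 0.0, 0.0]
```

In an iterative loop, each chosen candidate would be flashed, measured and appended to `trials` before the next pick. A plain nearest-neighbour average is used only because it fits in a few lines of standard-library Python; a real optimizer would likely use a richer model.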

Co-authors are Rice graduate students Kevin Wyss, Emily McHugh, Paul Advincula and Weiyin Chen; Rice alumnus John Li; and postdoctoral researcher Yunchao Xie and Jian Lin, an associate professor of mechanical and aerospace engineering, of the University of Missouri. Tour is the T.T. and W.F. Chao Chair in Chemistry as well as a professor of computer science and of materials science and nanoengineering.

The Air Force Office of Scientific Research (FA9550-19-1-0296), U.S. Army Corps of Engineers (W912HZ-21-2-0050) and the Department of Energy (DE-FE0031794) supported the research.