AIhub.org

The AI pretenders

By Dr Damian Clifford, Dr Yvette Maker, Professor Jeannie Marie Paterson and Professor Megan Richardson

Tech and start-up companies providing ‘artificial intelligence’ (or AI) products can be divided by the degree to which their devices either replicate humans or distinguish themselves from them.

Some interactive AI products deliberately adopt the persona of a children’s book robot. Other tech companies are on a quest to make robots more lifelike and interactions with virtual bots more naturalistic or ‘seamless’.

But should they really be doing this?

Most modern robot ethics frameworks state that machines shouldn’t mislead humans about their identity. Bots resembling humans may come with formal or fine print disclosures of their identity, but if the overall effect is lifelike, it’s questionable whether these disclaimers have any real effect.

As with privacy policies, most people may not read or understand these disclosures, and they may not be tailored to different users, such as consumers with disability or older people.

But why are we concerned about robots and virtual bots pretending to be human, or human-like?

From an ethical perspective, misleading people is almost always problematic because it involves treating them as means to another person’s ends. In the context of a bot representing itself as human, the risk is that people who are misled give away more information and are more trusting than they should be.

So does that mean that a greater degree of ‘friction’ in the human-machine relationship – where the person is in no doubt they are interacting with AI – makes it safer?

We all probably know that Alexa the digital assistant isn’t real. Yet a lot of effort goes into making the voice of Alexa and its equivalents naturalistic, though not too naturalistic, say the tech companies.

This is clearly successful on some level as Alexa apparently gets proposals of marriage, which it politely refuses. And much of the advertising for Alexa plays with the idea of what it (or she) would look like in human form.

We still don’t know enough about the influence humanlike bots may have on human trust. Nonetheless, there are concerns that the combination of the device’s friendliness and a person’s lack of knowledge about how it is set up, controlled and operated may leave some consumers giving away more information than they should.

This concern is especially pertinent when it comes to data privacy and cyber security. If users don’t understand the limits of the device, they simply may not take the precautions needed to protect themselves.

We recently conducted a survey of 1,000 Australians about their use of digital assistants and concerns they do (or don’t) have when using these devices. We’re still in the early stages of analysing the results but there are already some interesting insights.

Just over half of respondents reported using a digital assistant or smart speaker frequently or occasionally, and the responses suggest that privacy concerns are a live issue for consumers.

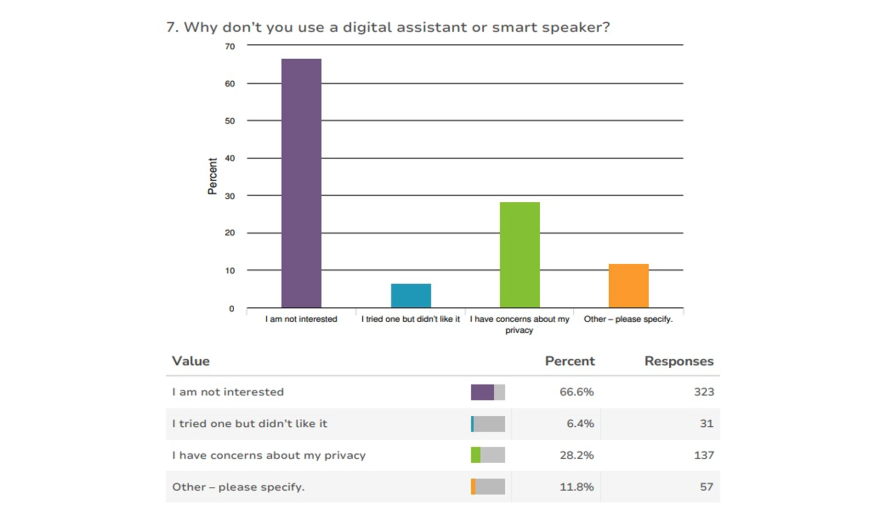

Of the people who said they don’t use a digital assistant, just under 30 per cent said that one of the reasons for this was concerns about their privacy.

Of respondents who did occasionally or frequently use a digital device, almost one third were moderately concerned about their privacy, and almost a quarter were either very or extremely concerned.

Nearly 50 per cent of users were concerned about the device overhearing conversations, about it recording and collecting data about them without their knowledge, or about not knowing where, for how long or for what purpose recordings, data or other information would be stored.

Just over 40 per cent of users were concerned about not having control over what happened to their recordings or other information.

Concerningly, 65.5 per cent of people surveyed who used a digital device reported that they didn’t know whether they could change the privacy settings, with another 11.1 per cent saying there was nothing they could do to change those settings.

Comprehensive responses to these kinds of privacy concerns are probably to be found not in formal law alone, but also in the very design of these devices.

Importantly, the effort that currently goes into humanlike conversation might also be used in features that remind users to turn off the device during private conversations or to update their software and passwords.

More generally, a less natural or clunkier interface might remind users they are actually dealing with a machine. Any design changes should be made in consultation with users themselves, to ensure that their information and consumer needs are met.

In this context, ironically, less trust between user and digital assistant in the future might be a good thing.

This article was first published on Pursuit.