AIhub.org

Applying explainable AI algorithms to healthcare

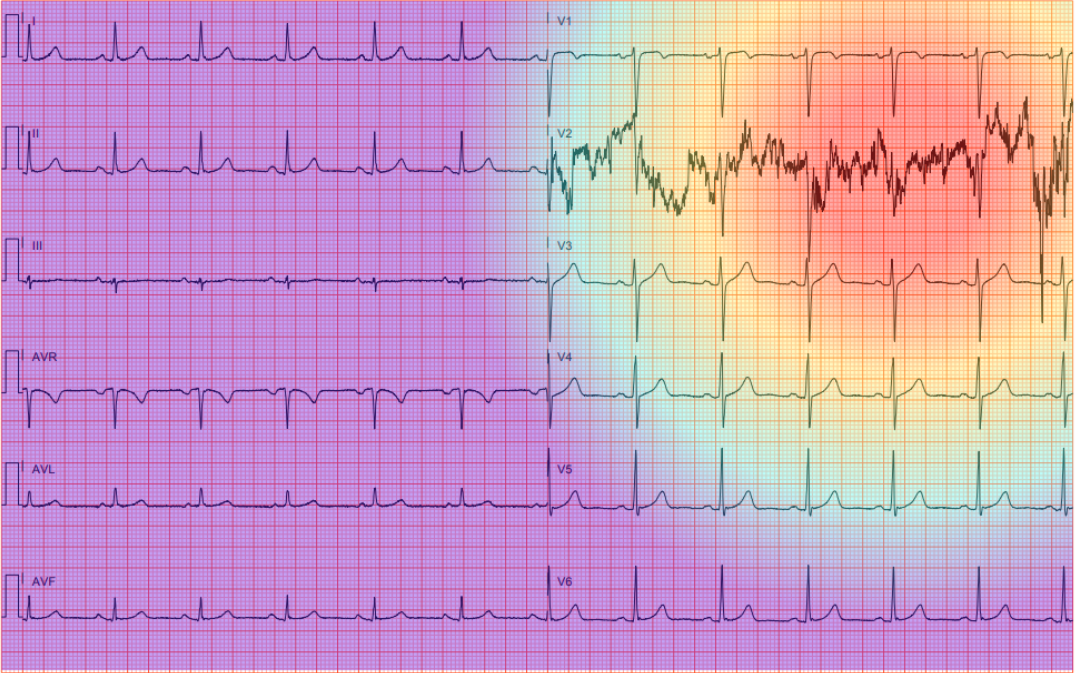

Saliency map explanation for an ECG exam that is predicted to be low-quality. Red highlights the part of the image most important to the model’s prediction, while purple indicates the least important area.

Ana Lucic, a PhD candidate at the Information Retrieval Lab (IRLab) of the Informatics Institute of UvA, has developed a framework for explaining predictions of machine learning models that could improve heart examinations for underserved communities. Her work belongs to the subfield of AI called explainable artificial intelligence (XAI).

“We need explainable AI”, says Lucic, “because machine learning models are often difficult to interpret. They have complex architectures and large numbers of parameters, so it’s not clear how the input contributes to the output.” Such models are like a black box: some data go in, some predictions come out, but what happens inside the box, and why, remains largely hidden. Lucic: “The aim of explainable AI is to try to understand how machine learning models make predictions, with the hope of making the black box more transparent.”

Brazil

In her latest project, Lucic aimed to contribute to better healthcare for underserved communities in Brazil. To do so, she collaborated with Brazilian software company Portal Telemedicina, which connects patients living in remote regions with medical specialists in bigger cities through its telemedicine platform. This allows patients to have medical exams such as electrocardiograms (ECGs) recorded in their local clinics, while specialists elsewhere in the country review the exams and make the diagnosis.

Sometimes, however, the specialist concludes that the quality of the ECG is too low and that the recording has to be done again. By that time, the patient has often already gone home. In a remote area, many patients find it difficult to return to the clinic, which can put them at greater risk of undiagnosed heart problems.

Longitudinal study

Lucic: “In this project, we want to use AI to predict whether or not an ECG-recording has a quality issue. If there is a problem, the technician can do another recording immediately instead of having the patient come back to the clinic later. In my work, we developed a longitudinal study framework for evaluating the effect of flagging low-quality exams in real time, while also providing an explanation for the low-quality flag. My hypothesis is that if the system includes an explanation in addition to the low-quality flag, it would aid technicians in determining the cause of the low-quality issue.”

Based on an interview study, Lucic concluded that the most helpful explanation for the technicians is a so-called saliency map, which uses colour-coding to show which parts of the image matter most and least to the model’s low-quality prediction. “Our framework is set up to run over the course of twelve weeks, which will be, to my knowledge, the first time that a longitudinal study for evaluating AI-explanations will be done.”
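To make the idea of a saliency map concrete, here is a minimal sketch in Python. It is not the method used in the study, which this article does not describe. It uses a simple occlusion approach: cover one patch of the image at a time and record how much the model's score changes. The scoring function `toy_low_quality_score` and the synthetic 16x16 image are hypothetical stand-ins for a trained model and a real ECG image.

```python
def toy_low_quality_score(image):
    """Hypothetical stand-in for a trained model: the mean absolute jump
    between horizontally adjacent pixels, a crude proxy for noise."""
    jumps = [abs(row[i + 1] - row[i]) for row in image for i in range(len(row) - 1)]
    return sum(jumps) / len(jumps)


def occlusion_saliency(image, score_fn, patch=4):
    """For each patch, record how much the score drops when that patch is
    replaced by the image mean. A larger drop marks a more important region."""
    height, width = len(image), len(image[0])
    fill = sum(map(sum, image)) / (height * width)
    base = score_fn(image)
    saliency = [[0.0] * width for _ in range(height)]
    for top in range(0, height, patch):
        for left in range(0, width, patch):
            rows = range(top, min(top + patch, height))
            cols = range(left, min(left + patch, width))
            occluded = [row[:] for row in image]  # copy, keep the original intact
            for r in rows:
                for c in cols:
                    occluded[r][c] = fill
            drop = base - score_fn(occluded)
            for r in rows:
                for c in cols:
                    saliency[r][c] = drop
    return saliency


# Synthetic 16x16 "exam": flat everywhere except one noisy 4x4 region
# (rows 4-7, columns 8-11) filled with an alternating +1/-1 pattern.
image = [[0.0] * 16 for _ in range(16)]
for r in range(4, 8):
    for c in range(8, 12):
        image[r][c] = 1.0 if (r + c) % 2 == 0 else -1.0

saliency = occlusion_saliency(image, toy_low_quality_score)

# Locate the most salient pixel; it should fall inside the noisy region.
peak_row, peak_col = max(
    ((r, c) for r in range(16) for c in range(16)),
    key=lambda rc: saliency[rc[0]][rc[1]],
)
```

In a display like the one at the top of this article, the values in `saliency` would be mapped to colours, with the highest values shown in red and the lowest in purple.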

Typically, user studies are done only once. “But that is not how people interact with AI-systems”, Lucic says. “People interact with such systems over time. And if you want to measure the user’s trust in the AI-system, which is what a lot of explainable AI aims to do, then it’s hard to measure that at just one moment in time.”

Paper

Lucic and colleagues published their findings in the paper Towards the Use of Saliency Maps for Explaining Low-Quality Electrocardiograms to End Users at the ICML 2022 Workshop on Interpretable Machine Learning in Healthcare. Lucic: “I see it as a framework that can be used to evaluate explainable AI in the real world. We are now waiting for the results of the study so we can understand the effect of explanations on the workflow of the technicians who perform the exams.”

Lucic did this work as part of her PhD research on explainability in machine learning, under the supervision of professor Maarten de Rijke and professor Hinda Haned. Lucic: “In my PhD thesis, I developed several algorithms that generate explanations for different types of machine learning models, while trying to keep in mind what’s important for different groups of users. There’s still a lot of work to be done in explainability.”

XAI a young field

Because explainable AI is such a young field, people use different definitions of concepts like explainability, transparency and interpretability. With more work to be done over the coming years, Lucic hopes the field will reach agreement on these definitions so that it can leap forward towards clear evaluation frameworks, clear datasets and clear tasks for explainability benchmarks.

Research information

Explaining Predictions from Machine Learning Models: Algorithms, Users, and Pedagogy

Ana Lucic, PhD Thesis.