ΑΙhub.org

Interview with Steven Kolawole: A sign-to-speech model for Nigerian sign language

We hear from Steven Kolawole about his paper on sign-to-speech models for Nigerian sign language. Steven told us about the goals of this research, his methodology, and how the work has inspired research in other languages.

What is the title of your paper?

Sign-to-speech model for sign language understanding: A case study of Nigerian sign language.

Could you tell us about the implications of your research and why it is an interesting area for study?

The biggest goal of the research was to reduce the communication barrier between the hearing-impaired community and the general populace, focusing on sub-Saharan Africa. Sub-Saharan Africa is one of the regions with the highest number of cases of hearing disabilities and, additionally, the region with the lowest number of solutions targeted towards solving this problem. And investigating why this is the status quo was very interesting.

Could you explain your methodology?

The most significant part of the work was creating a dataset for a sub-Saharan African country because, before this work, no dataset existed for any of the numerous countries’ sign language. I created a pioneer dataset for the Nigerian sign language by reaching out to a TV sign language broadcaster and two special education schools in Nigeria. We created over 8000 images and annotated them by drawing boundary boxes around them. We built three different models using YOLOv5 and ResNet50 SSD FPN (for Object Detection) and MobileNetv2 (for image classification). The YOLOv5 model performed the best; hence a text-to-speech conversion system was built on the model’s predictions, and we deployed the architecture using Docker and DeepStack for real-time translation.

Working with students to create the dataset of sign language images.

Working with students to create the dataset of sign language images.

What were your main findings?

Interestingly, most of the significant findings were discovered before the actual experiments started. During the literature review, I realized how scarce low-resourced datasets could be in a region like sub-Saharan Africa. Considering that the problem we were studying was in a usually overlooked field, where datasets are generally non-existent, it required grit, more than anything else, to champion the dataset creation with meagre and personal resources.

Another finding I realized after finishing the work was its limitation – the one-sidedness of this work. While this research in its current form might help the hearing-impaired community communicate with society at large by converting sign language to text or speech, the translation only goes one way. It does not include a speech-to-sign model (or an alternative) that helps someone unfamiliar with sign language communicate back to the signer. Meaning that while it might improve how we understand signers, it doesn’t improve how the signers understand us.

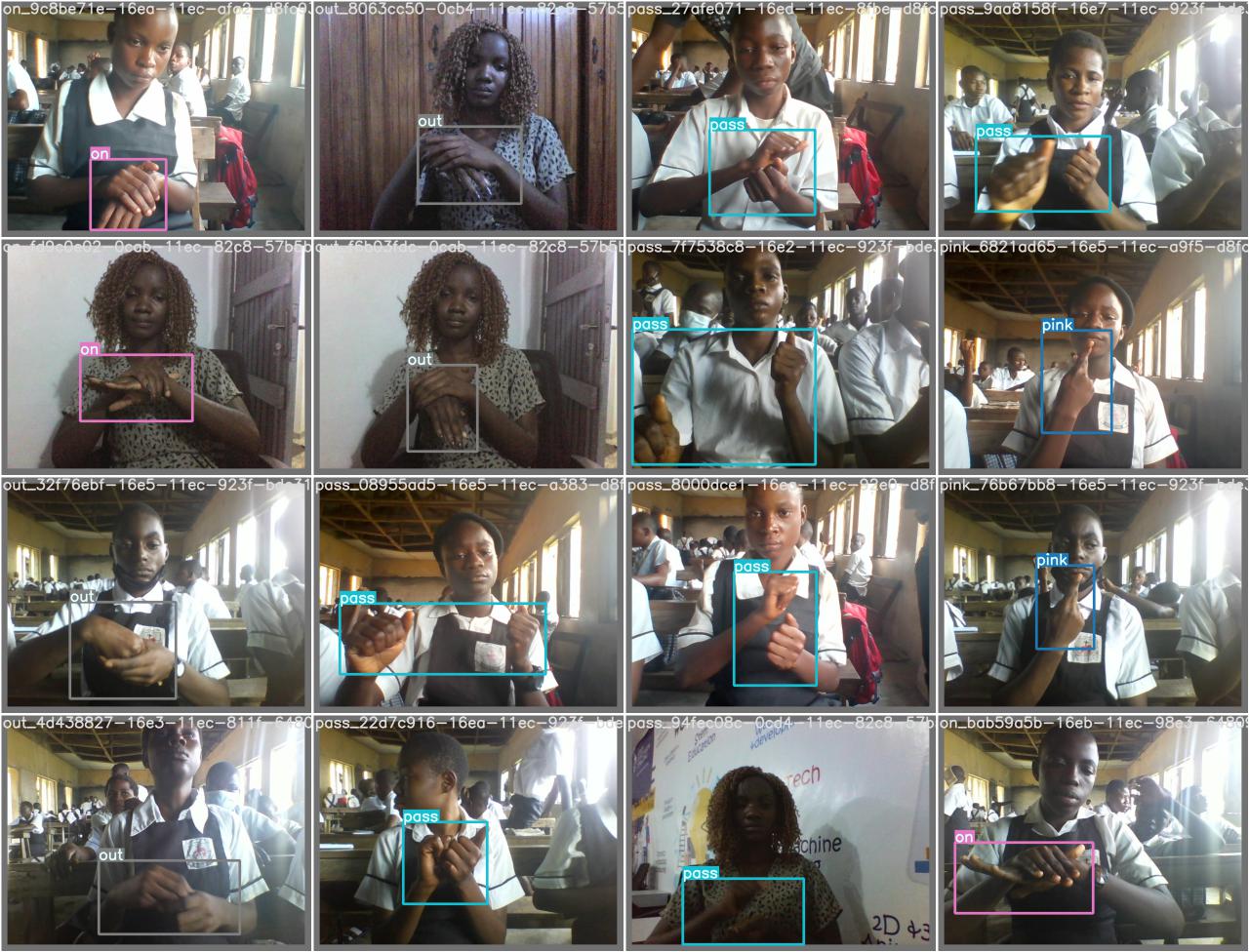

An example of a batch of test images, complete with labels.

An example of a batch of test images, complete with labels.

What further work are you planning in this area?

I don’t have any more work planned toward this. However, I am glad that my work has inspired several other African researchers to do the same in their locality. For example, I met a Ghanaian graduate student at Deep Learning Indaba interested in creating an even larger dataset for the Ghanaian and Cameroonian sign languages. I am currently collaborating with him in a supervisory role on this project.

About Steven

|

Steven Kolawole is a Computer Science Undergrad in his final semester and an independent ML researcher with ML Collective. His research generally focuses on driving accessibility in research via resource-efficient machine learning in terms of small compute and small data resources, and, these days, specifically on efficient Federated Learning and Optimization. |

Read the research in full

Sign-to-speech model for sign language understanding: A case study of Nigerian sign language

Steven Kolawole, Opeyemi Osakuade, Nayan Saxena, Babatunde Kazeem Olorisade