AIhub.org

Accelerating laboratory automation through robot skill learning

Transforming materials discovery plays a pivotal role in addressing global challenges. Applications of new materials range from clean energy storage, to sustainable polymers and packaging for consumer products in a more circular economy, to drugs and therapeutics. Interest in robotics and automation for laboratory environments has grown since the COVID-19 pandemic, when scientists had to halt experiments because of stringent social distancing measures or accelerate their efforts to produce a vaccine quickly. The challenge is that laboratories have been designed by and for humans, so the available glassware, tools and equipment pose difficult problems for traditional automation methods, which are inherently open loop and not adaptable. Learning-based methods that rely on autonomous trial and error are increasingly being used to achieve robotic tasks that could not previously be addressed with automation. For laboratory robotics, this is particularly beneficial in scenarios where humans would naturally adapt their movements, for example in the presence of molecules that are hard, hygroscopic or otherwise differ in their properties. Methods that learn through interaction are therefore crucial to success in such domains.

In robotics, reinforcement learning has become a compelling tool for robots to autonomously acquire behaviours and skills: an agent is trained by interacting with its environment and learns behaviours that achieve a given goal. In this work, we apply model-free reinforcement learning to the laboratory task of sample scraping. While other laboratory skills would also benefit from this approach, autonomous sample scraping is fundamental to a large number of materials discovery workflows. Synthesising molecules is costly in both time and money, so as much of the sample as possible needs to be recovered.
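To make the idea of learning purely from interaction concrete, here is a minimal sketch of model-free reinforcement learning using tabular Q-learning on a toy problem. It is an illustration only and not the algorithm or environment used in this work: the one-dimensional "vial depth" world, the reward and all names (`N_STATES`, `step`, `train`, `greedy_policy`) are assumptions made for the example.

```python
import random

N_STATES = 6        # hypothetical discretised depths: 0 = vial opening, 5 = vial bottom
ACTIONS = (-1, +1)  # move the tool up or down by one depth step


def step(state, action):
    """Toy transition: clamp to the vial and reward 1.0 only on reaching the bottom."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done


def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Tabular Q-learning: no model of the environment, only sampled transitions."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state = 0
        for _ in range(100):  # cap the episode length
            if rng.random() < epsilon:
                a = rng.randrange(2)  # explore
            else:
                best = max(q[state])  # exploit, breaking ties at random
                a = rng.choice([i for i in range(2) if q[state][i] == best])
            next_state, reward, done = step(state, ACTIONS[a])
            # Bootstrap from the best next action unless the episode has ended.
            target = reward + (0.0 if done else gamma * max(q[next_state]))
            q[state][a] += alpha * (target - q[state][a])
            state = next_state
            if done:
                break
    return q


def greedy_policy(q):
    """Best action for each non-terminal depth."""
    return [ACTIONS[max(range(2), key=lambda i: q[s][i])] for s in range(N_STATES - 1)]
```

Calling `greedy_policy(train())` returns the learned action for each depth; in this toy world the agent learns to move down at every depth. A real manipulation task has continuous observations and actions, so it would need a function-approximation method rather than a table.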

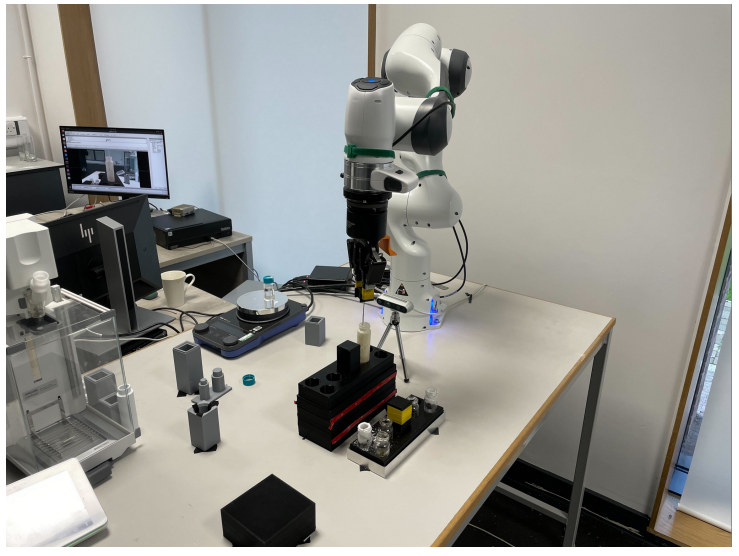

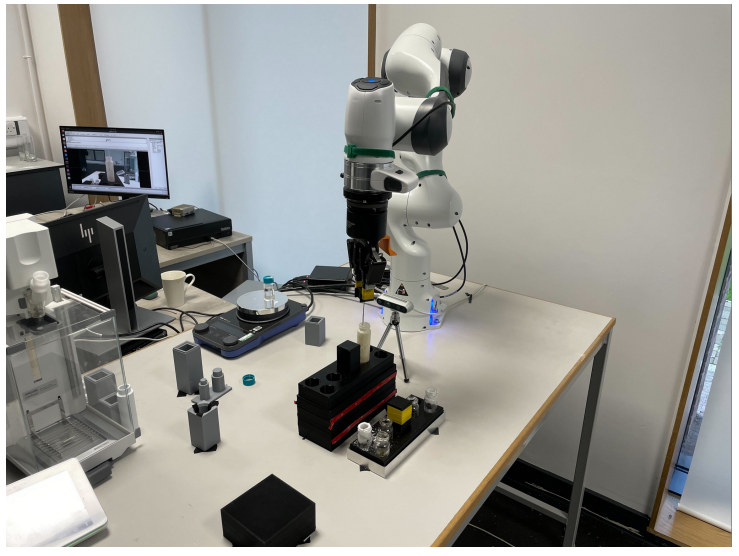

Inspired by how human chemists carry out the task, we defined scraping as follows: the goal is to reach a target position at the bottom of a glass vial while maintaining contact with the vial wall for material removal. We learn a scraping policy for a Franka Emika Panda robotic arm that relies on proprioceptive and force observations. We also explored curriculum learning [1], where the key idea is to learn first on simple examples before moving to more difficult problems. Here, we increased the difficulty of the scraping task by moving the starting pose outside the vial, so that the task covers both insertion and scraping. With a curriculum, we could successfully learn this more challenging insertion and scraping task, whereas the method without a curriculum failed to learn. We also demonstrate our method on a real robotic platform, illustrated in Figure 1, using tools and glassware that are typically used by human chemists. We trained our policy directly on hardware using a plastic vial and then tested the model on a glass vial. We adopted this approach to minimise the risk of glass breakage during training exploration.
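The task definition and curriculum described above can be sketched in a few lines. This is a hedged illustration under stated assumptions, not the reward or schedule used in this work: the reward shape, the force threshold, the bonus value, the success-rate rule and every name here (`ScrapeObservation`, `scraping_reward`, `curriculum_start_height`) are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class ScrapeObservation:
    tool_position: tuple  # (x, y, z) of the scraper tip in metres, from proprioception
    wall_force: float     # measured force against the vial wall in newtons


def scraping_reward(obs, target_position, min_contact_force=0.5, contact_bonus=0.1):
    """Assumed reward: approach the target at the vial bottom while touching the wall."""
    distance = math.dist(obs.tool_position, target_position)
    in_contact = obs.wall_force >= min_contact_force  # assumed contact threshold
    return -distance + (contact_bonus if in_contact else 0.0)


def curriculum_start_height(success_rate, current_height,
                            max_height=0.05, height_step=0.01, threshold=0.8):
    """Assumed curriculum: raise the start pose once the current stage is mastered.

    A height of 0.0 means the tool starts inside the vial (scraping only);
    larger values move the start towards and beyond the vial opening, so the
    policy must also learn insertion.
    """
    if success_rate >= threshold:
        return min(current_height + height_step, max_height)
    return current_height
```

For example, `curriculum_start_height(0.9, 0.0)` advances the start height to `0.01`, while a success rate of `0.5` leaves it unchanged. Tying progression to a measured success rate is only one possible design; a fixed schedule over training steps would be another.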

Figure 1: An overview of the autonomous robotic scraping setup.

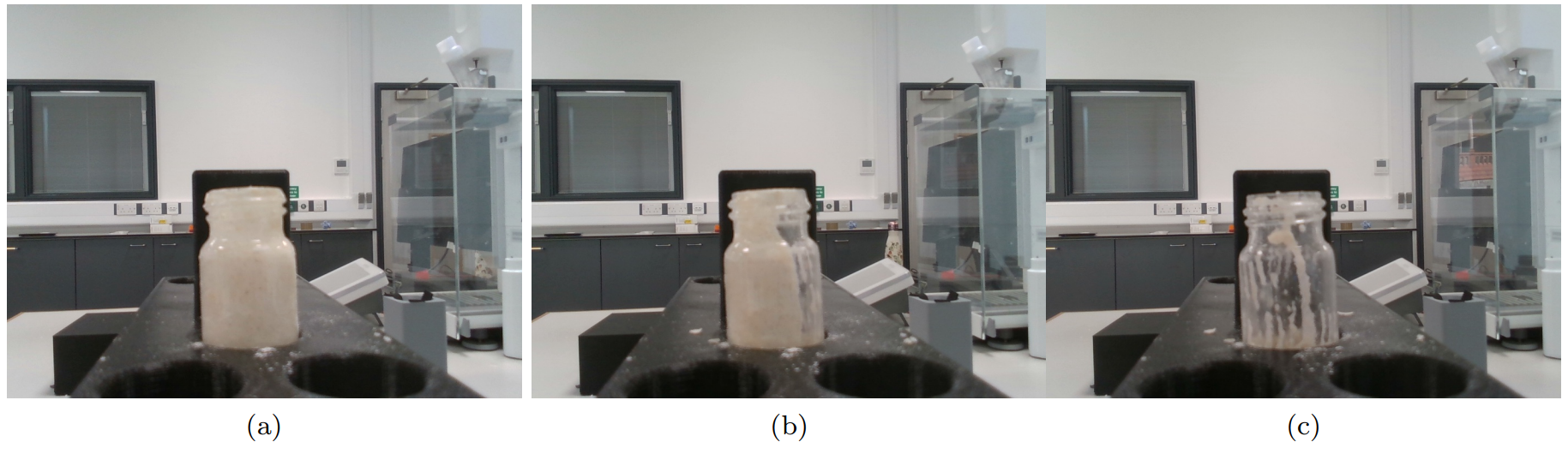

Figure 2: Qualitative results of autonomous sample scraping, showing (a) the initial vial condition, (b) midway through (powder partially removed) and (c) the powder scraped off.

For this task, we used a vial with powder on its inner walls, and the robot was able to scrape off the majority of the powder, as illustrated in Figure 2. Because we carried out the experiments on an open bench, we used a food-grade powder (flour) as the material inside the vial. The approach also generalised to different laboratory equipment: the task was successful with vials of different sizes and scrapers of different lengths. This experiment even provided insight into optimising laboratory scraping for human scientists, particularly regarding tool choice and how it affects task success. We envisage that, as autonomous robotic scientists are deployed more widely, knowledge could flow in both directions, with robots informing human scientists on how to optimise manual laboratory tasks, which could also increase human throughput.

This work is a first step towards deploying the next generation of robotic scientists within a materials discovery lab as part of the EU-funded ADAM project. In our earlier paper [2], which was published at ICRA 2022 and was an Outstanding Automation Paper finalist, we introduced an architecture for heterogeneous robotic chemists. The present work contributes to this vision of autonomous robotic systems carrying out laboratory experiments, albeit with more intelligence.

References

[1] S. Narvekar, B. Peng, M. Leonetti, J. Sinapov, M. E. Taylor, and P. Stone, “Curriculum learning for reinforcement learning domains: A framework and survey,” J. Mach. Learn. Res., vol. 21, pp. 181:1–181:50, 2020.

[2] H. Fakhruldeen, G. Pizzuto, J. Glowacki, and A. I. Cooper, “ARChemist: Autonomous robotic chemistry system architecture,” IEEE International Conference on Robotics and Automation, 2022.