AIhub.org

Interview with Teresa Salazar: Developing fair federated learning algorithms

In their paper FAIR-FATE: Fair Federated Learning with Momentum, Teresa Salazar, Miguel Fernandes, Helder Araujo, and Pedro Henriques Abreu develop a fairness-aware federated learning algorithm which aims to achieve group fairness while maintaining classification performance. Here, Teresa tells us more about their work.

What is the topic of the research in your paper?

With the widespread use of machine learning algorithms to make decisions that impact people’s lives, fairness-aware machine learning has been receiving increasing attention. Fairness-aware machine learning algorithms aim to ensure that predictions do not disadvantage unprivileged groups of the population with respect to sensitive attributes such as race or gender. However, research has focused on centralized machine learning, with decentralized methods receiving little attention.
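One widely used group fairness notion is statistical parity: the rate of positive predictions should be similar for the privileged and unprivileged groups. The interview does not say which metric FAIR-FATE uses, so the minimal sketch below simply assumes statistical parity difference, binary 0/1 predictions, and a binary sensitive attribute where 1 marks the privileged group. The function name is hypothetical.

```python
from typing import Sequence


def statistical_parity_difference(y_pred: Sequence[int], sensitive: Sequence[int]) -> float:
    """Return P(y_hat = 1 | unprivileged) - P(y_hat = 1 | privileged).

    Assumes binary predictions (0/1) and a binary sensitive attribute
    where 1 marks the privileged group and 0 the unprivileged group.
    """
    if len(y_pred) != len(sensitive):
        raise ValueError("y_pred and sensitive must have the same length")
    privileged = [p for p, s in zip(y_pred, sensitive) if s == 1]
    unprivileged = [p for p, s in zip(y_pred, sensitive) if s == 0]
    if not privileged or not unprivileged:
        raise ValueError("both groups must be non-empty")
    # Positive-prediction rate of each group, then their difference.
    rate_unpriv = sum(unprivileged) / len(unprivileged)
    rate_priv = sum(privileged) / len(privileged)
    return rate_unpriv - rate_priv
```

A value of 0 means both groups receive positive predictions at the same rate; a negative value means the unprivileged group receives fewer positive predictions than the privileged one.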

Federated learning is an emerging decentralized technology that creates machine learning models using data distributed across multiple clients. In federated learning, the training process is split across multiple clients, each of which trains a local model on its own local dataset without needing to share private data. At each communication round, each client shares its local model updates with the server, which uses them to create a global shared model.
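The server-side step described above is commonly implemented as Federated Averaging (FedAvg), where the global model is the average of the client models weighted by local dataset size. The minimal pure-Python sketch below assumes each model is a flat list of floats; it illustrates the standard FedAvg baseline, not FAIR-FATE, and the function name is our own.

```python
from typing import List, Sequence

Vector = List[float]


def fedavg(client_models: Sequence[Vector], client_sizes: Sequence[int]) -> Vector:
    """Average client parameter vectors, weighting each client by its local dataset size."""
    if not client_models or len(client_models) != len(client_sizes):
        raise ValueError("need at least one client and exactly one size per client")
    total = sum(client_sizes)
    dim = len(client_models[0])
    global_model = [0.0] * dim
    for params, n_k in zip(client_models, client_sizes):
        weight = n_k / total  # clients holding more data get more influence
        for i in range(dim):
            global_model[i] += weight * params[i]
    return global_model


# Two clients: the first holds three times as much data as the second.
print(fedavg([[1.0, 2.0], [3.0, 4.0]], [3, 1]))  # [1.5, 2.5]
```

The size-based weight `n_k / total` is the kind of aggregation choice that lets large clients dominate the global model.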

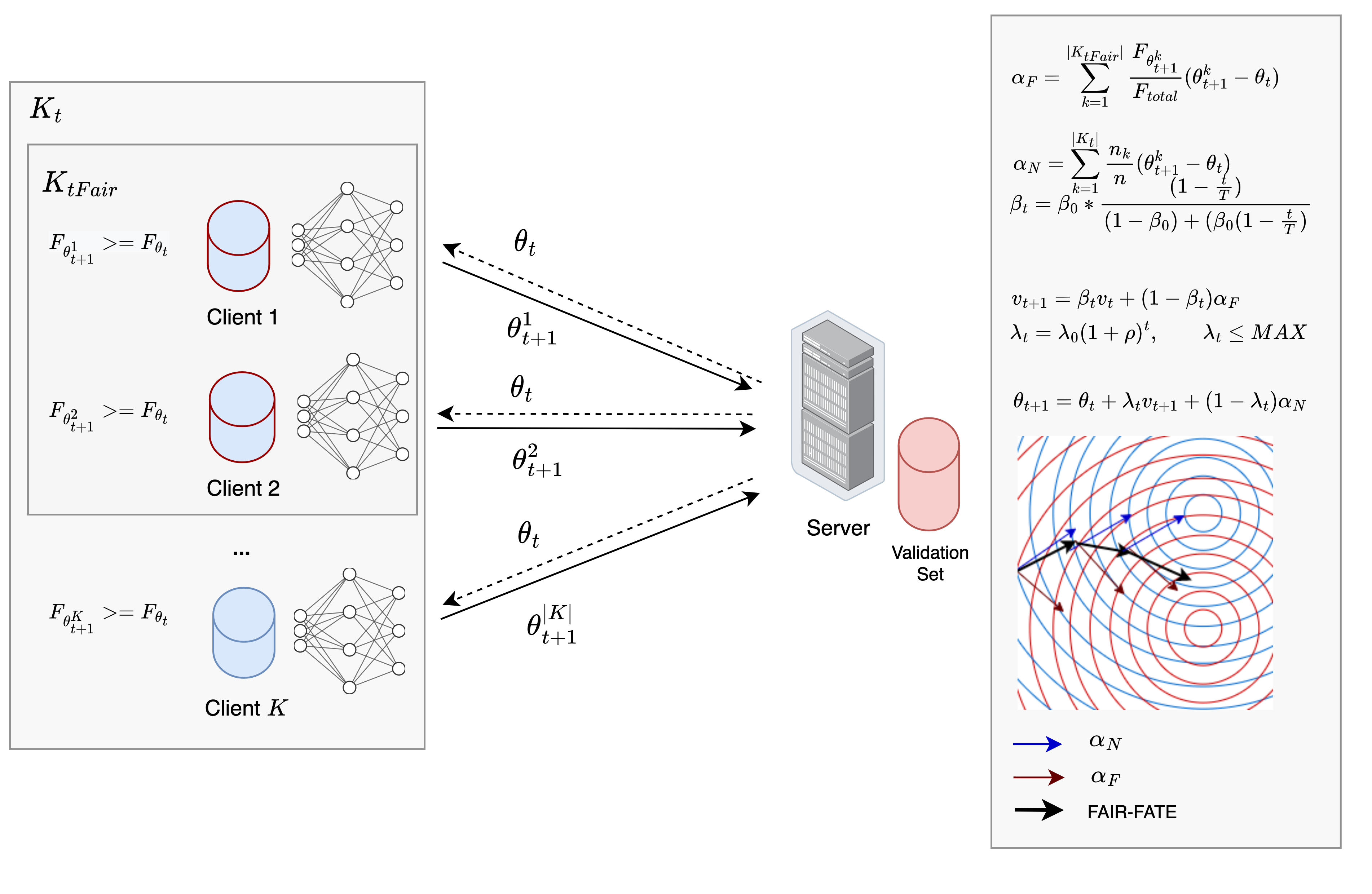

In this work we developed FAIR-FATE: a novel fairness-aware federated learning algorithm which aims to achieve group fairness while maintaining classification performance. To the best of our knowledge, this is the first approach in machine learning that aims to achieve fairness using a fair Momentum estimate. In addition, FAIR-FATE is a flexible algorithm that does not rely on any local fairness strategy at each client.

Could you tell us about the implications of your research and why it is an interesting area for study?

Developing fair federated learning algorithms is of utmost importance. Because of its decentralized nature, there are several reasons why federated learning may contribute to biased decisions.

Firstly, since in federated learning each client has its own private dataset, that dataset may not be representative of the global distribution, which can introduce or exacerbate bias in the global model. Secondly, typical centralized fair machine learning solutions require centralized access to sensitive attribute information and, consequently, cannot be directly applied to federated learning without violating privacy constraints. Finally, the aggregation algorithms used at the server may introduce bias, for example by giving higher importance to the models of clients with more data, which amplifies the effects of under-representation of unprivileged groups in a dataset.

For these reasons, finding federated learning algorithms that enable clients to collaborate on building fair machine learning models while preserving their data privacy is a great challenge.

Outline of the FAIR-FATE algorithm.

Could you explain your methodology?

FAIR-FATE uses a new fair aggregation method that computes the global model by taking into account the fairness of the clients. To achieve this, the server holds a validation set that it uses at each communication round to measure the fairness of the clients’ models and of the current global model. The fair Momentum update is then computed from a fraction of the previous fair update together with the average of the updates from clients whose models are fairer than the current global model, with fairer models given higher importance. Since Momentum-based solutions have been used to help overcome the oscillations of noisy gradients, we hypothesise that a fairness-aware Momentum term can overcome the oscillations of non-fair gradients.
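To make the description above more concrete, here is a deliberately simplified sketch of one server round of fairness-aware momentum aggregation. It is based only on the prose in this interview, not on the paper’s exact update rule, which may contain further terms. Assumptions: models and updates are flat lists of floats; fairness scores are non-negative and “higher is fairer” (for example 1 minus the absolute statistical parity difference on the server’s validation set); fairer clients are weighted in proportion to their score; and the fallback used when no client beats the global model is hypothetical. The names `fair_momentum_step` and `beta` are our own.

```python
from typing import List, Optional, Sequence, Tuple

Vector = List[float]


def fair_momentum_step(
    global_model: Vector,
    client_updates: Sequence[Vector],
    client_fairness: Sequence[float],
    global_fairness: float,
    prev_momentum: Optional[Vector] = None,
    beta: float = 0.9,
) -> Tuple[Vector, Vector]:
    """One simplified server round; returns (new_global_model, new_momentum).

    Fairness scores are assumed non-negative and 'higher is fairer',
    measured by the server on its own validation set.
    """
    dim = len(global_model)
    # Keep only clients whose model is fairer than the current global model.
    selected = [
        (update, score)
        for update, score in zip(client_updates, client_fairness)
        if score > global_fairness
    ]
    if not selected:
        # Hypothetical fallback: plain average of all updates so training still moves.
        selected = [(update, 1.0) for update in client_updates]
    total_weight = sum(score for _, score in selected)
    if total_weight <= 0:
        raise ValueError("fairness weights must sum to a positive value")
    # Fairness-weighted average: fairer clients contribute more.
    avg_update = [
        sum(score * update[i] for update, score in selected) / total_weight
        for i in range(dim)
    ]
    if prev_momentum is None:
        prev_momentum = [0.0] * dim
    # Momentum: keep a fraction beta of the previous fair update, blend in the new average.
    momentum = [beta * m + (1.0 - beta) * a for m, a in zip(prev_momentum, avg_update)]
    new_model = [w + m for w, m in zip(global_model, momentum)]
    return new_model, momentum
```

In this sketch, a client whose fairness score falls below the global model’s score is ignored entirely, however large its update. The previous momentum is carried forward so that a single round of unfair updates cannot swing the global model abruptly. Note that with the momentum initialised at zero, the first rounds move the model only by a fraction `1 - beta` of the averaged update.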

What were your main findings?

The proposed approach is evaluated on four real-world datasets which are commonly used in the literature for fairness-related studies: the COMPAS, the Adult, the Law School and the Dutch Census datasets. Several experiments are conducted on a range of non-identical data distributions at each client, considering the different sensitive attributes and target classes in each dataset.
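The interview does not say how the non-identical client distributions were generated. A common technique in the federated learning literature is to draw each client’s share of every group of samples from a Dirichlet distribution, where a smaller concentration parameter `alpha` gives more skewed clients. The sketch below assumes that technique and groups samples by a key such as a (sensitive attribute, target class) pair. The function name and parameters are illustrative, not the paper’s protocol.

```python
import random
from collections import defaultdict
from typing import Dict, Hashable, List, Sequence


def dirichlet_partition(
    groups: Sequence[Hashable], n_clients: int, alpha: float, seed: int = 0
) -> List[List[int]]:
    """Split sample indices across clients with Dirichlet-distributed group shares.

    groups[i] is the group key of sample i, e.g. a (sensitive attribute, label) pair.
    Smaller alpha -> less identical client distributions.
    """
    if n_clients < 1 or alpha <= 0:
        raise ValueError("n_clients must be >= 1 and alpha must be > 0")
    rng = random.Random(seed)
    by_group: Dict[Hashable, List[int]] = defaultdict(list)
    for idx, key in enumerate(groups):
        by_group[key].append(idx)
    clients: List[List[int]] = [[] for _ in range(n_clients)]
    for indices in by_group.values():
        rng.shuffle(indices)
        # Dirichlet(alpha, ..., alpha) sample via normalised Gamma(alpha, 1) draws.
        draws = [rng.gammavariate(alpha, 1.0) for _ in range(n_clients)]
        total = sum(draws)
        if total == 0:
            proportions = [1.0 / n_clients] * n_clients
        else:
            proportions = [d / total for d in draws]
        # Turn cumulative proportions into cut points over this group's indices.
        start, cumulative = 0, 0.0
        for k, p in enumerate(proportions):
            cumulative += p
            end = len(indices) if k == n_clients - 1 else round(cumulative * len(indices))
            clients[k].extend(indices[start:end])
            start = end
    return clients


# 100 samples: 25 for each (sensitive attribute, label) combination.
groups = [(s, y) for s in (0, 1) for y in (0, 1) for _ in range(25)]
parts = dirichlet_partition(groups, n_clients=4, alpha=0.5, seed=1)
print([len(p) for p in parts])
```

Every sample is assigned to exactly one client. Sweeping `alpha` is one way to test an algorithm under a range of heterogeneity levels.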

Experimental results on the described datasets demonstrate that FAIR-FATE significantly outperforms state-of-the-art fair federated learning algorithms on fairness, without sacrificing model performance, under different data distributions.

Revisiting the question presented in this work: Can a fairness-aware Momentum term be used to overcome the oscillations of noisy non-fair gradients in federated learning?

We conclude that applying Momentum techniques can help achieve group fairness in federated learning.

What further work are you planning in this area?

Interesting directions for future work include testing FAIR-FATE with multiple fairness metrics and multiple sensitive attributes simultaneously, extending the work to other application scenarios such as regression or clustering, and focusing on individual fairness notions.

We hope that this work inspires the community to develop more fairness-aware algorithms, particularly in federated learning, and to consider Momentum techniques for addressing bias in machine learning.

Read the research in full

FAIR-FATE: Fair Federated Learning with Momentum, Teresa Salazar, Miguel Fernandes, Helder Araujo, Pedro Henriques Abreu.

About Teresa

Teresa Salazar received a B.S. degree in Informatics Engineering from the University of Coimbra in 2018 and an M.S. degree in Informatics from the University of Edinburgh in 2019, specialising in machine learning and natural language processing. Since 2020, she has been attending the Doctoral Program in Information Science and Technology at the University of Coimbra and is a member of the Centre for Informatics and Systems of the University of Coimbra. Her research interests focus mainly on fairness and imbalanced data, natural language processing, and information retrieval.