AIhub.org

Benchmarking quality-diversity algorithms on neuroevolution for reinforcement learning

Members of the AIRL lab at Imperial College, and authors of the reported work in this blog post. From left to right: Bryan Lim, Dr Antoine Cully (director of the AIRL lab), Manon Flageat, Luca Grillotti, Dr Simón C Smith, and Maxime Allard.

Learning and finding different solutions to the same problem is commonly associated with creativity and adaptation, which are important characteristics of intelligence. In the AIRL lab at Imperial College, we believe in the importance of diversity in learning algorithms. With this focus in mind, we develop learning algorithms known as Quality-Diversity algorithms.

Quality-Diversity (QD) algorithms

QD algorithms [1] are a relatively new and growing family of optimization algorithms. In contrast to conventional optimization algorithms, which aim to find a single high-performing/optimal solution, QD algorithms search for a large diversity of high-performing solutions. For example, instead of just one way to walk, QD algorithms learn a diversity of good gaits to walk, or instead of just one way to grasp a cup, many different ways of grasping a cup.
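To make the idea concrete, here is a minimal sketch of a grid-based QD loop in the style of MAP-Elites, one well-known QD algorithm. It is not the implementation used in our benchmark: the toy `fitness` and `descriptor` functions, the grid resolution and the mutation scale are all assumptions chosen for illustration.

```python
import random

def fitness(x):
    # Toy objective (hypothetical): higher is better, maximum at the origin.
    return -(x[0] ** 2 + x[1] ** 2)

def descriptor(x):
    # Toy behaviour descriptor (hypothetical): the solution clipped to [-1, 1].
    return tuple(min(1.0, max(-1.0, v)) for v in x)

def cell_index(desc, bins):
    # Map each descriptor dimension in [-1, 1] to one of `bins` grid cells.
    return tuple(min(bins - 1, int((d + 1.0) / 2.0 * bins)) for d in desc)

def map_elites(iterations=2000, bins=10, sigma=0.1, seed=0):
    rng = random.Random(seed)
    archive = {}  # cell index -> (fitness, solution)
    for _ in range(iterations):
        if archive:
            # Pick a random elite from the archive and perturb it.
            _, parent = archive[rng.choice(list(archive))]
            child = [v + rng.gauss(0.0, sigma) for v in parent]
        else:
            # First iteration: start from a random solution.
            child = [rng.uniform(-1.0, 1.0) for _ in range(2)]
        f = fitness(child)
        cell = cell_index(descriptor(child), bins)
        # Keep the child if its cell is empty or it beats the current elite.
        if cell not in archive or f > archive[cell][0]:
            archive[cell] = (f, child)
    return archive

archive = map_elites()
best = max(f for f, _ in archive.values())
print(len(archive), "cells filled; best fitness:", best)
```

The result is not a single solution but an archive holding the best solution found so far for each region of the descriptor space.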

As QD is a growing field, new promising applications and algorithms are released every month. In our work, we propose to apply QD to deep reinforcement learning tasks. We introduce a set of benchmark tasks and metrics to facilitate the comparison of QD approaches in this setting.

The reinforcement learning (RL) and deep reinforcement learning (deep RL) settings

As QD algorithms are general optimization algorithms, they can be applied to any optimization problem, and RL is one such setting. In RL [2], the agent receives positive or negative rewards based on the outcome of its actions. These rewards act as reinforcement to consolidate beneficial behaviors. For example, to get a robot to learn how to walk forward without falling, we give it a positive reward each time it manages to walk and a negative reward each time it falls. Deep RL [2] is the problem domain in which the agent uses neural networks for sequential decision making to solve RL tasks.

Neuroevolution in the deep RL setting

Conventionally, gradient descent is used to optimize the parameters of neural networks. This is also the case for the neural networks used in deep RL. On the other hand, neuroevolution [3], as the name suggests, is inspired by evolutionary computation methods, in which the parameters of neural networks are evolved instead via some variation/perturbation operators. QD algorithms are evolutionary algorithms and can be used to perform neuroevolution. Here, we are interested in QD algorithms applied to neuroevolution for deep RL.
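As a rough illustration of what "evolving" network parameters means, the sketch below perturbs the weights of a tiny network with Gaussian noise and keeps the child only if it scores at least as well as its parent. Everything here is an assumption made for the example: the network sizes, the noise scale, and the toy `fitness` function, which stands in for the return an agent would collect in an RL environment. The selection rule is a simple elitist hill-climber, not a QD algorithm; a QD algorithm would apply the same kind of variation operator to elites drawn from its archive.

```python
import math
import random

N_IN, N_HIDDEN, N_OUT = 3, 4, 2  # hypothetical network sizes

def init_params(rng):
    # Flat list of weights for a one-hidden-layer network (biases omitted).
    return [rng.gauss(0.0, 0.5) for _ in range(N_IN * N_HIDDEN + N_HIDDEN * N_OUT)]

def policy(params, obs):
    # Forward pass: observation -> tanh hidden layer -> tanh action.
    w1 = params[: N_IN * N_HIDDEN]
    w2 = params[N_IN * N_HIDDEN:]
    hidden = [
        math.tanh(sum(obs[i] * w1[i * N_HIDDEN + j] for i in range(N_IN)))
        for j in range(N_HIDDEN)
    ]
    return [
        math.tanh(sum(hidden[j] * w2[j * N_OUT + k] for j in range(N_HIDDEN)))
        for k in range(N_OUT)
    ]

def mutate(params, rng, sigma=0.05):
    # Variation operator: add independent Gaussian noise to every weight.
    return [p + rng.gauss(0.0, sigma) for p in params]

def fitness(params):
    # Toy stand-in for an RL return (hypothetical): closeness of the action
    # for one fixed observation to a fixed target action.
    obs, target = [0.5, -0.2, 0.1], [0.3, -0.7]
    action = policy(params, obs)
    return -sum((a - t) ** 2 for a, t in zip(action, target))

rng = random.Random(0)
parent = init_params(rng)
initial = fitness(parent)
for _ in range(300):
    child = mutate(parent, rng)
    # No gradients are used: selection alone decides which parameters survive.
    if fitness(child) >= fitness(parent):
        parent = child
final = fitness(parent)
print("fitness before:", initial, "after:", final)
```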

A challenge in RL, compared to the more common and mature sub-field of supervised learning, is that there is no pre-existing dataset. The data from which the agent learns is collected by the agent itself through interaction with the environment. This introduces challenges in exploration and generalization. As QD algorithms aim to find a population of diverse agents, they are a promising approach to address these challenges.

A new framework for QD applied to neuroevolution in deep RL

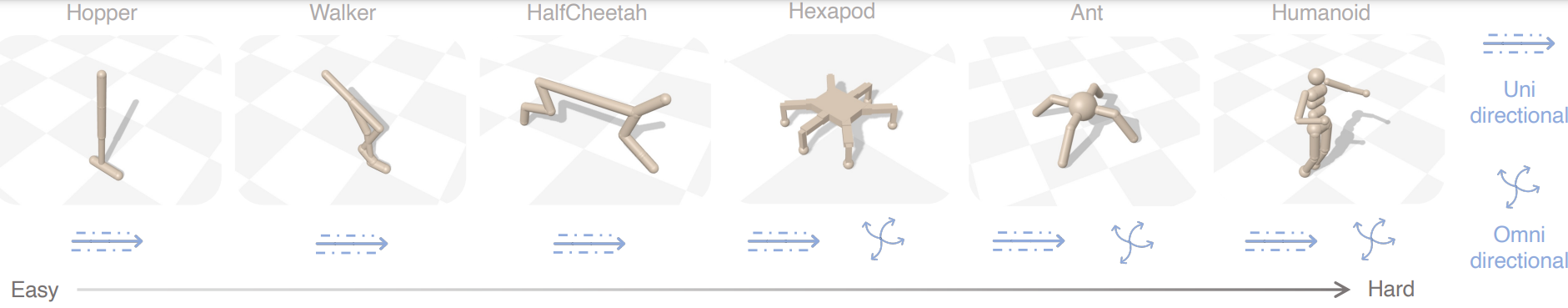

We propose a new benchmark that formalizes several tasks, some new and some already used extensively across the QD literature, in one common framework. We consider these tasks across a range of robots, from simple systems with a low number of degrees of freedom to higher-dimensional systems similar to real-world robots (see the figure displaying images of our environments).

Among other desiderata for benchmarks, we gave heavy weight to the time required to evaluate algorithms. We leverage recent advances in hardware acceleration and parallel simulators so that algorithms can be evaluated quickly. We use QDax [4], a recent library developed in JAX, in which these tasks are implemented using the Brax simulator, which has been shown to accelerate the evaluation of QD algorithms [4].

Beyond the tasks themselves, we also took a deeper look at the metrics used to analyze the algorithms. As we aim to learn a population of diverse and high-performing agents, the quality and diversity of the population have typically been quantified in the literature using a single scalar value, called the QD-score. However, as it is a single value, it fails to capture the full state of the resulting population of agents. For example, the same QD-score can be obtained by (1) a large population of very diverse agents that are not good and (2) a small population of good agents. Hence, we formalize a more general metric called the “archive profile”, where the shape of the curve gives us this additional information while still allowing us to recover the QD-score as the area under the curve.
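The relation between the two metrics can be checked on a toy example. The sketch below assumes that the QD-score is the sum of the (non-negative) fitnesses stored in the archive, and that the archive profile counts, for each fitness threshold, the cells whose fitness reaches that threshold. The exact definitions and normalisation in the paper may differ, and the two archives are made-up numbers.

```python
def qd_score(fitnesses):
    # Assumed definition: sum of the fitnesses of all filled cells.
    return sum(fitnesses)

def archive_profile(fitnesses, thresholds):
    # For each threshold, count the archive cells whose fitness reaches it.
    return [sum(1 for f in fitnesses if f >= t) for t in thresholds]

def profile_area(fitnesses):
    # Exact area under the step-shaped profile, from 0 up to the best fitness.
    area, previous, remaining = 0.0, 0.0, len(fitnesses)
    for f in sorted(fitnesses):
        # `remaining` cells still have fitness >= f on the interval (previous, f].
        area += (f - previous) * remaining
        previous = f
        remaining -= 1
    return area

# Two hypothetical archives with non-negative fitnesses.
wide_but_weak = [0.25] * 40     # many diverse agents, none of them good
narrow_but_strong = [1.0] * 10  # few agents, all of them good

thresholds = [0.0, 0.25, 0.5, 1.0]
for name, fits in [("wide", wide_but_weak), ("narrow", narrow_but_strong)]:
    print(name, qd_score(fits), profile_area(fits), archive_profile(fits, thresholds))
```

Both archives have the same QD-score, and in both cases the area under the profile equals that score, but the profiles themselves differ: the first drops to zero above a fitness of 0.25, while the second stays flat up to 1.0.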

Additionally, noisy problems have been shown to be a challenge for QD algorithms [5]. Noise impairs the ability of the algorithm to quantify the true performance and novelty of solutions. Common deep RL tasks are usually noisy, and so are our benchmark tasks. One of our findings is that the inherent stochasticity of deep reinforcement learning problems further amplifies this issue. In addition, because of this noise, most of the common QD metrics become less meaningful for interpreting results. We thus also introduce a new evaluation procedure to quantify this impact more meaningfully.
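A small simulation shows why noise makes archive-based metrics hard to trust. This is only an illustration of the problem, not the evaluation procedure from the paper: the Gaussian noise model, the "keep the best of 10 evaluations" rule and the re-evaluation by averaging 50 samples are all assumptions.

```python
import random

def noisy_fitness(true_value, rng, noise=0.5):
    # Hypothetical noise model: the true fitness plus Gaussian noise.
    return true_value + rng.gauss(0.0, noise)

def reevaluate(true_values, rng, n_samples=50):
    # Estimate each solution's fitness by averaging fresh noisy evaluations.
    return [
        sum(noisy_fitness(v, rng) for _ in range(n_samples)) / n_samples
        for v in true_values
    ]

rng = random.Random(0)
true_values = [1.0] * 20  # hypothetical: every elite has a true fitness of 1.0

# An archive that keeps the best single evaluation seen per cell ends up
# storing lucky evaluations rather than typical ones.
stored = [max(noisy_fitness(v, rng) for _ in range(10)) for v in true_values]
corrected = reevaluate(true_values, rng)

print("score from stored values:      ", sum(stored))
print("score after re-evaluation:     ", sum(corrected))
print("score from true fitness values:", sum(true_values))
```

In this toy setting the score computed from the stored values overestimates the true quality of the archive, while the re-evaluated score stays close to it.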

Our most promising finding is that some tasks in the proposed benchmark are still unsolvable with the QD algorithms we have today. This leaves room for improving QD algorithms on this set of benchmarks and offers a good goal for researchers in the community.

What is next?

Setting up this task suite opens up many research directions. We now have a fast, replicable, easy-to-set-up benchmark, as well as a set of baselines and metrics. This constitutes a powerful framework to create, test and develop new QD approaches that tackle common issues such as those raised in our work. Using this new framework, our lab will continue to focus on improving QD algorithms and extending the range of their applications!

References

[1] Chatzilygeroudis, K., Cully, A., Vassiliades, V., & Mouret, J. B. (2021). Quality-Diversity Optimization: a novel branch of stochastic optimization. In Black Box Optimization, Machine Learning, and No-Free Lunch Theorems (pp. 109-135). Springer, Cham.

[2] Arulkumaran, K., Deisenroth, M. P., Brundage, M., & Bharath, A. A. (2017). Deep reinforcement learning: A brief survey. IEEE Signal Processing Magazine, 34(6), 26-38.

[3] Lehman, J., & Miikkulainen, R. (2013). Neuroevolution. Scholarpedia, 8(6), 30977.

[4] Lim, B., Allard, M., Grillotti, L., & Cully, A. (2022). Accelerated Quality-Diversity for Robotics through Massive Parallelism. arXiv preprint arXiv:2202.01258.

[5] Flageat, M., & Cully, A. (2020). Fast and stable MAP-Elites in noisy domains using deep grids. arXiv preprint arXiv:2006.14253.

Read the research in full

Benchmarking Quality-Diversity Algorithms on Neuroevolution for Reinforcement Learning, Manon Flageat, Bryan Lim, Luca Grillotti, Maxime Allard, Simón C. Smith, Antoine Cully.