AIhub.org

Interview with Katharina Weitz and Chi Tai Dang: Do we need explainable AI in companies?

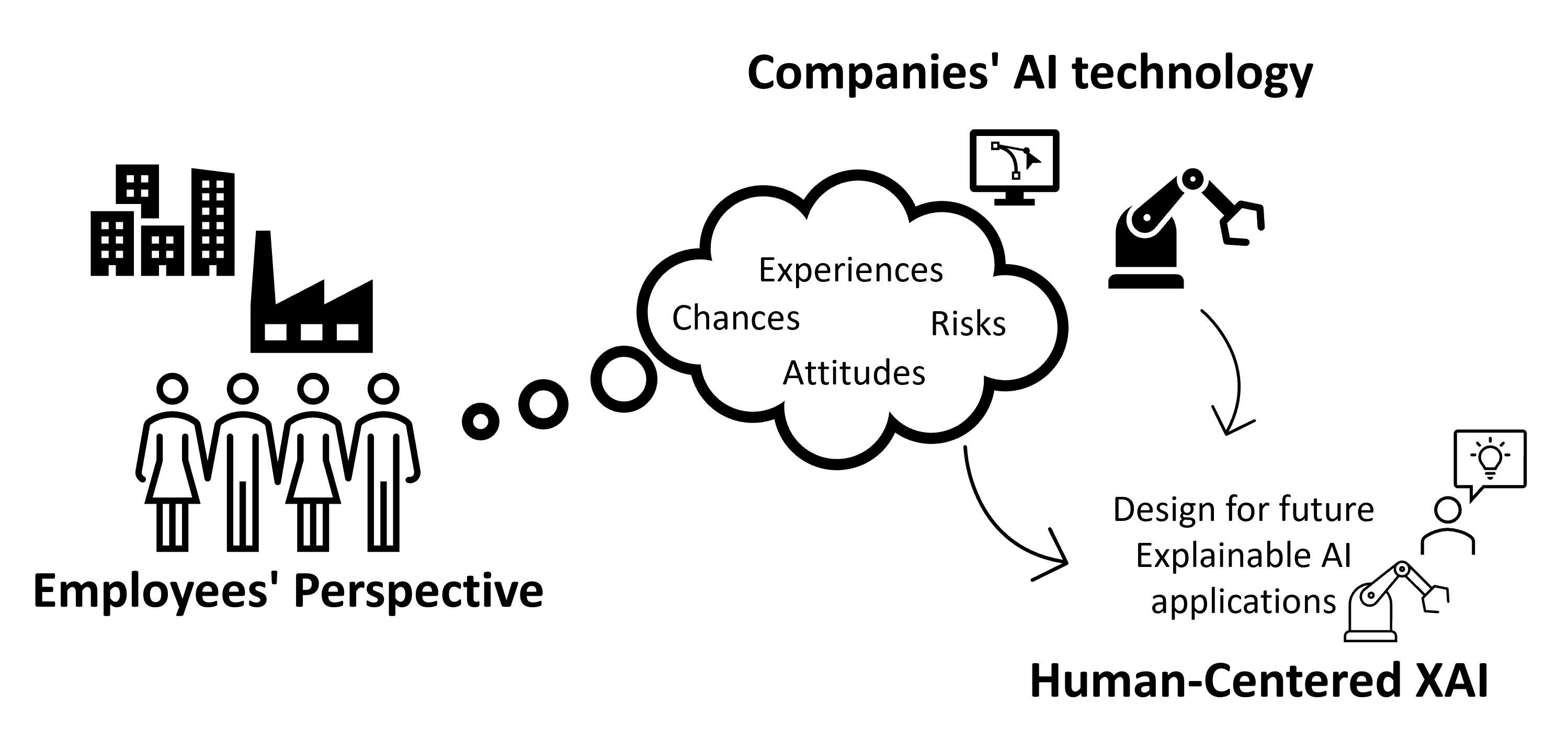

In their project report paper Do We Need Explainable AI in Companies? Investigation of Challenges, Expectations, and Chances from Employees’ Perspective, Katharina Weitz, Chi Tai Dang and Elisabeth André investigated employees’ specific needs and attitudes towards AI. In this interview, Katharina and Chi Tai tell us more about this work.

What is the topic of the research in your paper, and why is it an interesting area for study?

Our paper examines the current state of AI use in companies. It is particularly important to us to capture the perspective of employees. We study human-centered AI. This research area emphasises that AI systems must be developed with human stakeholders in mind. This means, for example, that AI systems should be designed so that humans can understand and trust them appropriately. Designing such understandable systems is part of explainable AI (XAI) research. In the coming years, AI will play an essential role in many companies, making studies about XAI relevant not only for the research community but also for companies to use AI in their applications successfully. Our study put employees (i.e., company specialists and managers) at the centre of future AI development by investigating their needs and attitudes towards AI and XAI. What are their impressions of the use of AI in companies? What do they see critically, and what do they see positively? Do they feel up to dealing with AI? Do they understand the AI systems they use?

In your paper, you investigated employees’ attitudes towards AI. Could you explain your methodology?

The methodology we used to assess employee attitudes was: we simply asked them. However, we know that employees in companies have many tasks in their daily business, and answering questions is time-consuming. That’s why we developed an online questionnaire that doesn’t take too much time but still provides enriching insights. The questionnaire included general questions about the company they work in (e.g., size & field of the company, employees’ position), personal information (e.g., age, gender), and AI-related questions regarding the company (e.g., opportunities & risks of AI in their company) and from their personal perspective (e.g., personal attitude towards AI and XAI). Overall, we asked 52 questions. The study was supported by acatech (National Academy of Science and Engineering), which provides independent advice for society and policymakers on topics like digital & self-learning, mobility and technology & society. We drew on acatech’s extensive corporate network to reach company employees. As the client of the study, they supported us in distributing the link to the companies.

What were your main findings?

The survey was part of a project we conducted on behalf of acatech in collaboration with the University of Stuttgart and Fraunhofer IAO. We pursued various research questions, including the change in employees’ work and the training opportunities on AI in companies.

In our paper, we focus on the needs of employees regarding AI and XAI. We looked at employee responses on a personal level (e.g., How do employees rate their XAI and AI knowledge?) and on a company level (e.g., Do companies already use AI technology, and if so, which applications exist in companies?). Our results show that employees see AI as an opportunity to improve productivity and flexibility (for example, through better adaptation to customer requirements). They see financial aspects as risks of AI use (AI solutions have to be adapted to the company and its work processes, and many hours of work and substantial investment in hardware and infrastructure are required to get from the initial idea to a functioning, operational AI system). Employees rated their knowledge of XAI and AI as very good, a result that we do not find in other areas (e.g., education or healthcare). Also, in evaluating the AI technologies used in the company, employees said they found them useful, reliable and understandable. We also found a correlation between employees’ attitudes toward AI and the evaluation of AI technology in the company.

Besides our paper focusing on XAI, the complete project report presents insights into the ongoing discussion about the extended competence requirements for using AI in companies. The report is freely available here.

Do you have any recommendations for companies wishing to deploy AI?

Our paper is only a first step towards designing human-centered AI systems in companies. We found that employees have a clear picture of AI technologies and have a fairly positive view of XAI. XAI could be a potential driver to foster successful usage of AI by providing transparent and comprehensible insights into AI technologies. Supportive employees on the management level are valuable catalysts.

- For companies: How can they train employees to understand and adequately handle AI technology?

- For research: How can we develop AI in such a way that it provides explanations that support humans in their tasks within the work process?

What further work are you planning in this area?

Our paper describes the current state of AI in companies. What must naturally follow this research is the development of human-centric AI systems. Here, we focus primarily on researching XAI. In our paper “An Error Occurred!” – Trust Repair With Virtual Robot Using Levels of Mistake Explanation, for example, we investigate the influence of industrial robots’ explanations on user trust. The topic of robot errors and how to deal with them is an enormously important issue for manufacturing companies. Employees who are interrupted in their work by constant errors from robots and are degraded to “problem solvers” for the robot can no longer work without disruption and with concentration, and they are also frustrated and annoyed by such technical systems. This leads to a loss of confidence in these systems and, in the worst case, to resignation.

About the authors

Katharina Weitz received an MSc in Psychology and Computing in the Humanities (Applied Computer Science) at the University of Bamberg, Germany. At the Lab for Human-Centered AI at the University of Augsburg, she investigates the influence of the explainability of AI systems on people’s trust and mental models. In addition to her research activities, she communicates scientific findings to the general public in books, lectures, workshops, and exhibitions.

Dr Chi Tai Dang received an MSc in Computer Science at the University of Ulm, Germany and a PhD in Computer Science / HCI at the University of Augsburg, Germany. As a postdoctoral researcher at the Lab for Human-Centered AI, he explores IoT technologies and smart homes / environments, i.e. intelligent adaptive and learning systems based on machine learning and AI.