AIhub.org

#AAAI2023 invited talk: Manuela Veloso on experience-based insights from AI in robotics and AI in finance

Manuela Veloso won the 2023 Robert S. Engelmore Memorial Award, which recognises outstanding contributions to automated planning, machine learning and robotics, their application to real-world problems and extensive service to the AI community. The winner of this prize is invited to give a lecture at the annual conference on Innovative Applications of Artificial Intelligence (IAAI), which is co-located with the AAAI Conference on Artificial Intelligence and this year took place from 7-14 February. Manuela’s talk focussed on her research on autonomous robots, and how she has transferred expertise and knowledge from that domain to the field of AI in finance. In both cases, humans interact with AI systems to jointly solve complex end-to-end problems.

Manuela began her research career investigating autonomous robots. Over the years, this has included work on service robots and, through the RoboCup competition, on soccer-playing robots. In 2018, Manuela made the move from academia (where she had been Head of the Machine Learning Department at Carnegie Mellon University) to JP Morgan Chase (the largest bank in the USA), to head up a new AI research department. The finance world was a completely unknown environment for her, and, after a life in academia, curiosity was one of the reasons for making the move.

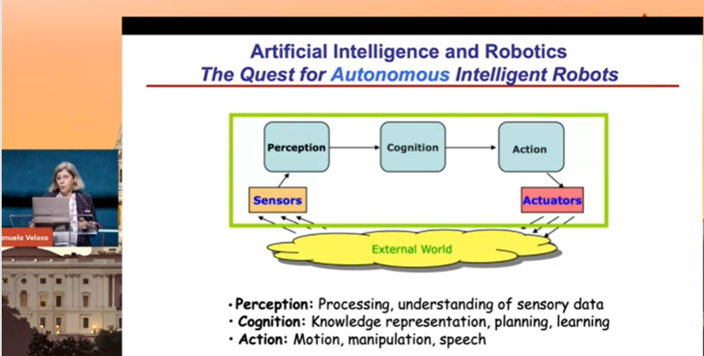

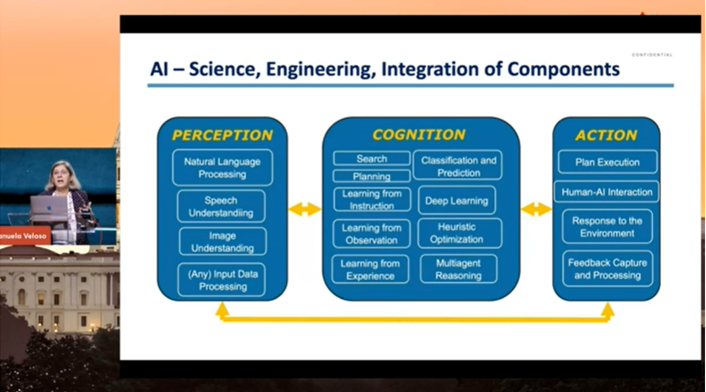

For her entire career, Manuela has been driven by the goal of connecting three critical pillars of AI research (perception, cognition, and action) to solve real-world problems. In autonomous robots, these three aspects are manifested in the physical hardware through sensors and actuators. At JP Morgan, Manuela returned to these “perception, cognition, action” principles; this time, however, she looked at the problem as one of integrating the different components of AI.

Perception, cognition, and action for robotics.

Perception, cognition, and action applied to finance.

During her talk, Manuela detailed a number of interesting projects that she has been involved in during her career, and just a few of those are highlighted here.

RoboCup

RoboCup is an international project to promote the development of autonomous robots and AI methods through the game of soccer. Manuela was one of the founders, and the first competition was held back in 1997. In those days, the set-up consisted of a table-top pitch and robots that were, by today’s standards, small and basic. Fast-forward to the RoboCup competitions of today and it is amazing to see the progress. Manuela used this example to illustrate the point that grand advancements don’t happen immediately; it takes time to develop concepts and ideas.

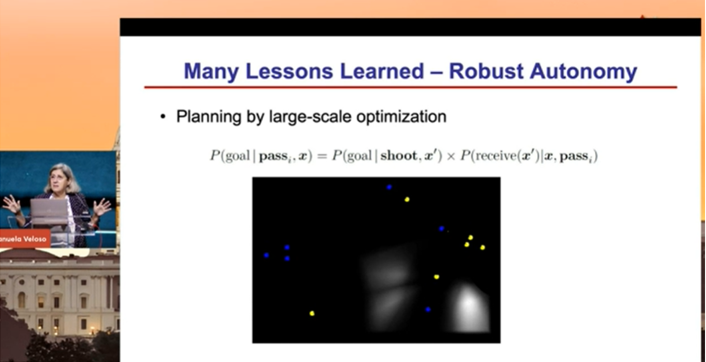

One of the aspects of RoboCup that Manuela focussed on was the planning algorithms used by the teams. In the Small Size League there is an overhead camera which sends the positions of all of the robots to a shared vision system. Each team then uses this information in its planning algorithm. You can see an example of planning in the figure below, which is represented as a probability map. Taking into account the positions of team-mates and opponents, the algorithm provides the probability of success (i.e. the likelihood of scoring a goal) if the ball is passed to a particular position on the pitch. The more intense the white areas, the higher the probability of success.

As the RoboCup competition progressed, teams started to block potential receivers of the ball so that the robot in possession couldn’t directly find a teammate. To overcome this, Manuela and her team changed their algorithm so that the robot in possession passed into a space that the receiver could move to and collect the ball.
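To make the idea of a pass-probability map more concrete, here is a minimal Python sketch. It is not the team's algorithm: the scoring heuristic, the pitch dimensions, the decay constants, and the names `pass_success`, `probability_map` and `best_pass_target` are all illustrative assumptions. It scores every grid cell on the pitch, so the best target can be an open space that a team-mate can reach rather than a team-mate's current position.

```python
import math

# Hypothetical pitch size in metres and grid resolution (assumptions).
PITCH_W, PITCH_H, CELL = 9.0, 6.0, 0.5


def _dist_to_segment(p, a, b):
    """Euclidean distance from point p to the line segment a-b."""
    (ax, ay), (bx, by), (px, py) = a, b, p
    dx, dy = bx - ax, by - ay
    length_sq = dx * dx + dy * dy
    if length_sq == 0.0:
        return math.dist(p, a)
    # Project p onto the segment and clamp to its end points.
    t = max(0.0, min(1.0, ((px - ax) * dx + (py - ay) * dy) / length_sq))
    return math.dist(p, (ax + t * dx, ay + t * dy))


def pass_success(target, ball, teammates, opponents, goal):
    """Toy estimate in [0, 1] that passing to `target` leads to a goal.

    Assumed heuristic: the product of three terms, each in [0, 1].
    """
    clearance = min(
        (_dist_to_segment(o, ball, target) for o in opponents),
        default=float("inf"),
    )
    reach = min((math.dist(t, target) for t in teammates), default=float("inf"))
    p_clear = 1.0 - math.exp(-clearance)   # blocked passing lanes score low
    p_reach = math.exp(-reach)             # a team-mate must be able to get there
    p_shoot = math.exp(-math.dist(target, goal) / PITCH_W)  # nearer the goal is better
    return p_clear * p_reach * p_shoot


def probability_map(ball, teammates, opponents, goal):
    """Evaluate pass_success at the centre of every grid cell."""
    cols, rows = int(PITCH_W / CELL), int(PITCH_H / CELL)
    return [
        [
            pass_success(((c + 0.5) * CELL, (r + 0.5) * CELL),
                         ball, teammates, opponents, goal)
            for c in range(cols)
        ]
        for r in range(rows)
    ]


def best_pass_target(grid):
    """Return the (x, y) centre of the highest-scoring cell."""
    r, c = max(
        ((r, c) for r in range(len(grid)) for c in range(len(grid[0]))),
        key=lambda rc: grid[rc[0]][rc[1]],
    )
    return ((c + 0.5) * CELL, (r + 0.5) * CELL)
```

Rendering the returned grid as a greyscale image, with brighter pixels for higher values, would give a picture in the spirit of the map described above.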

CoBots

Manuela spoke about work conducted with several PhD students during her time at Carnegie Mellon University. This concerned an autonomous collaborative robot (CoBot) that, since 2010, has clocked up more than 1,200 km traversing the corridors at the University. Over the years, the robot has seen countless improvements. It became so well localised that it slowed down when approaching a transition from one floor surface to another, for example, when moving from wood to carpet.

However, one of the limitations of the robot is that it doesn’t have arms. Therefore, it has no mechanism to, for example, press a lift button to get to another floor in the building. To overcome this problem, Manuela had the idea to get the robot to ask a passing human for help. The team also programmed the robot to send an email to ask for help if it got stuck.

Manuela made the point that a robot (or AI system) is capable of a limited number of skills. One of the challenges in human-AI interaction lies in mapping the request of a human to a particular skill of the robot (or system). As an example, Manuela highlighted the task of asking the robot to go to her office. As humans we might ask this in a number of ways (for example “Go to Manuela’s office”, “Take me to Professor Veloso’s office”, “I’d like to go to the office of the Department Head”), and we need algorithms that can map that variation in human language for the same request to the specific skills of movement and navigation.
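The mapping from varied phrasings to a single skill can be illustrated with a small Python sketch. This is a deliberately simple pattern-matching approach chosen for illustration, not the method used on CoBot; the skill name `go_to`, the `ROOM_OF` directory and the room identifier `office-1` are hypothetical.

```python
import re

# Hypothetical directory mapping the ways a person can be named to a room.
# The room identifier "office-1" is a made-up placeholder.
ROOM_OF = {
    "manuela": "office-1",
    "professor veloso": "office-1",
    "department head": "office-1",
}

# Several surface patterns, all resolving to the single skill "go_to".
GO_TO_PATTERNS = [
    re.compile(r"(?:go|take me) to (?P<who>.+?)'s office"),
    re.compile(r"go to the office of (?:the )?(?P<who>.+)"),
]


def parse_request(utterance):
    """Return (skill, argument) for a recognised request, else None."""
    # Normalise case, curly apostrophes and trailing punctuation.
    text = utterance.lower().replace("\u2019", "'").strip(" .!?")
    for pattern in GO_TO_PATTERNS:
        match = pattern.search(text)
        if match and match["who"] in ROOM_OF:
            return ("go_to", ROOM_OF[match["who"]])
    return None
```

All three example phrasings from the talk resolve to the same `("go_to", "office-1")` pair, while an unrecognised request returns `None`, at which point a real system might ask the human to rephrase.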

Function analysis – “Buy, don’t buy”

When Manuela arrived at JP Morgan Chase, she took a tour of the trading floor. It was much as portrayed in films, with people surrounded by computer screens plastered with graphs, buying and selling stocks. She realised that the traders were not using any maths to make the decisions; they were simply looking at graphs of time-series data. As soon as the tour finished, she headed back to her team and suggested they take the images of the time-series data, train a neural network, and use it to classify images as “buy” or “don’t buy”. They were able to reproduce with 95% accuracy what humans had done on historical data.

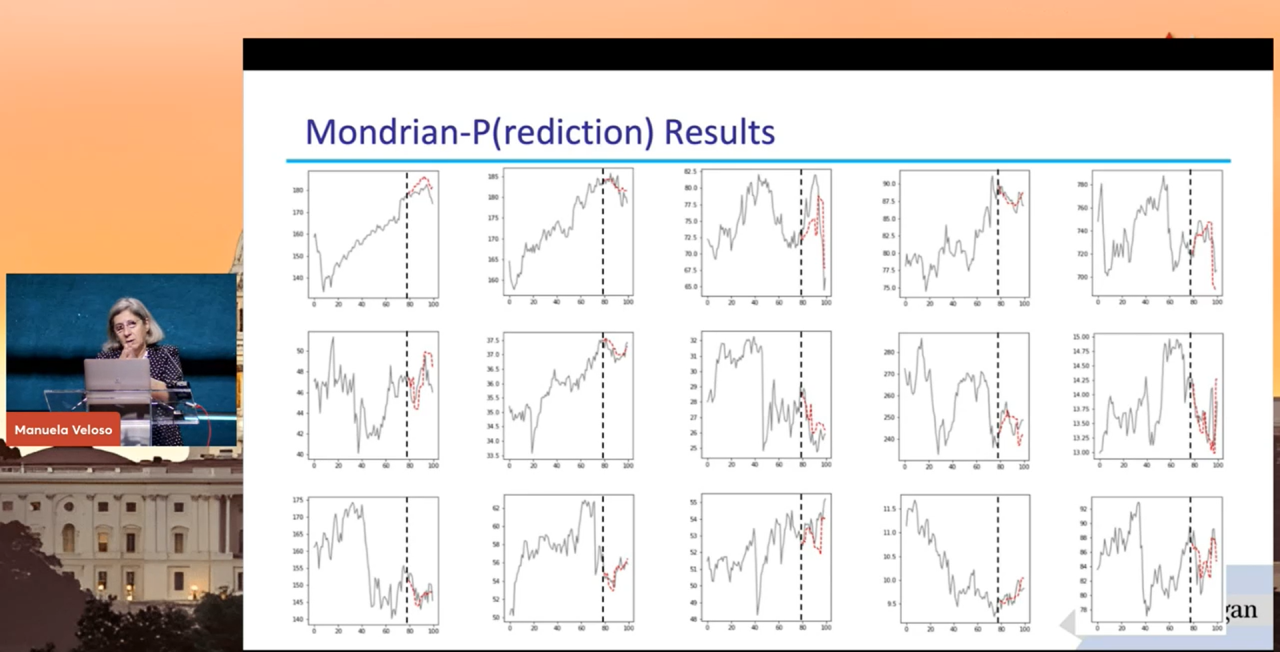

The next step in the project concerned predicting the next points in these time-series data. The team trained a neural network on millions of images of time-series. To test it, they gave the network the first 80 steps of a 100-step series, and as output it predicted the next 20 steps. This was all based on the image alone, i.e. filling in pixels, rather than being based on any governing formulas of the time-series themselves. You can see from the image below that these predictions were, on average, very close to the actual time-series. At the moment, the team are able to make predictions dynamically, using a sliding “window” which predicts the next steps continuously as the series progresses. This was a novel way of looking at the problem, as nobody had ever considered treating these time-series plots as images before.
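The data preparation implied by this set-up can be sketched in a few lines of Python. The sketch covers only two steps, turning a series into an image and cutting a long series into overlapping 80-step inputs with 20-step targets; it does not include a neural network. The function names `rasterise` and `sliding_windows`, the image height and the one-pixel-per-step encoding are assumptions made for illustration, not details from the talk.

```python
def rasterise(series, height=32):
    """Draw a series as a binary image: one column per step, one lit pixel per column."""
    lo, hi = min(series), max(series)
    span = (hi - lo) or 1.0  # avoid division by zero for a flat series
    image = [[0] * len(series) for _ in range(height)]
    for col, value in enumerate(series):
        # Row 0 is the top of the image, so larger values are drawn higher up.
        row = round((hi - value) / span * (height - 1))
        image[row][col] = 1
    return image


def sliding_windows(series, context=80, horizon=20, stride=1):
    """Yield (context, target) pairs as the window slides along the series."""
    total = context + horizon
    for start in range(0, len(series) - total + 1, stride):
        yield (
            series[start:start + context],
            series[start + context:start + total],
        )
```

With the defaults, each pair matches the test described above: the first 80 steps are rasterised and given to the model, and the remaining 20 steps are what it is asked to fill in.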

Time-series plots, with the last 20 steps predicted by the neural network algorithm in red, and the actual data in blue.

Generating PowerPoint slides

Manuela stressed that AI in finance is not all about trading on the markets. It’s about using AI in many domains, including making things easier for employees, data security, fighting financial crime, and more.

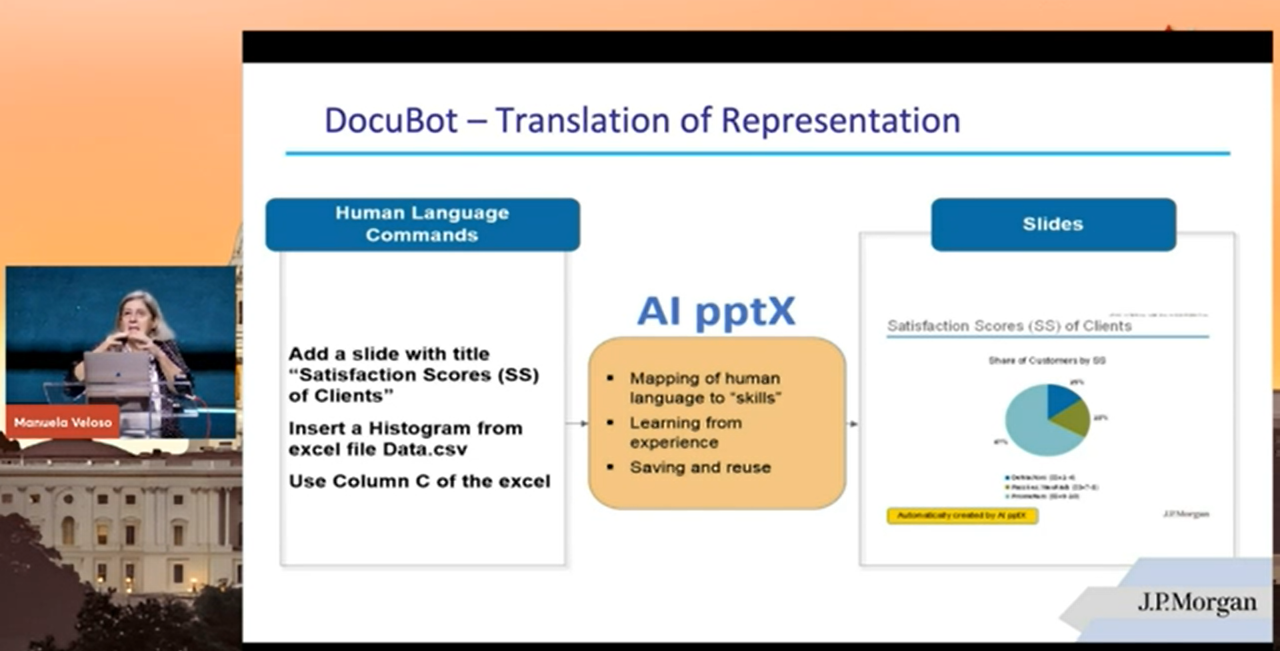

For this example, Manuela returned to the concept of mapping human language requests to an AI skill, as mentioned above for CoBots. This time the skill was automatic generation of PowerPoint slides, which happened through language requests. A schematic for this process is shown in the figure below.

Schematic to show the process of generating PowerPoint slides from language requests.

The system that Manuela and her colleagues developed was able to generate decks of slides from a written request from an employee. It pulled data from different file types and compiled everything within document templates with all titles, text and figures completed. One extra feature was the ability for the user to make corrections, again via written requests. For example, the user could say “change the colour of the figure title to black on all slides”, and the system would make the change. In an industry that is awash with slide decks, this tool could save employees precious time.
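As a rough illustration of how a written correction could become a structured edit, here is a minimal Python sketch. It is not the system described in the talk: the regular expression, the dictionary-based slide representation and the names `parse_edit` and `apply_edit` are hypothetical, and a real system would need far more flexible language understanding.

```python
import re

# Assumed request grammar:
#   "change the <property> of the <element> to <value> on <scope>"
EDIT_PATTERN = re.compile(
    r"change the (?P<prop>colou?r|font|size) of the (?P<element>[\w ]+?) "
    r"to (?P<value>[\w ]+?) on (?P<scope>all slides|slide (?P<n>\d+))$"
)
# Store both spellings under one property name.
PROPERTY_ALIASES = {"colour": "color"}


def parse_edit(request):
    """Turn a written correction into a structured edit, or None if not understood."""
    match = EDIT_PATTERN.search(request.strip().rstrip(".").lower())
    if match is None:
        return None
    prop = match["prop"]
    return {
        "property": PROPERTY_ALIASES.get(prop, prop),
        "element": match["element"],
        "value": match["value"],
        # None means "every slide"; otherwise a 1-based slide number.
        "slide": int(match["n"]) if match["n"] else None,
    }


def apply_edit(deck, edit):
    """Apply an edit in place to a deck given as a list of {element: properties} dicts."""
    for index, slide in enumerate(deck, start=1):
        if edit["slide"] in (None, index) and edit["element"] in slide:
            slide[edit["element"]][edit["property"]] = edit["value"]
    return deck
```

For the example request in the text, `parse_edit` yields an edit with property `color`, element `figure title`, value `black` and no slide restriction, which `apply_edit` then applies to every slide that has a figure title.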

Humans and AI

To conclude, Manuela outlined her vision for future research, namely AI-first architecture. For every task or problem posed, you first ask whether AI could be used to carry out that task, either fully or partially. If so, you develop algorithms to that end. If not, then a human continues to do that task. Currently, AI systems are generally used for problems of low complexity. As the algorithms improve, and research progresses, AI will gradually take on more tasks, and tasks of increasing complexity.
