AIhub.org

Improving the understanding of metal-organic frameworks

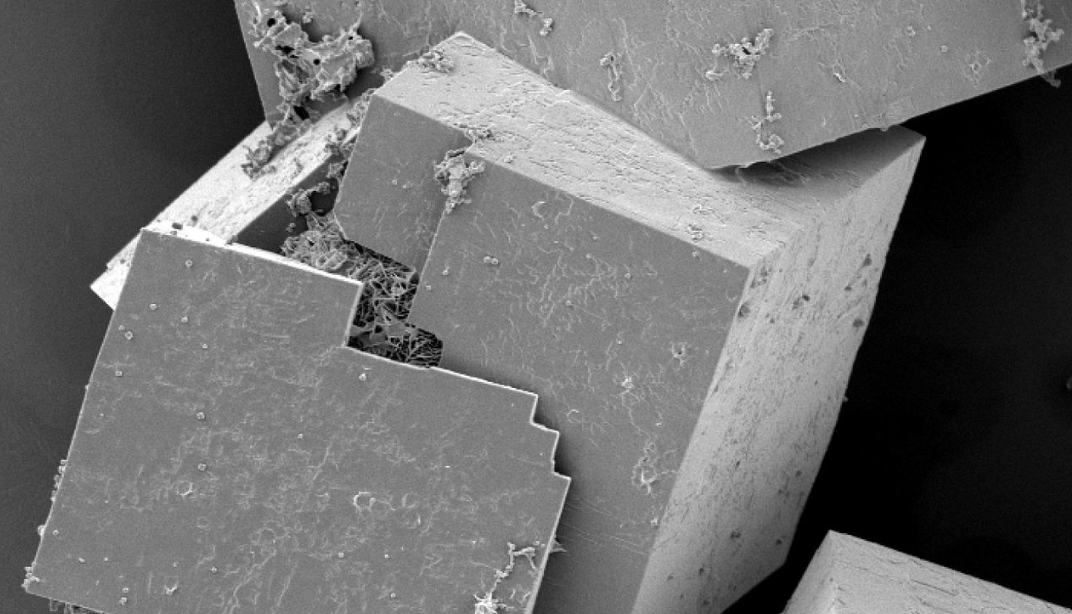

Scanning electron microscope image of MOF crystals. Image credit: CSIRO. Reproduced under a CC BY 3.0 licence.

By Nik Papageorgiou

How does an iPhone predict the next word you’re going to type in your messages? The technology behind this, which is also at the core of many AI applications, is called a transformer: a deep-learning model that processes sequences of data in parallel and can be fine-tuned for specific tasks.
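The “in parallel” part can be made concrete with scaled dot-product self-attention, the core operation of the transformer architecture. The sketch below is a minimal, generic version in plain Python, assuming tokens are already represented as small numeric vectors and using them directly as queries, keys and values (real transformers first apply learned projections and use several attention heads). It illustrates the general technique only and is not MOFTransformer’s code.

```python
import math

def softmax(scores):
    """Numerically stable softmax: turns raw scores into weights that sum to 1."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(queries, keys, values):
    """Scaled dot-product attention over a whole sequence.

    Each output row depends only on the inputs, never on another output row,
    so all positions can be computed at the same time (in practice as a
    single matrix multiplication rather than this Python loop).
    """
    d = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # Output = attention-weighted average of the value vectors.
        outputs.append([sum(w * v[j] for w, v in zip(weights, values))
                        for j in range(len(values[0]))])
    return outputs

# Three toy token vectors, used directly as queries, keys and values
# (a simplification: real models apply learned projections first).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
for row in self_attention(tokens, tokens, tokens):
    print(row)
```

Unlike a recurrent network, no position has to wait for the previous one to be processed, which is what lets transformers train efficiently on long sequences.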

Now, researchers at EPFL and KAIST have created a transformer for metal-organic frameworks (MOFs), a class of porous crystalline materials whose potential applications include energy storage and gas separation. MOFs are assembled from thousands of tunable molecular building blocks (metal nodes and organic linkers), so the number of MOFs that could in principle be synthesised is vast. Finding the material with the characteristics you are looking for in such a large space is a challenge, and one option is to use machine learning to search the structure-property space.

The “MOFTransformer” developed by the researchers is based on the transformer architecture that forms the core of popular language models such as GPT-3, the predecessor to ChatGPT. The central idea behind these models is that they are pre-trained on a large amount of text, so that when we start typing on an iPhone, for example, they can autocomplete the most likely next word.

“We wanted to explore this idea for MOFs, but instead of giving a word suggestion, we wanted to have it suggest a property,” says Professor Berend Smit, who led the EPFL side of the project. “We pre-trained the MOFTransformer with a million hypothetical MOFs to learn their essential characteristics, which we represented as a sentence. The model was then trained to complete these sentences to give the MOF’s correct characteristics.”
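The autocomplete analogy can be illustrated with a deliberately tiny model. The sketch below is a bigram counter in plain Python: it is not a transformer, and not how MOFTransformer or any phone keyboard works. It “pre-trains” by counting which word follows which in a small made-up corpus, then suggests the most frequent follower. The function names `pretrain` and `suggest_next` are hypothetical.

```python
from collections import Counter, defaultdict

def pretrain(corpus):
    """Count, for every word, which words follow it in the training sentences."""
    following = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            following[current][nxt] += 1
    return following

def suggest_next(model, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    counts = model.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]

# A made-up toy corpus, used only for illustration.
corpus = ["see you soon", "see you tomorrow", "talk to you soon"]
model = pretrain(corpus)
print(suggest_next(model, "you"))  # prints "soon"
```

A transformer learns far richer context than a single preceding word, but the two-stage pattern is the same: learn general statistics from a large corpus first, then complete a sequence with the most likely continuation. The MOFTransformer applies this idea to MOFs, completing their “sentences” with properties rather than words.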

The researchers then fine-tuned the MOFTransformer for specific prediction tasks, such as the hydrogen storage capacity of a MOF, the diffusion coefficient of hydrogen, and the band gap of the MOF (an “energy barrier” that determines how easily electrons can move through a material).

The approach showed that the MOFTransformer could deliver results using far less training data than conventional machine-learning methods. “Because of the pre-training, the MOFTransformer knows already many of the general properties of MOFs; and because of this knowledge, we need less data to train for another property,” says Smit. Moreover, the same model could be used for all properties, whereas in conventional machine learning a separate model must be developed for each application.
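The data-efficiency argument follows the usual transfer-learning pattern: one shared, pre-trained model turns each input into a feature vector, and each new property only needs a small “head” fitted on top of it. The plain-Python sketch below assumes a hypothetical `pretrained_encoder` (here just a fixed function of two made-up descriptors, `void_fraction` and `pore_diameter`) and fits a linear head per property by least squares on a handful of synthetic examples. It freezes the encoder for simplicity; fine-tuning a real model such as MOFTransformer may also update the pre-trained weights, and its encoder is a deep network rather than this stand-in.

```python
def pretrained_encoder(mof):
    """Hypothetical stand-in for a frozen, pre-trained model shared by all tasks.

    Maps a MOF description to a feature vector; the trailing 1.0 acts as a bias.
    """
    return [mof["void_fraction"], mof["pore_diameter"], 1.0]

def solve(a, b):
    """Solve the square linear system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [bi] for row, bi in zip(a, b)]  # augmented matrix [a | b]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x

def fit_head(mofs, targets):
    """Fit a linear head on the frozen features via the normal equations."""
    feats = [pretrained_encoder(mof) for mof in mofs]
    k = len(feats[0])
    xtx = [[sum(f[i] * f[j] for f in feats) for j in range(k)] for i in range(k)]
    xty = [sum(f[i] * y for f, y in zip(feats, targets)) for i in range(k)]
    return solve(xtx, xty)

def predict(head, mof):
    """Apply a fitted head to the shared encoder's features."""
    return sum(w * f for w, f in zip(head, pretrained_encoder(mof)))

# Four made-up MOFs and two synthetic properties, for illustration only.
mofs = [
    {"void_fraction": 0.3, "pore_diameter": 5.0},
    {"void_fraction": 0.5, "pore_diameter": 7.0},
    {"void_fraction": 0.7, "pore_diameter": 6.0},
    {"void_fraction": 0.4, "pore_diameter": 9.0},
]
property_a = [2.0 * mof["void_fraction"] + 0.5 * mof["pore_diameter"] + 1.0 for mof in mofs]
property_b = [-1.0 * mof["void_fraction"] + 0.1 * mof["pore_diameter"] + 3.0 for mof in mofs]

# One shared encoder, one small head per property.
head_a = fit_head(mofs, property_a)
head_b = fit_head(mofs, property_b)
print(head_a, head_b)
print(predict(head_a, {"void_fraction": 0.6, "pore_diameter": 8.0}))
```

Because the encoder is shared, adding another property means fitting one more three-number head from a few labelled examples rather than training a new model from scratch, which mirrors the “one model for all properties” point above.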

The researchers hope that the MOFTransformer will pave the way for the development of new MOFs with improved properties for hydrogen storage and other applications.

The MOFTransformer library is available here.

Read the article: A Multi-modal Pre-training Transformer for Universal Transfer Learning in Metal-Organic Frameworks.