AIhub.org

Neurosymbolic AI for graphs: a crime scene analogy

Have you ever watched a mystery movie and noticed a critical link before the characters did? Maybe this critical link even helped you solve the mystery before the end of the story. If so, think about how you made that connection: did you notice something that “just didn’t add up”? Or maybe one character’s behavior seemed suspicious from the beginning. Whether you made a logical deduction or a prediction based on behavioral patterns, you performed link prediction, a common task that researchers carry out on graph structures.

In our recent survey paper, we focus specifically on methods which operate on graph structures. Graphs can be visualized as a series of nodes, which represent objects or entities, connected by edges, which represent the relationships between them. To better understand, let’s use an analogy: imagine that a crime was committed, and an investigator is trying to determine who was responsible. To solve this mystery, the investigator might create one of those photo-covered corkboards that we see in the movies, such as the one shown below. Essentially, each item is a suspect or a piece of evidence, and the investigator connects the items using strings to represent known interactions or relationships between them.

Image by macrovector on Freepik

The corkboard is like a graph structure. In this case, the nodes are the items, and the strings connecting the photos are edges. While the investigator knows about some of the connections between suspects and pieces of evidence, she wants to figure out who or what else is likely connected. Oftentimes, we do not know all of the edges in a graph, and we need a method to predict whether an edge, or a link, exists between two nodes. This is called link prediction.
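As a rough illustration, the corkboard can be written down as a set of nodes and a set of known edges, and the link prediction problem then becomes a question about every pair of nodes that is not yet connected. This is only a minimal sketch: the item names and the helper `candidate_links` are invented for this example and do not come from the paper.

```python
from itertools import combinations

# Hypothetical corkboard: every item name below is invented for illustration.
nodes = {"Suspect A", "Suspect B", "Suspect C", "Knife", "Getaway car"}

# Each string on the corkboard is an undirected edge between two items.
known_edges = {
    frozenset({"Suspect A", "Suspect B"}),
    frozenset({"Suspect B", "Suspect C"}),
    frozenset({"Suspect C", "Knife"}),
    frozenset({"Suspect A", "Getaway car"}),
}

def candidate_links(nodes, known_edges):
    """Return every pair of nodes that is not yet joined by a known edge."""
    return [
        frozenset(pair)
        for pair in combinations(sorted(nodes), 2)
        if frozenset(pair) not in known_edges
    ]

# These unconnected pairs are what a link prediction method has to judge.
candidates = candidate_links(nodes, known_edges)
```

A link prediction method, whatever its flavor, assigns each of these candidate pairs a verdict or a score.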

There are various ways to accomplish this. Some methods use logic: for example, if Suspect A claimed to be with Suspect B the evening of the crime, and Suspect B met with Suspect C, then Suspect A and Suspect C are also likely to have met that evening. We call those methods symbolic. Other methods use a machine learning algorithm to discover patterns across subjects based on previous cases. Based on those patterns, these methods, which we call neural or deep learning methods, predict how likely certain nodes are to be connected. However, there is a tradeoff between these two categories of link prediction. While symbolic methods are completely interpretable to a human, they tend not to perform well on large datasets. In contrast, the predictions of neural methods are not easily interpretable, but the methods scale and perform well on large graphs.
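The contrast can be sketched in a few lines. The first function below applies the "A met B, B met C, so A probably met C" rule directly, so every prediction comes with a readable reason. The second function is a deliberately simple stand-in for a learned model: it scores a pair by the Jaccard overlap of their neighbors, a classic hand-written heuristic rather than a neural network. The suspects, the `met` edges, and both function names are assumptions made for this example.

```python
from itertools import combinations

# Hypothetical "met with" edges between suspects A, B, C and D.
met = {("A", "B"), ("B", "C"), ("C", "D")}

def neighbors(edges):
    """Build an undirected adjacency map from a set of (u, v) pairs."""
    adj = {}
    for u, v in edges:
        adj.setdefault(u, set()).add(v)
        adj.setdefault(v, set()).add(u)
    return adj

def symbolic_predict(edges):
    """Apply one rule once: met(x, y) and met(y, z) -> met(x, z)."""
    adj = neighbors(edges)
    known = {frozenset(e) for e in edges}
    inferred = set()
    for shared in adj.values():
        # Any two people who met the same person are predicted to have met.
        for x, z in combinations(sorted(shared), 2):
            if frozenset({x, z}) not in known:
                inferred.add(frozenset({x, z}))
    return inferred

def pattern_score(edges, x, z):
    """Stand-in for a learned scorer: Jaccard overlap of the two neighborhoods."""
    adj = neighbors(edges)
    nx, nz = adj.get(x, set()), adj.get(z, set())
    union = nx | nz
    return len(nx & nz) / len(union) if union else 0.0

rule_based = symbolic_predict(met)       # a set of predicted pairs
score_ac = pattern_score(met, "A", "C")  # a number between 0 and 1
```

The rule returns a yes-or-no answer that can be traced back to the rule itself, while the scorer returns a graded value with no explanation attached, which mirrors the interpretability tradeoff described above.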

We studied novel approaches, called neurosymbolic approaches, which aim to combine the complementary strengths of these two categories.

Imagine two people are asked to look at the corkboard: a detective and a scientist. The detective is best at discovering patterns and interrogating suspects for details, such as lie indicators and suspicious behavior, so he is more like our neural approaches. The scientist is more knowledgeable about forensic science, and she thinks very logically. She will represent our symbolic methods. There are many different ways these two could work together to find new connections on the corkboard.

The main contribution of our paper is a taxonomy of neurosymbolic approaches for reasoning on graph structures. We classify the methods into three categories:

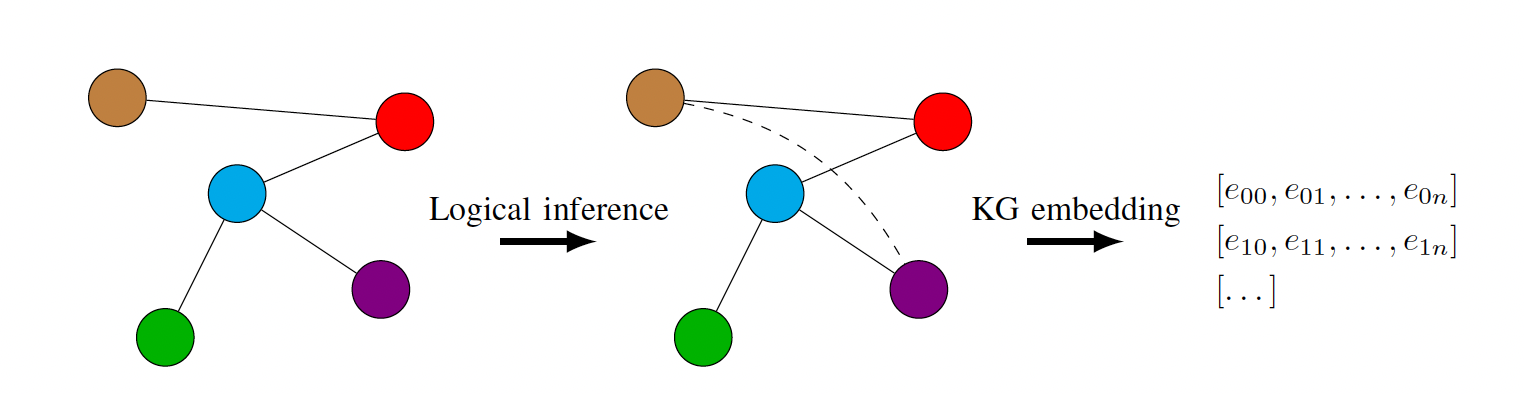

- Logically-informed embedding approaches. These are the simplest approaches, in which logical inference updates or augments the graph, then the neural method operates upon the augmented graph. For example, the scientist might analyze the forensic evidence and conclude that, based on known genetic markers and DNA evidence, two of the suspects are siblings. The detective, driving the investigation, will add this information to his knowledge base and continue doing his job.

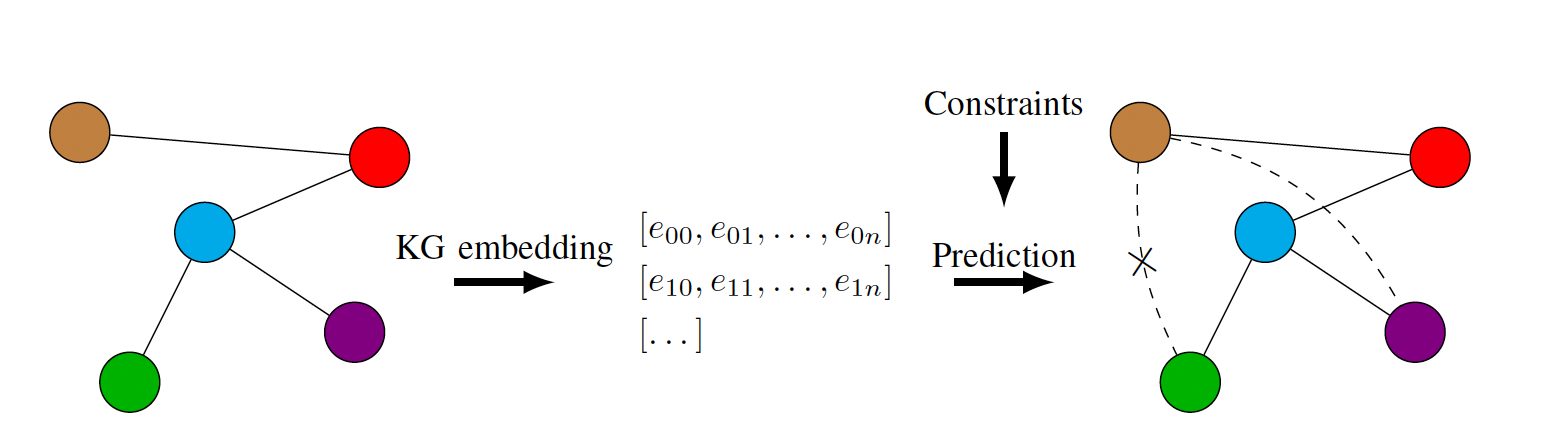

- Learning with logical constraints. In this case, the neural method is restricted by logical rules so that the types of predictions made are limited. Any progress made by the detective must comply with the scientist’s logical understanding of the case. Perhaps, for example, the detective narrows his suspects down to three men. However, the scientist explains to the detective that the footprints they found were smaller than the average man’s. According to the scientist, the detective needs to rethink his current theory. This type of collaboration can happen while the detective is investigating the case, during which some ideas will be discarded by the scientist, or just before making a final prediction, during which the scientist will check whether it contradicts her logic.

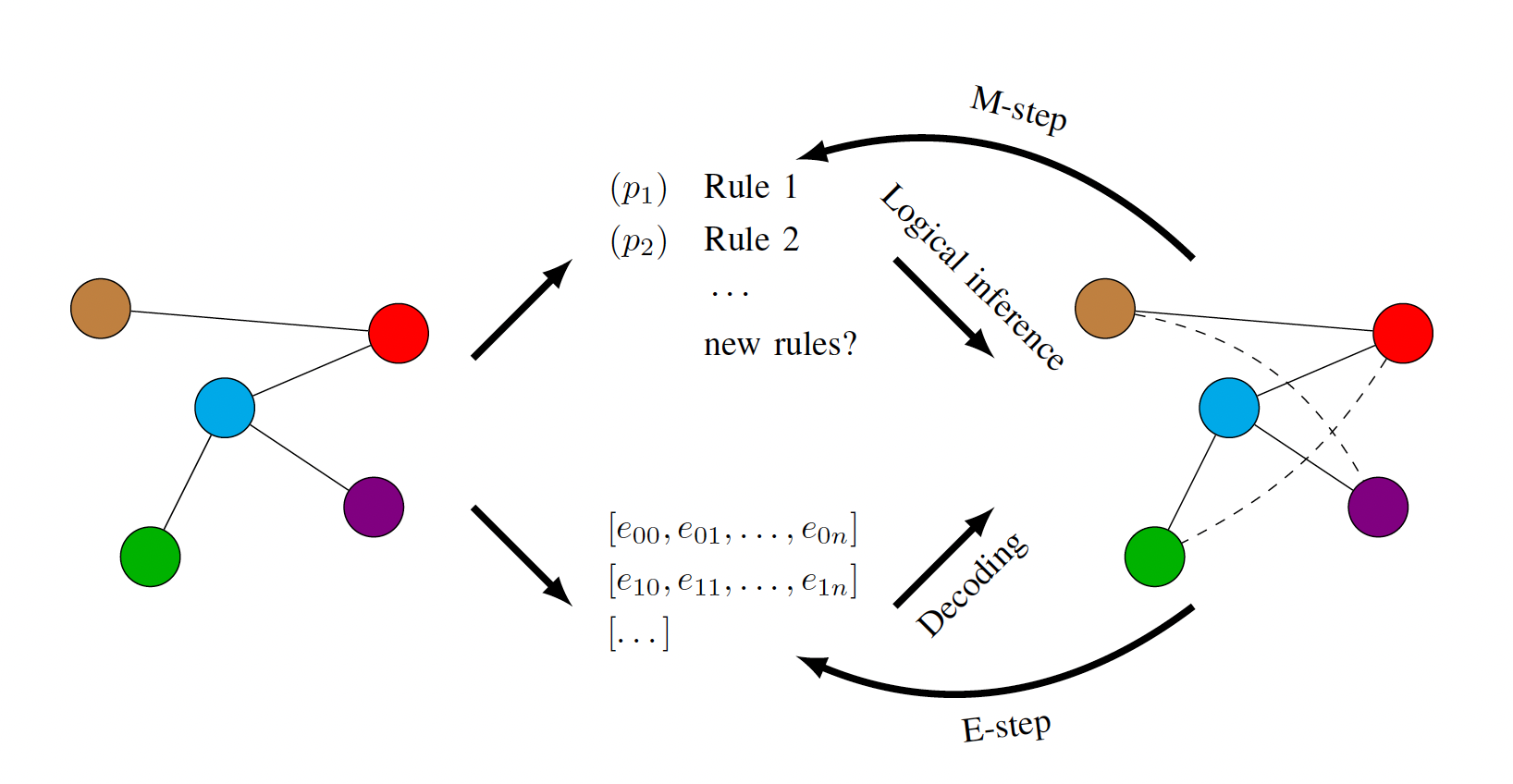

- Rule learning. Finally, this category generates explanations alongside predictions by developing logical rules to describe the patterns discovered. A major subcategory of these methods involves iterative feedback between the neural and logical modules, in which each module prompts the other to update itself. In the previous example, we had a rule which is often, but not always, true. These types of rules are common in the real world. The scientist knows that some rules need to hold true for the story to make sense, but she is more confident about some of them than others. From the patterns spotted by the detective, the scientist might adjust her confidence in the rules and, in some cases, come up with new ones. Perhaps, in the previous footprint example, the detective claims to be very certain that the criminal is a man. In this case, the scientist might have to adjust her mindset and accept that the criminal could be an exception to her rule. The detective will benefit from the extra connections that can be made thanks to these rules. From these methods, we often get confidence values alongside the generated rules, telling us which rules were most important in making the final predictions.
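To make the three categories a little more concrete, here is a toy sketch of one step from each. It is not taken from any of the surveyed tools: the facts, names, scores, and the `MAX_FOOTPRINT` threshold are all hypothetical, the "model output" is a hard-coded dictionary standing in for a neural scorer, and the constraint is applied as a simple filter just before the final prediction, which is only one of the ways constraints can be used.

```python
# All names, facts, and numbers here are hypothetical.

# Facts are (subject, relation, object) triples, like labeled strings on the corkboard.
facts = {
    ("Ann", "shares_dna", "Bob"),
    ("Ann", "seen_with", "Cal"),
    ("Bob", "seen_with", "Dee"),
    ("Ann", "accomplice", "Cal"),
}

def infer_siblings(facts):
    """Scientist's rule: shares_dna(x, y) -> sibling(x, y)."""
    return {(x, "sibling", y) for x, r, y in facts if r == "shares_dna"}

# 1) Logically-informed embedding: logic augments the graph first,
#    and a neural method would then operate on the augmented graph.
augmented = facts | infer_siblings(facts)

# 2) Learning with logical constraints: predictions that contradict
#    the scientist's constraint are discarded before the final answer.
suspect_scores = {"Bob": 0.9, "Cal": 0.7, "Dee": 0.6}  # pretend model output
shoe_size = {"Bob": 11, "Cal": 7, "Dee": 8}
MAX_FOOTPRINT = 8  # constraint derived from the footprints at the scene

allowed = {s: p for s, p in suspect_scores.items() if shoe_size[s] <= MAX_FOOTPRINT}
top_suspect = max(allowed, key=allowed.get)

# 3) Rule learning: attach a confidence to a candidate rule body(x, y) -> head(x, y),
#    computed as the fraction of body matches for which the head also holds.
def rule_confidence(facts, body, head):
    body_pairs = {(x, y) for x, r, y in facts if r == body}
    head_pairs = {(x, y) for x, r, y in facts if r == head}
    if not body_pairs:
        return 0.0
    return len(body_pairs & head_pairs) / len(body_pairs)

confidence = rule_confidence(facts, "seen_with", "accomplice")
```

In this toy case the highest-scoring suspect is ruled out by the footprint constraint, and the candidate rule holds for only half of the pairs it applies to, so it would be kept as a soft rule with a moderate confidence rather than a hard one.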

Each category is further divided into subcategories, with 10 in total. Every subcategory is also related to the more general classification of neurosymbolic AI by Kautz. We have analyzed and categorized 34 different tools in total, and we have collected all of the publicly available code on our GitHub.

Our survey also covers prospective directions in the field. We identify some underexplored application areas and potential improvements to current approaches. These include working end-to-end, integrating multi-modal data, adding conditional edge types, reasoning about spatiotemporal features, and adapting the tools to few-shot learning problems.

Reasoning about graphs using neurosymbolic approaches is a relatively young area of research; in fact, the tools we surveyed were all developed between 2015 and 2022. However, the area has shown great potential and is applicable to many interesting problems. By making the landscape of approaches clearer, we hope to make the field more accessible. We encourage researchers to find more and better ways for detectives and scientists to work together.

- Our paper: Neurosymbolic AI for Reasoning on Graph Structures: A Survey, Lauren Nicole DeLong, Ramon Fernández Mir, Matthew Whyte, Zonglin Ji, Jacques D. Fleuriot

- Our curated code: Neurosymbolic AI for Reasoning on Graph Structures: The GitHub Organization